Beyond English: The Revolution of Multilingual Large Language Models and the Future of Global AI

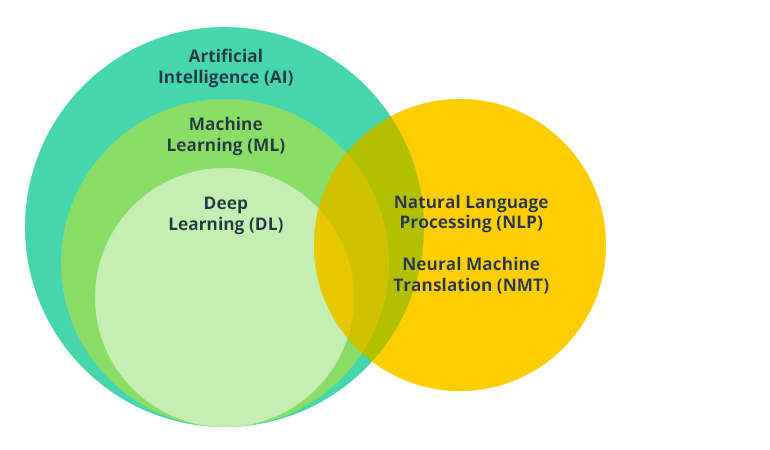

The landscape of artificial intelligence is undergoing a significant shift. For years, the headline-grabbing advances in Generative Pre-trained Transformer (GPT) models were predominantly Anglocentric. Early large language models (LLMs) demonstrated startling capabilities, but they largely viewed the world through an English lens, leaving billions of non-English speakers in a technological blind spot. Recent developments in multilingual GPT research indicate a pivot. The industry is moving from models that are “multilingual by accident” (they simply ingested whatever languages appeared in a web-scale crawl) to models that are “multilingual by design,” engineered with curated datasets to broaden access to AI.

This transition is not merely about translation; it is about cultural preservation, global business efficiency, and the democratization of technology. As model releases accelerate, open-access architectures and proprietary giants are both competing and collaborating to solve the “curse of multilinguality.” This article examines the technical, ethical, and practical implications of this multilingual shift, and how new architectures and open-source releases are redefining what it means to build a truly global intelligence.

Section 1: The Shift from Monolingual Giants to Polyglot Powerhouses

To understand the significance of current multilingual models, one must first look at the limitations of previous generations. Early models, including those of the GPT-3.5 era, relied heavily on web-scale corpora such as Common Crawl, in which English is by far the largest single language. While these models could translate or generate text in Spanish or French, their proficiency dropped sharply in low-resource languages like Swahili, Urdu, or Yoruba.

The “Curse of Multilinguality” vs. Transfer Learning

Researchers have long debated the “curse of multilinguality.” The idea is that for a fixed model size, adding more languages dilutes the model’s capacity to represent any single language. The network’s parameters are a finite resource; if they are shared among English, Chinese, and Arabic, performance in English may degrade compared to a monolingual model of the same size.

However, results from scaling studies suggest that this trade-off is not absolute. Through cross-lingual transfer, a model can learn reasoning patterns in a high-resource language (like English) and apply them in a lower-resource language. Similar transfer is often reported between code and natural language: training on Python, whose keywords are English words, appears to help with structured reasoning in natural-language tasks, which is why code models and multilingual models are increasingly discussed together.

The Rise of Open Science and Community Efforts

A major driver in this space is the push for open science. While OpenAI’s proprietary advances often dominate the headlines, a growing wave of competitors is focused on open-access models. These initiatives can be large collaborations, in some cases involving more than a thousand researchers, that build models which are not only larger than their open predecessors but also trained on datasets deliberately curated to cover dozens of natural languages alongside programming languages. This shift matters for the wider ecosystem because it works against a monopoly on intelligence in which only English-speaking nations reap the benefits of high-level AI reasoning.

Section 2: Technical Deep Dive: Tokenization, Training, and Architecture

Building a multilingual model is not as simple as feeding more text into a transformer. It requires rethinking both training techniques and architecture. The devil is in the details, specifically in how the model digests language.

The Tokenization Challenge

One of the most critical design decisions is the tokenizer’s vocabulary: both its size and the text it was built from. Subword tokenizers such as Byte-Pair Encoding (BPE) that are trained mostly on English text are notoriously inefficient for other scripts. A common English word might be a single token, whereas a common Hindi word might be fragmented into four or five tokens, some of them raw byte-level pieces with no linguistic meaning. This inefficiency leads to:

- Increased Latency: Generating non-English text takes longer because the model has to predict more tokens for the same amount of information.

- Context Window Exhaustion: If a prompt in Japanese takes up 3x the tokens of its English equivalent, the context window fills three times as fast, so earlier parts of the conversation are truncated sooner.

- Cost: For users of APIs that bill per token, this means higher costs in non-English markets.

Modern multilingual models address this by expanding the vocabulary (in some cases to roughly 250,000 tokens or more) to include native characters and subwords from the target languages, which reduces the number of tokens per sentence and, with it, inference latency and cost.
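The fragmentation described above can be made concrete with a small sketch. It is not a real tokenizer: it uses UTF-8 byte counts as a stand-in for the worst case, where a byte-level tokenizer has learned no merges for a script and every byte becomes its own token. The sample words, the helper names, and the 4,096-token window are assumptions chosen for illustration.

```python
# Illustrative sketch. UTF-8 byte counts stand in for the worst case of a
# byte-level tokenizer whose learned merges do not cover a script.
# The sample words and the 4,096-token window are assumptions.

SAMPLES = {
    "English": "hello",
    "Hindi": "नमस्ते",
    "Japanese": "こんにちは",
}


def worst_case_tokens(text: str) -> int:
    """Token count if every UTF-8 byte became its own token."""
    return len(text.encode("utf-8"))


def bytes_per_char(text: str) -> float:
    """Average number of UTF-8 bytes per Unicode code point."""
    return len(text.encode("utf-8")) / len(text)


def english_equivalent_window(window_tokens: int, inflation: float) -> int:
    """English-equivalent tokens that fit in a window when a language needs
    `inflation` times as many tokens for the same content."""
    return int(window_tokens / inflation)


if __name__ == "__main__":
    for language, word in SAMPLES.items():
        print(f"{language}: {worst_case_tokens(word)} byte tokens, "
              f"{bytes_per_char(word):.1f} bytes per character")
    # A 3x token inflation shrinks the usable window accordingly.
    print(english_equivalent_window(4096, 3.0))
```

A tokenizer with good coverage of a script merges these bytes into far fewer tokens. The gap between the raw byte count and the merged count is roughly what a larger, script-aware vocabulary is meant to recover.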

Data Curation and Balancing

Dataset construction is central to this discussion. “Multilingual by design” means deliberately curating the training mix. Instead of taking a random scrape, engineers define target ratios, for example 30% English, 10% Chinese, 10% French, 5% code, and so on. This prevents the dominant language from overwhelming the rest. Curation also helps mitigate bias, a key concern in fairness research. If a model sees only English-language internet debates, it tends to absorb the cultural norms of those sources, which skew Western. By ingesting diverse global media, the model can gain a more nuanced, multicultural perspective.
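One way to picture a ratio-driven training mix is weighted sampling over data sources. The sketch below is a minimal illustration, not any particular lab's pipeline: the `MIX` dictionary reuses the example ratios from the text and adds a hypothetical "other" bucket so the weights sum to 1, and the function names are invented for this example.

```python
import random
from collections import Counter

# Example ratios from the text, plus a hypothetical "other" bucket so the
# weights sum to 1. None of these numbers describe a real training run.
MIX = {
    "english": 0.30,
    "chinese": 0.10,
    "french": 0.10,
    "code": 0.05,
    "other": 0.45,
}


def validate_mix(mix: dict) -> None:
    """Raise if the target ratios are negative or do not sum to 1."""
    if any(weight < 0 for weight in mix.values()):
        raise ValueError("ratios must be non-negative")
    total = sum(mix.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"ratios sum to {total}, expected 1.0")


def sample_sources(mix: dict, n: int, seed: int = 0) -> list:
    """Pick the source of each of n training documents according to the mix."""
    validate_mix(mix)
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    draws = sample_sources(MIX, 20_000)
    counts = Counter(draws)
    for name in MIX:
        print(f"{name}: target {MIX[name]:.2f}, observed {counts[name] / len(draws):.3f}")
```

Because the ratios are fixed up front, a small corpus for a low-resource language is sampled more often relative to its size than a proportional scrape would allow, which is the point of curating the mix.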

Hardware and Optimization

Training these massive polyglot models pushes the limits of available hardware. It can require hundreds to thousands of GPUs running in parallel for weeks or months, which has spurred innovation in training efficiency and optimization. Techniques like 3D parallelism, which combines data parallelism (replicating the model across different batches) with tensor and pipeline parallelism (splitting the model itself across devices), are now standard. To make the resulting models usable, quantization and distillation allow massive multilingual models to be compressed for deployment on smaller servers or even edge devices.
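To give a feel for what quantization does, here is a minimal sketch of symmetric absmax quantization to 8-bit integers, written in plain Python for readability. It is one simple scheme among many; production systems typically use finer-grained scales and optimized kernels. The function names and the 7-billion-parameter figure are assumptions for illustration.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


def weight_memory_gb(n_params: int, bits_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.03, 0.49, -0.27]  # toy values
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    for original, approx in zip(weights, restored):
        print(f"{original:+.4f} -> {approx:+.4f}")
    # Hypothetical 7-billion-parameter model at 16 and 8 bits per weight.
    print(weight_memory_gb(7_000_000_000, 16), weight_memory_gb(7_000_000_000, 8))
```

Each restored weight differs from the original by at most half the scale, so the cost of halving memory is a small, bounded rounding error per weight. Whether that error affects low-resource languages more than high-resource ones is something that has to be measured, not assumed.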

Section 3: Real-World Applications and Industry Implications

Advances in multilingual models are not just academic; they are reshaping industries. From education to finance, the ability to process and generate fluent text in 40+ languages changes the operational landscape.

Case Study: Global Customer Support and Marketing

Consider marketing. Previously, a global campaign required human copywriters in every region to ensure nuance. Now, companies can fine-tune a base multilingual model on their brand voice. The model can then draft campaign materials in Portuguese, Swahili, and Vietnamese that maintain the brand’s tone while adapting to local idioms. Similarly, customer support automation is moving beyond rigid scripts. An AI agent can switch between English and Hindi within a single support interaction (code-switching), mirroring how bilingual speakers actually talk.

Democratizing Coding and Technical Knowledge

Discussion of code models often focuses on Python or JavaScript. However, the intersection of code and natural language is just as important. A developer in Indonesia can now ask an AI in Bahasa Indonesia to explain a complex piece of C++ code. This lowers the barrier to entry for software development globally and acts as an equalizer in the tech sector. It is a prime example of AI assistants facilitating skill acquisition.

Healthcare and Legal Access

In healthcare, multilingual models are assisting in translating medical records and patient instructions in linguistically diverse regions, such as India or many African countries. Accuracy is paramount here, which is why safety research pays close attention to hallucination rates in non-English languages. Similarly, in legal tech, the ability to analyze contracts in multiple languages aids cross-border litigation and compliance, though human oversight remains essential.

Section 4: Challenges, Ethics, and the Road Ahead

Despite the optimism about where these models are heading, significant hurdles remain. The expansion of language capabilities introduces complex ethical and regulatory challenges.

The Safety and Alignment Gap

Most safety alignment work, such as RLHF (Reinforcement Learning from Human Feedback), is done in English. This creates a dangerous gap: a model might reliably refuse to generate hate speech in English yet comply with the same request in a low-resource language, because its safety training and filters have seen little or no data in that language. Ensuring that safety behavior holds across all 46+ supported languages is a massive, ongoing undertaking that requires native speakers for red-teaming.

Regulation and Sovereignty

As regulation heats up (the EU AI Act is one example), nations are becoming concerned about “AI sovereignty.” If the dominant models are all trained by US companies, do they impose American cultural values on the rest of the world? This concern has fueled interest in open-source models, where countries and independent organizations can control the weights and training data of the models they use. It is also driving attention to privacy, as regions differ widely in their laws on data usage (e.g., GDPR in Europe vs. less stringent regimes elsewhere).

Benchmarking and Evaluation

How do we know if a model is actually good at Bengali? Standard benchmarks are often just translated versions of English tests, which fail to capture cultural nuances. This points to a need for native evaluation suites: tests written in the target language for the target culture. Without them, we risk measuring how well a model handles translated English content rather than how well it reasons in the language itself.
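A simple way to keep the two kinds of test apart is to report scores by language and by item origin instead of as a single pooled number. The sketch below assumes a hypothetical result format of `(language, origin, correct)` tuples; the records are invented purely to exercise the code and say nothing about any real model or benchmark.

```python
from collections import defaultdict

# Invented placeholder records: (language, item_origin, answered_correctly).
# "translated" = item translated from an English test; "native" = item
# written in the target language. These are not real evaluation results.
RESULTS = [
    ("bn", "translated", True),
    ("bn", "translated", True),
    ("bn", "native", True),
    ("bn", "native", False),
    ("en", "native", True),
    ("en", "native", True),
]


def accuracy_by_group(results):
    """Accuracy for each (language, origin) pair."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for language, origin, is_correct in results:
        key = (language, origin)
        totals[key] += 1
        correct[key] += int(is_correct)
    return {key: correct[key] / totals[key] for key in totals}


def macro_average(scores):
    """Unweighted mean over groups, so large groups do not dominate."""
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    scores = accuracy_by_group(RESULTS)
    for (language, origin), accuracy in sorted(scores.items()):
        print(f"{language} / {origin}: {accuracy:.2f}")
    print(f"macro average: {macro_average(scores):.3f}")
```

Reporting the translated and native scores side by side makes a gap between them visible. A pooled accuracy would hide it, especially when translated items outnumber native ones.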

Conclusion: The Polyglot Future

The latest wave of multilingual models signifies a maturation of the AI industry. We are moving past the era in which “state-of-the-art” implicitly meant “state-of-the-art in English.” The release of massive, open-access, multilingual models challenges the proprietary status quo and forces the entire ecosystem to adapt.

For developers, this means better tools and integrations that work globally out of the box. For businesses, it opens up applications in previously underserved markets. And for the research community, it presents the challenge of addressing bias and fairness on a global scale. As we look toward GPT-5 and beyond, one thing is clear: the future of AI is not just intelligent; it is inherently and deeply multilingual.