OpenAI Just Hit Undo on Model Deprecation (And Thank God)

We were supposed to be living in the future by now.

I spent most of October rewriting my backend to prepare for the “inevitable” sunset of the GPT-4 legacy snapshots. You know the drill. We got the emails. We saw the warnings in the dashboard. The narrative was clear: the old, heavy, expensive models were dinosaurs. Efficient, distilled, “omni-modal” architectures were the future. And, supposedly, GPT-5 was going to sweep in and render everything else obsolete anyway, so why cling to the past?

Well, it’s New Year’s Eve. I’m looking at my logs, and guess what? The dinosaurs are back.

OpenAI’s recent move to un-deprecate (or effectively “bring back”) the heavy-duty models that were on the chopping block is probably the most significant non-announcement of late 2025. It’s a quiet admission of something we in the trenches have known for months: newer isn’t always smarter. Sometimes, newer is just faster and cheaper for the provider, not the user.

I, for one, am cancelling my migration tickets. And I’m opening a bottle of champagne. Not for the new year, but because I don’t have to refactor my entire prompt library next week.

The “Efficiency” Trap We Almost Fell Into

Let’s be real about what happened this year. The push throughout 2025 was entirely focused on latency and cost reduction. We got models that were blazing fast. We got models that could listen and see and speak. But if you were building complex agentic workflows (systems that actually had to think through a 20-step logic chain without hallucinating), you probably noticed a degradation in how reliably they followed instructions.

I noticed it in my SQL generation pipelines first. The “optimized” mid-2025 models had this nasty habit of being lazy. They’d give me the SELECT statement but skip the JOIN logic I explicitly asked for, or they’d hallucinate column names that sounded plausible but didn’t exist in the schema I just fed them.

The older, denser models—the ones GPT-5 was supposed to replace—didn’t do that. They were slow. They cost a fortune per token compared to the new stuff. But they followed instructions with a stubborn, literal adherence that I miss desperately.

When OpenAI signaled they were bringing these heavyweights back into the fold (or at least halting their execution), it confirmed my suspicion: the industry hit a wall with distillation. You can only compress a model so much before you start losing the nuance required for high-stakes reasoning.

Why “Legacy” Is a Feature, Not a Bug

In production, I don’t care about benchmarks. I don’t care about MMLU scores. I care about determinism.

If I send the same JSON blob to an endpoint, I need the same structured output back. The newer architectures we saw this year, with their mixture-of-experts routing on steroids, felt jittery. From the outside, it looked as if one request went to an expert that understands Python perfectly, while the next request, with only slightly different input or sampling settings, landed on an expert that thinks it’s writing pseudo-code.

The “returned” models offer something boring but critical: predictable cognitive density.
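
For what it’s worth, here is roughly how I’d measure that jitter instead of eyeballing it. This is a minimal sketch, not anyone’s official tooling: `call_model` is a hypothetical stand-in for whatever client function you already have (payload in, response text out), and the run count of 5 is an arbitrary assumption.

```python
import hashlib
import json
from typing import Callable


def output_fingerprint(text: str) -> str:
    """Hash a response. JSON is canonicalized first so key order and
    whitespace differences do not count as a behavior change."""
    try:
        canonical = json.dumps(json.loads(text), sort_keys=True, separators=(",", ":"))
    except json.JSONDecodeError:
        # Not JSON: fall back to the raw text, minus surrounding whitespace.
        canonical = text.strip()
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_deterministic(call_model: Callable[[str], str], payload: str, runs: int = 5) -> bool:
    """Send the same payload `runs` times and report whether every
    response has the same fingerprint. `call_model` is hypothetical."""
    fingerprints = {output_fingerprint(call_model(payload)) for _ in range(runs)}
    return len(fingerprints) == 1
```

Run it against a fixed set of real payloads before and after any model swap. If the set of fingerprints grows, you have your answer without reading a single benchmark.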

The Fine-Tuning Nightmare Avoided

Here’s a specific scenario that almost wrecked my Q4. We have a classification bot for legal documents. It runs on a fine-tuned version of a model that was scheduled for EOL (End of Life). The replacement model? It required entirely different hyperparameters. When we tried to port the dataset over, the F1 score dropped by 12 points.

Twelve points.

I spent three weeks tweaking the learning rate, messing with the system prompt, trying to coax the new “smarter” model to behave like the “dumb” old one. It refused. It was too “aligned,” too chatty. It wanted to explain why the document was a contract instead of just outputting the tag CONTRACT.
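
The guard we put around the classifier is simple enough to sketch. Treat this as an illustration under assumptions: the label set below is hypothetical (only CONTRACT comes from our real pipeline), and the case-insensitive matching and stripping of stray quotes or periods is my own choice of how lenient to be.

```python
from typing import Optional

# Hypothetical label set for illustration; only CONTRACT is from the real bot.
ALLOWED_TAGS = {"CONTRACT", "INVOICE", "OTHER"}


def parse_tag(raw: str) -> Optional[str]:
    """Return the tag only if the whole response is a single allowed label.

    Surrounding whitespace, quotes, and periods are tolerated; anything
    chattier than a bare label is rejected by returning None.
    """
    candidate = raw.strip().strip(".\"'").upper()
    return candidate if candidate in ALLOWED_TAGS else None
```

A `None` here means the model explained itself instead of answering, so the request gets retried or flagged rather than silently written to the database.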

With the reversal of these deprecations, that project is saved. I don’t have to explain to my CTO why we need to burn another $15,000 on compute just to get back to where we were in 2024.

The Technical Reality of “Bringing Them Back”

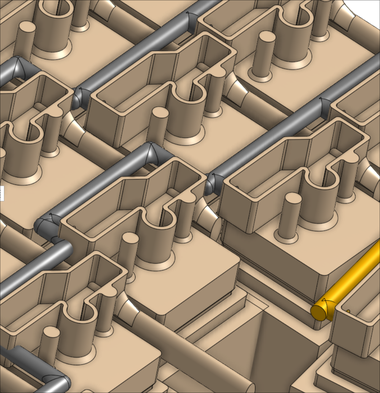

So what does this actually look like for us developers? It means we need to change our architectural strategy for 2026. We can stop treating models as disposable software versions and start treating them like hardware components.

Think about it. You don’t throw away a server just because a faster CPU came out. If that server is running a legacy database perfectly, you keep it running. We can finally apply that logic to LLMs.

My revised stack for Q1 2026 looks like this:

- Frontend/Chat: Use the latest, fastest, cheapest models. Users want speed. They want the “vibes” of a conversationalist.

- Reasoning Core: Route the hard stuff to the reinstated legacy models. If the user asks for a complex refactor of a legacy codebase, I’m not trusting a turbo-charged lightweight model. I’m paying the premium for the heavy lifter.

- Data Extraction: Stick to the older snapshots. They seem less prone to “creative” interpretation of data fields.

This hybrid approach is expensive, sure. But it’s cheaper than debugging hallucinations in production.
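
In code, the routing above can be as dull as a lookup table. A minimal sketch, with loud caveats: the model identifiers are placeholders, not real model names, and the temperature values are my assumptions rather than anything a provider recommends.

```python
from dataclasses import dataclass
from enum import Enum


class Task(Enum):
    CHAT = "chat"
    REASONING = "reasoning"
    EXTRACTION = "extraction"


@dataclass(frozen=True)
class Route:
    model: str          # placeholder identifier, not a real model name
    temperature: float  # assumed setting, tune for your own workload


# One fixed route per task type, mirroring the three bullets above.
ROUTES = {
    Task.CHAT: Route(model="latest-fast-model", temperature=0.7),
    Task.REASONING: Route(model="legacy-heavy-model", temperature=0.0),
    Task.EXTRACTION: Route(model="legacy-pinned-snapshot", temperature=0.0),
}


def pick_route(task: Task) -> Route:
    """Look up the pinned route for a task type."""
    return ROUTES[task]
```

Keeping the mapping in one frozen table means a model swap is a one-line diff you can review, not a behavior change scattered across the codebase.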

What About GPT-5?

The elephant in the room is why this is happening. If the next generation was ready to drop and blow our minds, OpenAI wouldn’t bother maintaining the infrastructure for the old guard. The fact that they are keeping it running suggests a gap.

Maybe the scaling laws are hitting diminishing returns. Maybe the compute costs for the next leap are just too high to roll out to everyone immediately. Or maybe, just maybe, they realized that “replacement” is the wrong framework entirely.

We shouldn’t be replacing models; we should be specializing them. A carpenter doesn’t throw away his hammer just because he bought a drill. The two tools do different things.

A Plea for Stability

If anyone from the labs is reading this: Stop trying to save me money. Seriously.

I will happily pay double per token for a model that doesn’t change its behavior every Tuesday afternoon. The return of these “obsolete” models is the best news I’ve heard all year because it signals a shift from “move fast and break things” to “keep things running.”

So, as we head into 2026, I’m not looking for magic. I’m not looking for AGI. I’m just looking for a temperature=0 request that actually returns the same result twice.

It looks like we might finally get it. Not by moving forward, but by staying put.