The New Frontier: Integrating Advanced GPT Models with Enterprise Databases and Cloud Applications

The Dawn of a New Data Paradigm: Generative AI Meets Enterprise Systems

In the rapidly evolving landscape of artificial intelligence, a seismic shift is underway. Large language models (LLMs) are breaking out of the sandbox and into the boardroom, connecting directly with the lifeblood of modern business: enterprise data. The latest wave of GPT applications isn’t just about smarter chatbots or better content creation; it’s about fundamentally reimagining how we interact with, query, and derive value from our most critical information systems. The convergence of advanced models like OpenAI’s GPT series with enterprise-grade databases and cloud applications marks a pivotal moment, promising new levels of efficiency, insight, and automation. This integration goes well beyond simple API calls: natural language becomes a practical query language, opening data access to everyone from the C-suite to the front-line analyst.

This article explores this transformative trend, covering the architecture, technical mechanisms, real-world applications, and strategic considerations of embedding generative AI at the core of enterprise operations. We will argue that this fusion is not just a technological upgrade but a strategic imperative, drawing on the capabilities of current models such as GPT-4 and looking ahead to what successors such as the anticipated GPT-5 may bring. For businesses looking to maintain a competitive edge, understanding this new frontier is no longer optional; it’s essential.

Section 1: The Architectural Shift: Bridging Structured Data and Generative AI

The integration of GPT models with enterprise databases represents a significant architectural evolution. Historically, business intelligence (BI) and data analytics have relied on Structured Query Language (SQL), specialized dashboards, and trained data scientists to extract insights. This created a bottleneck, limiting deep data interaction to a select few. The new paradigm aims to dismantle this barrier with a conversational interface powered by generative AI.

The Core Components of the Modern AI-Powered Data Stack

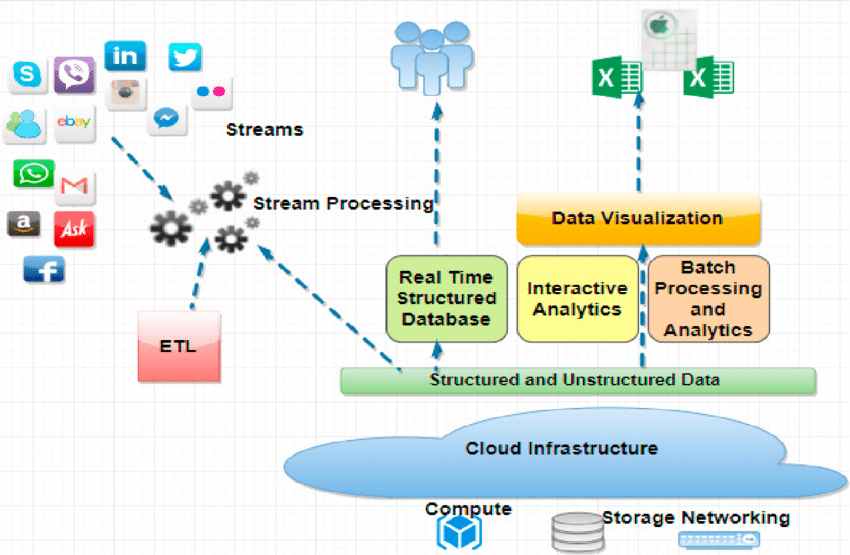

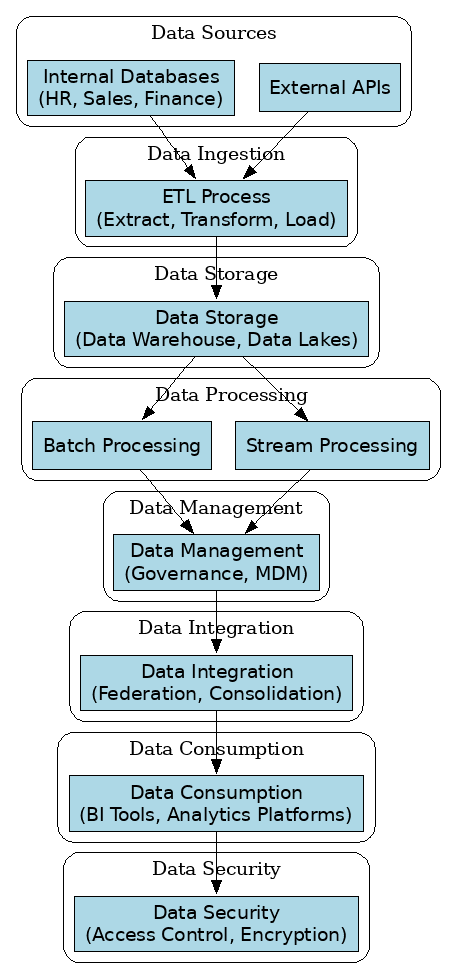

At its heart, this integration involves three primary components working in concert:

- The Enterprise Data Source: This is the system of record—a robust, secure database (like Oracle, PostgreSQL, SQL Server) or a data warehouse (like Snowflake, BigQuery) residing on a major cloud platform. It contains the structured, trusted business data that is the foundation for all insights.

- The Generative AI Model: This is the reasoning engine, typically accessed via an API. Models such as GPT-4 excel at understanding context, generating human-like text, and, crucially, translating natural language into code such as SQL.

- The Integration Layer: This is the middleware that orchestrates the interaction. It is more than a simple connector: it manages security, context, prompt engineering, and the translation process. Much of the current innovation in the GPT ecosystem is happening in this layer, with new tools and platforms emerging to simplify deployment.

Retrieval-Augmented Generation (RAG): The Key to Grounded Insights

The primary technical mechanism enabling this synergy is Retrieval-Augmented Generation (RAG). Instead of relying solely on the LLM’s pre-trained knowledge (which can be outdated and lacks specific business context), RAG grounds the model’s responses in real-time, proprietary data. The process typically works as follows:

- A user asks a question in natural language, like “What were our top-selling products in Europe last quarter, and how did their sales compare to the previous quarter?”

- The integration layer intercepts this query. It first consults a vector database containing embeddings of the company’s database schema, metadata, and documentation. This step “retrieves” the most relevant context about which tables and columns contain information about sales, products, and regions.

- This retrieved context is then packaged with the original user query into a sophisticated prompt for the LLM.

- The LLM, now armed with both the user’s intent and a map of the relevant parts of the database, generates a SQL query. Output quality here depends heavily on the model’s code-generation ability, and models are becoming increasingly proficient at writing accurate, efficient SQL.

- The generated SQL is executed securely against the enterprise database.

- The database returns the raw data (e.g., product names, sales figures).

- The LLM receives this raw data and synthesizes it into a clear, concise, and human-readable summary, directly answering the user’s original question.
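The retrieval and prompt-assembly steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design. The table descriptions in `SCHEMA_DOCS` are hypothetical, and a simple word-overlap (Jaccard) score stands in for the embedding similarity a vector database would compute. SQL generation, execution, and summarization would follow as calls to the model and the database.

```python
import re
from dataclasses import dataclass


@dataclass
class SchemaDoc:
    """One retrievable unit of schema documentation (hypothetical content)."""
    table: str
    description: str


# Hypothetical schema notes; a real system would embed these and store them in a vector database.
SCHEMA_DOCS = [
    SchemaDoc("sales", "sales(order_id, product_id, region, quarter, revenue): one row per order line"),
    SchemaDoc("products", "products(product_id, name, category): product catalog"),
    SchemaDoc("employees", "employees(employee_id, name, department, hire_date): HR records"),
]


def tokenize(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z_]+", text.lower()))


def retrieve(question: str, docs: list[SchemaDoc], k: int = 2) -> list[SchemaDoc]:
    """Return the k docs most similar to the question by Jaccard word overlap."""
    q = tokenize(question)

    def score(doc: SchemaDoc) -> float:
        d = tokenize(doc.description)
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return sorted(docs, key=score, reverse=True)[:k]


def build_prompt(question: str, context: list[SchemaDoc]) -> str:
    """Package the retrieved schema context with the user's question."""
    schema = "\n".join(f"- {doc.description}" for doc in context)
    return (
        "Translate the question into one read-only SQL SELECT statement.\n"
        f"Relevant schema:\n{schema}\n\n"
        f"Question: {question}\nSQL:"
    )
```

Take the example question about European sales. `retrieve(question, SCHEMA_DOCS)` ranks the `sales` and `products` entries above `employees`, so only those two tables are described in the prompt. Keeping irrelevant tables out of the prompt saves tokens and gives the model fewer chances to pick the wrong one.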

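This RAG-based approach grounds the AI’s answers in the company’s actual, up-to-date data rather than in the model’s training data alone. That sharply reduces the risk of hallucination, though it does not eliminate it (see the pitfalls below). Such grounding is a critical requirement for any serious enterprise application.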

Section 2: Deep Dive: Implementation Strategies and Critical Considerations

While the potential is immense, deploying GPT models within an enterprise environment is a complex undertaking that requires careful planning. As organizations move from experimental projects to production-grade systems, a host of new challenges and best practices comes to the forefront.

Beyond RAG: The Role of Fine-Tuning and Custom Models

While RAG is a powerful tool for grounding models in factual data, some scenarios demand deeper specialization. This is where fine-tuning comes in: further training a base model on a smaller, domain-specific dataset. For example, a legal tech firm might fine-tune a model on a corpus of its own legal documents so that it better handles specific legal jargon and citation formats.
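Fine-tuning data is usually supplied as example conversations that demonstrate the desired behavior. The sketch below assumes the chat-style JSONL layout that OpenAI documents for fine-tuning chat models, with one `{"messages": [...]}` object per line. The citation examples and the house style they teach are hypothetical, and a real training set would need far more than two examples.

```python
import json

# Hypothetical examples teaching a made-up house citation style.
SYSTEM = "You format case citations in the firm's house style."
EXAMPLES = [
    ("Format: smith v jones, court of appeals, 2019", "Smith v. Jones (Ct. App. 2019)"),
    ("Format: doe v acme corp, district court, 2021", "Doe v. Acme Corp. (D. Ct. 2021)"),
]


def to_jsonl(pairs: list[tuple[str, str]], system: str) -> str:
    """Serialize (user, assistant) pairs as chat-format records, one JSON object per line."""
    lines = []
    for user_text, assistant_text in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"
```

The returned string can be written to a `.jsonl` file and uploaded through the provider’s fine-tuning workflow.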

The decision between RAG and fine-tuning is a critical one:

- RAG is ideal for: Fact-based Q&A, querying rapidly changing data, and applications where data freshness and verifiability are paramount. It is generally faster and cheaper to implement.

- Fine-tuning is better for: Teaching the model a new skill, style, or highly specialized vocabulary that isn’t well represented in its base training data. It is the usual route to building custom models.

In many advanced applications, a hybrid approach is used, where a fine-tuned model operates within a RAG framework to achieve both specialized understanding and real-time data grounding.

Common Pitfalls: Security, Governance, and Performance

Connecting a powerful LLM to a core database introduces significant risks that must be mitigated, which makes ethics and safety considerations paramount.

- Data Privacy and Security: This is the most critical concern. All data, especially prompts containing sensitive information, must be handled within a secure environment. Solutions often involve using private endpoints, deploying models within a virtual private cloud (VPC), or using services such as Azure OpenAI Service that offer enterprise-grade privacy commitments. Much current privacy work focuses on techniques for anonymizing data within AI pipelines.

- Prompt Injection and Malicious Queries: A user could craft a prompt that tricks the LLM into generating a destructive SQL statement (e.g., `DROP TABLE users;`). Robust validation layers must inspect and sanitize any code the LLM generates before it is executed. The query should also run under a database account with read-only permissions, so that anything the validator misses still cannot modify data.

- Hallucination and Accuracy: While RAG significantly reduces hallucinations, it doesn’t eliminate them. The model might misinterpret the schema or the returned data. Implementing confidence scoring and a “human-in-the-loop” verification step for critical queries is a common best practice. Published benchmarks can help organizations choose the models that perform best on tasks such as code generation.

- Performance and Cost: LLM inference is computationally expensive, and optimizing for low latency and high throughput is a major engineering challenge. Key strategies for managing costs and keeping the user experience responsive include caching common queries, using smaller specialized models (for example, through distillation), and running on hardware optimized for inference.
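One way to implement the validation layer described above is a conservative check on the generated SQL, followed by execution on a read-only connection. The sketch below uses Python with SQLite and rests on several assumptions. The forbidden-keyword list is illustrative rather than exhaustive, and keyword matching will also reject some harmless queries, such as ones that mention `update` inside a string literal. SQLite’s `PRAGMA query_only` serves as a database-level backstop; on a server database, the equivalent is a login that holds only read permissions.

```python
import re
import sqlite3

# Illustrative, not exhaustive: statements a read-only Q&A tool should never emit.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|replace|truncate|grant|revoke|attach|detach|pragma|vacuum)\b",
    re.IGNORECASE,
)


def validate_generated_sql(sql: str) -> str:
    """Accept a single SELECT (or WITH ... SELECT) statement; raise ValueError otherwise."""
    stripped = sql.strip().rstrip(";").strip()
    if ";" in stripped:
        raise ValueError("multiple statements are not allowed")
    if not re.match(r"(select|with)\b", stripped, re.IGNORECASE):
        raise ValueError("only SELECT queries are allowed")
    if FORBIDDEN.search(stripped):
        raise ValueError("forbidden keyword in generated SQL")
    return stripped


def run_readonly(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Validate the SQL, then execute it on a connection switched to query-only mode."""
    safe_sql = validate_generated_sql(sql)
    # Database-level backstop: SQLite rejects writes on this connection even if validation missed one.
    conn.execute("PRAGMA query_only = ON")
    return conn.execute(safe_sql).fetchall()
```

Validation and permissions are complementary. The pattern check catches obvious abuse early and produces a clear error, while the read-only connection still protects the data when a cleverly phrased statement slips past the pattern check.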

Section 3: Real-World Impact: Use Cases Transforming Industries

The theoretical benefits of integrating GPT with enterprise data come to life in a variety of practical, high-impact applications across different sectors. The scenarios below show where a clear return on investment and a new way of operating can emerge.

GPT in Finance: Democratizing Financial Analytics

A portfolio manager no longer has to wait for a weekly report from the data science team. Instead, they can ask the system directly: “Compare the year-to-date performance of our tech holdings against the NASDAQ index, highlight any assets with a Sharpe ratio below 0.5, and summarize the key market news affecting them this week.” A system with an LLM connected to market data feeds and internal portfolio databases can assemble a comprehensive, multi-faceted answer in seconds. This accelerates decision-making and can surface risks and opportunities that standard reporting cycles might miss. It is also a prime example of agent-style AI, in which the system performs a multi-step analytical task rather than answering a single lookup question.
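The Sharpe ratio in the portfolio manager’s request is the mean excess return over the risk-free rate divided by the standard deviation of those excess returns. Below is a minimal sketch. It assumes per-period simple returns and the common convention of annualizing daily figures by √252, although conventions vary between firms. The tickers in the usage example are hypothetical.

```python
import math
import statistics


def sharpe_ratio(returns: list[float], risk_free_rate: float = 0.0, periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio from per-period simple returns.

    risk_free_rate is expressed per period (e.g. daily); the sample standard
    deviation of excess returns is used, so at least two returns are required.
    """
    excess = [r - risk_free_rate for r in returns]
    sd = statistics.stdev(excess)
    if sd == 0:
        raise ValueError("returns have zero volatility; Sharpe ratio is undefined")
    return statistics.mean(excess) / sd * math.sqrt(periods_per_year)


def flag_low_sharpe(holdings: dict[str, list[float]], threshold: float = 0.5, **kwargs) -> list[str]:
    """Return the tickers whose Sharpe ratio falls below the threshold, sorted alphabetically."""
    return sorted(t for t, r in holdings.items() if sharpe_ratio(r, **kwargs) < threshold)
```

For instance, a hypothetical holding whose daily returns alternate between +1% and -1% has a zero mean excess return and therefore a Sharpe ratio of 0. `flag_low_sharpe` would flag it at the 0.5 threshold.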

GPT in Healthcare: Accelerating Clinical Research and Operations

In a hospital setting, a clinical trial coordinator can query a secure, anonymized patient data repository with a complex request: “Identify all patients over 60 who have been diagnosed with hypertension, are not currently on beta-blockers, and have shown elevated LDL cholesterol levels in their last two lab reports.” A task that previously required a data specialist and days of work can now be done in minutes. This dramatically speeds up patient recruitment for clinical trials and helps hospital administrators allocate resources based on real-time patient population trends. Data security and regulatory compliance (for example, HIPAA in the United States) remain constant concerns in healthcare deployments.
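To make the coordinator’s request concrete, here is the kind of SQL a text-to-SQL model might generate, run against a toy in-memory SQLite database. The schema, the `beta_blocker` drug-class code, and the 160 mg/dL LDL cutoff are hypothetical assumptions. A real system would use the hospital’s own schema and clinical definitions.

```python
import sqlite3

# Hypothetical, already-anonymized schema; real clinical schemas differ widely.
SCHEMA = """
CREATE TABLE patients (patient_id INTEGER PRIMARY KEY, age INTEGER);
CREATE TABLE diagnoses (patient_id INTEGER, diagnosis TEXT);
CREATE TABLE medications (patient_id INTEGER, drug_class TEXT, active INTEGER);
CREATE TABLE labs (patient_id INTEGER, lab_test TEXT, result_value REAL, taken_on TEXT);
"""

# SQL of the kind a text-to-SQL model might generate for the coordinator's request.
# Assumption: "elevated" means LDL >= :ldl_cutoff in each of the two most recent LDL results.
# ROW_NUMBER() requires SQLite 3.25 or later.
COHORT_SQL = """
WITH recent_ldl AS (
    SELECT patient_id, result_value,
           ROW_NUMBER() OVER (PARTITION BY patient_id ORDER BY taken_on DESC) AS rn
    FROM labs
    WHERE lab_test = 'LDL'
)
SELECT p.patient_id
FROM patients AS p
WHERE p.age > 60
  AND EXISTS (SELECT 1 FROM diagnoses AS d
              WHERE d.patient_id = p.patient_id AND d.diagnosis = 'hypertension')
  AND NOT EXISTS (SELECT 1 FROM medications AS m
                  WHERE m.patient_id = p.patient_id
                    AND m.drug_class = 'beta_blocker' AND m.active = 1)
  AND p.patient_id IN (
      SELECT patient_id FROM recent_ldl
      WHERE rn <= 2
      GROUP BY patient_id
      HAVING COUNT(*) = 2 AND MIN(result_value) >= :ldl_cutoff
  )
ORDER BY p.patient_id
"""


def find_cohort(conn: sqlite3.Connection, ldl_cutoff: float = 160.0) -> list[int]:
    """Run the cohort query with the cutoff bound as a parameter; return matching patient IDs."""
    return [row[0] for row in conn.execute(COHORT_SQL, {"ldl_cutoff": ldl_cutoff})]
```

Binding the cutoff as a parameter (`:ldl_cutoff`) rather than pasting it into the SQL text keeps the clinical threshold configurable. It also avoids building queries from strings.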

GPT in Marketing: Hyper-Personalization at Scale

A marketing director can use an LLM integrated with their CRM and sales database to craft highly targeted campaigns. They might ask, “Generate a list of customers who have purchased our running shoes in the last 6 months but have not purchased our new line of athletic apparel. Draft three distinct email variations for a promotional campaign targeting this segment, using a personalized and encouraging tone.” This moves beyond broad segmentation toward one-to-one marketing, a long-standing goal of content-driven marketing. The system can analyze past purchase behavior and generate compelling copy, all grounded in real customer data.
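The segmentation half of the director’s request is a set difference, which the model would typically express as SQL. The sketch below shows the same logic in plain Python so the result is easy to check. The category labels, the `Purchase` record, and the 182-day approximation of “six months” are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Purchase:
    """One purchase line from a hypothetical sales table."""
    customer_id: str
    category: str
    purchased_on: date


def running_shoe_buyers_without_apparel(purchases: list[Purchase], today: date,
                                        window_days: int = 182) -> list[str]:
    """Customers who bought running shoes within the window but have never bought athletic apparel."""
    cutoff = today - timedelta(days=window_days)
    shoe_buyers = {p.customer_id for p in purchases
                   if p.category == "running_shoes" and p.purchased_on >= cutoff}
    apparel_buyers = {p.customer_id for p in purchases if p.category == "athletic_apparel"}
    return sorted(shoe_buyers - apparel_buyers)
```

The resulting customer IDs, together with each customer’s purchase history, would then be passed to the model to draft the three email variations.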

Section 4: Recommendations, Best Practices, and the Future Outlook

As organizations embark on this integration journey, a strategic approach is crucial for success. The landscape is changing quickly, and what works today may be superseded tomorrow.

Best Practices for Implementation

- Start with a Defined, High-Value Use Case: Don’t try to boil the ocean. Identify a specific business problem where a natural language interface to data can provide a clear and measurable benefit.

- Prioritize Governance and Security from Day One: Build a robust framework for data access control, prompt sanitization, and output validation before writing a single line of production code. Address emerging regulatory requirements proactively.

- Embrace a Human-in-the-Loop (HITL) Model: For critical decisions, especially in finance or healthcare, the AI should act as a co-pilot, not an autopilot. The system should present its findings and the underlying data for human verification.

- Monitor, Log, and Iterate: Continuously monitor the performance, accuracy, and cost of your implementation. Log all queries and responses to identify patterns, improve prompt engineering, and decide when retraining or fine-tuning might be necessary.
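A JSON-lines audit log is one simple way to support the monitoring practice above. In this sketch, the field names and the metrics computed by `summarize` are assumptions chosen for illustration. What may be logged, such as raw questions that contain personal data, should follow your own governance rules.

```python
import json
import time
import uuid
from typing import Callable


def log_interaction(write: Callable[[str], None], *, user: str, question: str, sql: str,
                    row_count: int, latency_ms: float, model: str) -> dict:
    """Write one JSON line describing a question-to-answer round trip and return the record."""
    record = {
        "id": str(uuid.uuid4()),
        "ts": time.time(),
        "user": user,
        "model": model,
        "question": question,
        "sql": sql,
        "row_count": row_count,
        "latency_ms": round(latency_ms, 1),
    }
    write(json.dumps(record) + "\n")
    return record


def summarize(lines: list[str]) -> dict:
    """Aggregate logged records: request count, mean latency, and share of empty results."""
    records = [json.loads(line) for line in lines if line.strip()]
    n = len(records)
    if n == 0:
        return {"requests": 0, "mean_latency_ms": 0.0, "empty_result_rate": 0.0}
    return {
        "requests": n,
        "mean_latency_ms": sum(r["latency_ms"] for r in records) / n,
        "empty_result_rate": sum(r["row_count"] == 0 for r in records) / n,
    }
```

In production, `write` could be the `write` method of a file opened in append mode. A rising empty-result rate is one hint that prompts or schema context need attention.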

The Road Ahead: GPT-5, Multimodality, and Autonomous Agents

The future of this integration is even more exciting. The anticipated arrival of GPT-5, a constant source of speculation, may bring stronger reasoning, longer context windows, and potentially lower inference costs, which would make these applications more powerful and more accessible.

Furthermore, the rise of multimodal AI, including vision-capable models, will allow users to query using images, charts, and voice. Imagine an inventory manager taking a photo of a warehouse shelf and asking, “How many of these items did we sell last month, and when is our next shipment due?” This fusion of different data types will unlock entirely new workflows. As these systems become more capable, they will evolve from simple Q&A tools into proactive assistants that monitor data streams and alert users to important trends or anomalies without being prompted.

Conclusion: A New Chapter in Data Interaction

The integration of advanced GPT models with enterprise databases and cloud applications is more than an incremental improvement; it is a paradigm shift that redefines our relationship with data. By replacing complex code and rigid dashboards with intuitive, conversational interfaces, businesses can empower their entire workforce to make faster, better-informed decisions. The path to implementation is paved with technical and ethical challenges, from data privacy and security to model accuracy and cost management, but the strategic advantages are compelling. Ongoing research advances and a growing ecosystem of supporting tools are rapidly maturing this technology from a bleeding-edge concept into an enterprise-ready solution. For organizations willing to navigate its complexities, the reward is a future where the full potential of their data is finally unlocked, accessible through simple conversation.