Bridging the Digital and Real Worlds: A Deep Dive into GPT Function Calling

The Next Evolution in AI Interaction: GPT Models Can Now Call Functions

For years, the primary limitation of Large Language Models (LLMs) has been their confinement to the data they were trained on. While incredibly powerful at generating human-like text, answering questions, and summarizing content, they have historically been disconnected from real-time information and external systems. This created a fundamental gap between their vast knowledge and their ability to take action in the digital world. The latest chapter in GPT Models News marks a monumental shift in this paradigm. With the introduction of function calling capabilities in the latest GPT-4 and GPT-3.5 Turbo models, OpenAI has provided developers with a robust, reliable, and native way to bridge this gap. This development is more than just an incremental update; it represents a foundational change, transforming these models from sophisticated conversationalists into powerful, actionable agents capable of interacting with APIs, databases, and external tools. This advancement is a cornerstone of recent OpenAI GPT News, paving the way for a new generation of smarter, more integrated AI applications.

Understanding Function Calling: The Mechanics and Architecture

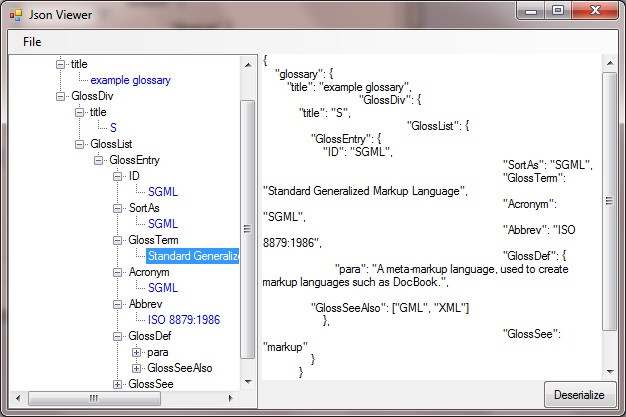

At its core, function calling is a mechanism that allows a developer to describe external functions to a GPT model, which can then intelligently choose to output a JSON object containing arguments to call one of those functions based on the user’s input. It’s a crucial distinction to understand: the model does not execute the code itself. Instead, it acts as an intelligent router or a natural language-to-API translator, providing a structured request that the developer’s application can then execute. This is a significant piece of GPT APIs News, as it standardizes a previously complex and error-prone process.

The Core Concept: From Natural Language to Structured Output

Imagine a user typing, “What’s the weather like in San Francisco?” Previously, a model could only draw on its training data, offering a generic or outdated answer about San Francisco’s weather patterns. With function calling, the process is entirely different. The model recognizes the user’s intent to get real-time weather information. If the developer has provided a function definition for, say, get_current_weather(location), the model will forgo a standard text response. Instead, its output will be a structured JSON object, such as {"name": "get_current_weather", "arguments": {"location": "San Francisco, CA"}}. (In the raw API response, the arguments field is delivered as a JSON-encoded string, so the application decodes it before use.) This structured data can then be reliably parsed and used by the application to call an actual weather API, making the interaction dynamic and accurate.
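To make the example concrete, here is a minimal sketch of the application side. It assumes the original (June 2023) Chat Completions message format, in which a call request appears under a function_call field holding a name and a JSON-string arguments field; newer API versions deliver the same information under tool_calls. The get_current_weather definition and the sample message are hypothetical, written by hand rather than returned by the API.

```python
import json
from typing import Optional, Tuple

# Hypothetical function description in the JSON Schema style accepted by the
# Chat Completions API; no real weather service is involved.
WEATHER_FUNCTION = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city, e.g. 'San Francisco, CA'.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and state, e.g. 'San Francisco, CA'",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}


def extract_call(message: dict) -> Optional[Tuple[str, dict]]:
    """Return (name, arguments) if the assistant message requests a function call."""
    call = message.get("function_call")
    if call is None:
        return None  # an ordinary text reply
    # `arguments` is a JSON-encoded string, so decode it before use.
    return call["name"], json.loads(call["arguments"])


# A hand-written assistant message standing in for a real model response.
sample = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "San Francisco, CA"}',
    },
}

print(extract_call(sample))  # ('get_current_weather', {'location': 'San Francisco, CA'})
```

Because the model only proposes the call, extract_call is the point where the application takes over; the returned tuple is what it would pass to a real weather service.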

A Step-by-Step Breakdown of the Interaction Loop

The entire function calling process can be broken down into a three-step conversational loop, a pattern at the center of the latest GPT-4 News and GPT-3.5 News:

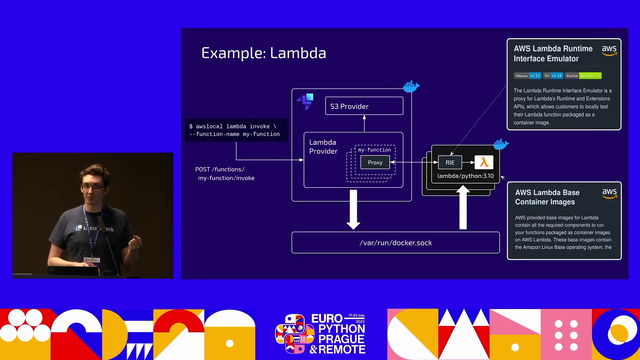

- Function Definition: In the initial API request, the developer provides not only the user’s prompt but also a list of available functions. Each function is described with its name, a clear description of what it does, and a JSON Schema for its parameters (e.g., parameter name, type, and description). This description is critical, as the model uses it to determine the function’s purpose and how to use it.

- Model’s Intelligent Response: The model processes the user’s query and the provided function definitions. It determines whether the query can best be answered by calling one of the functions. If so, it responds with a JSON object containing the name of the function to call and the arguments to use, extracted from the user’s query. If not, it responds with a standard text-based message.

- Execution and Finalization: The developer’s application receives the JSON response. It then parses this data, executes the corresponding function (e.g., calls an internal API or a database), and gets a result. This result is then sent back to the model in a new API call, appended to the conversation as a message with the “function” role. The model receives this new context and generates a final, user-friendly natural language response that incorporates the data from the function call (e.g., “The current weather in San Francisco is 65°F and sunny.”).
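The three steps above can be traced in a minimal, self-contained sketch. The model is replaced by a hypothetical fake_model stub so the loop runs without network access, get_current_weather returns made-up data, and the "function" message role follows the original 2023 function-calling message format (newer API versions use "tool" messages instead). In a real application, fake_model would be a Chat Completions request that also carries the function definitions from step 1.

```python
import json


def get_current_weather(location: str) -> dict:
    # Hypothetical stand-in for a real weather API; returns fixed data.
    return {"location": location, "temperature_f": 65, "conditions": "sunny"}


# Maps function names the model may request to local Python callables.
FUNCTIONS = {"get_current_weather": get_current_weather}


def fake_model(messages: list) -> dict:
    """Hypothetical stub for the model: request a call first, then answer in text."""
    last = messages[-1]
    if last["role"] == "user":
        # Step 2: the "model" asks for a function call instead of answering.
        return {
            "role": "assistant",
            "content": None,
            "function_call": {
                "name": "get_current_weather",
                "arguments": json.dumps({"location": "San Francisco, CA"}),
            },
        }
    # After a function result arrives, the "model" writes the final reply.
    data = json.loads(last["content"])
    return {
        "role": "assistant",
        "content": f"The current weather in {data['location']} is "
        f"{data['temperature_f']}°F and {data['conditions']}.",
    }


def run_turn(user_text: str) -> str:
    # Step 1 (not shown): a real request would also send the function definitions.
    messages = [{"role": "user", "content": user_text}]
    reply = fake_model(messages)
    if reply.get("function_call"):
        call = reply["function_call"]
        args = json.loads(call["arguments"])  # arguments arrive as a JSON string
        result = FUNCTIONS[call["name"]](**args)  # step 3: the app executes it
        messages.append(reply)
        messages.append(
            {"role": "function", "name": call["name"], "content": json.dumps(result)}
        )
        reply = fake_model(messages)  # the model turns the result into prose
    return reply["content"]


print(run_turn("What's the weather like in San Francisco?"))
```

Running this prints “The current weather in San Francisco, CA is 65°F and sunny.” The key design point is that the application owns execution: the model only names the function and its arguments.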

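This closed-loop system is far more reliable than previous methods that relied on prompt engineering and parsing unstructured text, because the models have been fine-tuned to detect when a function should be called and to respond with JSON that follows the supplied schema. That makes it a major leap forward for developers building on the GPT APIs.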

Putting Function Calling into Practice: Code Examples and Use Cases

The true power of function calling is realized when it is applied to solve real-world problems. By connecting GPT models to external tools, developers can create applications that are more dynamic, helpful, and integrated into existing workflows. This has massive implications for GPT Applications News across various sectors.

A Concrete Example: Building a Smart E-commerce Assistant

Let’s consider building a chatbot for an e-commerce website. We want the chatbot to be able to check product inventory and get order statuses. We can define two functions for the model:

- get_inventory(product_id: string): Checks the current stock level for a given product ID.

- get_order_status(order_id: string): Retrieves the current status of a customer’s order.
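The two functions above could be described to the model roughly as follows. This is a sketch assuming the JSON Schema-based “functions” format of the Chat Completions API; the description wording and the convention of passing IDs without the leading “#” are illustrative choices, not requirements.

```python
import json

# Hypothetical function descriptions for the e-commerce assistant.
ECOMMERCE_FUNCTIONS = [
    {
        "name": "get_inventory",
        "description": "Check the current stock level for a product, given its product ID.",
        "parameters": {
            "type": "object",
            "properties": {
                "product_id": {
                    "type": "string",
                    "description": "Product ID without the leading '#', e.g. 'A4B2'.",
                }
            },
            "required": ["product_id"],
        },
    },
    {
        "name": "get_order_status",
        "description": "Retrieve the current shipping status of a customer's order, given its order ID.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {
                    "type": "string",
                    "description": "Order ID without the leading '#', e.g. '98765'.",
                }
            },
            "required": ["order_id"],
        },
    },
]

# The definitions are plain JSON, so they can go straight into a request body.
print(json.dumps([f["name"] for f in ECOMMERCE_FUNCTIONS]))
```

Keeping each function small, with one required parameter and a concrete example in its description, gives the model an unambiguous signal about when each one applies.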

A user might ask: “Hey, can you tell me if product #A4B2 is in stock and what the status of my order #98765 is?”

The model, equipped with the definitions for our two functions, can recognize this as a multi-step request. It might decide to chain these calls. First, it would output a JSON object to call the inventory function:

{"name": "get_inventory", "arguments": {"product_id": "A4B2"}}

Your application would execute this, find that the product is in stock, and send this result back to the model. The model, still retaining the context of the original query, would then output a second JSON object:

{"name": "get_order_status", "arguments": {"order_id": "98765"}}

Your application executes this call, finds the order has shipped, and sends that result back. Finally, with all the necessary information gathered, the model provides a single, coherent response to the user: “Yes, product #A4B2 is currently in stock. Your order #98765 has been shipped and is on its way.” This ability to chain calls is a revolutionary aspect of GPT Agents News.
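The chained exchange can be sketched as a loop that keeps executing requested functions until the model replies with plain text. This sketch rests on several assumptions: the model is replaced by a hypothetical list of scripted replies mirroring the sequence described above, the two back-end functions return hard-coded data, and max_steps is an illustrative safeguard against a model that never stops requesting calls.

```python
import json


# Hypothetical back-end stubs standing in for real inventory and order services.
def get_inventory(product_id: str) -> dict:
    return {"product_id": product_id, "in_stock": True}


def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}


REGISTRY = {"get_inventory": get_inventory, "get_order_status": get_order_status}

# Scripted assistant messages that mimic the model's decisions in the example.
SCRIPTED_REPLIES = [
    {"role": "assistant", "content": None,
     "function_call": {"name": "get_inventory", "arguments": '{"product_id": "A4B2"}'}},
    {"role": "assistant", "content": None,
     "function_call": {"name": "get_order_status", "arguments": '{"order_id": "98765"}'}},
    {"role": "assistant",
     "content": "Yes, product #A4B2 is currently in stock. "
                "Your order #98765 has been shipped and is on its way."},
]


def run_agent(user_text: str, replies: list, max_steps: int = 5):
    """Execute requested calls until the (scripted) model answers in plain text."""
    messages = [{"role": "user", "content": user_text}]
    script = iter(replies)
    for _ in range(max_steps):
        reply = next(script)  # a real app would send `messages` to the model here
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"], messages  # no further calls: final answer
        result = REGISTRY[call["name"]](**json.loads(call["arguments"]))
        # Feed the result back so the next model turn can see it.
        messages.append(
            {"role": "function", "name": call["name"], "content": json.dumps(result)}
        )
    raise RuntimeError("model did not finish within max_steps")


answer, history = run_agent(
    "Is product #A4B2 in stock, and what is the status of my order #98765?",
    SCRIPTED_REPLIES,
)
print(answer)
```

The application, not the model, drives the loop: each step appends the model’s request and the function’s result to the message history, which is what lets the model keep the original question in context across calls.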

Transformative Real-World Scenarios

- GPT in Finance News: A financial analyst could ask, “Convert $500 USD to EUR and then buy 10 shares of stock XYZ at the current market price.” This would trigger a sequence of function calls to a currency conversion API followed by a brokerage API.

- GPT in Healthcare News: A medical assistant application could help a doctor by responding to the query, “Summarize the patient’s latest lab results and schedule a follow-up appointment for next Tuesday.” This would involve a function to query an electronic health record (EHR) database and another to interact with a scheduling system.

- GPT in Legal Tech News: A paralegal could use a tool that responds to, “Find all contracts with ‘Clause X’ signed in the last quarter and summarize their key terms,” triggering a database query function followed by a summarization task.

- GPT Integrations News: Beyond these sectors, the same pattern applies to everything from controlling smart home devices (“Dim the lights and play my ‘Focus’ playlist”) to managing complex cloud infrastructure (“Provision a new server with these specs and deploy the latest build”).

The Broader Implications: From Chatbots to Autonomous Agents

The introduction of native function calling is more than just a new feature; it’s a strategic move that fundamentally alters the landscape of AI development and is central to current GPT Trends News. It signals a shift from models that simply process information to models that can act on it, a critical step on the path to creating more sophisticated and autonomous AI agents.

The Evolution from GPT Plugins to Native API Support

Earlier this year, the community saw the rise of ChatGPT Plugins, which offered a first glimpse into connecting GPT with external services. While innovative, the plugin system is a closed ecosystem primarily for the ChatGPT product. Function calling, on the other hand, is a core feature of the API. This is a crucial piece of GPT Ecosystem News because it democratizes this capability, giving any developer the power to build deeply integrated applications with more control, better security, and greater reliability. This also simplifies the development lifecycle, which is welcome GPT Deployment News for engineering teams.

Enabling Complex Workflows and Tool Chaining

As seen in the e-commerce example, the model’s ability to decide when and how to use tools, and even to chain them together to accomplish a multi-step goal, is profound. This capability is the bedrock of building AI agents. An agent can now be tasked with a high-level objective, like “Plan a weekend trip to New York for me,” and it can independently decide to call functions for flight searches, hotel bookings, and restaurant reservations, synthesizing the information at the end. This will have a huge impact on the future of GPT Assistants News and personal productivity tools.

Impact on Future GPT Architecture and Research

This feature also opens up new avenues for GPT Research News. In the feedback loop, the model requests an action, receives a result from the real world, and then continues its reasoning. The model’s weights do not change during this exchange, so it is not learning in the training sense, but interaction with external environments of this kind could be a key component in training more capable and grounded models in the future. It points away from purely static training and towards dynamic, interactive learning, which could be a major topic in future GPT Architecture News and in discussions around the much-anticipated GPT-5 News.

Navigating Function Calling: Best Practices and Common Pitfalls

While incredibly powerful, implementing function calling requires careful consideration to ensure reliability, security, and efficiency. Adhering to best practices is crucial for building robust applications and is a key topic in GPT Safety News.

Tips for Effective Implementation

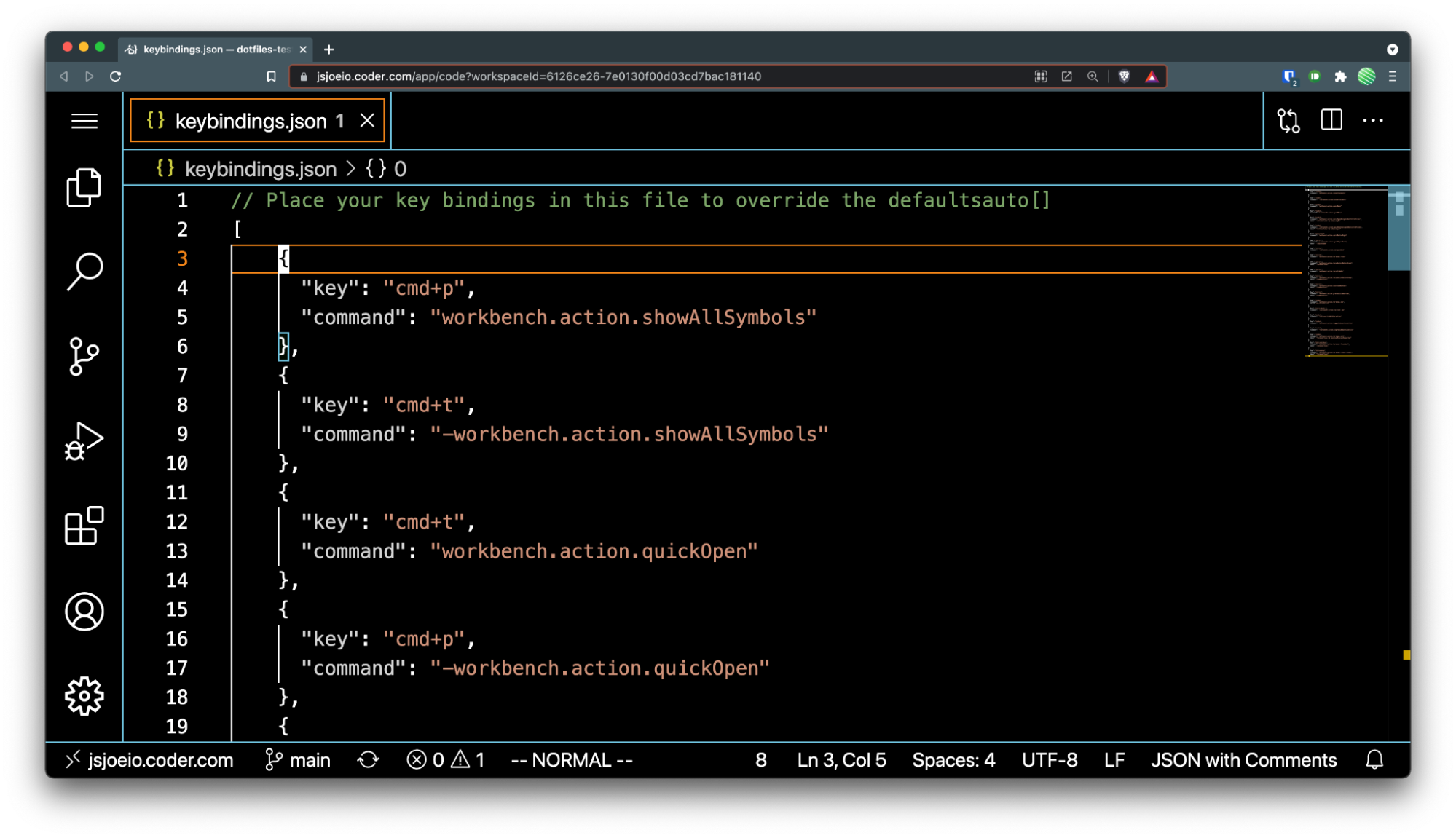

- Write Crystal-Clear Function Descriptions: The model’s ability to correctly use your functions is almost entirely dependent on the quality of your descriptions. Be explicit and unambiguous. Describe not only what the function does but also what each parameter represents. Think of it as writing documentation for an AI, not a human.

- Implement Robust Error Handling: The model is not infallible. It might occasionally hallucinate arguments or attempt to call a function in an incorrect context. Your application code must be prepared to handle malformed JSON, missing arguments, or unexpected function calls gracefully.

- Prioritize Security Above All: This is the most critical consideration. Never, under any circumstances, execute code or database queries generated by the model without strict validation, sanitization, and sandboxing. Treat the model’s JSON output with the same skepticism you would apply to any untrusted user input. This is paramount for protecting user privacy and preventing security vulnerabilities, a recurring theme in GPT Privacy News.
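The error-handling and security advice above can be made concrete with a validation layer that runs before any model-proposed call is executed. The sketch below is hypothetical: the allowlist, the single get_order_status rule, and the numeric order-ID pattern are illustrative assumptions, not part of any API. It rejects unknown functions, malformed JSON, missing or unexpected arguments, and values that fail a format check.

```python
import json
import re

# Hypothetical allowlist: only these functions may run, with these argument rules.
ALLOWED = {
    "get_order_status": {"order_id": re.compile(r"\d{1,10}")},
}


def validate_call(name: str, raw_arguments: str) -> dict:
    """Validate a model-proposed call; raise ValueError on anything unexpected."""
    if name not in ALLOWED:
        raise ValueError(f"unknown function: {name!r}")
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        raise ValueError(f"malformed JSON arguments: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    rules = ALLOWED[name]
    unexpected = set(args) - set(rules)
    missing = set(rules) - set(args)
    if unexpected or missing:
        raise ValueError(f"unexpected={sorted(unexpected)} missing={sorted(missing)}")
    for key, pattern in rules.items():
        value = args[key]
        # Only accept strings that match the expected format exactly.
        if not isinstance(value, str) or not pattern.fullmatch(value):
            raise ValueError(f"invalid value for {key!r}")
    return args


print(validate_call("get_order_status", '{"order_id": "98765"}'))
try:
    validate_call("get_order_status", '{"order_id": "1; DROP TABLE orders"}')
except ValueError as exc:
    print("rejected:", exc)
```

Raising ValueError lets the caller catch the problem and, for example, send the error text back to the model as the function result instead of crashing or executing a suspicious request.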

Common Pitfalls to Avoid

- Overly Complex, Monolithic Functions: Avoid defining single functions that try to do too much. The model works best with simple, atomic functions that perform one specific task. Decompose complex operations into smaller, chainable functions.

- Ignoring the Finalization Step: A common mistake is to execute the function and consider the job done. Remember that the magic happens when you send the function’s output back to the model, allowing it to provide a final, context-aware, and natural language response to the user.

- Ambiguous or Vague Descriptions: If a description is vague (e.g., “gets data”), the model will struggle to understand when to use it. Be specific (e.g., “retrieves customer order history by customer ID”).

Conclusion: A New Era of Actionable AI

The introduction of function calling in GPT-4 and GPT-3.5 Turbo is a landmark event in the evolution of artificial intelligence. It systematically dismantles the wall between LLMs and the vast world of external data and APIs. This feature transforms the models from being passive generators of text into active participants in digital workflows, capable of converting natural language requests into concrete actions. For developers, this unlocks a new frontier of possibilities, enabling the creation of more powerful, reliable, and context-aware applications that can query databases, interact with services, and automate complex tasks. As we look to the horizon of GPT Future News, this development is not merely an added feature but a foundational building block for the next generation of sophisticated AI agents and a clear signal of the exciting, action-oriented future of AI.