The Next Leap in AI Development: Unpacking OpenAI’s Latest GPT Model Updates, Function Calling, and 16K Context

The field of artificial intelligence is evolving at a breathtaking pace, with each new development cycle bringing capabilities that were once the domain of science fiction into the hands of developers. Recently, the AI community has been buzzing with a suite of transformative updates to generative pre-trained transformer models that significantly lower the barrier to creating sophisticated, real-world AI applications. These advancements are not merely incremental; they represent a fundamental shift in how developers can interact with and leverage large language models (LLMs).

This wave of innovation combines new, more steerable GPT models; a “function calling” capability that brings the power of plugins to the API; a vastly expanded context window for processing large amounts of information; and significant cost reductions that broaden access to cutting-edge technology. This article provides a technical breakdown of these updates, exploring their mechanics, real-world applications, and strategic implications for developers, businesses, and the wider GPT ecosystem.

A Landmark Update for the AI Ecosystem: What Developers Need to Know

The latest batch of OpenAI updates is a multi-faceted upgrade designed to enhance model capability, developer control, and economic feasibility. The changes work in concert to unlock a new tier of applications, moving beyond simple text generation toward complex, tool-using AI agents.

New, More Steerable GPT-4 and GPT-3.5 Turbo Models

At the core of the recent announcements are new versions of the flagship models, including gpt-4-0613 and gpt-3.5-turbo-0613. These are not new architectures but refined “snapshot” models with improved instruction-following. This added steerability means the models adhere more reliably to specific formatting requests and system-level instructions, which matters most to developers building structured applications. The increased reliability reduces the need for elaborate prompt engineering, simplifying development and leading to more predictable outcomes.

The Game-Changer: Function Calling in the API

Arguably the most significant development is the introduction of native function calling. This is the API-level implementation of the technology that powers ChatGPT Plugins, and it changes how developers use the API. Function calling allows developers to describe their application’s functions to the model, which can then generate a JSON object containing the arguments needed to call those functions. This lets the model interact with external tools, APIs, and databases, effectively giving it the ability to take action in the real world. It is a cornerstone for building advanced agents and sophisticated integrations.

Expanded Horizons: The 16k Context Window

A major bottleneck for many advanced applications has been the limited context window of LLMs. The introduction of gpt-3.5-turbo-16k directly addresses this. This new model variant quadruples the context length of its predecessor, from 4,096 tokens to 16,384 tokens, which translates to roughly 12,000 words of text in a single API call. The expansion unlocks a host of new use cases, from analyzing lengthy legal documents to maintaining coherent, long-form conversations, and it changes how developers budget tokens and structure their data processing.
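To make that arithmetic concrete, here is a rough sketch of checking whether a document is likely to fit in the 16,384-token window. It relies only on the rule of thumb implied above (about 12,000 words per 16,384 tokens); the helper names `estimate_tokens` and `fits_in_context` and the `reserved_for_reply` default are assumptions for illustration, and a real tokenizer will give different counts.

```python
import math

CONTEXT_TOKENS_16K = 16_384          # window of gpt-3.5-turbo-16k, per the text
WORDS_PER_TOKEN = 12_000 / 16_384    # rough ratio implied by "about 12,000 words"


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from a whitespace word count (heuristic only)."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_reply: int = 1_000,
                    context_tokens: int = CONTEXT_TOKENS_16K) -> bool:
    """True if the estimated prompt plus the reserved reply budget fits the window."""
    return estimate_tokens(text) + reserved_for_reply <= context_tokens


if __name__ == "__main__":
    document = "word " * 9_000  # stand-in for a long document
    print(estimate_tokens(document), fits_in_context(document))
```

The reply budget matters because the window is shared between the prompt and the model's answer, so a document that fills the whole window leaves no room for a response.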

Economic Accessibility: Significant Cost Reductions

Power is meaningless without access. Recognizing this, the latest updates include substantial price reductions. The cost of using the state-of-the-art embeddings model, text-embedding-ada-002, has been cut by 75%. Furthermore, the new gpt-3.5-turbo-16k model is priced to make large-context applications economically viable. These cost reductions matter because they let startups, researchers, and independent developers build and scale ambitious applications that were previously cost-prohibitive.
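As a quick illustration of what a 75% cut means for an embedding workload, the sketch below compares the cost of embedding a corpus before and after the reduction. The starting price of $0.0004 per 1,000 tokens is an assumption used for the arithmetic and should be checked against the current pricing page; `embedding_cost` is a hypothetical helper.

```python
OLD_PRICE_PER_1K = 0.0004                          # assumed pre-cut USD price per 1K tokens
NEW_PRICE_PER_1K = OLD_PRICE_PER_1K * (1 - 0.75)   # after the 75% reduction


def embedding_cost(tokens: int, price_per_1k: float) -> float:
    """Cost in USD of embedding `tokens` tokens at the given per-1K-token price."""
    return tokens / 1_000 * price_per_1k


if __name__ == "__main__":
    corpus_tokens = 10_000_000  # hypothetical corpus size
    before = embedding_cost(corpus_tokens, OLD_PRICE_PER_1K)
    after = embedding_cost(corpus_tokens, NEW_PRICE_PER_1K)
    print(f"before: ${before:.2f}, after: ${after:.2f}")
```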

Deep Dive: Mastering Function Calling to Build Dynamic AI Agents

Function calling is more than just a new feature; it’s a foundational building block for creating interactive and autonomous AI systems. It bridges the gap between the linguistic intelligence of LLMs and the functional capabilities of conventional software.

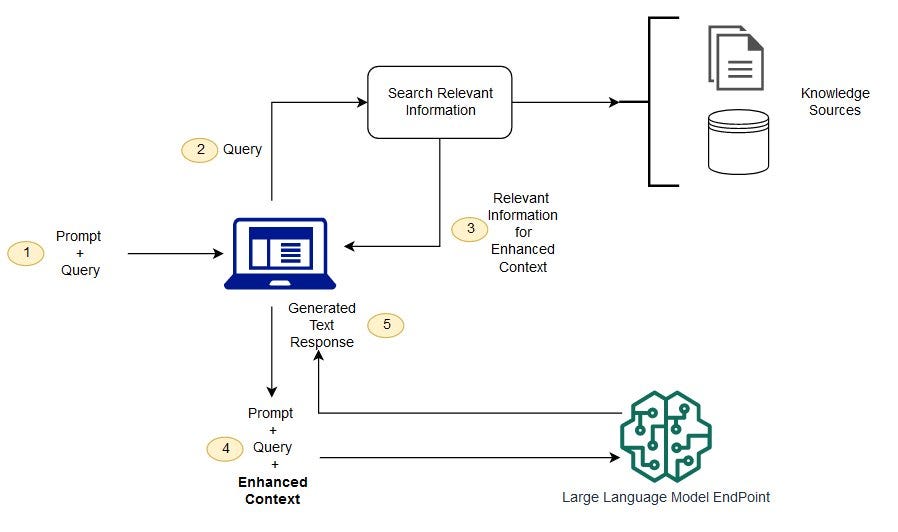

How Function Calling Works Under the Hood

The implementation is both elegant and powerful. The process involves a three-step dance between the developer’s code and the model:

- Definition: The developer defines a set of available functions within their application code. In the API call, they pass a description of these functions to the model, including the function name, a clear description of what it does, and the parameters it accepts (with types and descriptions).

- Generation: The model processes the user’s prompt along with the function definitions. If it determines that an external tool is needed to fulfill the request, it does not respond with a conversational message. Instead, the assistant message carries a function_call field holding the name of the function to call and its arguments, with the arguments encoded as a JSON string. In the -0613 models, each response requests at most one call, so requests that need several tools are handled over several turns.

- Execution: The developer’s code receives this JSON, parses it, and executes the corresponding local function with the provided arguments. The result of this function call (e.g., API response, database query result) is then sent back to the model in a subsequent API call. The model uses this new information to formulate its final, context-aware response to the user.

This loop transforms a simple chatbot into a powerful, tool-using agent.
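The three steps above can be sketched as a single round trip. This is a minimal illustration, not a definitive implementation: `fake_model` is a stand-in that replaces the real chat API call so the example runs offline, and `get_current_weather` and its return values are hypothetical. The message shapes (a `functions` list with JSON Schema parameters, an assistant `function_call` whose `arguments` is a JSON string, and a `function` role message for the result) follow the format used with the -0613 models.

```python
import json

# Step 1 (Definition): describe the function, with a JSON Schema for its parameters.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Paris"},
            },
            "required": ["city"],
        },
    }
]


def get_current_weather(city: str) -> dict:
    """Hypothetical local function; a real one would call a weather service."""
    return {"city": city, "temperature_c": 18, "conditions": "cloudy"}


def fake_model(messages: list, functions: list) -> dict:
    """Stand-in for the chat API: requests a call first, then answers in prose."""
    last = messages[-1]
    if last["role"] == "function":
        data = json.loads(last["content"])
        text = (f"It is {data['temperature_c']} degrees C and "
                f"{data['conditions']} in {data['city']}.")
        return {"role": "assistant", "content": text}
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "get_current_weather",
            "arguments": json.dumps({"city": "Paris"}),  # arguments arrive as a string
        },
    }


def run_round_trip(user_prompt: str) -> str:
    messages = [{"role": "user", "content": user_prompt}]
    # Step 2 (Generation): the model either answers or asks for a function call.
    reply = fake_model(messages, FUNCTIONS)
    messages.append(reply)
    call = reply.get("function_call")
    if call is None:
        return reply["content"]
    # Step 3 (Execution): run the local function and send its result back.
    result = get_current_weather(**json.loads(call["arguments"]))
    messages.append({"role": "function", "name": call["name"],
                     "content": json.dumps(result)})
    return fake_model(messages, FUNCTIONS)["content"]


if __name__ == "__main__":
    print(run_round_trip("What is the weather in Paris?"))
```

In a real application the two `fake_model` calls would be requests to the chat completions endpoint with the same `messages` and `functions` payloads.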

Practical Example: Building a Travel Assistant

Imagine creating a travel assistant. You define two functions in your code: get_flight_price(departure_city, destination_city) and get_hotel_availability(city, check_in_date).

A user prompts: “Find me the cheapest flight from New York to London next Monday and see if there are any 4-star hotels available.”

- The model first recognizes the need for flight information. It returns a JSON object:

{"name": "get_flight_price", "arguments": {"departure_city": "NYC", "destination_city": "LON"}}

- Your code executes this function, calls a flight API, and gets a price of $500.

- You send this result back to the model.

- The model now processes the second part of the request and returns another JSON object:

{"name": "get_hotel_availability", "arguments": {"city": "London", "check_in_date": "YYYY-MM-DD"}}

- Your code executes this, finds available hotels, and sends the results back.

- Finally, the model synthesizes all the information and provides a comprehensive answer to the user: “The cheapest flight is $500. There are several 4-star hotels available, including The Savoy and The Ritz.”

This demonstrates how function calling enables complex, multi-step reasoning and interaction with external tools.
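The travel assistant walk-through might be driven by a loop like the one below, which keeps calling the model until it stops requesting functions. This is a sketch under stated assumptions: `scripted_model` replays the turns from the example instead of calling the real API, the two travel functions are stubs returning the values from the walk-through, and the `check_in_date` placeholder is kept exactly as it appears above. The names `REGISTRY`, `run_agent`, and `max_steps` are illustrative.

```python
import json
from typing import Callable, Dict, List


def get_flight_price(departure_city: str, destination_city: str) -> dict:
    """Hypothetical stub; a real version would query a flight API."""
    return {"price_usd": 500}


def get_hotel_availability(city: str, check_in_date: str) -> dict:
    """Hypothetical stub; a real version would query a hotel API."""
    return {"hotels": ["The Savoy", "The Ritz"]}


# Maps the function names the model may request to local callables.
REGISTRY: Dict[str, Callable[..., dict]] = {
    "get_flight_price": get_flight_price,
    "get_hotel_availability": get_hotel_availability,
}


def _call_message(name: str, arguments: dict) -> dict:
    """Build an assistant message that requests a function call."""
    return {"role": "assistant", "content": None,
            "function_call": {"name": name, "arguments": json.dumps(arguments)}}


def scripted_model(messages: List[dict]) -> dict:
    """Stand-in for the chat API that replays the turns from the example."""
    results = [m for m in messages if m["role"] == "function"]
    if len(results) == 0:
        return _call_message("get_flight_price",
                             {"departure_city": "NYC", "destination_city": "LON"})
    if len(results) == 1:
        return _call_message("get_hotel_availability",
                             {"city": "London", "check_in_date": "YYYY-MM-DD"})
    flight = json.loads(results[0]["content"])
    hotels = json.loads(results[1]["content"])
    names = " and ".join(hotels["hotels"])
    return {"role": "assistant",
            "content": f"The cheapest flight is ${flight['price_usd']}. "
                       f"Available 4-star hotels include {names}."}


def run_agent(user_prompt: str, max_steps: int = 5) -> str:
    """Loop until the model answers in prose or the step budget runs out."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = scripted_model(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]
        result = REGISTRY[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "function", "name": call["name"],
                         "content": json.dumps(result)})
    raise RuntimeError("model did not produce a final answer within max_steps")


if __name__ == "__main__":
    print(run_agent("Find me the cheapest flight from New York to London."))
```

The `max_steps` guard is a design choice: without it, a model that keeps requesting calls would loop forever and run up cost.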

Best Practices and Common Pitfalls

To use this technology effectively, developers should follow a few best practices. The quality of function and parameter descriptions is paramount; they act as the “prompt” for the model’s tool-use reasoning, and clear, unambiguous descriptions lead to reliable performance. It’s also critical to implement robust error handling and validation on the developer’s side. Never trust the model’s output implicitly; always validate the JSON structure and arguments before execution to mitigate potential errors or security risks. This is a key part of safe, responsible deployment.
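One way to apply that advice is to check every requested call against an allow-list before running anything. The sketch below is illustrative only: the `ALLOWED` table reuses the travel assistant's function names, and `validate_function_call` is a hypothetical helper that checks the function name, that the arguments parse as a JSON object, and that each expected parameter is present with the expected type.

```python
import json

# Allow-list: function name -> expected parameter names and Python types.
ALLOWED = {
    "get_flight_price": {"departure_city": str, "destination_city": str},
    "get_hotel_availability": {"city": str, "check_in_date": str},
}


def validate_function_call(function_call: dict) -> dict:
    """Return the parsed arguments, or raise ValueError if anything is off."""
    name = function_call.get("name")
    if name not in ALLOWED:
        raise ValueError(f"unknown function: {name!r}")

    try:
        args = json.loads(function_call.get("arguments"))
    except (json.JSONDecodeError, TypeError) as exc:
        raise ValueError("arguments are not valid JSON") from exc
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")

    expected = ALLOWED[name]
    missing = expected.keys() - args.keys()
    unexpected = args.keys() - expected.keys()
    if missing or unexpected:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(unexpected)}")

    for key, expected_type in expected.items():
        if not isinstance(args[key], expected_type):
            raise ValueError(f"{key!r} must be of type {expected_type.__name__}")
    return args


if __name__ == "__main__":
    call = {"name": "get_flight_price",
            "arguments": '{"departure_city": "NYC", "destination_city": "LON"}'}
    print(validate_function_call(call))
```

Rejecting unexpected keys is stricter than necessary for some applications, but it keeps hallucinated parameters from reaching the underlying function.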

The Ripple Effect: How Expanded Context and Model Refinements are Reshaping Industries

The combination of a 16k context window and more reliable models is set to have a profound impact across numerous sectors, enabling applications that were previously impractical or impossible.

Unlocking New Possibilities with the 16k Context Window

The quadrupled context length is a game-changer for professional domains that rely on dense, long-form documents. It also matters for IoT applications, where long streams of sensor data can be analyzed in a single pass.

- Legal tech: Lawyers and paralegals can now feed entire contracts, depositions, or lengthy case files into the model in a single pass to ask for summaries, identify clauses, or find precedents.

- Healthcare: Researchers can analyze full-length medical studies, and clinicians can summarize extensive patient histories to identify patterns or potential diagnoses more efficiently.

- Finance: Financial analysts can process quarterly earnings reports, prospectuses, and market analysis documents to extract key insights and sentiment without needing to chunk the text into smaller pieces.

- Education: The model can act as a more effective tutor by maintaining the context of an entire chapter or a long Socratic dialogue, providing more personalized and contextually aware guidance.

The expanded context also helps with multilingual work, as the model can handle large, mixed-language documents for translation or cross-lingual analysis.

The Nuances of Updated Models and Fine-Tuning

The improved steerability of the -0613 models also has implications for customization. For developers interested in fine-tuning, a more reliable base model could make the process more effective and may reduce the data needed to reach a given level of performance, which would make custom models more accessible. Better instruction-following also tends to improve zero-shot performance, which matters for benchmark evaluations. For inference, developers can expect outputs that follow the requested format more consistently.

Impact on Content Creation and Code Generation

For those in creative fields, the 16k context is a massive boon: it allows longer, more coherent narratives, screenplays, or technical manuals that maintain plot points and character consistency. For developers, the impact on code generation is equally significant. The model can now hold the context of multiple files or an entire complex module, enabling it to provide more accurate debugging assistance, refactor code with greater awareness of dependencies, and generate more complete and functional code blocks.

Strategic Considerations and the Road Ahead

These updates are not just technical improvements; they are strategic moves that reshape the competitive landscape and provide a glimpse into the future of AI development.

Developer Recommendations and Strategic Adoption

Developers should adopt a strategic approach to these new tools. For tasks requiring large amounts of text, starting with gpt-3.5-turbo-16k offers a powerful and cost-effective solution. Function calling should be seen as the new standard for any application that needs to interact with the outside world, moving beyond basic assistants to fully integrated agents. However, it’s essential to consider the trade-offs. While powerful, larger-context models may have somewhat higher latency. Analyzing an application’s latency and throughput requirements is therefore crucial for optimizing cost and efficiency.
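One simple way to act on this trade-off is to route each request to the smallest context window that can hold it and reserve the 16k model for requests that need it. The sketch below assumes the token counts come from a tokenizer or an estimate; `choose_model` and its `max_reply_tokens` default are hypothetical, while the model names and window sizes are the ones given earlier in this article.

```python
# Context windows in tokens, smallest first, as stated earlier in the article.
MODEL_WINDOWS = [
    ("gpt-3.5-turbo-0613", 4_096),
    ("gpt-3.5-turbo-16k", 16_384),
]


def choose_model(prompt_tokens: int, max_reply_tokens: int = 500) -> str:
    """Pick the smallest model whose window fits the prompt plus the reply budget."""
    needed = prompt_tokens + max_reply_tokens
    for model, window in MODEL_WINDOWS:
        if needed <= window:
            return model
    raise ValueError(f"{needed} tokens exceed the largest window; split the input")


if __name__ == "__main__":
    for size in (1_200, 9_000):
        print(size, choose_model(size))
```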

The Competitive Landscape and Future Speculation

These advancements solidify OpenAI’s position in a rapidly growing market and raise the bar for competitors. The focus on structured, reliable outputs and external tool integration points to a future dominated by AI agents. Looking ahead, this is a stepping stone toward what many anticipate from a future GPT-5. We can speculate that future models will have even more deeply integrated multimodality, including vision, and more autonomous agent-like capabilities. Ongoing research into techniques like quantization and distillation aims to make these powerful models run efficiently on smaller devices, heralding a future of edge and on-device intelligence.

Ultimately, these updates fuel a virtuous cycle within the AI space, where more powerful tools lead to more innovative applications, which in turn generate more data and insights to build even better models. That growing capability is also central to the ongoing dialogue around AI ethics and regulation, as the technology becomes more integrated into society.

Conclusion

The latest wave of updates from OpenAI marks a pivotal moment in the evolution of generative AI. The introduction of function calling is a paradigm shift, transforming LLMs from pure text generators into active participants in digital workflows. The quadrupling of the context window with the 16k model unlocks new industries and scales of problem-solving, while significant price reductions ensure that these powerful tools are accessible to the broadest possible audience. These are not just incremental updates; they are foundational building blocks that empower developers to construct the next generation of intelligent, responsive, and genuinely useful AI applications. Looking ahead, it’s clear that the pace of innovation is only accelerating, and the gap between human imagination and AI capability is rapidly closing.