The Edge AI Paradigm Shift: Analyzing OpenAI’s gpt-oss-20b and the Qualcomm Snapdragon Integration

Introduction

The landscape of artificial intelligence is undergoing a seismic shift, moving from a centralized, cloud-dependent architecture toward a decentralized, edge-first reality. For years, the GPT story has been dominated by massive, proprietary models hosted on remote server farms and accessible only through API calls that require constant internet connectivity. Now a new release bridges the gap between high-level reasoning capabilities and local hardware constraints: gpt-oss-20b, an open-weight language model from OpenAI released under the Apache 2.0 license, marks a pivotal moment for open models.

This 20-billion-parameter model is not merely a downsized version of its predecessors. It is a reasoning model that, according to OpenAI, delivers results comparable to o3-mini on common benchmarks. But the real headline is its deployment strategy. Through a collaboration with Qualcomm, the model has been optimized to run locally on Snapdragon-powered devices. This is a significant step for edge AI, because complex AI tasks can run directly on smartphones, laptops, and IoT devices without data ever leaving the device. In this article, we explore the technical specifications, the implications for privacy and latency, and how developers can use this new tool within the evolving GPT ecosystem.

Section 1: Unpacking gpt-oss-20b – Architecture and Reasoning Capabilities

The release of gpt-oss-20b represents a strategic pivot for OpenAI, a company that has historically kept its most powerful models closed. gpt-oss is its first open-weight language model release since GPT-2. By entering the open-weight arena, OpenAI is responding to growing demand for transparency and control, demand that competing open-weight models have so far been meeting. To understand the significance of this release, we must analyze the architecture and the specific niche it aims to fill.

The Sweet Spot: 20 Billion Parameters

Parameter count is often a proxy for capability, but it is also the primary bottleneck for deployment. Frontier models like GPT-4 are served from clusters of data-center GPUs. Conversely, small models (under 7B parameters) often struggle with complex logic and are more prone to hallucination.

The roughly 20-billion-parameter size of gpt-oss-20b strikes a calculated balance. It is large enough to retain deep semantic understanding and reasoning patterns. It is also small enough to fit within the memory of high-end consumer hardware, particularly when combined with compression techniques such as quantization; OpenAI states that it can run within 16 GB of memory. It is also a mixture-of-experts (MoE) model. Of its roughly 21 billion total parameters, only about 3.6 billion are active for any given token. This lowers the compute per token, although all of the weights must still be held in memory.
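The memory trade-off can be made concrete with back-of-the-envelope arithmetic: weight storage is roughly parameter count times bits per weight, divided by eight. The sketch below uses a round 20 billion parameters for illustration. It counts weights only and ignores activations, the KV cache, and runtime overhead, so real footprints will be larger.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Illustrative round figure. For a mixture-of-experts model, every expert's
# weights count toward storage, even though only some are active per token.
PARAMS = 20e9

for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

The loop prints roughly 40 GB at FP16, 20 GB at INT8, and 10 GB at INT4. Only the 4-bit figure leaves headroom on a 12 to 16 GB device once the operating system and other apps are accounted for.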

Reasoning vs. Generation

Unlike models tuned purely for fluent text generation, gpt-oss-20b is positioned as a reasoning model, and OpenAI compares its performance to o3-mini. Like other reasoning models, it generates intermediate reasoning steps (a chain of thought, or CoT) before arriving at a final answer. Developers can also adjust how much reasoning effort it spends (low, medium, or high). This matters for applications in coding, mathematics, and logic puzzles, where “vibes-based” text generation fails. For developers and researchers, it raises expectations for what “small” language models (SLMs) can do.
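How an application consumes those intermediate steps depends on the model's output format. Here is a minimal sketch that assumes the model was instructed to finish with a line beginning "Final answer:". That marker is a hypothetical convention chosen for illustration, not gpt-oss's actual output format. Under that assumption, the reasoning trace can be separated from the answer like this:

```python
from typing import NamedTuple


class ReasonedAnswer(NamedTuple):
    reasoning: str
    answer: str


FINAL_MARKER = "Final answer:"  # hypothetical convention set in the prompt


def split_reasoning(output: str) -> ReasonedAnswer:
    """Split model output into the reasoning trace and the final answer.

    If the marker is missing, the whole output is treated as the answer.
    """
    head, sep, tail = output.rpartition(FINAL_MARKER)
    if not sep:
        return ReasonedAnswer(reasoning="", answer=output.strip())
    return ReasonedAnswer(reasoning=head.strip(), answer=tail.strip())


sample = "17 * 3 = 51.\n51 + 4 = 55.\nFinal answer: 55"
result = split_reasoning(sample)
print(result.answer)  # 55
```

Splitting on the last occurrence of the marker (`rpartition`) keeps the parse correct even if the phrase also appears earlier, inside the reasoning itself.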

Open Source Implications

By making the weights available, OpenAI is also shaping the debate on AI ethics and safety. Open weights allow researchers to probe the model for biases, stress-test its safety guardrails, and study its behavior more directly than an API allows. This transparency matters in sectors like healthcare and finance, where “black box” AI faces increasing regulatory scrutiny. Furthermore, the availability of gpt-oss-20b on platforms like Hugging Face allows for community-driven improvements, including custom fine-tuning on niche datasets. The result is broader access to high-level AI reasoning.

Section 2: The Hardware Revolution – Qualcomm Snapdragon and Edge AI

Software is only as good as the hardware it runs on. The collaboration between OpenAI and Qualcomm is the catalyst that turns gpt-oss-20b from a research artifact into a practical tool for millions of users. It highlights advances in both AI hardware and inference engines.

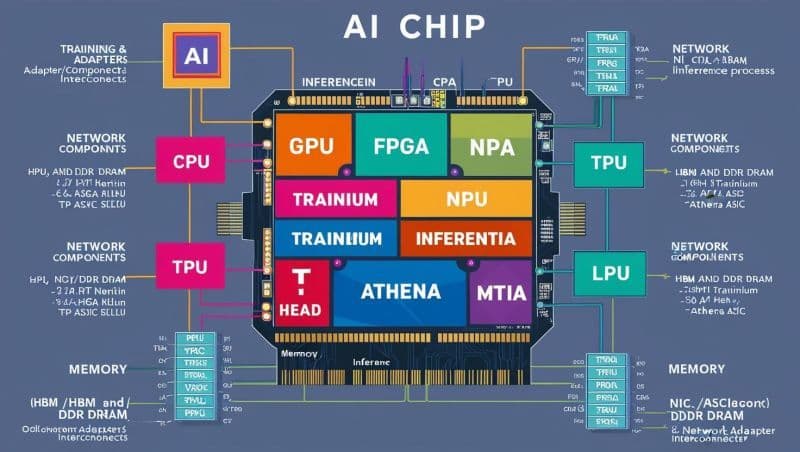

The Role of the NPU

Running a 20-billion-parameter model on a CPU alone would typically be slow, and running it on a mobile GPU can drain the battery quickly. The solution lies in the Neural Processing Unit (NPU) found in modern Snapdragon platforms. Qualcomm has optimized gpt-oss-20b for the Qualcomm AI Stack, shifting the heavy lifting of matrix multiplication to the NPU. The aim is better latency and throughput than general-purpose cores can offer. Because inference happens on the device, there is also no cloud round-trip adding lag to each interaction.
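A simple model of perceived response time makes the latency argument concrete. Total time is roughly the network round-trip, plus time to first token, plus output tokens divided by decode throughput. The numbers below are hypothetical placeholders, not measured figures for gpt-oss-20b or any Snapdragon device.

```python
def response_time_s(rtt_s: float, ttft_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Rough end-to-end latency: network round-trip + time to first token + decode time."""
    return rtt_s + ttft_s + output_tokens / tokens_per_s


# Hypothetical scenarios; substitute measurements from your own device and network.
local = response_time_s(rtt_s=0.0, ttft_s=0.5, output_tokens=30, tokens_per_s=20)
cloud = response_time_s(rtt_s=0.8, ttft_s=0.4, output_tokens=30, tokens_per_s=60)
print(f"local: {local:.2f} s, cloud: {cloud:.2f} s")
```

Plugging in real measurements shows where the crossover lies. Removing the round-trip helps most for short replies and unreliable networks, while decode throughput dominates for long replies.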

Privacy and Data Sovereignty

One of the most significant advantages of local deployment is privacy, a growing concern for consumers and enterprises alike. Traditionally, using a model like GPT-4 meant sending user prompts to OpenAI’s servers, which posed risks for enterprise users handling sensitive IP or personal data. With gpt-oss-20b running locally on a Snapdragon device, prompts and outputs stay on the hardware, and the model can run with no network connection at all. This is especially valuable in:

- Healthcare: Doctors can process patient notes and generate summaries on a tablet without sending protected health information to a cloud service. This simplifies HIPAA compliance, though it does not guarantee it by itself.

- Legal tech: Lawyers can analyze confidential contracts and case files on their laptops, keeping privileged material off third-party servers.

- Finance: Analysts can examine proprietary market data and research without exposing their strategies to third-party API providers.
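Deployments that depend on this guarantee can enforce it in code rather than by convention. Here is a minimal sketch in which the application refuses to send a prompt unless the inference endpoint is on the local machine. The function name and policy are illustrative assumptions. A check like this complements OS-level network controls but does not replace them.

```python
import ipaddress
from urllib.parse import urlparse


def is_loopback_endpoint(url: str) -> bool:
    """Return True only if the URL targets 'localhost' or a loopback IP address.

    Other hostnames are rejected rather than resolved, so a DNS name cannot
    quietly route prompts off the device. ('localhost' is trusted by name;
    a tampered hosts file is outside the scope of this check.)
    """
    host = urlparse(url).hostname  # lowercased, IPv6 brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # not an IP literal: reject


def assert_local(url: str) -> None:
    """Raise before any sensitive prompt is sent to a non-local endpoint."""
    if not is_loopback_endpoint(url):
        raise PermissionError(f"refusing to send data to non-local endpoint: {url}")
```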

Minimizing Connectivity Dependence

The reliance on high-speed internet has been a major bottleneck for AI adoption in remote areas and developing regions, and IoT devices often operate with only intermittent connectivity. A Snapdragon-powered drone, for example, could use gpt-oss-20b for onboard planning or to interpret text-based sensor reports, regardless of cellular coverage. Because the model is text-only, analyzing visual data would require pairing it with a separate vision model. This autonomy could be a game-changer for industrial automation and field operations.

Section 3: Practical Deployment, Tools, and Best Practices

For developers, the release of gpt-oss-20b is an invitation to build. However, deploying a 20B model on edge devices requires familiarity with the available tooling and optimization strategies. Here is a breakdown of how the ecosystem is adapting to support this model.

Accessing the Model: Hugging Face and Ollama

The model weights are available on Hugging Face, the main hub for open models and datasets. For local execution, however, tools like Ollama have become a popular choice. Ollama simplifies pulling, managing, and running large language models (LLMs). Developers can download and start a model from the command line without setting up a PyTorch or TensorFlow environment.
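Here is a minimal sketch of a local call. It assumes Ollama is running on its default port (11434) and that the model was pulled under the tag gpt-oss:20b (for example with `ollama pull gpt-oss:20b`; check the Ollama model library for the current tag). Under those assumptions, a non-streaming request to Ollama's /api/generate endpoint needs only the Python standard library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "gpt-oss:20b"  # assumed tag; verify with `ollama list`


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout_s: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout_s) as resp:
        payload = json.load(resp)
    # With "stream": False, Ollama returns one JSON object whose "response" field holds the text.
    return payload["response"]
```

With the server running, `generate("Explain quantization in one sentence.")` returns the completion as a string. Keeping request construction separate from sending makes the payload easy to inspect and test offline.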

Example Scenario:

A developer building an AI coding assistant for an IDE can integrate gpt-oss-20b through Ollama’s local API. When the user types a comment, the IDE sends the surrounding code to the model running on the device, which returns a suggested snippet. With no network round-trip, responsiveness depends only on local inference speed, which can make autocomplete feel fluid rather than sluggish.
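Before sending a request, the plugin has to decide how much of the file to include. On constrained hardware, a smaller prompt also shortens time to first token. Here is a minimal sketch in which a hypothetical character budget stands in for a real, tokenizer-based limit:

```python
def completion_context(source: str, cursor: int, max_chars: int = 2000) -> str:
    """Return the text before the cursor, trimmed to at most max_chars.

    When trimming is needed, the cut is moved forward to the next line boundary
    so the model does not see a partial line. max_chars is a stand-in for a
    token budget; a real plugin would count tokens with the model's tokenizer.
    """
    prefix = source[:cursor]
    if len(prefix) <= max_chars:
        return prefix
    window = prefix[-max_chars:]
    newline = window.find("\n")
    # Drop the partial first line if the window contains a line break.
    return window[newline + 1:] if newline != -1 else window
```

Trimming at a line boundary wastes a few characters of budget, but it avoids handing the model a syntactically broken first line.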

Optimization: Quantization and Distillation

To fit gpt-oss-20b onto a device with limited RAM (e.g., 12 GB or 16 GB of unified memory), developers must rely on quantization. Quantization reduces the precision of the model’s weights, for example from 16-bit floating point to 4-bit integers (INT4), which cuts weight storage roughly fourfold.
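The core idea fits in a few lines. The sketch below applies symmetric per-tensor INT4 quantization to a plain Python list. Each weight is divided by a scale, rounded, and clamped to the signed 4-bit range [-8, 7]. This is a simplified illustration. Production toolchains typically quantize per group or per channel and pack two 4-bit codes into each byte. gpt-oss itself is distributed with a different 4-bit format (MXFP4) for its expert weights.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT4 quantization: w is approximated by q * scale, q in [-8, 7]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # map the largest magnitude to +/-7
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int4(codes: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from INT4 codes."""
    return [c * scale for c in codes]


weights = [0.12, -0.40, 0.33, 0.05, -0.07]
codes, scale = quantize_int4(weights)
restored = dequantize_int4(codes, scale)
print(codes)
print([round(w, 3) for w in restored])
```

Each code needs 4 bits instead of 16. In exchange, every weight inside the clamping range picks up a rounding error of at most half a scale step.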

Best Practices for Snapdragon Deployment:

- Use the Qualcomm AI Hub: Qualcomm has said it will publish deployment details there. Where they are available, developers should prefer model builds pre-compiled for the Hexagon NPU over generic, CPU-bound runtimes.

- Memory Management: Ensure background processes are minimized. A 20B model, even quantized, is resource-intensive.

- Thermal Throttling: Sustained inference generates heat, and a hot device will throttle its own performance. Developers should consider limiting the generation rate or pausing between long reasoning tasks to keep the device within its thermal budget.
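A simple form of the rate limiting described in the list above can wrap any token iterator. This only reduces load if the token source is lazy, meaning each `next()` call drives one decode step in the local runtime; that is an assumption about how the runtime is driven. The cap value is hypothetical and would need to be tuned by measuring the target device. The clock and sleep functions are injectable so the logic can be tested without waiting.

```python
import time
from collections.abc import Callable, Iterable, Iterator


def throttle_tokens(
    tokens: Iterable[str],
    max_tokens_per_s: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[str]:
    """Yield tokens no faster than max_tokens_per_s, sleeping between them as needed.

    Capping throughput trades speed for lower sustained power draw and heat.
    """
    interval = 1.0 / max_tokens_per_s
    next_allowed = clock()
    for tok in tokens:
        now = clock()
        if now < next_allowed:
            sleep(next_allowed - now)  # wait until this token's slot opens
        yield tok
        next_allowed = max(now, next_allowed) + interval
```

Pacing by a schedule (`next_allowed`) rather than by a fixed sleep after each token keeps the average rate at the cap even when decoding time varies.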

Integration with the Qualcomm AI Stack

The Qualcomm AI Stack provides a unified software toolchain. Qualcomm positions it as a way to build once and deploy across Snapdragon mobile, automotive, and IoT platforms. By using the Qualcomm Neural Processing SDK, developers can help ensure that gpt-oss-20b takes full advantage of hardware acceleration. The goal is tokens-per-second (TPS) rates that make chat interfaces feel fluid.
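Whatever toolchain is used, TPS figures are worth verifying on the target device. Here is a minimal, toolchain-agnostic sketch that wraps any streaming token iterator (for example, a streaming response from a local runtime) and records time to first token and decode rate. The function name and return shape are illustrative assumptions.

```python
import time
from collections.abc import Callable, Iterable


def measure_throughput(
    tokens: Iterable[str], clock: Callable[[], float] = time.perf_counter
) -> tuple[int, float, float]:
    """Consume a token stream; return (token_count, time_to_first_token_s, decode_tokens_per_s).

    The decode rate excludes the first token, so prompt processing does not skew it.
    Values that cannot be computed are returned as NaN.
    """
    start = clock()
    first = None
    count = 0
    for _ in tokens:
        count += 1
        if first is None:
            first = clock()  # time at which the first token arrived
    end = clock()
    if first is None:
        return 0, float("nan"), float("nan")
    decode_time = end - first
    tps = (count - 1) / decode_time if count > 1 and decode_time > 0 else float("nan")
    return count, first - start, tps
```

Reporting time to first token separately from decode rate matters on edge hardware. A long prompt can make the first token slow even when subsequent tokens stream quickly.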

Section 4: Strategic Implications and Future Outlook

The release of gpt-oss-20b is not an isolated event. It signals where the industry is heading and reflects the broader push to democratize AI.

The Hybrid AI Model

We are moving toward a hybrid architecture. Simple queries and privacy-sensitive tasks will be handled by local models like gpt-oss-20b. Queries that require broad world knowledge, or very long contexts (100k+ tokens) that exceed what device memory can hold, will be routed to cloud models such as GPT-4 or GPT-5. This division of labor improves efficiency and reduces operational costs for companies, which no longer need to pay API fees for every trivial interaction.
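The routing decision itself can be a small, auditable function. The sketch below encodes one illustrative policy. The field names, the `online` flag, and the 8,000-token on-device budget are all hypothetical and would need tuning for a real deployment.

```python
from dataclasses import dataclass

LOCAL_CONTEXT_LIMIT = 8_000  # hypothetical on-device context budget, in tokens


@dataclass
class Query:
    text: str
    contains_sensitive_data: bool
    estimated_context_tokens: int


def route(query: Query, online: bool) -> str:
    """Return 'local' or 'cloud' under a simple, illustrative policy."""
    if query.contains_sensitive_data:
        return "local"  # privacy-sensitive prompts never leave the device
    if not online:
        return "local"  # no connectivity: the local model is the only option
    if query.estimated_context_tokens > LOCAL_CONTEXT_LIMIT:
        return "cloud"  # exceeds the assumed on-device budget
    return "local"  # default to the cheaper, offline-capable path
```

The privacy check runs first so that no later condition, such as a long context, can send sensitive data to the cloud. An oversized sensitive query would need to be trimmed locally instead.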

Impact on Content Creation and Creativity

Creative and content-creation workflows are likely to see a surge in personalized tools. Writers can fine-tune a local copy of gpt-oss-20b on their own previous novels to help with style consistency, without fear of their work being used to train a public model. Similarly, marketing agencies can deploy bespoke models on employee laptops to generate copy that follows brand voice guidelines, reinforced through local fine-tuning.

Challenges: Bias and Regulation

With great power comes great responsibility, and bias and fairness remain real concerns. OpenAI likely applied safety training such as RLHF (Reinforcement Learning from Human Feedback) to align the model, but open weights mean those safeguards can be fine-tuned away. This is a challenge for regulators: how do you enforce rules on a model that lives on millions of consumer devices? The shift to local AI complicates enforcement but enhances user freedom.

The Competitive Landscape

This move places OpenAI in direct competition with Meta’s Llama series and Mistral AI’s open-weight models. By offering a “reasoning-class” model for the edge, OpenAI is trying to recapture developer mindshare that has drifted toward open-weight alternatives. It also pressures competitors to optimize their models for NPU performance, accelerating innovation in distillation and model architecture.

Conclusion

The introduction of gpt-oss-20b and its optimization for Qualcomm Snapdragon devices is a watershed moment in the AI timeline. It represents the convergence of high-level software reasoning and cutting-edge hardware acceleration. For developers, it opens new avenues for applications, enabling faster, more private, and more reliable intelligent systems. For consumers, it promises a future where AI is a personal, always-available utility rather than a distant cloud service.

As we look ahead, the synergy between open-weight models and edge computing will likely define the next generation of AI interaction. Whether through offline tutors in education or personal assistants with fast, private responses, gpt-oss-20b shows that the future of AI is not just in the cloud; it is also in the palm of your hand.