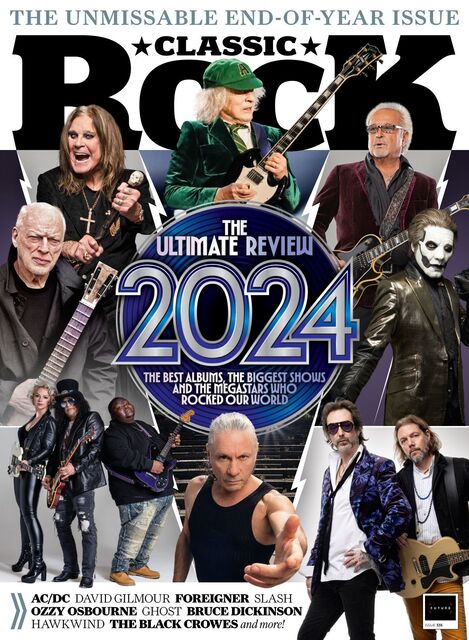

OpenAI Finally Dropped Weights. My Local Rig Is Crying.

I owe my co-worker, Dave, a steak dinner. A modest, non-wagyu steak, but a steak nonetheless. Back in 2024, I looked him in the eye and swore that hell would freeze over before OpenAI released open weights for a current-gen model. I was confident. I was smug.

Well, it’s January 2026, and I’m eating crow.

While everyone on Twitter (X? Whatever we call it now) is losing their minds over the GPT-5 API capabilities—and yeah, we’ll get to that—the real story is the smaller, open-weight models they quietly dropped alongside the big release. I spent the last 48 hours benchmarking them, breaking my Python environment twice, and barely sleeping. Here is what’s actually going on, minus the marketing fluff.

The “Open” Pivot: Why Now?

It feels weird typing git clone for an OpenAI repo that actually contains model weights.

For years, the strategy was “bigger is better, and keep it closed.” But the open-source community, thanks to Meta’s Llama series and the Mistral guys, ate into the market share faster than anyone expected. Developers like me stopped caring about the absolute state of the art (SOTA) for 90% of tasks. We wanted control. We wanted privacy. We didn’t want to pay per token for a simple JSON formatting job.

So, OpenAI releasing these “GPT-Open” variants isn’t charity. It’s a defensive move. They realized that if they didn’t offer a local option, they’d lose the developer ecosystem entirely to the open-weight rivals.

But are they any good?

Running GPT-Open-12B Locally

I started with the 12B parameter model because I wanted to see if it could run on my laptop without melting the chassis.

The setup:

- MacBook Pro M4 Max (128 GB unified memory)

- Ollama backend (updated this morning)
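If you want to poke at the model the same way, Ollama exposes a local REST API. Here’s a minimal sketch using only Python’s standard library. It assumes Ollama is listening on its default address (localhost:11434) and uses the non-streaming /api/generate endpoint. The model tag gpt-open:12b is hypothetical, so substitute whatever tag your install actually lists.

```python
import json
import urllib.request

# Default address of a local Ollama server; change it if yours runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its text response."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # the generated text lives in the "response" field
```

With the server running, something like generate("gpt-open:12b", "Fix this CSV. Output only the CSV:\n" + messy_csv) returns the model’s raw text, which is exactly what you want for a “no preamble” test.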

First impression: It’s fast. Suspiciously fast. I threw my standard “messy data cleaning” prompt at it—a terrible CSV string with missing commas and weird encoding artifacts. Usually, smaller models hallucinate extra columns or just give up and write Python code instead of fixing the data.

The 12B model just fixed it. No preamble. No “Here is the corrected data.” Just the CSV.
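Since hallucinated extra columns are the classic small-model failure on this task, I wouldn’t trust the output blindly even when it looks clean. A quick sanity check with Python’s csv module is sketched below. It assumes you already know how many columns the cleaned file should have, and it’s a rough guard, not a full validator.

```python
import csv
import io
from typing import List, Tuple


def column_count_problems(csv_text: str, expected_columns: int) -> List[Tuple[int, int]]:
    """Return (line_number, column_count) for every row with the wrong width.

    An empty list means every non-blank row has exactly `expected_columns` fields.
    """
    problems = []
    reader = csv.reader(io.StringIO(csv_text))
    for row in reader:
        if not row:  # csv.reader yields [] for blank lines; ignore them
            continue
        if len(row) != expected_columns:
            # reader.line_num is the number of source lines read so far
            problems.append((reader.line_num, len(row)))
    return problems
```

Run it on the model’s output before anything downstream touches the data; a non-empty result means the model invented (or dropped) a field somewhere.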

However, it’s not perfect. When I asked it to explain the nuance of a specific Rust memory safety edge case, it hallucinated a function that doesn’t exist. So, it’s great for grunt work, bad for deep technical consulting. Standard small-model behavior, but the instruction following is noticeably tighter than Llama 3 was back in the day.

The Big Dog: GPT-5 API

Okay, let’s talk about the main event. I finally got my API key provisioned yesterday.

If the open models are for the trenches, GPT-5 is for the generals. The reasoning capabilities have jumped. I tested it on a complex legal contract analysis—something that usually trips up LLMs because they lose context halfway through page 40.

GPT-5 held the context. It remembered a definition from paragraph 3 while analyzing a clause in paragraph 105. That’s the “reasoning” improvement they promised. It feels less like a stochastic parrot and more like a tired paralegal.

But the cost.

My wallet hurts just looking at the usage dashboard. It is significantly more expensive than GPT-4o was at launch. This clearly isn’t meant for your “write me a poem” chatbot. This is enterprise-grade pricing.

Which makes sense, considering the other news that slipped under the radar: GPT-5’s approval for use by civilian government agencies.

The Government Angle

This is where the split happens. OpenAI is bifurcating its strategy.

1. GPT-5 (Closed API): High security, high cost, approved for government and enterprise use. This is for the Feds, the banks, and the Fortune 500s who need compliance and guaranteed uptime.

2. GPT-Open (Weights): For the hackers, the startups, and the people who refuse to send their data to the cloud.

It’s smart. They capture the high-value institutional contracts with the closed model while stopping the bleeding of developer mindshare with the open models.

My New Workflow

I’ve completely changed how I’m building my current project (a log analysis tool) based on this release. Before, I was routing everything to a mid-tier API.

Now? I’m running the GPT-Open-7B model locally for the initial pass. It filters the logs, tags the obvious errors, and formats the data. It costs me zero dollars (minus electricity).

Then, and only then, if the local model flags something as “critical/unknown anomaly,” I forward that specific snippet to the GPT-5 API for a deep analysis.
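Here’s a rough sketch of that routing logic. local_triage and deep_analysis are hypothetical callables standing in for the local 7B call and the GPT-5 API call. The exact tag string and the idea of sending a couple of neighboring lines as context are my assumptions, not a fixed protocol.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Tag the local model is asked to emit when it can't classify a line (assumed convention).
ESCALATE_TAG = "critical/unknown anomaly"


@dataclass
class TriageResult:
    line: str
    tag: str
    analysis: Optional[str] = None  # filled in only for escalated lines


def route_logs(
    lines: List[str],
    local_triage: Callable[[str], str],
    deep_analysis: Callable[[str], str],
    context: int = 2,
) -> List[TriageResult]:
    """Tag every line locally; forward only flagged snippets to the remote model."""
    results = []
    for i, line in enumerate(lines):
        tag = local_triage(line)  # cheap local pass
        result = TriageResult(line=line, tag=tag)
        if tag == ESCALATE_TAG:
            # Send the flagged line plus a few neighbors, never the whole log.
            start = max(0, i - context)
            end = min(len(lines), i + context + 1)
            result.analysis = deep_analysis("\n".join(lines[start:end]))  # expensive remote call
        results.append(result)
    return results
```

Injecting the two model calls as plain functions keeps the router testable with stubs, so you can check the escalation rules without spending a single API token.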

I call it the “Dumb-Smart Sandwich.”

- Layer 1 (Local): Fast, free, good at formatting.

- Layer 2 (API): Expensive, slow, genius-level reasoning.

This hybrid approach is the only way to survive the API costs in 2026. If you are piping raw data directly to GPT-5, you are burning money. Stop it.
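The arithmetic behind that claim is simple: you only pay per token for what you escalate. This back-of-the-envelope sketch treats local inference as free, counts input tokens only, and takes the price as a parameter. Any price you plug in is your own placeholder, not OpenAI’s published rate.

```python
from typing import Tuple


def api_cost(tokens: int, price_per_million: float) -> float:
    """Dollar cost of sending `tokens` tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def sandwich_costs(
    total_tokens: int, escalated_fraction: float, price_per_million: float
) -> Tuple[float, float]:
    """Return (direct_cost, hybrid_cost).

    direct: every token goes to the API.
    hybrid: only the escalated fraction goes to the API; the local pass is
    treated as free (electricity ignored), and output tokens are not counted.
    """
    if not 0.0 <= escalated_fraction <= 1.0:
        raise ValueError("escalated_fraction must be between 0 and 1")
    direct = api_cost(total_tokens, price_per_million)
    hybrid = api_cost(int(total_tokens * escalated_fraction), price_per_million)
    return direct, hybrid
```

For example, if 2% of your log tokens get escalated, the hybrid bill is one fiftieth of the direct one, whatever the per-token price turns out to be.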

The Verdict

I’m still skeptical about how “open” these open models really are. The license is a bit restrictive regarding commercial competition (classic), and we don’t have the training data mix. But having the weights on my drive is a level of security I didn’t think we’d get from this specific company.

The gap between open weights and closed APIs hasn’t closed—it’s just shifted. The closed models are becoming specialized reasoning engines, while the open models are becoming the operating system of the AI web.

Now, if you’ll excuse me, I have to go buy Dave a steak. I hate being wrong, but at least I have some new toys to play with.