The Shifting Landscape of AI Code Generation: A Deep Dive into the Latest GPT Code Models News

The New Frontier: A Paradigm Shift in Code Generation Capabilities

The world of software development is in the midst of a profound transformation, driven by the rapid advancement of large language models (LLMs) optimized for coding. For years, models from OpenAI set the industry standard, but the latest developments reveal an increasingly competitive landscape. New, highly capable models are not just catching up; on several established benchmarks they are beginning to surpass the incumbents, heralding a new era of AI-assisted software engineering.

This evolution is about more than just incremental improvements. It represents a fundamental leap in reasoning, contextual understanding, and multimodal capabilities. The latest generation of code models demonstrates a remarkable ability to handle complex, multi-step programming tasks, debug intricate codebases, and even translate high-level architectural concepts into functional code. As the competition heats up, developers are the ultimate beneficiaries, gaining access to more powerful, efficient, and versatile tools that are redefining productivity and creativity in the tech industry. This article delves into these groundbreaking developments, analyzing the technical underpinnings, real-world applications, and the far-reaching implications for the future of coding.

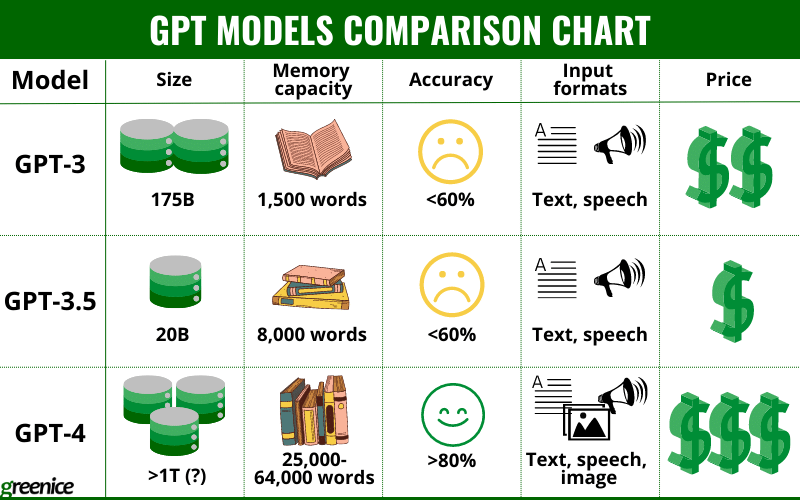

Redefining the Leaderboard: Beyond GPT-4

For a significant period, GPT-4 was the undisputed leader in AI code generation. Recent results, however, indicate a major shake-up: new families of models from prominent AI labs now report higher scores than GPT-4 on a suite of critical benchmarks. These challengers lead on HumanEval, a set of 164 hand-written Python programming problems graded by unit tests, and on MBPP (Mostly Basic Programming Problems), a larger collection of entry-level Python tasks. They also excel on broader knowledge and reasoning benchmarks such as MMLU (Massive Multitask Language Understanding), whose 57 subjects include several computer science topics. This surge is not isolated; it reflects a broader trend of rapid innovation across the AI ecosystem. Discussion of GPT-4 is now framed around how it stacks up against these new contenders, and that pressure is pushing the entire field toward greater accuracy and reliability.
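
Code benchmarks such as HumanEval are usually reported as pass@k: the probability that at least one of k sampled completions for a problem passes its unit tests. Below is a minimal sketch of the unbiased estimator introduced alongside HumanEval in the Codex paper, assuming n completions were sampled per problem and c of them passed. The function names are illustrative and not taken from any benchmark harness.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled, c: completions that passed the unit tests,
    k: the k in pass@k (requires 1 <= k <= n).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of the samples contains at least one passing one.
        return 1.0
    # 1 - P(all k drawn samples fail) = 1 - C(n - c, k) / C(n, k)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-problem pass@k over (n, c) pairs, one pair per problem."""
    if not results:
        raise ValueError("no results")
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For example, with 10 samples of which 3 pass, pass@1 is 0.3, while pass@5 is much higher because any one of five draws may succeed.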

Multimodality and Expansive Context Windows

A key driver of this new wave of innovation is improved multimodality. Recent models can interpret visual information alongside text, which is a game-changer for developers. Imagine feeding a model a whiteboard sketch of an application’s architecture or a UI mockup from Figma; the model can then generate the corresponding boilerplate code in React or Swift. Vision-capable models like these are closing the gap between human ideation and machine execution. Equally important is the expansion of context windows, from a few thousand tokens to 200,000 or even 1 million. A larger context window lets a model ingest large portions of a codebase (or all of a smaller one) in a single prompt, follow interdependencies across files, and stay consistent during large-scale refactoring or feature implementation.
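
Whether a codebase actually fits in a given window is a quick back-of-the-envelope calculation. The sketch below assumes roughly four characters per token, a common rule of thumb for English text rather than a property of any particular tokenizer (source code often needs more tokens per character), and reserves headroom for instructions and the model’s reply. The helper names and default file suffixes are hypothetical; for exact counts, use the tokenizer of the model you target.

```python
import math
from pathlib import Path

# Assumption: ~4 characters per token, a rough rule of thumb for English text.
# Real tokenizers differ, and source code often needs more tokens per character.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Rough token estimate derived from character count alone."""
    return math.ceil(len(text) / chars_per_token)


def estimate_repo_tokens(root: str, suffixes: tuple[str, ...] = (".py", ".java", ".ts")) -> int:
    """Sum rough token estimates over matching source files under `root` (hypothetical helper)."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(estimated_tokens: int, context_window: int, reserve: int = 4_000) -> bool:
    """Leave `reserve` tokens of headroom for instructions and the model's reply."""
    return estimated_tokens + reserve <= context_window
```

A call such as `fits_in_context(estimate_repo_tokens("src"), 200_000)` gives a first answer to whether a repository could be passed whole or must be chunked or retrieved selectively.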

Under the Hood: What Powers the Next Generation of Code Models?

The remarkable capabilities of these new code models are not magic; they are the result of sophisticated architectural innovations, massive and meticulously curated datasets, and advanced optimization techniques. Understanding these technical underpinnings is crucial for appreciating their power and limitations.

Advanced Architectures and Training Techniques

While the Transformer architecture remains the foundational building block, the latest models employ significant refinements. There is growing evidence that leading models use Mixture of Experts (MoE) architectures; Mixtral, for example, is openly documented as one. An MoE layer contains many “expert” sub-networks, and a learned gating (router) network sends each token to only a small subset of them, often the top one or two. Because only the selected experts run, a model can scale its total parameter count massively without a proportional increase in the computational cost of each inference, a recurring theme in scaling research. Work on training techniques also points to the critical role of dataset quality. It is no longer just about the quantity of code scraped from the internet: the new frontier involves training on highly curated datasets that include not only open-source code but also technical documentation, programming textbooks, peer-reviewed papers, and structured problem-solving examples. This diverse data diet strengthens the model’s reasoning and its ability to generate idiomatic, well-documented code.
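
The routing idea can be illustrated with a toy, pure-Python sparse MoE layer. It assumes top-k gating with a softmax taken over only the selected experts’ logits (the scheme Mixtral uses with k=2 of 8 experts). Here the experts are arbitrary callables and the gate is a plain dot product, so this is a sketch of the mechanism under those assumptions, not any production model’s implementation.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits: Sequence[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts; softmax over just their logits."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(
    x: Vector,
    experts: Sequence[Callable[[Vector], Vector]],
    gate_weights: Sequence[Vector],
    k: int = 2,
) -> Vector:
    """Sparse MoE forward pass for a single token vector `x`.

    Each expert maps a vector to a vector of the same length. The gate logit for
    expert i is the dot product of gate_weights[i] with x. Only k experts are
    evaluated, so compute grows with k rather than with len(experts).
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    out = [0.0] * len(x)
    for idx, weight in top_k_route(logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out
```

Adding more experts grows the parameter count, but each token still pays for only k expert evaluations plus the cheap gate, which is the property the paragraph above describes.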

Efficiency and Deployment: From Cloud to Edge

Another significant trend across the ecosystem is the release of model “families” rather than a single monolithic model. Typically, a family includes a flagship model for maximum performance, a balanced mid-tier model for general use, and a smaller, highly efficient model designed for speed and low cost. This tiered approach is a direct response to diverse market needs. Optimization work is equally active. Quantization (storing model weights at lower numerical precision, such as 8-bit or 4-bit integers instead of 16-bit floats) and distillation (training a smaller “student” model to mimic a larger “teacher”) are becoming standard practice. These compression techniques are crucial for making powerful AI accessible: they reduce operational costs and pave the way for capable on-device applications at the edge. They also improve latency and throughput, making real-time coding assistants more responsive and practical for everyday developer tasks.
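
Both compression techniques can be sketched in a few lines. The example assumes symmetric per-tensor int8 quantization (each weight stored as an integer in [-127, 127] plus one shared float scale) and the classic soft-target distillation loss: the KL divergence between teacher and student distributions softened by a temperature T, scaled by T² as in Hinton et al.’s formulation. Production toolkits use finer-grained schemes (per-channel scales, 4-bit formats) and tensor libraries; plain Python lists are used here only to keep the sketch self-contained.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    return [scale * qi for qi in q]


def softmax_t(logits: list[float], temperature: float) -> list[float]:
    """Softmax with temperature; a higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: list[float], student_logits: list[float], temperature: float = 2.0
) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2.

    Sketch only: extreme logits can underflow a student probability to 0.
    """
    p = softmax_t(teacher_logits, temperature)
    q = softmax_t(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl
```

Storing int8 values instead of 32-bit floats cuts weight memory by about 4x, at the cost of a rounding error of at most half a quantization step per weight; the distillation loss is zero only when the student reproduces the teacher’s softened distribution.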

From Theory to Practice: How New Code Models are Reshaping Development

The true measure of these advanced models lies in their practical application. They are moving beyond simple code completion to become active collaborators across the software development lifecycle, from initial design to final deployment, with use cases emerging in a wide range of industries.

Concrete Use Cases and Scenarios

- The AI-Powered Legacy System Modernizer: A senior engineer at a financial institution is tasked with modernizing a monolithic COBOL application. Using a model with a massive context window, they feed the entire legacy codebase into the system. The AI analyzes the code, identifies the business logic, and generates equivalent services in a modern language like Java or Python, complete with API endpoints and unit tests. This is a prime example of the impact these tools can have in finance.

- The Multimodal Prototyper: A startup founder sketches a user flow for a new mobile app on a napkin. They take a photo and upload it to an AI coding assistant, adding a text prompt: “Generate a functional React Native prototype based on this user flow diagram, using a clean, minimalist design.” The model interprets the diagram and text, generating the component structure, navigation logic, and placeholder UI elements, dramatically shortening the path from idea to interactive prototype.

- The Automated Debugging and Security Analyst: A developer encounters a cryptic runtime error. Instead of spending hours searching forums, they give the model the error’s stack trace and the relevant code files. Drawing on patterns learned from vast amounts of code and documentation, the model identifies the likely cause, a subtle race condition, and suggests a corrected snippet that guards the shared state with a proper lock. Beyond interactive debugging, these tools are being integrated into CI/CD pipelines to scan automatically for vulnerabilities, a crucial safety application.
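
The race-condition fix from the debugging scenario looks like this in miniature. The sketch assumes a shared counter updated by several Python threads: the unsafe method performs an unguarded read-modify-write, so updates can be lost when threads interleave, while the safe method wraps the update in a `threading.Lock`. The class and function names are illustrative.

```python
import threading


class Counter:
    """Shared counter whose increment is a read-modify-write sequence."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read, add, write: another thread can run between these steps,
        # so concurrent increments may overwrite each other (a race condition).
        current = self.value
        self.value = current + 1

    def increment(self) -> None:
        # Holding the lock makes the read-modify-write atomic with respect
        # to every other thread that also acquires this lock.
        with self._lock:
            self.value += 1


def run(counter: Counter, method: str, threads: int = 8, per_thread: int = 10_000) -> int:
    """Call counter.<method>() per_thread times from each of `threads` threads."""

    def work() -> None:
        inc = getattr(counter, method)
        for _ in range(per_thread):
            inc()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return counter.value
```

With the defaults, `run(Counter(), "increment")` always returns 80,000. The unsafe variant may or may not lose updates on a given run, because whether threads interleave at the wrong moment depends on the interpreter and scheduler; that nondeterminism is what makes such bugs hard to pin down by hand.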

Best Practices for Integration

To maximize the benefits of these powerful tools, developers should adopt new best practices, starting with deliberate integration into existing workflows.

Tip 1: Master Contextual Prompting. Treat the AI as a junior developer who needs precise instructions. Provide as much context as possible: the programming language and version, required libraries, existing data structures, and the desired coding style or design pattern. Use chain-of-thought prompting for complex tasks, breaking the problem down into logical steps.
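
One way to make contextual prompting systematic is to assemble prompts from a structured record, so the language version, libraries, existing data structures, style guide, and step-by-step breakdown are never forgotten. `CodeTaskContext` and `build_code_prompt` are hypothetical helpers, and the prompt wording is only an example to adapt to the model and tooling in use.

```python
from dataclasses import dataclass, field


@dataclass
class CodeTaskContext:
    """Hypothetical container for the context a coding prompt should carry."""
    task: str
    language: str
    language_version: str
    libraries: list[str] = field(default_factory=list)
    existing_types: str = ""
    style_guide: str = ""
    steps: list[str] = field(default_factory=list)


def build_code_prompt(ctx: CodeTaskContext) -> str:
    lines = [
        f"Language: {ctx.language} {ctx.language_version}",
        f"Allowed libraries: {', '.join(ctx.libraries) or 'standard library only'}",
    ]
    if ctx.existing_types:
        lines += ["Existing data structures:", ctx.existing_types]
    if ctx.style_guide:
        lines.append(f"Coding style / design pattern: {ctx.style_guide}")
    lines += ["", f"Task: {ctx.task}"]
    if ctx.steps:
        # Chain-of-thought style: spell out the logical steps in order.
        lines.append("Work through these steps in order, explaining each before writing code:")
        lines += [f"{i}. {step}" for i, step in enumerate(ctx.steps, start=1)]
    return "\n".join(lines)
```

Keeping the context in a record rather than free text also makes it easy to reuse the same constraints across many requests in a team.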

Tip 2: Implement a Human-in-the-Loop (HITL) System. AI-generated code should never be blindly trusted and pushed to production; it must be reviewed, tested, and validated by a human developer. The AI is an accelerator, not a replacement for human expertise, especially in security, performance, and architectural integrity. This is a core principle in discussions of AI ethics.
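
A human-in-the-loop rule can also be enforced mechanically, for instance as a merge gate in CI. The sketch assumes a hypothetical `ChangeSet` record exposing whether a change was AI-generated, whether tests and a security scan passed, and how many human approvals it has; a real pipeline would read these values from its version-control and CI platforms, and the policy itself is only an example.

```python
from dataclasses import dataclass


@dataclass
class ChangeSet:
    """Hypothetical summary of a proposed change (for example, a pull request)."""
    ai_generated: bool
    tests_passed: bool
    security_scan_passed: bool
    human_approvals: int


def can_merge(change: ChangeSet, required_approvals: int = 1) -> tuple[bool, list[str]]:
    """Return (allowed, reasons) under a hypothetical review policy."""
    reasons: list[str] = []
    if not change.tests_passed:
        reasons.append("test suite failed")
    if not change.security_scan_passed:
        reasons.append("security scan failed")
    # AI-generated code is never merged on automated checks alone.
    if change.ai_generated and change.human_approvals < required_approvals:
        reasons.append(f"AI-generated change needs {required_approvals} human approval(s)")
    return (not reasons, reasons)
```

Returning the reasons alongside the decision lets the CI job explain exactly why a merge was blocked.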

Tip 3: Leverage Customization and Fine-Tuning. For enterprise use, generic models may not suffice. Experience with custom and fine-tuned models suggests that tailoring a base model to a company’s specific codebase, coding standards, and private APIs can yield a significant performance boost and keep generated code aligned with internal practices.
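
Fine-tuning starts with training examples drawn from the company’s own code. The sketch below assumes the chat-style JSONL layout documented for OpenAI’s fine-tuning API, with one JSON object per line holding a `messages` list of system, user, and assistant turns; other providers and open-source trainers expect different formats, so check the target platform’s documentation. The helper names and sample content, including `client.get_user`, are hypothetical.

```python
import json


def to_chat_example(system: str, instruction: str, reference_code: str) -> dict:
    """One training example: a prompt plus the code the team considers correct."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": instruction},
            {"role": "assistant", "content": reference_code},
        ]
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


# Hypothetical example pairing an internal convention with a reference answer.
examples = [
    to_chat_example(
        system="You write Python that follows the team's internal style guide.",
        instruction="Fetch a user by id using our internal client.",
        reference_code="user = client.get_user(user_id)  # hypothetical internal API",
    )
]
print(to_jsonl(examples), end="")
```

The quality of such examples matters more than their number: each assistant turn teaches the model what “correct” looks like in this codebase.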

The Ecosystem at a Glance: Competition, Ethics, and Future Trajectories

The recent surge in high-performing code models signifies a maturing and fiercely competitive market. This dynamic environment is accelerating innovation but also brings to the forefront critical discussions about ethics, safety, and the future of the software development profession.

The Competitive Landscape and the Road to GPT-5

Intensified competition is good for the industry. It drives down API prices, fosters a diversity of approaches, and prevents a single entity from dominating the market. The latest developments put immense pressure on OpenAI, and the community is eagerly awaiting GPT-5, with expectations that it will need to deliver a major leap in capability to definitively reclaim the lead. In parallel, the open-source community continues to thrive, with open-weight models like Llama and Mixtral providing powerful, more transparent alternatives that can be self-hosted, addressing key privacy concerns. This vibrant ecosystem gives developers a wide array of choices, from proprietary cloud APIs to open, self-managed models.

Ethical Considerations and Responsible AI

With great power comes great responsibility. The rise of AI code generation surfaces several ethical challenges. Training data often includes code under various open-source licenses, raising complex legal questions about intellectual property. Models can also generate subtle but critical security vulnerabilities or perpetuate biases present in their training data. Furthermore, there are ongoing debates about the impact on junior developer roles and the potential for deskilling. Leading AI labs are increasingly focused on safety, implementing guardrails and red-teaming efforts to mitigate these risks. As these tools become more integrated into critical infrastructure, from healthcare systems to legal tech, robust regulation and industry-wide safety standards become paramount.

Conclusion: The Dawn of Collaborative Intelligence in Software Engineering

The latest developments in code models make one thing clear: we have crossed a threshold. The era of AI as a simple autocomplete tool is over. We are now entering an age of collaborative intelligence, where developers are augmented by AI partners capable of complex reasoning, multimodal understanding, and large-scale code manipulation. The emergence of new state-of-the-art models that challenge the dominance of established players is a testament to the incredible pace of innovation in the field.

For developers and engineering leaders, the key takeaway is to embrace this change proactively. The focus should be on integrating these tools intelligently, fostering a culture of critical review, and upskilling teams to leverage AI for higher-order tasks like system design and creative problem-solving. The future of software development is not about human versus machine, but about human and machine working in synergy. As we look toward the horizon, current trends promise even more powerful and integrated AI systems that will continue to reshape how we build the digital world.