The Next Frontier: Unpacking the Shift to Autonomous AI with GPT-5 and Agentic Systems

The Dawn of a New AI Paradigm: Beyond Instruction-Following

The evolution of large language models (LLMs) has been nothing short of breathtaking. From the coherent prose of GPT-3 to the sophisticated multimodal reasoning of GPT-4, each iteration has dramatically expanded what artificial intelligence can achieve. We’ve moved from simple text completion to complex problem-solving, content creation, and code generation. However, recent news about GPT models suggests we are on the cusp of another monumental leap: a transition from passive, instruction-following assistants to proactive, autonomous agents. This emerging paradigm is often discussed in the context of a hypothetical GPT-5. It centers on the concept of “Agent Mode,” a framework in which AI can independently plan, execute, and iterate on complex, multi-step tasks. This isn’t merely an upgrade in language comprehension. It is a fundamental shift in the nature of human-AI interaction, and it promises to redefine productivity, creativity, and automation as we know them. Recent OpenAI announcements and broader GPT research point toward a future where AI doesn’t just answer our questions but actively pursues our goals.

Section 1: Understanding the Leap from Language Model to AI Agent

To grasp the significance of an “Agent Mode,” it’s crucial to understand the distinction between a standard LLM and an AI agent. While the underlying technology is similar, their operational capabilities are worlds apart. ChatGPT is widely praised for how well it responds to prompts, but its operation is fundamentally reactive: it waits for input, replies, and stops. An AI agent, by contrast, is proactive and goal-oriented.
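The reactive/proactive contrast can be sketched in a few lines of Python. This is a minimal illustration that assumes two hypothetical callables. `decide` stands in for the model choosing its next action, and `act` stands in for tool execution. Neither is a real OpenAI API. The `max_steps` cap is a design choice that guarantees the loop terminates.

```python
from typing import Callable, List


def reactive(model: Callable[[str], str], prompt: str) -> str:
    """Chatbot pattern: one prompt in, one reply out, then wait for the user."""
    return model(prompt)


def agentic(
    decide: Callable[[str, List[str]], str],
    act: Callable[[str], str],
    goal: str,
    max_steps: int = 10,
) -> List[str]:
    """Agent pattern: keep choosing and taking actions until the goal is judged met.

    `decide` (hypothetical) returns the next action, or "DONE" once it considers
    the goal reached; `act` (hypothetical) performs the action and reports back.
    """
    history: List[str] = []
    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        action = decide(goal, history)
        if action == "DONE":
            break
        history.append(f"{action} -> {act(action)}")
    return history


# Scripted stand-ins for the model and the tools, for illustration only.
script = iter(["locate sales_q3.xlsx", "read data", "DONE"])
trace = agentic(lambda goal, hist: next(script), lambda action: "ok", "Analyze Q3 sales")
print(trace)  # ['locate sales_q3.xlsx -> ok', 'read data -> ok']
print(reactive(lambda p: f"Reply to: {p}", "What were Q3 sales?"))
```

The reactive function returns after a single call. The agentic one keeps going on its own until its decision step says it is done or the step cap is hit.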

What Defines an AI Agent?

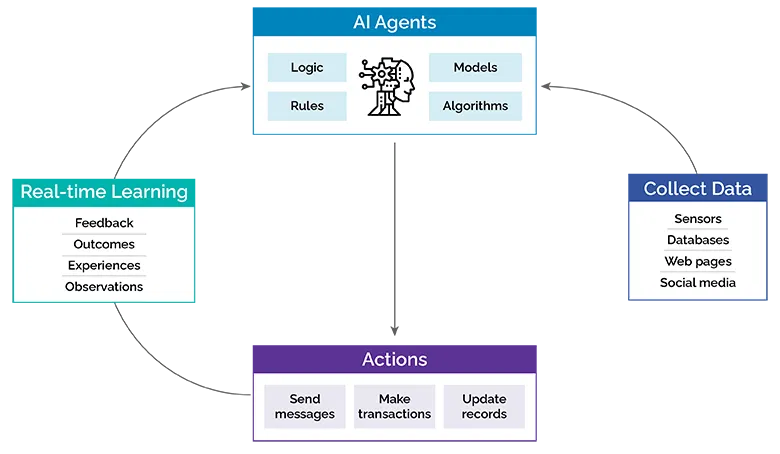

An AI agent, powered by a next-generation model like a potential GPT-5, is a system designed to perceive its environment, make decisions, and take actions to achieve a specific goal. Unlike a chatbot that waits for the next prompt, an agent can break down a high-level objective into a sequence of smaller, executable steps. Key components that differentiate an agent from a standard LLM include:

- Planning and Decomposition: Given a complex goal like “Analyze our Q3 sales data and create a presentation for the board,” an agent first formulates a plan. This might involve steps like: 1) Locate the sales data spreadsheet. 2) Open and read the data. 3) Perform statistical analysis to identify key trends. 4) Generate relevant charts and graphs. 5) Synthesize findings into a narrative. 6) Create a slide deck with the narrative and visuals.

- Tool Use and Integration: This is a critical capability. An agent isn’t confined to its own internal knowledge. Early versions of this have already appeared through API function calling and ChatGPT plugins. A true agent can interact with external tools: it can run Python code in a sandboxed environment, access files on a local system (with permission), query databases, or use third-party APIs to fetch real-time information. This turns the AI from a text-in, text-out model into an active participant in a digital ecosystem.

- Memory and Self-Correction: To execute a multi-step plan, an agent needs both short-term memory (to track its current task) and long-term memory (to learn from past attempts). Crucially, it must also possess a self-correction mechanism. If a step fails (e.g., a line of code produces an error), the agent can analyze the error message, revise its approach, and try again without requiring human intervention for every micro-failure. This iterative process is a hallmark of agentic behavior, a topic frequently covered in GPT Training Techniques News.
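The self-correction cycle described above can be sketched as a bounded retry loop that feeds each error message back into a revision step. This is a minimal sketch built on two hypothetical hooks. `execute` stands in for a sandboxed interpreter, and `revise` stands in for asking the model to fix its own code. The toy `eval` used here is not a real sandbox.

```python
from typing import Callable, Optional


def run_with_self_correction(
    code: str,
    execute: Callable[[str], object],
    revise: Callable[[str, str], str],
    max_attempts: int = 3,
) -> object:
    """Run `code`; on failure, pass the error text to `revise` and try again."""
    last_error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return execute(code)
        except Exception as exc:
            last_error = f"{type(exc).__name__}: {exc}"
            if attempt < max_attempts:
                code = revise(code, last_error)  # the "analyze and revise" step
    raise RuntimeError(f"gave up after {max_attempts} attempts; last error: {last_error}")


# Toy environment: a single data row whose real column name is "Product_ID".
row = {"Product_ID": "A-100", "Revenue": 1200.0}


def execute(expr: str) -> object:
    # Illustration only: eval with builtins removed is NOT a secure sandbox.
    return eval(expr, {"__builtins__": {}}, {"row": row})


def revise(expr: str, error: str) -> str:
    # Stand-in for the model: fixes the one mistake this demo knows about.
    if error.startswith("KeyError") and "ProductID" in error:
        return expr.replace("ProductID", "Product_ID")
    return expr


print(run_with_self_correction('row["ProductID"]', execute, revise))  # A-100
```

The `max_attempts` bound matters. Without it, a revision step that never finds the fix would retry indefinitely.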

This paradigm shift is at the core of current GPT trends, moving beyond the simple Q&A format that dominated the GPT-3.5 era and much of the GPT-4 era.

Section 2: A Technical Deep Dive into “Agent Mode” Architecture

While the exact specifications of a future GPT-5 remain speculative, we can infer the likely architecture of an “Agent Mode” from current research and emerging patterns across the GPT ecosystem. Such a system would be a sophisticated orchestration of multiple components, all governed by a powerful core reasoning engine.

Core Components of a Hypothetical Agentic System

A robust “Agent Mode” would likely be built upon a foundation model with stronger reasoning, a lower hallucination rate, and a significantly larger context window than current models. Ongoing discussion of GPT architecture and scaling suggests that future models will be optimized for exactly these characteristics. The agentic layer built on top would consist of several key modules:

1. The Planning Module (The “Brain”):

This module receives the user’s high-level goal and, using the core LLM’s reasoning capabilities, decomposes it into a detailed, step-by-step plan. This plan is not static; it’s a dynamic “thought process” that can be updated as new information becomes available or as steps fail. For instance, if the initial plan was to use a specific Python library that turns out to be deprecated, the planning module would adjust to find an alternative.
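A dynamic plan of this kind can be modeled as an ordered list of steps in which a failed step is replaced in place. This is a minimal sketch with assumptions throughout. The `Plan` and `Step` classes are hypothetical, and `legacy_plotlib` is an invented name standing in for "a library that turns out to be deprecated."

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    description: str
    done: bool = False


@dataclass
class Plan:
    goal: str
    steps: List[Step] = field(default_factory=list)

    def next_step(self) -> Optional[Step]:
        """Return the first unfinished step, or None when the plan is complete."""
        return next((s for s in self.steps if not s.done), None)

    def revise(self, failed: Step, replacements: List[str]) -> None:
        """Replace a failed step in place with new steps, keeping the rest of the plan."""
        # Match by identity, since two steps may share the same description.
        i = next(i for i, s in enumerate(self.steps) if s is failed)
        self.steps[i:i + 1] = [Step(d) for d in replacements]


plan = Plan("Chart Q3 sales", [Step("Load data"), Step("Plot with legacy_plotlib"), Step("Save chart")])
plan.steps[0].done = True
failed = plan.next_step()  # suppose this step fails because the library is deprecated
assert failed is not None
plan.revise(failed, ["Install matplotlib", "Plot with matplotlib"])
print([s.description for s in plan.steps])
# ['Load data', 'Install matplotlib', 'Plot with matplotlib', 'Save chart']
```

Completed steps and later steps are left untouched. Only the failing step is swapped out, which keeps the revision local.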

2. The Tool-Use Module (The “Hands”):

This is the agent’s interface with the outside world. It would maintain a library of available “tools.” These tools could range from simple functions like `read_file()` or `write_file()` to complex API integrations for platforms like Salesforce, Google Analytics, or internal company databases. Recent integrations across the GPT ecosystem show a clear trend toward this kind of interoperability. For developers, this module is also where code-generation models become highly relevant, as the agent could write and execute its own scripts to accomplish tasks.
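A tool library can be sketched as a name-to-function registry plus a dispatcher that executes the model's chosen call. This sketch makes several assumptions. The JSON shape `{"name": ..., "arguments": {...}}` is illustrative, not any particular vendor's wire format. `read_file` and `write_file` are simple local implementations of the tools named above.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register `fn` in the tool library under its function name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def read_file(path: str) -> str:
    return Path(path).read_text(encoding="utf-8")


@tool
def write_file(path: str, content: str) -> str:
    Path(path).write_text(content, encoding="utf-8")
    return f"wrote {len(content)} characters to {path}"


def dispatch(call_json: str) -> str:
    """Run a tool call given as JSON, e.g. {"name": "read_file", "arguments": {"path": "a.txt"}}."""
    call = json.loads(call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return f"error: unknown tool {call['name']!r}"
    try:
        return str(fn(**call.get("arguments", {})))
    except Exception as exc:
        # Errors go back to the model as text so it can self-correct.
        return f"error: {type(exc).__name__}: {exc}"


path = os.path.join(tempfile.mkdtemp(), "notes.txt")
print(dispatch(json.dumps({"name": "write_file", "arguments": {"path": path, "content": "hello"}})))
print(dispatch(json.dumps({"name": "read_file", "arguments": {"path": path}})))  # hello
print(dispatch(json.dumps({"name": "delete_everything", "arguments": {}})))
# error: unknown tool 'delete_everything'
```

Returning errors as strings, rather than raising them, keeps the agent loop alive. It also gives the model something concrete to reason about on its next attempt.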

3. The Memory Module (The “Mind’s Eye”):

To function effectively, an agent needs a memory system. This would likely be two-tiered:

- Short-Term/Working Memory: A “scratchpad” that holds the current plan, the results of the last action, error messages, and immediate context. This allows the agent to maintain a coherent train of thought throughout a single task.

- Long-Term Memory: A more persistent store, likely backed by a vector database, where the agent can keep key learnings, successful procedures, user preferences, and project-specific information. This spares it from re-learning everything in each new session, and it is a hot topic in discussions of custom GPT models.
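The two-tier design can be sketched with a bounded queue for working memory and a similarity-searched list for long-term memory. This is a minimal sketch built on a loud assumption. The `embed` function is a toy bag-of-words vector standing in for a real embedding model, and the plain list stands in for a vector database.

```python
import math
import re
from collections import Counter, deque
from typing import Deque, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class AgentMemory:
    def __init__(self, working_size: int = 5) -> None:
        # Short-term scratchpad: a bounded queue that drops the oldest entries.
        self.working: Deque[str] = deque(maxlen=working_size)
        # Long-term store: (text, vector) pairs kept across tasks.
        self.long_term: List[Tuple[str, Counter]] = []

    def note(self, text: str) -> None:
        self.working.append(text)

    def remember(self, text: str) -> None:
        self.long_term.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored memories most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


mem = AgentMemory(working_size=2)
for event in ["opened sales_q3.xlsx", "KeyError: 'ProductID'", "renamed column to Product_ID"]:
    mem.note(event)
print(list(mem.working))  # ["KeyError: 'ProductID'", 'renamed column to Product_ID']

mem.remember("sales spreadsheets use the column Product_ID, not ProductID")
mem.remember("the user prefers bar charts over pie charts")
print(mem.recall("which chart type does the user like"))
# ['the user prefers bar charts over pie charts']
```

Swapping in a real embedding model and vector store would change the quality of recall but not the shape of this interface.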

Case Study: Automating a Financial Report in Excel

Imagine a financial analyst provides the prompt: “Generate a Q3 performance report for our top five products. The data is in ‘sales_q3.xlsx’. The report should include total revenue per product, month-over-month growth, and a bar chart visualizing the results. Save the final report as ‘Q3_Report.docx’.”

An agent in “Agent Mode” would execute the following hypothetical steps:

- Plan: Decompose the goal into: access file, read data, calculate metrics, generate chart, create document, insert text and chart, save document.

- Tool Use (File System): Execute `read_file('sales_q3.xlsx')`.

- Tool Use (Code Interpreter): Load the data into a pandas DataFrame. Perform calculations for revenue and growth.

- Self-Correction: If a column name is incorrect (e.g., ‘ProductID’ instead of ‘Product_ID’), the code will error. The agent reads the error, inspects the first few rows of the data to find the correct column name, and corrects its code.

- Tool Use (Data Visualization): Use a library like Matplotlib to generate the bar chart and save it as an image file.

- Tool Use (Document Creation): Create a new Word document. Write the summary text, pulling the calculated metrics from its working memory.

- Tool Use (File Integration): Insert the saved chart image into the document.

- Finalization: Save the document as `Q3_Report.docx` and notify the user of completion.

This entire workflow, which currently requires significant manual effort, could be completed autonomously in minutes. This is the promise driving much of the current excitement around GPT applications and agents.
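The calculation and self-correction steps of the case study can be sketched as follows. Some assumptions apply. The rows are hypothetical stand-ins for the contents of `sales_q3.xlsx`, not real data. The column names `Month` and `Revenue` are invented for the example. Plain Python replaces pandas so the sketch runs on the standard library alone; with pandas, `pandas.read_excel` followed by a `groupby` would play the same role. The charting and Word-document steps, typically handled by libraries such as Matplotlib and python-docx, are omitted.

```python
import difflib
from collections import defaultdict
from typing import Dict, List

# Hypothetical rows standing in for what would be read from 'sales_q3.xlsx'.
ROWS: List[dict] = [
    {"Product_ID": "A", "Month": 7, "Revenue": 100.0},
    {"Product_ID": "A", "Month": 8, "Revenue": 110.0},
    {"Product_ID": "A", "Month": 9, "Revenue": 121.0},
    {"Product_ID": "B", "Month": 7, "Revenue": 200.0},
    {"Product_ID": "B", "Month": 8, "Revenue": 180.0},
    {"Product_ID": "B", "Month": 9, "Revenue": 198.0},
    {"Product_ID": "C", "Month": 7, "Revenue": 50.0},
    {"Product_ID": "C", "Month": 8, "Revenue": 50.0},
    {"Product_ID": "C", "Month": 9, "Revenue": 75.0},
]


def resolve_column(rows: List[dict], wanted: str) -> str:
    """Self-correction step: map a guessed column name onto the closest real one."""
    columns = list(rows[0])
    if wanted in columns:
        return wanted
    matches = difflib.get_close_matches(wanted, columns, n=1, cutoff=0.6)
    if not matches:
        raise KeyError(f"no column resembling {wanted!r}; columns are {columns}")
    return matches[0]


def q3_metrics(rows: List[dict], product_col: str, top_n: int = 5) -> Dict[str, dict]:
    """Total revenue and month-over-month growth (%) for the top `top_n` products."""
    monthly: Dict[str, Dict[int, float]] = defaultdict(dict)
    for r in rows:
        by_month = monthly[r[product_col]]
        by_month[r["Month"]] = by_month.get(r["Month"], 0.0) + r["Revenue"]

    report = {}
    for product, by_month in monthly.items():
        months = sorted(by_month)
        growth = [
            round((by_month[b] - by_month[a]) / by_month[a] * 100, 1) if by_month[a] else None
            for a, b in zip(months, months[1:])
        ]
        report[product] = {"total": sum(by_month.values()), "mom_growth_pct": growth}

    # Keep the top products by total revenue, highest first.
    ranked = sorted(report.items(), key=lambda kv: kv[1]["total"], reverse=True)
    return dict(ranked[:top_n])


product_col = resolve_column(ROWS, "ProductID")  # the agent's first guess was wrong
for product, m in q3_metrics(ROWS, product_col).items():
    print(product, m["total"], m["mom_growth_pct"])
# B 578.0 [-10.0, 10.0]
# A 331.0 [10.0, 10.0]
# C 175.0 [0.0, 50.0]
```

Growth is reported as `None` when the earlier month's revenue is zero, which avoids a division-by-zero crash midway through the report.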

Section 3: Transformative Implications Across Industries

The introduction of robust AI agents would not be an incremental improvement; it would be a seismic shift, fundamentally altering workflows and creating new possibilities across virtually every sector. The impact would extend far beyond simple productivity gains, touching everything from scientific research to creative arts.

Real-World Applications of Agentic AI

GPT in Finance News: In finance, agents could perform complex market analysis by autonomously gathering data from multiple sources (news APIs, stock tickers, financial reports), running sentiment analysis, performing technical analysis using code interpreters, and generating a consolidated investment brief. This would compress days of research into a single, automated task.

GPT in Healthcare News: Medical researchers could deploy agents to sift through thousands of published studies, cross-referencing data on gene sequences, drug interactions, and clinical trial results to identify promising avenues for new research. An agent could formulate hypotheses, test them against available datasets, and summarize findings, dramatically accelerating the pace of discovery.

GPT in Legal Tech News: A legal agent could handle the discovery process by scanning millions of documents, identifying relevant information based on case law, redacting sensitive data according to privacy rules (a key topic in GPT Privacy News), and categorizing evidence for review by human lawyers.

GPT in Marketing News: A marketing team could task an agent with “launching a social media campaign for our new product.” The agent would research the target audience, generate ad copy and images (drawing on vision and multimodal models), schedule posts across different platforms, and then monitor engagement metrics, providing a performance report at the end of the week.

GPT in Content Creation News: For content creators, an agent could take a simple idea like “a blog post about the benefits of remote work” and execute the entire process: conduct keyword research, outline the article, write the draft, find or generate relevant images, and even publish it to a WordPress site. This would represent a huge leap for GPT-powered assistants and chatbots.

Section 4: Best Practices, Pitfalls, and Ethical Guardrails

While the potential of AI agents is immense, their power and autonomy also introduce significant new challenges. Deploying these systems effectively and safely will require new best practices and a keen awareness of potential pitfalls. The discourse around GPT ethics and safety will become more critical than ever.

Best Practices for Working with AI Agents

- Goal-Oriented Prompting: Shift from giving step-by-step instructions to defining a clear, unambiguous end goal. Specify constraints, success criteria, and the desired final output.

- Human-in-the-Loop (HITL) Supervision: For critical tasks, implement approval gates. The agent can complete a series of steps and then pause to request human approval before executing a sensitive action, like sending an email to a client list or deploying code to production.

- Scoped Permissions: Never grant an AI agent unrestricted access to your entire system. Use sandboxed environments and provide access only to the specific files, tools, and APIs necessary for the task at hand. This is a central theme in GPT Deployment News.

- Iterative Refinement: Start with smaller, less critical tasks to understand the agent’s capabilities and limitations. Gradually increase the complexity as you build trust in its performance.
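Scoped permissions and human-in-the-loop approval gates can be combined in one check that runs before every tool call. This is a minimal sketch, and everything in it is hypothetical: the `GuardedExecutor` class, the tool names `send_email` and `deploy_code`, and the `approve` callback, which in practice would prompt a human reviewer. It uses `Path.is_relative_to`, which requires Python 3.9 or later.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict, List, Set, Tuple


class PermissionDenied(Exception):
    pass


class GuardedExecutor:
    """Checks each proposed tool call against scoped permissions and approval gates."""

    def __init__(
        self,
        allowed_tools: Set[str],
        sandbox_dir: str,
        sensitive_tools: Set[str],
        approve: Callable[[str, Dict], bool],
    ) -> None:
        self.allowed_tools = allowed_tools
        self.sandbox = Path(sandbox_dir).resolve()
        self.sensitive_tools = sensitive_tools
        self.approve = approve  # e.g. a UI prompt to a human reviewer

    def check(self, tool: str, args: Dict) -> None:
        """Raise PermissionDenied unless the call is in scope (and approved, if sensitive)."""
        if tool not in self.allowed_tools:
            raise PermissionDenied(f"tool {tool!r} is not allowed for this task")
        if "path" in args:
            target = (self.sandbox / args["path"]).resolve()
            if not target.is_relative_to(self.sandbox):  # blocks '../' escapes and absolute paths
                raise PermissionDenied(f"path {args['path']!r} is outside the sandbox")
        if tool in self.sensitive_tools and not self.approve(tool, args):
            raise PermissionDenied(f"reviewer declined {tool!r}")


requests: List[Tuple[str, Dict]] = []


def reviewer(tool: str, args: Dict) -> bool:
    requests.append((tool, args))  # record what a human was asked to approve
    return False  # simulate the reviewer declining


guard = GuardedExecutor(
    allowed_tools={"read_file", "send_email"},
    sandbox_dir=tempfile.mkdtemp(),
    sensitive_tools={"send_email"},
    approve=reviewer,
)

for tool, args in [
    ("read_file", {"path": "sales_q3.xlsx"}),
    ("read_file", {"path": "../../etc/passwd"}),
    ("deploy_code", {}),
    ("send_email", {"to": "client-list"}),
]:
    try:
        guard.check(tool, args)
        print(f"{tool}: allowed")
    except PermissionDenied as exc:
        print(f"{tool}: denied ({exc})")
```

The allowlist is deny-by-default. Any tool not explicitly granted for the task is refused, which matches the "never grant unrestricted access" practice.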

Common Pitfalls and Ethical Considerations

- Security Vulnerabilities: An agent with tool-use capabilities is a potential attack vector. A malicious actor could trick the agent into executing harmful code or leaking sensitive data. Robust security protocols are non-negotiable.

- Cost Overruns: Since agents can perform many actions (each potentially an API call), a poorly defined goal could lead to a runaway process that incurs significant computational costs. Clear budget limits and monitoring are essential.

- Reliability and Hallucination: While models are improving, they can still make mistakes or “hallucinate” facts. An agent acting on flawed information could lead to disastrous outcomes. Verifying critical outputs remains crucial.

- Bias and Fairness: The underlying models are trained on vast datasets, which can contain biases. An autonomous agent could perpetuate or even amplify these biases at scale. Ongoing audits and discussions in GPT Bias & Fairness News are vital.

- Regulation and Accountability: As agents become more autonomous, questions of accountability become paramount. Who is responsible if an AI agent makes a costly error? This is a key topic for GPT Regulation News and will require new legal and corporate frameworks.
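The budget limits recommended under "Cost Overruns" can be enforced by charging every action against hard caps before it runs. This is a minimal sketch with stated assumptions. The `Budget` class is hypothetical, and the $0.03 per-call cost is an invented figure, not a real price.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    pass


@dataclass
class Budget:
    max_steps: int
    max_cost_usd: float
    steps: int = 0
    cost_usd: float = 0.0

    def charge(self, cost_usd: float) -> None:
        """Record one action; refuse it if it would break either limit."""
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded(f"step limit of {self.max_steps} reached")
        if self.cost_usd + cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost limit of ${self.max_cost_usd:.2f} would be exceeded")
        self.steps += 1
        self.cost_usd += cost_usd


budget = Budget(max_steps=50, max_cost_usd=1.00)
try:
    while True:  # a vaguely defined goal that never signals completion
        budget.charge(0.03)  # hypothetical cost per model/API call
except BudgetExceeded as exc:
    print(f"halted after {budget.steps} steps: {exc}")
# halted after 33 steps: cost limit of $1.00 would be exceeded
```

The check happens before the action is recorded. A runaway loop therefore stops at the last affordable step instead of overshooting the limit.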

Conclusion: Charting the Course for a Collaborative Future

The transition from advanced language models to autonomous AI agents represents the next major chapter in the story of artificial intelligence. The developments hinted at in the ongoing GPT-5 news cycle point not just to more powerful tools, but to a new class of digital collaborators. An “Agent Mode” powered by a next-generation model would fundamentally reshape our interaction with technology, automating complex workflows and unlocking unprecedented levels of productivity and creativity. However, this power demands responsibility. Navigating this new frontier will require a concerted effort to build robust safety features, establish clear ethical guidelines, and foster a culture of mindful human-AI collaboration. The future isn’t about replacing humans, but about empowering them with intelligent agents that can handle the tedious, complex, and time-consuming tasks, freeing us to focus on strategic thinking, innovation, and the uniquely human aspects of our work.