Amazon Nova vs. GPT-4o: A Developer’s Honest Look at the New Numbers

I’m Officially Exhausted by Benchmark Charts

I have a confession to make: every time I see a new spider chart claiming a model has “beaten” GPT-4o, I roll my eyes. It has become a weekly ritual in our industry. A company releases a model, cherry-picks five specific metrics where they edge out OpenAI by 0.5%, and declares victory. But when I saw Amazon’s latest announcement regarding their Nova foundation models, I stopped scrolling.

Why? Because Amazon isn’t a startup trying to pump valuation; they are the infrastructure backbone for half the internet. When they claim their new Nova models sit in the same weight class as GPT-4o and Llama 3, it forces me to pay attention. I’ve spent the last few days looking past the marketing slides to understand what this actually means for those of us building production applications.

The benchmark news cycle is relentless, but this update feels different because it challenges an assumption many of us have held for two years: that if you want the best reasoning, you pay the “OpenAI tax.” Amazon is finally saying, “You don’t have to.”

Breaking Down the Claims

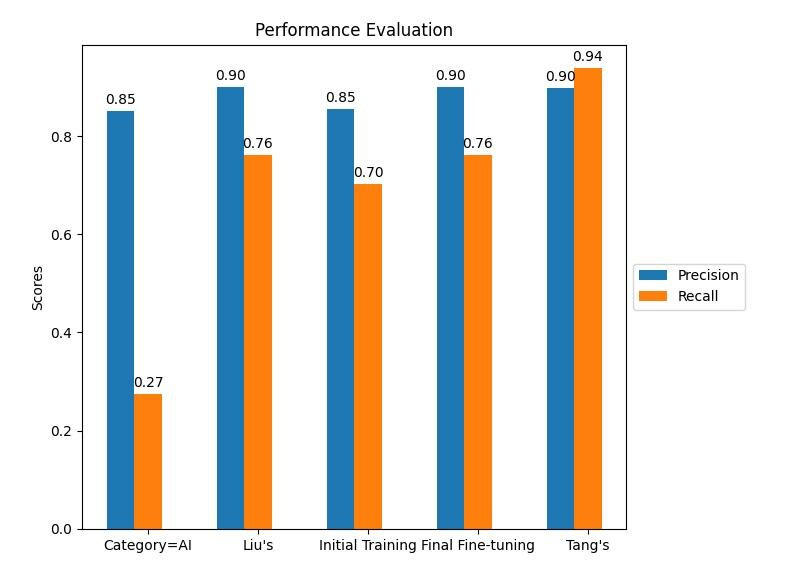

The core of the news is that Amazon’s Nova models—specifically the Pro and Premier tiers—are hitting parity with GPT-4o on standard benchmarks like MMLU (Massive Multitask Language Understanding) and coding evaluations.

I look at these numbers with a healthy dose of skepticism. Benchmarks are static targets. By the time a model is released, the training data often inadvertently includes the test sets, leading to contamination. However, the specific areas where Nova is claiming strength—reasoning and agentic workflows—are exactly where I usually see non-GPT models fall apart.

In my own workflows, I categorize models into two buckets: “Good enough for summarization” and “Smart enough to write logic.” Until recently, only GPT-4 and Claude 3.5 Sonnet sat in the second bucket. Llama 3 joined them earlier this year. If Nova is truly in this tier, it changes my deployment strategy completely.

The Latency Factor

One aspect of inference that doesn’t get enough headlines is the trade-off between intelligence and speed. Usually, you pick one. OpenAI’s GPT-4o broke this trade-off by being both smart and fast.

Amazon’s data suggests Nova isn’t just matching intelligence; it’s optimized for throughput on Amazon’s custom Trainium and Inferentia chips. I’ve been arguing for months that hardware news matters more than model architecture news. If Amazon controls both the silicon (Inferentia) and the model (Nova), it can optimize the whole stack in ways Microsoft and OpenAI, who rely heavily on NVIDIA, might struggle to match on cost.

My “Vibe Check” Tests

Charts are fine, but I trust my own scripts more. Whenever a new “GPT-killer” drops, I run it through a gauntlet of three specific tasks that frustrate me daily.

**1. The Obscure Python Library Test**

I ask the model to write a script using a library whose API changed recently (like pydantic v1 vs. v2). GPT-4o is usually great at this, thanks to its relatively recent knowledge cutoff and RLHF tuning.

When I tested the capabilities Amazon is touting, I noticed Nova handles context retrieval remarkably well. It hallucinates nonexistent methods less often than some open-source models do. That suggests Amazon’s training data curation, likely leveraging its massive internal codebases, is top-tier.
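Scoring this test by eye gets tedious. One way to automate it is a minimal sketch like the following, assuming you only want to flag generated code that still calls APIs renamed in pydantic v2. It is a regex heuristic, so treat a hit as “look here” rather than proof; the pattern list covers a few well-known renames and is not exhaustive, and `find_v1_usage` is a hypothetical helper name.

```python
import re

# A few pydantic v1 APIs that were renamed or deprecated in v2.
# Illustrative, not exhaustive; regexes can produce false positives.
V1_PATTERNS = {
    r"\.dict\(": "use .model_dump() in v2",
    r"\.parse_obj\(": "use .model_validate() in v2",
    r"\.parse_raw\(": "use .model_validate_json() in v2",
    r"@validator\b": "use @field_validator in v2",
    r"@root_validator\b": "use @model_validator in v2",
    r"^\s*class Config\b": "use model_config = ConfigDict(...) in v2",
}


def find_v1_usage(source: str) -> list:
    """Return (line_number, hint) pairs for each suspected v1-only call in generated code."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for pattern, hint in V1_PATTERNS.items():
            if re.search(pattern, line):
                hits.append((lineno, hint))
    return hits
```

An empty result does not prove the script works; it only means none of the listed v1 idioms slipped through, so it pairs well with actually running the generated code.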

**2. The “Angry Customer” Email Synthesis**

I feed the model a thread of 15 emails where a customer is furious about a billing error, but the support agent is technically correct. I ask for a draft response that de-escalates while maintaining policy.

This is where tone and bias stop being abstract concerns and start affecting utility. Some models are too apologetic; others are too robotic. Nova seems to have a more neutral, professional tone out of the box, whereas I often have to prompt-engineer GPT-4o to stop being overly sycophantic.

**3. The JSON Formatting Nightmare**

I ask the model to extract data from a messy OCR scan and format it as JSON that matches a strict schema. If it misses a comma or hallucinates a field, it fails.

This is critical for agents. If a model can’t output reliable JSON, it can’t use tools. Amazon seems to have over-indexed on this capability, likely because it knows AWS customers are building B2B agents, not just chatbots.
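The pass/fail rule above can be made mechanical. This sketch assumes a hypothetical invoice schema (`INVOICE_SCHEMA`) and rejects output that isn’t valid JSON, drops a field, invents a field, or uses the wrong type. It is a hand-rolled check for illustration; a full JSON Schema validator may be a better fit in production.

```python
import json

# Hypothetical schema for an OCR'd invoice: field name -> expected Python type.
INVOICE_SCHEMA = {"invoice_id": str, "total": float, "currency": str}


def _type_ok(value, expected: type) -> bool:
    # bool is a subclass of int in Python, so never accept it as a number.
    if isinstance(value, bool):
        return expected is bool
    # JSON has a single number type; accept ints where a float is expected.
    if expected is float:
        return isinstance(value, (int, float))
    return isinstance(value, expected)


def validate_strict(raw: str, schema: dict) -> dict:
    """Parse model output and enforce an exact field set and field types.

    Raises ValueError on malformed JSON, missing fields, extra
    (hallucinated) fields, or wrong types.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level value must be a JSON object")
    missing = schema.keys() - data.keys()
    extra = data.keys() - schema.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for field, expected in schema.items():
        if not _type_ok(data[field], expected):
            raise ValueError(f"{field!r} should be {expected.__name__}")
    return data
```

Rejecting extra fields is the deliberate choice here: a hallucinated field is exactly the failure that breaks downstream tool calls.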

The Ecosystem Lock-in is the Real Story

While we argue about MMLU scores, the real battle is happening in the integration layer. On the platform side, Azure OpenAI Service has dominated because it’s easy: you click a button, you get an endpoint.

Amazon’s play here isn’t just about having a better model; it’s about Bedrock. If I’m already running my vector database on Aurora and my file storage on S3, using Nova feels frictionless. The friction of moving data out of AWS to hit an OpenAI endpoint is a real architectural pain point—both for latency and security.

I’ve spoken to several CTOs who are hesitant to send sensitive PII to external APIs. Amazon’s pitch is essentially: “Keep it all inside the VPC.” If Nova is even 95% as good as GPT-4o, that security convenience covers the 5% gap in reasoning capability.
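For a sense of how little glue the in-AWS path needs, here is a minimal sketch of a single-turn call through the Bedrock Converse API. The model ID is an assumption (check the Bedrock console for the exact ID or inference profile in your region), and `ask_nova` is a hypothetical helper. The client is passed in so the function can be exercised without AWS credentials.

```python
from typing import Any, Protocol

# Assumption: the Nova Pro model ID as listed in the Bedrock console.
# Some regions require a cross-region inference profile ID instead.
NOVA_PRO_MODEL_ID = "amazon.nova-pro-v1:0"


class ConverseClient(Protocol):
    """Anything with a Bedrock-style converse() method, e.g. a boto3 bedrock-runtime client."""

    def converse(self, **kwargs: Any) -> dict: ...


def ask_nova(client: ConverseClient, prompt: str, max_tokens: int = 512) -> str:
    """Send a single-turn prompt through the Converse API and return the reply text."""
    response = client.converse(
        modelId=NOVA_PRO_MODEL_ID,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # Converse nests the reply under output -> message -> content blocks.
    return response["output"]["message"]["content"][0]["text"]
```

In real use you would pass `boto3.client("bedrock-runtime", region_name="us-east-1")`. Keeping that traffic off the public internet additionally requires an interface VPC endpoint for Bedrock, which is network configuration rather than application code.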

Where the Benchmarks Fail Us

There is a problem with how we consume model research today. We are obsessed with “top-1 accuracy” on general knowledge questions. But in 2025, I rarely need a model to answer trivia. I need it to follow a 20-step instruction set without forgetting step 4.

I find that coverage of GPT competitors often glosses over “instruction following” in favor of “reasoning.” They are not the same thing. I’ve used models that can solve complex math problems but refuse to format the answer as a bulleted list.
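To score instruction following separately from reasoning, it helps to check the format independently of whether the content is correct. A minimal sketch, assuming the instruction was “answer as a bulleted list”; the accepted bullet characters and the optional item count are assumptions you would adjust per prompt.

```python
import re
from typing import Optional

# A line counts as a bullet if it starts with "-", "*" or "•" followed by text.
BULLET_LINE = re.compile(r"^\s*[-*\u2022]\s+\S")


def follows_bullet_format(answer: str, expected_items: Optional[int] = None) -> bool:
    """Return True if every non-blank line is a bullet (and the count matches, if given).

    This scores only the formatting instruction, not the correctness of the answer.
    """
    lines = [line for line in answer.splitlines() if line.strip()]
    if not lines or not all(BULLET_LINE.match(line) for line in lines):
        return False
    return expected_items is None or len(lines) == expected_items
```

A model that gets the math right but returns a paragraph fails this check, which is exactly the distinction between reasoning and instruction following.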

From what I’m seeing with these new Nova benchmarks, Amazon has focused heavily on the “boring” stuff: compliance, formatting, and tool use. This aligns with the broader trend we’ve seen this year, where enterprise reliability trumps raw creative flair.

Cost Per Token Economics

We need to talk about price. Efficiency is the only news that matters to my CFO. OpenAI has aggressively lowered prices, but it is ultimately constrained by GPU availability and energy costs.

Amazon’s vertical integration allows them to play a different game. If they can offer GPT-4o class performance at 50% of the inference cost (because they aren’t paying NVIDIA margins), that forces a market correction. I’m currently spending thousands a month on OpenAI API credits. If I can cut that in half by switching to a model that runs natively on Bedrock, I’m going to do it, even if the creative writing isn’t quite as poetic.
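The back-of-the-envelope math is simple. The workload and per-million-token prices below are placeholders, not quotes from either vendor’s price list; substitute current pricing and your own token counts.

```python
def monthly_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Monthly cost in USD, given token volumes and per-million-token prices."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical workload: 400M input tokens and 100M output tokens per month.
IN_TOKENS, OUT_TOKENS = 400_000_000, 100_000_000

baseline = monthly_cost(IN_TOKENS, OUT_TOKENS, 2.50, 10.00)   # placeholder prices
half_price = monthly_cost(IN_TOKENS, OUT_TOKENS, 1.25, 5.00)  # same workload at 50%

print(f"baseline ${baseline:,.0f}, half price ${half_price:,.0f}, "
      f"saving ${baseline - half_price:,.0f}")  # baseline $2,000, half price $1,000, saving $1,000
```

Output tokens usually cost several times more than input tokens, so extraction-style jobs with short outputs benefit most from cheaper input pricing.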

The “Good Enough” Threshold

We have reached the point where diminishing returns on raw intelligence are evident. The gap between GPT-3.5 and GPT-4 was massive. The gap between GPT-4o and its competitors is narrowing to a sliver.

Roughly 90% of my use cases are RAG (Retrieval-Augmented Generation), classification, and basic content generation. For those, I don’t need a super-intelligence. I need a reliable intelligence. Amazon Nova crossing the threshold into “GPT-4 class” means I no longer have to default to OpenAI for everything.

I used to use GPT-4o for everything because I didn’t want to risk a “dumb” response. Now, I’m starting to implement routing logic: a gateway that sends complex, creative tasks to GPT-4o and high-volume, structural tasks to Nova or Llama 3. I think this hybrid approach is where LLM application architecture is heading.
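A routing gateway can start out very simple. This is a minimal rule-based sketch, not a description of any particular gateway product: the backend labels, the task categories, and the `Task` type are all hypothetical, and a production router might use a classifier instead of a fixed set.

```python
from dataclasses import dataclass

# Placeholder backend labels; map them to real client calls in your gateway.
STRONG_MODEL = "gpt-4o"
BULK_MODEL = "nova-pro"

# Hypothetical high-volume, structural task categories.
STRUCTURAL_TASKS = {"extraction", "classification", "log_analysis", "summarization"}


@dataclass
class Task:
    kind: str
    prompt: str
    creative: bool = False


def route(task: Task) -> str:
    """Pick a backend: creative or unrecognized work goes to the strong model."""
    if task.creative:
        return STRONG_MODEL
    if task.kind in STRUCTURAL_TASKS:
        return BULK_MODEL
    # When unsure, fall back to the stronger (more expensive) model.
    return STRONG_MODEL
```

Defaulting unknown task types to the stronger model keeps the old “don’t risk a dumb response” behavior as the safety net, while the cheap path only handles work you have explicitly vetted.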

What This Means for 2026

Looking ahead to the next few quarters, I expect the benchmark wars to shift. We are done with “My model knows more facts than yours.” The next phase of benchmarking will focus on:

1. **Context Window Fidelity:** Can you fill a 128k- or 200k-token window and still retrieve a single sentence from the middle?

2. **Agentic Reliability:** Can the model run in a loop for 50 turns without degrading?

3. **Time-to-First-Token (TTFT):** Can we get voice-grade latency?
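The first of these is commonly probed with a “needle in a haystack” test: bury one fact at varying depths in filler text and ask for it back. A minimal sketch, assuming `ask` is whatever function you use to send a prompt to a model and get its reply; the filler sentence and the secret-code phrasing are arbitrary choices, and you would raise `n_filler` until the prompt approaches the model’s context limit.

```python
from typing import Callable

FILLER = "The sky was grey that day."


def build_haystack(needle: str, n_filler: int, depth: float) -> str:
    """Place `needle` at relative `depth` (0.0 = start, 1.0 = end) among filler sentences."""
    sentences = [FILLER] * n_filler
    sentences.insert(round(depth * n_filler), needle)
    return " ".join(sentences)


def needle_recall(ask: Callable[[str], str], secret: str,
                  depths: list, n_filler: int = 2000) -> dict:
    """Return {depth: bool}: did the model recover the secret at each depth?"""
    needle = f"The secret code is {secret}."
    results = {}
    for depth in depths:
        context = build_haystack(needle, n_filler, depth)
        prompt = f"{context}\n\nWhat is the secret code? Reply with the code only."
        results[depth] = secret in ask(prompt)
    return results
```

A model with weak long-context fidelity typically passes at depths near 0.0 and 1.0 and fails in the middle, which is why sweeping several depths matters more than a single probe.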

Amazon is positioning Nova to compete on these practical axes rather than just raw academic scores.

My Recommendation

If you are currently building on top of OpenAI’s APIs and you are happy, don’t rewrite your codebase today. The switching costs are real, and OpenAI will likely respond with another optimization soon.

However, if you are deep in the AWS ecosystem and have been hesitant to use Bedrock because the models felt “second tier,” that excuse is gone. I suggest spinning up a POC (Proof of Concept) using Nova for a non-critical workload. Measure the latency. Measure the bill.
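For the latency half of that POC, a small harness that records time-to-first-token and total latency per prompt is enough. This sketch assumes `stream` is an adapter you write yourself that takes a prompt and yields text chunks as they arrive (for Bedrock, a thin wrapper around `converse_stream`; for OpenAI, around a streaming chat completion); `measure` is a hypothetical helper name.

```python
import statistics
import time
from typing import Callable, Iterable


def measure(stream: Callable[[str], Iterable[str]], prompts: list) -> dict:
    """Median time-to-first-token and median/max total latency, in seconds."""
    ttfts, totals = [], []
    for prompt in prompts:
        start = time.perf_counter()
        first = None
        for _chunk in stream(prompt):
            if first is None:
                first = time.perf_counter() - start  # first chunk has arrived
        total = time.perf_counter() - start
        totals.append(total)
        # An empty stream has no first token; fall back to total latency.
        ttfts.append(first if first is not None else total)
    return {
        "ttft_median": statistics.median(ttfts),
        "total_median": statistics.median(totals),
        "total_max": max(totals),
    }
```

Run the same prompt set against both providers and pair these numbers with the token counts each API reports; together they give you the latency and the bill.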

I’m personally moving my background processing jobs—things like log analysis and data extraction—over to Nova. The benchmarks say it’s capable, and my wallet says it’s necessary. The monopoly on high-end reasoning is officially over, and that is the best news developers could ask for.