The Great Divide: Navigating the Speed vs. Depth Trade-Off in Next-Generation AI Code Models

The Evolving Landscape of AI-Powered Code Generation

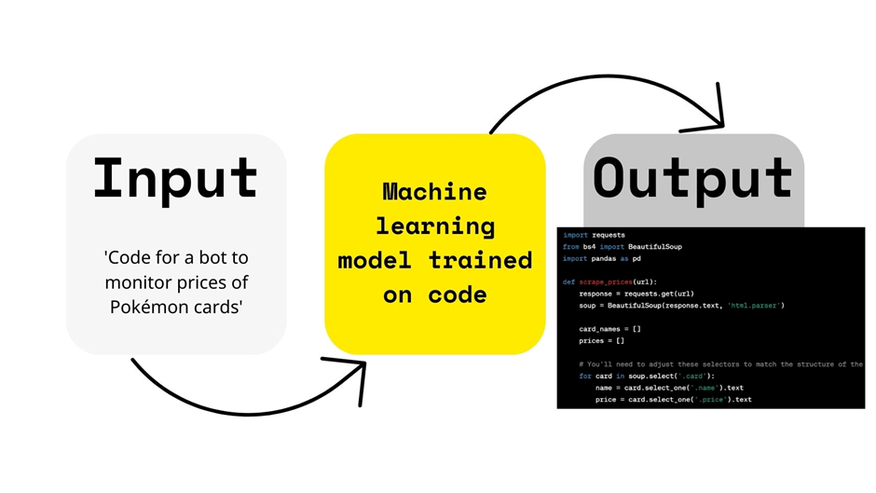

The world of software development is in the midst of a profound transformation, driven by the rapid evolution of large language models (LLMs) specialized in code generation. Early iterations, while impressive, often served as sophisticated autocompletes. Today, we are witnessing a divergence in model philosophy that creates a new dichotomy for developers, team leads, and CTOs to navigate. This is no longer about incremental improvements; it is about a fundamental split in design and purpose. Recent model releases point to a clear trend: the emergence of two distinct classes of AI coding assistants. On one side are models optimized for speed and responsiveness, capable of generating functional code in seconds. On the other is a new generation of more deliberate, architecturally aware models that prioritize depth, accuracy, and robustness over instantaneous output. This article examines that trade-off, exploring the technical underpinnings, practical applications, and strategic implications of choosing between speed and substance in the new era of AI-assisted software engineering.

Section 1: The New Dichotomy: Velocity vs. Veracity

Much of the current discussion around AI code models centers on a critical performance axis: latency versus quality. This is not a simple linear scale of improvement; it is a strategic choice in model design with significant downstream effects on development workflows. Understanding the “why” behind this split is crucial for using these tools effectively.

The “Velocity-First” Model Architecture

Models designed for speed are engineered for low-latency inference, typically through a combination of architectural and optimization techniques such as:

- Model Quantization and Distillation: Quantization reduces the numerical precision of the model’s weights (e.g., from 32-bit floats to 8-bit integers), and knowledge distillation trains a smaller “student” model to mimic a larger “teacher” model. Both significantly reduce computational overhead.

- Smaller Parameter Counts: While still large, these models may have billions rather than trillions of parameters, allowing them to be loaded and run on less powerful hardware.

- Optimized Inference Engines: They are often served by specialized inference engines tuned for specific hardware, with the explicit goals of minimizing latency and maximizing throughput.
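To make the two compression techniques in the first bullet concrete, here is a minimal pure-Python sketch. It assumes symmetric, per-tensor int8 quantization (a single scale factor for the whole weight list) and the temperature-softened KL-divergence loss commonly used for knowledge distillation. Production systems use tensor libraries, per-channel scales, and many further refinements, so treat this as an illustration of the ideas rather than how any particular model is built.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to int8 codes in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one scale factor for the whole tensor
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from int8 codes."""
    return [c * scale for c in codes]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

Each quantized weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight; the distillation loss is zero only when the student reproduces the teacher’s softened output distribution.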

The result is an AI assistant that feels incredibly responsive, making it ideal for interactive tasks like autocompleting lines of code, generating simple functions, or scaffolding basic project structures. However, this speed can come at the cost of nuance. The generated code might be syntactically correct and functional for simple cases but may lack comprehensive error handling, optimal performance, or adherence to complex architectural patterns.

The “Depth-First” Model Architecture

In contrast, the more thorough, “depth-first” models represent the leading edge of current research. These models prioritize correctness, context awareness, and code quality. Their design philosophy is different:

- Larger Context Windows and Parameter Counts: Longer context windows let these models take in much more of a codebase at once (potentially tens of thousands of lines of code) and pick up its existing patterns, dependencies, and style conventions. This capability comes from advances in model scaling.

- Advanced Training Techniques: They are often trained not just on code but also on associated documentation, bug reports, pull requests, and unit tests. This helps them generate code that is not only functional but also well documented and testable.

- Complex Reasoning Capabilities: These models exhibit more sophisticated reasoning, allowing them to tackle ambiguous requests, refactor complex legacy code, and design multi-component systems. They are better equipped to handle the intricate logic of financial applications or the safety-critical requirements of healthcare software.
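Even a long context window has to be filled deliberately. The sketch below is a hypothetical illustration, not any vendor’s method: it greedily packs the files most relevant to a request into a fixed token budget, using shared identifiers as a crude relevance score and an assumed rule of thumb of roughly four characters per token. The helper names `estimate_tokens`, `identifiers`, and `pack_context` are invented for this example.

```python
import re


def estimate_tokens(text):
    # Assumption: roughly 4 characters per token; real tokenizers vary.
    return max(1, len(text) // 4)


def identifiers(text):
    """Collect identifier-like words as a cheap proxy for what a file is about."""
    return set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", text))


def pack_context(files, query, budget_tokens):
    """Greedily select files that share the most identifiers with the query,
    skipping any file that would push the total past the token budget."""
    wanted = identifiers(query)
    ranked = sorted(
        files.items(),
        key=lambda item: len(wanted & identifiers(item[1])),
        reverse=True,  # sort is stable, so ties keep their original order
    )
    chosen, used = [], 0
    for path, text in ranked:
        cost = estimate_tokens(text)
        if used + cost <= budget_tokens:
            chosen.append(path)
            used += cost
    return chosen, used
```

A larger window simply raises `budget_tokens`, letting more of the surrounding code reach the model alongside the file being changed.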

The trade-off is latency. Generating a solution can take significantly longer because the model performs a more exhaustive analysis and generation process. The output, however, is often substantially better, resembling the work of a senior developer: complete with comments, tests, and a clear understanding of the surrounding architecture.

Section 2: A Tale of Two Scenarios: Practical Application and Analysis

Theory is one thing, but the real impact of this divergence is felt in developers’ daily workflows. Let’s explore two common scenarios to illustrate the strengths and weaknesses of each model type.

Scenario 1: Building a Quick MVP for a Startup Weekend

A team needs to rapidly prototype a simple web application that allows users to sign up, create a profile, and post short messages. The goal is to have a working demo in 48 hours.

Using a Velocity-First Model:

The developer prompts: “Create a simple Flask application with user authentication using SQLite and routes for signup, login, and a user dashboard.” Within seconds, the model outputs a complete `app.py` file, a basic `models.py`, and corresponding HTML templates. The code works out of the box.

- Pros: Incredible speed allows the team to focus on front-end and product features. The barrier to getting a functional skeleton is almost zero. This is a game-changer for hackathons and rapid prototyping.

- Cons: The generated code has minimal error handling. Passwords might be stored insecurely (e.g., in plaintext rather than as salted hashes). The database schema is simplistic and not designed for scale. There are no unit tests. These shortcomings become a real risk if the prototype is pushed directly to production, a concern frequently raised in discussions of AI safety.
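The password and schema shortcomings listed above are cheap to fix. Below is a minimal hardening sketch that uses only Python’s standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, `sqlite3`) rather than any particular Flask extension; the Flask routes themselves are omitted. The table layout, function names, and iteration count are assumptions chosen for illustration, so check current guidance such as OWASP’s password storage recommendations before picking parameters.

```python
import hashlib
import hmac
import os
import sqlite3

ITERATIONS = 600_000  # assumption: tune to current guidance and your hardware

SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    id INTEGER PRIMARY KEY,
    username TEXT NOT NULL UNIQUE,
    password_salt BLOB NOT NULL,
    password_hash BLOB NOT NULL
)
"""


def hash_password(password, salt=None):
    """Derive a salted PBKDF2-HMAC-SHA256 hash; a fresh random salt per user."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def create_user(conn, username, password):
    """Insert a user; return False instead of crashing if the name is taken."""
    salt, digest = hash_password(password)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO users (username, password_salt, password_hash) VALUES (?, ?, ?)",
                (username, salt, digest),
            )
    except sqlite3.IntegrityError:
        return False
    return True


def verify_user(conn, username, password):
    """Recompute the hash with the stored salt and compare in constant time."""
    row = conn.execute(
        "SELECT password_salt, password_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    if row is None:
        return False
    salt, expected = row
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

The `UNIQUE` constraint turns a duplicate signup into an `IntegrityError` that the code handles explicitly, the `?` placeholders keep user input out of the SQL text, and `hmac.compare_digest` avoids leaking information through comparison timing.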

Scenario 2: Refactoring a Mission-Critical Payment Processing Module

A fintech company needs to refactor a legacy payment processing module. The module is complex, has poor documentation, and lacks test coverage. The goal is to improve maintainability, add robust error handling, and introduce comprehensive logging without breaking existing functionality.

Using a Depth-First Model:

The developer provides the entire legacy module as context and prompts: “Analyze this Java payment processing module. Identify code smells, potential race conditions, and areas with inadequate error handling. Refactor the code to implement the Strategy pattern for different payment gateways, add comprehensive Javadoc comments, introduce SLF4J for logging, and generate a suite of JUnit tests covering the primary success and failure paths.”

The model takes a minute or two to process. The output is not just a block of code; it’s a multi-file commit suggestion.

- The Refactored Code: Clean, well-structured code implementing the requested design pattern.

- Unit Tests: A complete test file with mocks for external services, covering edge cases the developer might have missed.

- Documentation: Clear Javadoc comments explaining the purpose of each class and method.

- Explanation: A markdown summary of the changes made and the reasoning behind them.
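The scenario targets Java, but the shape of the requested refactoring is language-independent. As a hedged sketch in Python, the Strategy pattern below hides each gateway behind a common interface (a `typing.Protocol` standing in for a Java interface), with the standard `logging` module playing the role SLF4J plays in Java. `PaymentGateway`, `PaymentProcessor`, `ChargeResult`, and `PaymentError` are hypothetical names, not part of any real payment API.

```python
import logging
from dataclasses import dataclass
from typing import Dict, Protocol

logger = logging.getLogger("payments")


class PaymentError(Exception):
    """Raised when a charge cannot be completed."""


@dataclass(frozen=True)
class ChargeResult:
    transaction_id: str
    amount_cents: int


class PaymentGateway(Protocol):
    """Strategy interface: every gateway exposes the same charge method."""

    def charge(self, account_id: str, amount_cents: int) -> str:
        """Charge the account and return a gateway transaction ID."""
        ...


class PaymentProcessor:
    """Context object: selects a gateway strategy by payment method name."""

    def __init__(self, gateways: Dict[str, PaymentGateway]) -> None:
        self._gateways = dict(gateways)

    def process(self, method: str, account_id: str, amount_cents: int) -> ChargeResult:
        if amount_cents <= 0:
            raise ValueError("amount_cents must be positive")
        gateway = self._gateways.get(method)
        if gateway is None:
            raise PaymentError(f"unsupported payment method: {method}")
        try:
            transaction_id = gateway.charge(account_id, amount_cents)
        except Exception as exc:
            # Log with context, then wrap so callers handle a single exception type.
            logger.error("charge via %s failed: %s", method, exc)
            raise PaymentError(f"charge via {method} failed") from exc
        logger.info("charged %d cents via %s (tx=%s)", amount_cents, method, transaction_id)
        return ChargeResult(transaction_id, amount_cents)
```

Because the processor depends only on the `charge` method, tests can substitute `unittest.mock.Mock` objects for real gateways, mirroring the mocked external services described above, and a new gateway can be added without touching `PaymentProcessor`.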

This kind of deep, contextual work is where advanced models truly shine. They act less like a code generator and more like a senior architect and pair programmer, a role that emerging AI agent tooling increasingly aims to fill.

Section 3: Strategic Implications and The Evolving Developer Role

The existence of these two model archetypes forces a strategic re-evaluation of how development teams integrate AI. It’s no longer a one-size-fits-all solution. The choice of tool has profound implications for productivity, code quality, and even team structure.

Choosing the Right Tool for the Task

A recurring lesson from code-generation benchmarks is that performance is task-dependent. Teams must develop a nuanced approach:

- Use Velocity Models for: Rapid prototyping, scaffolding new projects, writing boilerplate code, generating simple scripts, and brainstorming initial solutions. They are also a natural fit for producing quick code snippets for tutorials and other content.

- Use Depth Models for: Refactoring legacy systems, implementing complex algorithms, debugging intricate issues, security analysis, and working on core, mission-critical business logic. Their value is greatest in regulated industries such as legal tech and finance.
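The split above can be encoded as a simple routing policy, for example in an internal tool that decides which model tier receives a request. This is a hypothetical sketch: the task labels and the `choose_tier` function are invented for illustration, and the policy deliberately falls back to the depth tier for unfamiliar or critical work.

```python
from enum import Enum


class Tier(Enum):
    VELOCITY = "velocity"
    DEPTH = "depth"


# Hypothetical task labels mirroring the two lists above.
VELOCITY_TASKS = {"prototype", "scaffold", "boilerplate", "simple_script", "brainstorm"}
DEPTH_TASKS = {"refactor_legacy", "complex_algorithm", "debugging", "security_review", "core_logic"}


def choose_tier(task_kind: str, touches_critical_path: bool = False) -> Tier:
    """Route a task to a model tier; anything critical or unrecognized goes deep."""
    if touches_critical_path or task_kind in DEPTH_TASKS:
        return Tier.DEPTH
    if task_kind in VELOCITY_TASKS:
        return Tier.VELOCITY
    return Tier.DEPTH  # unknown work: prefer the slower, more careful tier
```

Defaulting to depth trades some latency for safety; a team that values speed more could invert the fallback, which is exactly the kind of judgment call the next paragraph describes.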

This “right tool for the job” philosophy requires developers to be proficient with multiple AI systems and, more importantly, to have the discernment to know when to use each. That discernment is central to deploying these tools well.

The Developer as an AI Orchestrator

This trend solidifies the shift in the developer’s role from a line-by-line code author to a system architect and AI orchestrator. The most valuable skills are no longer just writing code, but:

- Precise Prompt Engineering: The ability to provide detailed, context-rich prompts that guide the AI to the desired outcome.

- Critical Code Review: The skill to quickly evaluate AI-generated code for correctness, performance, and security vulnerabilities. This human oversight is also essential for addressing ethical concerns, including bias and fairness, in AI-generated output.

- Architectural Vision: The capacity to design a high-level system and use AI to fill in the implementation details, ensuring the generated parts fit together coherently.

This evolution means that keeping up with new model capabilities and trends is no longer optional; it is a core professional competency.

Section 4: Recommendations and The Road Ahead

As we look to the future, the gap between speed and depth may narrow, but for now, developers and organizations must adopt a hybrid strategy to maximize the benefits of AI in software development.

Best Practices for a Hybrid AI Workflow

To navigate this landscape, consider the following actionable recommendations:

- Build a Tiered AI Toolchain: Don’t rely on a single model. Equip your team with both a fast, responsive model integrated into the IDE for real-time assistance and a more powerful, in-depth model for complex tasks and pre-commit reviews. Many AI platforms are starting to offer such tiered services.

- Start Fast, Refine Deep: Use a velocity-first model to generate the initial draft or scaffold of a new feature. Then, use a depth-first model to review, refactor, and harden that code, asking it to add tests, improve error handling, and check for security flaws.

- Automate with AI Agents: Use emerging AI agents to build automated workflows. For example, a CI/CD pipeline could trigger a depth-first model to perform a code quality and security review on every pull request, flagging potential issues before human review.

- Invest in Training: Your team’s ability to use these tools effectively is a competitive advantage. Invest in training on advanced prompt engineering and on the ethical considerations of using AI-generated code, a topic of growing importance as AI regulation evolves.
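The “Start Fast, Refine Deep” and CI recommendations can be sketched as a small pipeline. The model clients here are plain callables that take a prompt string and return text; they are placeholders for whatever APIs your team actually uses, and the `ISSUE:` line convention is an assumption you would have to request in the prompt, not a feature of any model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical model client: takes a prompt, returns generated text.
ModelFn = Callable[[str], str]

ISSUE_PREFIX = "ISSUE:"


@dataclass
class ReviewOutcome:
    draft: str
    hardened: str
    issues: List[str] = field(default_factory=list)


def start_fast_refine_deep(task: str, fast_model: ModelFn, deep_model: ModelFn) -> ReviewOutcome:
    """Draft with the fast tier, then ask the deep tier to review and harden the draft."""
    draft = fast_model(f"Write a first implementation of: {task}")
    hardened = deep_model(
        "Review and harden the code below: add tests, improve error handling, "
        f"and list each security or correctness concern on its own line starting with '{ISSUE_PREFIX}'.\n\n"
        + draft
    )
    # Pull the flagged concerns out of the deep model's response.
    issues = [
        line[len(ISSUE_PREFIX):].strip()
        for line in hardened.splitlines()
        if line.startswith(ISSUE_PREFIX)
    ]
    return ReviewOutcome(draft=draft, hardened=hardened, issues=issues)


def ci_exit_code(outcome: ReviewOutcome) -> int:
    """Nonzero when the deep review flagged anything, so CI can surface it before human review."""
    return 1 if outcome.issues else 0
```

In a CI job, a nonzero exit code would mark the pull request for closer human attention; whether that blocks the merge or merely annotates it is a team policy choice.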

Future Outlook: Convergence or Specialization?

The big question is whether these two paths will converge. Will we see a single model that offers a “slider” for speed versus depth? It’s possible. Future architectures may lean on techniques such as Mixture-of-Experts (MoE), which activates only a subset of the model’s parameters for each token, or on adaptive approaches that spend more computation on harder tasks. Advances in multimodal models may also let a model generate robust, tested code directly from a whiteboard diagram or a UI mockup. For now, however, the trend points toward continued specialization. As competition among model providers heats up, companies will likely double down on specific niches: some aiming to be the fastest, others the most reliable. The open-source community will also play a crucial role, likely producing a wide array of specialized models optimized for specific tasks and hardware, including edge devices for on-device code generation.
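For readers unfamiliar with Mixture-of-Experts, the core idea is a router that scores every expert but evaluates only the top k of them. Below is a minimal pure-Python sketch of top-k gating, assuming each expert is simply a function and the router logits have already been computed. Note that plain top-k routing uses a fixed number of experts per token; spending more compute on harder inputs requires adaptive variants beyond this sketch.

```python
import math


def top_k_gate(router_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    peak = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts are never called."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(router_logits, k))
```

With four experts and k=2, each input pays for only two expert evaluations, which is how MoE models grow total parameter count without a proportional increase in per-token compute.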

Conclusion: A New Era of Intentional AI Integration

The divergence of AI code generation models into distinct “velocity” and “depth” archetypes marks a new stage of maturity for the technology. It signals a move away from a one-size-fits-all approach toward a more sophisticated, specialized ecosystem of tools. The key takeaway for developers and technology leaders is that the most effective strategy is not to pick a single “winner,” but to build a versatile toolkit and cultivate the judgment to know which tool to apply to which problem. The future of software development won’t be about replacing human developers, but about empowering them with a new class of intelligent, specialized collaborators. By embracing this hybrid approach, teams can harness the immediate productivity gains of high-velocity models while relying on the analytical power of depth-focused models to build the robust, secure, and scalable systems of tomorrow. The steady stream of new model releases promises even more powerful and nuanced tools, making this an exciting time to be building software.