GPT on the Edge: The Future of AI is Local, Private, and Powerful

The Next Frontier: Unpacking the Latest GPT Edge News and Why It Matters

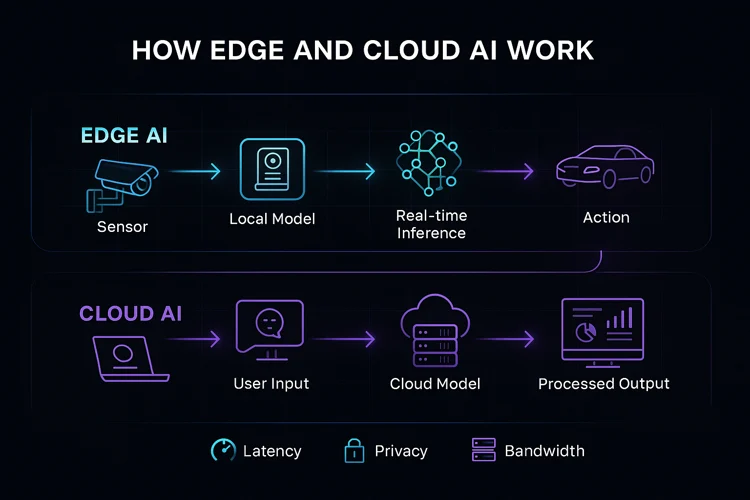

For years, the narrative surrounding generative AI has been one of massive scale. We’ve been captivated by colossal models with hundreds of billions of parameters, housed in sprawling data centers and accessible only through a constant connection to the cloud. This cloud-centric approach, while powerful, introduces inherent limitations in latency, privacy, and cost. However, a paradigm shift is underway. The most exciting recent developments in GPT models aren’t just about making them bigger; they’re about making them smaller, faster, and more efficient. Welcome to the world of Edge AI, where the immense power of Generative Pre-trained Transformers (GPT) is being brought directly to our personal devices. This movement promises to redefine our interaction with technology by making AI instantaneous, fundamentally private, and accessible even without an internet connection. This article explores the technical breakthroughs, real-world applications, and critical considerations driving the migration of GPT models from the cloud to the edge.

Why GPT on the Edge is the Next Big Wave in AI

The push to run sophisticated AI models like those behind ChatGPT and GPT-4 on local hardware, from smartphones and laptops to IoT devices and vehicles, is not merely an academic exercise. It’s a response to fundamental user and business needs that a cloud-only model cannot fully address. Several key drivers are fueling this transition, each promising to unlock new capabilities and user experiences.

The Quest for Lower Latency and Higher Throughput

Every time you interact with a cloud-based AI, your request travels from your device to a remote server, gets processed, and the response travels back. This round-trip introduces latency, a delay that can be noticeable and detrimental for real-time applications. For tasks requiring immediate feedback, such as interactive assistants, live translation, or dynamic NPC dialogue in gaming, even a half-second delay can shatter the user experience. By processing data directly on the device, edge AI eliminates the network round-trip entirely; what remains is the model’s own compute time, which an optimized model on capable hardware can keep low enough for near-instantaneous responses. This is a critical development for responsive, immersive GPT applications, where performance is paramount.
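
To make the latency argument concrete, the sketch below models end-to-end response time as network round-trip plus prompt processing plus generation time. The function names, parameters, and example numbers are hypothetical illustrations, not measurements. The model shows what local inference does and does not remove: the network term drops to zero, but the device’s own compute time remains.

```python
def response_time_s(network_rtt_s: float, prefill_s: float,
                    output_tokens: int, tokens_per_s: float) -> float:
    """Rough end-to-end time for one generated reply (toy model).

    network_rtt_s: round trip to the server (0.0 when running on-device)
    prefill_s:     time to process the prompt before the first token
    output_tokens: number of tokens generated
    tokens_per_s:  decode throughput of the model on this hardware
    """
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return network_rtt_s + prefill_s + output_tokens / tokens_per_s


def time_to_first_token_s(network_rtt_s: float, prefill_s: float) -> float:
    """Delay before anything appears on screen, which users notice most."""
    return network_rtt_s + prefill_s


# Hypothetical numbers for a short, interactive reply (e.g. one NPC line):
cloud = response_time_s(network_rtt_s=0.3, prefill_s=0.1, output_tokens=10, tokens_per_s=100.0)
local = response_time_s(network_rtt_s=0.0, prefill_s=0.2, output_tokens=10, tokens_per_s=50.0)
print(f"cloud: {cloud:.2f} s, local: {local:.2f} s")
```

Under these assumed numbers the on-device reply finishes first even though its decode rate is half the cloud’s. In this toy model the cloud catches up once replies exceed about 20 tokens, which is why decode speed on the device matters as much as removing the network hop.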

Unlocking Unprecedented Privacy and Data Security

In an era of heightened data sensitivity, sending personal or proprietary information to third-party servers is a significant concern. Ongoing privacy debates and emerging AI regulation highlight the growing demand for solutions that give users control over their data. Edge AI offers a powerful answer: if the model runs locally, the data never has to leave the device. This is a game-changer for sensitive industries. Imagine a doctor using an AI tool to summarize patient notes; with an edge model, that confidential data stays on the clinic’s own hardware. On-device processing is a cornerstone of the “privacy-by-design” philosophy, directly addressing data-safety concerns and making AI viable for applications in legal tech, finance, and healthcare.

Offline Functionality and Cost Efficiency

The cloud model is predicated on two things: constant connectivity and pay-per-use billing. This dependency limits the utility of AI in environments with unreliable internet and can lead to unpredictable operational costs. Cloud GPT APIs are typically billed per token, a cost that scales directly with usage. Edge AI decouples AI functionality from these constraints. A construction manager using an AI-powered tool to analyze blueprints on-site, or a vehicle using a vision model for navigation, can continue to operate seamlessly regardless of internet availability. Furthermore, by reducing reliance on cloud servers, organizations can cut API and data-transfer costs, making widespread GPT deployment more economically feasible.

The Technical Gauntlet: Shrinking Giants for Local Devices

The primary challenge in bringing models on the scale of GPT-4 to the edge is their sheer size. Such models can occupy tens or even hundreds of gigabytes of storage and require immense computational power, far exceeding the capabilities of a typical smartphone or laptop. Much of today’s most compelling GPT research focuses on solving this problem through a suite of optimization techniques that shrink these AI giants without catastrophically compromising their performance.
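
The size problem is largely arithmetic: weight storage equals parameter count times bytes per weight. The sketch below assumes a hypothetical 7-billion-parameter model and counts weights only (activations, the KV cache, and runtime overhead add more), so treat the results as lower bounds.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Excludes activations, the KV cache, and runtime overhead, so real
    memory use during inference is higher.
    """
    return num_params * bits_per_weight / 8 / 1e9


# A hypothetical 7-billion-parameter model at different precisions.
# Prints 28.0, 14.0, 7.0 and 3.5 GB for 32, 16, 8 and 4 bits respectively.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

The same formula explains why larger models stay out of reach for phones even after aggressive compression: a 100-billion-parameter model still needs about 50 GB for its weights at 4 bits.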

Model Compression and Optimization Techniques

The core of edge AI deployment lies in making models smaller and faster. Current efficiency work is dominated by three key techniques:

- Quantization: This process reduces the numerical precision of the model’s weights. For instance, converting weights from 32-bit floating-point numbers (FP32) to 8-bit integers (INT8) can reduce weight storage by up to 75% (from 4 bytes to 1 byte per weight) and significantly speed up calculations on hardware with fast integer arithmetic. Recent quantization methods are designed to minimize the accuracy loss typically associated with this process.

- Pruning: Large language models often contain redundant parameters that contribute little to their overall performance. Pruning is a technique that identifies and removes these unnecessary connections or weights, creating a “sparser,” more lightweight model.

- Distillation: Knowledge distillation uses a large, powerful “teacher” model (like GPT-4) to train a much smaller “student” model. The student learns to reproduce the teacher’s outputs (and, when they are accessible, its output probabilities), inheriting much of its capability in a far more compact form. This is a key strategy for creating highly specialized, efficient models for edge deployment.
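
The three techniques above can be illustrated with a minimal, pure-Python sketch on a toy weight vector. It assumes the simplest variant of each: symmetric per-tensor INT8 quantization, unstructured magnitude pruning, and a temperature-softened KL-divergence loss for distillation. The function names are illustrative rather than any library’s API; production toolchains use per-channel scales, calibration data, and tensor libraries.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: each w is stored as q = round(w / scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest |w| to 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights; the rounding error is the accuracy cost."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the `sparsity` fraction of smallest-|w| weights."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    Scaling by T**2 is a common convention that keeps gradient magnitudes
    comparable across temperatures. This is only the soft-target term; it is
    usually combined with ordinary cross-entropy on the true labels.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]
q, scale = quantize_int8(weights)
print(q, scale)
print(magnitude_prune(weights, sparsity=0.5))
print(distillation_loss([1.0, 2.0, 0.5], [1.2, 2.5, 0.1]))
```

Two practical caveats follow from the code. Zeroed weights only save memory or time when the storage format or kernels exploit sparsity. And the soft-target loss needs the teacher’s output probabilities; when only generated text is available, a common fallback is to fine-tune the student on text the teacher produces (sequence-level distillation).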

Hardware Acceleration and Inference Engines

Software optimization is only half the battle. Recent hardware developments show a surge in specialized processors, such as Neural Processing Units (NPUs) and other AI accelerators, which are now common in recent smartphones and increasingly in laptops. These chips are designed specifically to perform the mathematical operations central to AI, chiefly large matrix multiplications, at high speed and with low power consumption. To leverage this hardware, developers rely on inference engines such as Apple’s Core ML, Google’s TensorFlow Lite, and ONNX Runtime. These engines act as a bridge, translating the optimized model into instructions that run as efficiently as possible on the target device’s specific hardware.
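
As a concrete example of that bridging role, ONNX Runtime lets an application pass an ordered list of execution providers (hardware backends) when it creates an `InferenceSession`. The sketch below assumes a preference order (Core ML on Apple devices, QNN for Qualcomm NPUs, CPU as fallback) that you would adapt to your own targets; `pick_providers` is a hypothetical helper, and `model.onnx` is a placeholder path.

```python
# Preference order is an assumption for illustration; adjust per target device.
PREFERRED_PROVIDERS = (
    "CoreMLExecutionProvider",  # Apple devices, via Core ML
    "QNNExecutionProvider",     # Qualcomm NPUs
    "CPUExecutionProvider",     # always-available fallback
)


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are actually available, in priority order.

    CPU is appended as a last resort so a session can always be created.
    """
    chosen = [p for p in preferred if p in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime not installed; showing selection for a hypothetical device")
        print(pick_providers(["CoreMLExecutionProvider", "CPUExecutionProvider"]))
    else:
        providers = pick_providers(ort.get_available_providers())
        print(providers)
        # "model.onnx" is a placeholder path for your exported, optimized model:
        # session = ort.InferenceSession("model.onnx", providers=providers)
```

Filtering against `ort.get_available_providers()` avoids requesting a backend that the installed build does not include. Operators a provider cannot handle are assigned to the next provider in the list, which is why CPU goes last.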

From Cloud to Pocket: Real-World GPT Edge Applications

As the technical hurdles are overcome, practical applications of GPT on the edge are beginning to emerge, transforming industries and personal computing. Progress in GPT architecture and scaling is no longer measured only by cloud performance; it is also about enabling a new class of intelligent, on-device experiences.

The Rise of On-Device AI Assistants and Chatbots

The next generation of digital assistants will be faster, more context-aware, and fundamentally more private. Running on-device, they can access your calendar, emails, and contacts to provide personalized assistance without sending that data to the cloud. This trend will lead to assistants that function reliably on a plane or in a remote area, offering a truly seamless experience. Future custom models will likely be personalized directly on your device based on your unique usage patterns.

Revolutionizing Industries with Local AI

The impact of edge AI extends far beyond consumer devices. We are seeing a wave of innovation across multiple sectors:

- Healthcare: AI-powered diagnostic tools on portable medical devices are being explored to provide instant analysis of scans or patient data in clinics with limited connectivity.

- Finance: On-device fraud detection systems can analyze transaction patterns in real time on a user’s phone or at a point-of-sale terminal, blocking threats before sensitive data is even transmitted.

- Content Creation: For creatives, on-device models point to a future where powerful writing aids, image editors, and code assistants run directly within applications, offering instant suggestions without the lag of an API call.

- Gaming: Edge AI will enable non-player characters (NPCs) with truly dynamic, unscripted personalities who react to players’ actions in real time, creating deeply immersive worlds.

The Future: Multimodality and Autonomous Agents

Looking ahead, the most exciting frontier lies in multimodality and autonomous agents. Multimodal and vision-capable GPT models can understand not just text but also images, audio, and other sensor data. Running these models on the edge is critical for creating truly intelligent devices. A pair of smart glasses could analyze what you see and provide real-time information, or a robot could navigate a complex environment by processing its sensor data locally. This is the foundation for the next wave of GPT agents, where AI can take action in the physical world with the low latency and high reliability that edge computing provides.

Navigating the Edge: A Developer’s Guide

For developers and organizations looking to leverage edge AI, the transition requires a strategic approach. It’s not as simple as downloading a model and running it. Success hinges on careful planning, rigorous testing, and a deep understanding of the trade-offs involved.

The Model Selection Dilemma

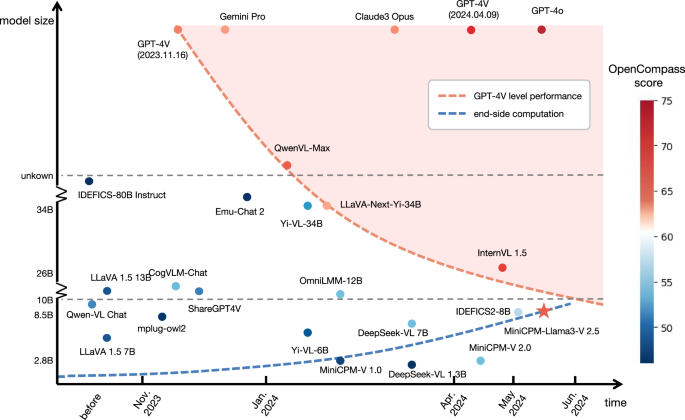

The first step is choosing the right model for the job. While the power of a frontier model such as the anticipated GPT-5 is tempting, it is often overkill for specific edge tasks. The open-source ecosystem offers a growing range of smaller, more specialized models that are pre-optimized for efficiency. Developers should compare models on metrics relevant to their target hardware, such as inference speed, memory usage, and power consumption, rather than on raw accuracy alone.
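
One way to apply this advice is to treat hardware limits as hard constraints and accuracy as the objective: discard any model that exceeds the device’s memory or latency budget, then pick the most accurate survivor. The candidate names and measurements below are hypothetical; in practice they would come from profiling each model on the target device, and power draw can be added as one more constraint in the same way.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float    # task-specific score from your own evaluation set
    memory_mb: float   # peak memory measured on the target device
    latency_ms: float  # median latency measured on the target device


def select_model(candidates, max_memory_mb, max_latency_ms):
    """Keep only models that fit the device budget, then take the most accurate."""
    feasible = [c for c in candidates
                if c.memory_mb <= max_memory_mb and c.latency_ms <= max_latency_ms]
    if not feasible:
        return None  # nothing fits: relax the budget or optimize further
    return max(feasible, key=lambda c: c.accuracy)


# Hypothetical measurements, for illustration only:
models = [
    Candidate("large", accuracy=0.91, memory_mb=14000, latency_ms=900),
    Candidate("medium", accuracy=0.86, memory_mb=3800, latency_ms=220),
    Candidate("small", accuracy=0.79, memory_mb=1200, latency_ms=60),
]
best = select_model(models, max_memory_mb=4000, max_latency_ms=300)
print(best.name if best else "no model fits")  # prints: medium
```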

Balancing Performance and Accuracy

Implementing techniques like quantization and distillation is a delicate balancing act. Overly aggressive optimization can degrade accuracy to the point where the model becomes useless. The best practice is to set an acceptable accuracy threshold for your specific application, then optimize the model to meet that target while maximizing performance. This requires an iterative cycle of optimization, profiling, and testing on the actual target hardware. Keeping up with new optimization tools and techniques is crucial, as they continue to make this process easier.
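
The threshold-first approach can be sketched as a search over quantization levels: try the most aggressive setting first and stop at the first one whose measured accuracy stays within an allowed drop from the full-precision baseline. `evaluate` is a hypothetical callback standing in for the quantize, deploy, and test cycle on real hardware, and the accuracy figures are illustrative placeholders.

```python
def most_compressed_within_budget(evaluate, baseline_accuracy, max_drop,
                                  bit_widths=(4, 8, 16)):
    """Return (bits, accuracy) for the lowest bit width within `max_drop` of baseline.

    `evaluate(bits)` is a hypothetical callback: it should quantize the model to
    `bits`, run it on the target device, and return accuracy on a held-out set.
    """
    threshold = baseline_accuracy - max_drop
    for bits in sorted(bit_widths):  # most aggressive (fewest bits) first
        accuracy = evaluate(bits)
        if accuracy >= threshold:
            return bits, accuracy
    return None, None  # nothing meets the target: keep full precision or try another technique


# Stand-in for real on-device measurements (illustrative placeholders):
measured = {4: 0.81, 8: 0.865, 16: 0.87}
bits, acc = most_compressed_within_budget(measured.get, baseline_accuracy=0.87, max_drop=0.01)
print(bits, acc)
```

With these placeholder numbers, 4-bit falls outside the one-point budget while 8-bit stays inside it, so 8-bit is chosen. Because the loop stops at the first acceptable setting, it also avoids spending device time profiling configurations that cannot win.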

Ethics and Privacy by Design

While edge AI inherently enhances privacy, it is not a silver bullet. Developers must still adhere to strict ethical guidelines. Models can still perpetuate biases present in their training data, regardless of where they are deployed. Developers must also be transparent with users about what data is processed on the device and for what purpose. Building trust is paramount, and designing with privacy and ethics as core principles is non-negotiable for the long-term success of any AI application.

Conclusion: A New Era of Ubiquitous AI

The shift towards GPT on the edge represents a pivotal moment in the evolution of artificial intelligence. It marks the transition from a centralized, connection-dependent technology to one that is distributed, personal, and deeply integrated into the fabric of our daily lives. Driven by the undeniable benefits of low latency, enhanced privacy, and offline capability, this movement is driving innovation across the entire GPT ecosystem, from hardware design to model architecture. While significant technical challenges remain, breakthroughs in model compression, hardware acceleration, and efficient inference engines are rapidly paving the way for a new generation of intelligent applications. The future of AI is not just in a distant cloud; it’s in your pocket, on your desk, and all around you, running silently, securely, and instantly on the edge.