The New Era of AI: A Deep Dive into the Latest GPT Integrations and Model Updates

Introduction: From General AI to Specialized Agents

The artificial intelligence landscape is undergoing a seismic shift, moving rapidly from the era of powerful, general-purpose models to a new paradigm of specialized, integrated, and accessible AI agents. Recent developments signal a clear strategic direction: empowering developers and creators to build bespoke AI experiences tailored to specific tasks and workflows. This evolution is not merely incremental; it represents a fundamental change in how we interact with, build upon, and deploy generative AI. The latest integration news reveals a maturing ecosystem in which the focus is shifting from the novelty of the core technology to its practical, real-world application. This article provides a technical analysis of these updates, exploring the new models, APIs, and platforms that are set to redefine how AI applications are built and deployed across industries.

Section 1: A New Ecosystem for Customization and Integration

The latest wave of updates introduces a suite of tools and services designed to democratize AI development. The central theme is a move away from one-size-fits-all solutions toward an ecosystem of customizable components. For developers, this changes the job from prompt-engineering a single endpoint to orchestrating sophisticated, stateful AI assistants.

The Rise of Custom GPTs and the GPT Store

Perhaps the most significant development for both technical and non-technical users is the introduction of custom GPTs. This feature lets anyone create a tailored version of ChatGPT for a specific purpose without writing code. Creators can give a GPT custom instructions and additional knowledge, choose which capabilities it has, and connect it to external services through actions. For example, a marketing team could create a “Brand Voice Guardian” GPT that is pre-loaded with the company’s style guides and reviews content for compliance.

This initiative is complemented by the upcoming GPT Store, a marketplace where creators can publish and monetize their custom GPTs. The store could foster a vibrant ecosystem and a new economy around specialized AI agents. Early integrations with platforms like Canva and Zapier demonstrate the potential of this model: a custom GPT could, for instance, generate a social media post and then use Zapier to schedule it automatically across multiple platforms.

The Assistants API: Building Stateful AI Agents

For developers, the new Assistants API is a game-changer. Previously, building a conversational agent with memory and tool access required significant engineering effort to manage conversation history, context, and tool integration. The Assistants API abstracts much of this complexity away. It introduces persistent “Threads” that store conversation state on the server, so developers no longer need to pass the entire chat history with each API call. This significantly simplifies the development of applications such as AI-powered tutors, customer support chatbots, and interactive data analysis tools.
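To make concrete what Threads take off a developer’s plate, here is a minimal sketch of the client-side bookkeeping a stateless Chat Completions integration has to do itself: keep the history, re-send all of it on every call, and trim it when it grows. `ConversationBuffer` and its message-count cap are hypothetical illustrations, not part of any SDK; a production version would usually trim by token count rather than by number of messages.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationBuffer:
    """Client-side chat history, as kept for the stateless Chat Completions API (hypothetical helper)."""
    system_prompt: str
    max_messages: int = 20  # hypothetical cap to keep each request small
    history: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})
        # Drop the oldest turns once the cap is exceeded; the system prompt is stored separately.
        if len(self.history) > self.max_messages:
            self.history = self.history[-self.max_messages:]

    def messages(self) -> list:
        """The full payload that must be re-sent with every request."""
        return [{"role": "system", "content": self.system_prompt}] + self.history


buf = ConversationBuffer("You are a patient math tutor.", max_messages=4)
buf.add("user", "What is 7 * 8?")
buf.add("assistant", "56.")
buf.add("user", "And 7 * 9?")
print(len(buf.messages()))  # 4: system prompt + three turns
```

With a Thread, the equivalent state lives on the server, and each new user message is simply appended to it.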

The API is designed to be extensible, with built-in tools such as Code Interpreter, which runs Python code in a sandboxed environment, and Retrieval, which augments the model’s knowledge with uploaded files. Together with function calling, these tools are a significant step toward true AI agents that can perform complex, multi-step tasks on behalf of a user.
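In the (beta) Assistants API, you create an assistant with its tools, add messages to a thread, and start a run. The run executes asynchronously, so your code checks its status until it finishes or reports `requires_action`, which means the model wants you to execute one of your own function tools. The sketch below assumes the launch-era tool type names `code_interpreter` and `retrieval` (later API versions renamed the retrieval tool) and the `gpt-4-1106-preview` model ID. `assistant_config` and `wait_for_run` are hypothetical helpers, and `fetch_status` is injected so the loop can run without network access. With the official Python SDK, the config could be passed as `client.beta.assistants.create(**assistant_config(...))`, and `fetch_status` could wrap `client.beta.threads.runs.retrieve(thread_id=..., run_id=...).status`.

```python
import time
from typing import Callable

# Run statuses after which polling should stop (Assistants API beta, launch-era names).
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired"}


def assistant_config(name: str, instructions: str) -> dict:
    """Keyword arguments for creating an assistant with both built-in tools (assumed model ID)."""
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",
        "tools": [{"type": "code_interpreter"}, {"type": "retrieval"}],
    }


def wait_for_run(fetch_status: Callable[[], str], poll_seconds: float = 1.0,
                 timeout: float = 60.0) -> str:
    """Poll a run until it reaches a terminal status or needs the caller to run a function tool."""
    deadline = time.monotonic() + timeout
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES or status == "requires_action":
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout}s")
        time.sleep(poll_seconds)


# Simulated status sequence standing in for real API calls.
statuses = iter(["queued", "in_progress", "in_progress", "completed"])
print(wait_for_run(lambda: next(statuses), poll_seconds=0))  # completed
```

Returning on `requires_action` rather than treating it as an error keeps the multi-step loop explicit: the caller executes the requested functions, submits their outputs, and polls again.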

Section 2: Under the Hood: A Technical Breakdown of Model Enhancements

Beneath the new user-facing features and APIs lie substantial upgrades to the core GPT models. These enhancements deliver not only increased performance and capability but also greater efficiency and cost-effectiveness, making advanced AI more accessible than ever.

Introducing GPT-4 Turbo: Bigger, Smarter, and Cheaper

The headline model update is the release of GPT-4 Turbo. This new model offers several key improvements over its predecessor, addressing some of the most common developer pain points.

The most visible improvement is the expanded context window. GPT-4 Turbo supports a 128,000-token context window, equivalent to over 300 pages of text. This allows the model to process and reason over entire documents, codebases, or lengthy conversation histories in a single prompt, enabling more complex and context-aware applications. The model’s knowledge cutoff has also been updated to April 2023, making its responses more current.
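Before sending a large document, it helps to check whether it fits. The sketch below relies on common rules of thumb rather than a real tokenizer: about 4 characters per token and about 0.75 words per token for English text, plus an assumed 320 words per page. It also reserves 4,096 tokens for the reply, the maximum output length of the GPT-4 Turbo preview at launch (treat this as an assumption). For exact counts, a tokenizer library such as `tiktoken` would replace `estimate_tokens`.

```python
CONTEXT_WINDOW = 128_000  # GPT-4 Turbo: tokens shared by the prompt and the completion


def estimate_tokens(text: str) -> int:
    """Rough estimate using the ~4 characters per token rule of thumb (English text)."""
    return (len(text) + 3) // 4


def fits_in_context(text: str, reserve_for_output: int = 4_096) -> bool:
    """True if the prompt plus a reserved reply budget stays within the context window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOW


def pages_for(tokens: int, words_per_token: float = 0.75, words_per_page: int = 320) -> float:
    """Convert a token count to approximate pages of prose (assumed ratios)."""
    return tokens * words_per_token / words_per_page


print(pages_for(CONTEXT_WINDOW))  # 300.0
```

Under these assumptions, 128,000 tokens works out to about 96,000 words, or roughly 300 pages, which matches the figure quoted above.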

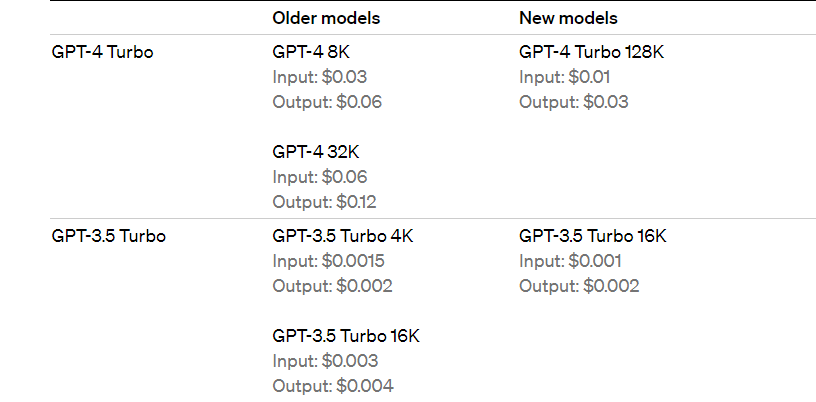

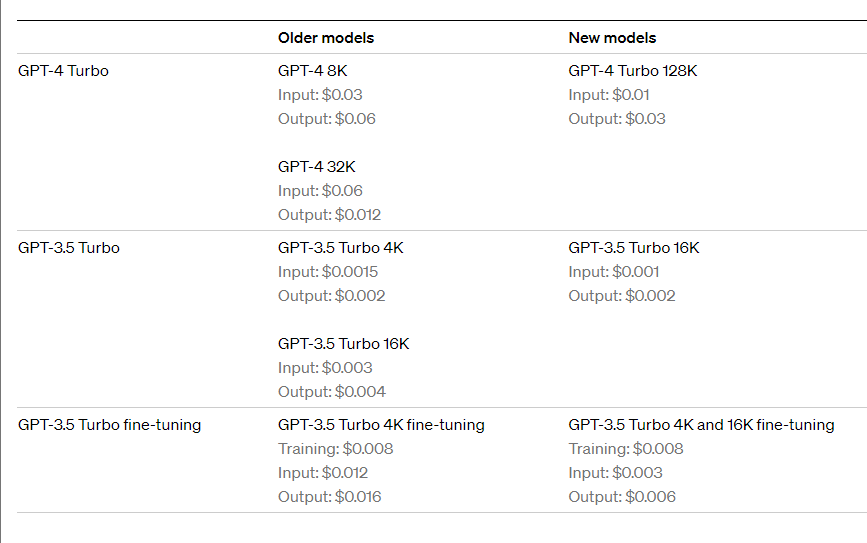

Here’s a quick comparison:

- Context Window: GPT-4 (8k/32k tokens) vs. GPT-4 Turbo (128k tokens)

- Knowledge Cutoff: GPT-4 (September 2021) vs. GPT-4 Turbo (April 2023)

- Pricing: GPT-4 Turbo is significantly cheaper. Input tokens cost one-third as much as with GPT-4 (8K) ($0.01 vs. $0.03 per 1K tokens), and output tokens cost half as much ($0.03 vs. $0.06 per 1K tokens).
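The pricing difference is easiest to see on a single request. The sketch below uses the launch list prices for GPT-4 (8K) and GPT-4 Turbo, in USD per 1K tokens. The dictionary keys are plain labels rather than guaranteed API model IDs, and current prices should be checked before relying on the numbers.

```python
# Launch list prices in USD per 1K tokens, as (input, output). Verify before use.
PRICES_PER_1K = {
    "gpt-4": (0.03, 0.06),        # GPT-4, 8K context
    "gpt-4-turbo": (0.01, 0.03),  # GPT-4 Turbo, 128K context
}


def request_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one request: input and output tokens are billed at different rates."""
    input_price, output_price = PRICES_PER_1K[model]
    return prompt_tokens / 1000 * input_price + completion_tokens / 1000 * output_price


# A request with 6,000 prompt tokens and 1,000 completion tokens.
for model in PRICES_PER_1K:
    print(f"{model}: ${request_cost(model, 6_000, 1_000):.2f}")
# gpt-4: $0.24
# gpt-4-turbo: $0.09
```

Because prompts are usually much longer than replies, the 3x cut on input tokens dominates the savings for most workloads.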

Multimodality and Improved Function Calling

GPT-4 Turbo also comes in a vision-capable version, so the API can now accept images as inputs alongside text. This opens up a wide range of new use cases, from analyzing charts in financial reports to generating code from a whiteboard sketch. DALL-E 3 for image generation and new text-to-speech (TTS) models, both available through their own API endpoints, round out the multimodal offering.
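For image inputs, a Chat Completions message uses a list of content parts instead of a plain string. Each part is either text or an `image_url`, and the URL may be a public link or a base64 `data:` URL. The sketch below builds such a message from raw image bytes. `image_message` is a hypothetical helper, and the message would be sent to a vision-capable model (at launch, `gpt-4-vision-preview`).

```python
import base64


def image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a user message that combines a text question with an inline base64 image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


# Placeholder bytes; in practice, read them from a PNG file on disk.
msg = image_message("What trend does this revenue chart show?", b"\x89PNG placeholder")
print(msg["content"][1]["image_url"]["url"][:22])  # data:image/png;base64,
```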

For developers building agentic workflows, the improvements to function calling are critical. The model is now more accurate at calling functions and can call multiple functions in a single turn. Combined with a new JSON mode that constrains the model to produce syntactically valid JSON (though not necessarily JSON that matches a particular schema), this makes integrating GPT with external tools and APIs considerably more reliable. The updated GPT-3.5 Turbo also benefits from these improvements, offering a 16k context window and better instruction following at a lower price point, which makes it a strong option for less complex tasks.
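With parallel function calling, one assistant message can carry several entries in `tool_calls`. Each entry has an `id`, a function `name`, and `arguments` encoded as a JSON string, and each must be answered with a `role: "tool"` message that echoes its `tool_call_id`. The sketch below dispatches such calls to local Python functions. `get_weather` and `get_time` are hypothetical stubs, and the `tool_calls` list is hand-written in the response shape rather than taken from a live API call.

```python
import json


def get_weather(city: str) -> dict:  # hypothetical local tool (stub)
    return {"city": city, "forecast": "sunny"}


def get_time(timezone: str) -> dict:  # hypothetical local tool (stub)
    return {"timezone": timezone, "time": "12:00"}


TOOLS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(tool_calls: list) -> list:
    """Execute every tool call from one assistant turn and build the matching 'tool' messages."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        try:
            args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
            output = TOOLS[name](**args)
        except (json.JSONDecodeError, KeyError, TypeError) as exc:
            # Report bad JSON, unknown functions, or wrong parameters back to the model.
            output = {"error": f"{type(exc).__name__}: {exc}"}
        results.append({"role": "tool", "tool_call_id": call["id"], "content": json.dumps(output)})
    return results


calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_time", "arguments": '{"timezone": "UTC"}'}},
]
for message in run_tool_calls(calls):
    print(message["tool_call_id"], message["content"])
```

The same defensive parsing applies to JSON mode (`response_format={"type": "json_object"}`). That mode guarantees output that parses, but your code should still check that the expected fields are present.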

Reproducible Outputs and Fine-Tuning Advancements

A subtle but crucial update for developers is the new `seed` parameter. Requests that use the same input, seed, and other parameters will usually return the same output, a feature known as reproducible outputs. Determinism is best-effort rather than guaranteed: each response includes a `system_fingerprint` field, and a change in that value signals backend changes that can alter outputs. Even so, reproducibility is invaluable for debugging, writing unit tests, benchmarking, and maintaining consistent user experiences.

Additionally, fine-tuning is being extended to GPT-4 through an experimental access program, allowing enterprises to train a custom version of the flagship model on their proprietary data. For organizations with highly specific needs, the new Custom Models program goes further, letting them work directly with OpenAI researchers to build bespoke models.
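To use reproducible outputs in tests, compare two responses only when their `system_fingerprint` values agree, because a different fingerprint means the backend changed and identical output is no longer expected. The sketch below assumes responses were captured as plain dictionaries in the Chat Completions response shape (for example via the Python SDK’s `model_dump()`). `build_request` and `compare_runs` are hypothetical helpers, the model ID is an assumption, and setting `temperature` to 0 alongside the seed is a design choice to reduce variation rather than a requirement.

```python
def build_request(messages: list, seed: int = 1234) -> dict:
    """Chat Completions parameters for a reproducibility test (model ID is an assumption)."""
    return {
        "model": "gpt-4-1106-preview",
        "messages": messages,
        "seed": seed,
        "temperature": 0,
    }


def compare_runs(first: dict, second: dict) -> str:
    """Classify two responses captured with identical request parameters."""
    if first["system_fingerprint"] != second["system_fingerprint"]:
        # Determinism is only expected while the backend configuration is unchanged.
        return "backend_changed"
    same = first["choices"][0]["message"]["content"] == second["choices"][0]["message"]["content"]
    return "match" if same else "mismatch"


def fake_response(fingerprint: str, text: str) -> dict:
    """Minimal stand-in for a captured response (only the fields compare_runs reads)."""
    return {"system_fingerprint": fingerprint, "choices": [{"message": {"content": text}}]}


print(compare_runs(fake_response("fp_a", "2, 3, 5"), fake_response("fp_a", "2, 3, 5")))  # match
```

In a test suite, a `backend_changed` result would typically be logged or skipped rather than reported as a failure.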

Section 3: Strategic Implications for Industries and Developers

These updates are not just technical; they have profound strategic implications for how businesses operate and how developers build software. The lower barrier to entry and higher ceiling for complexity will accelerate AI adoption across numerous sectors.

Accelerating Application Development

The Assistants API, combined with cheaper and more powerful models, dramatically reduces the time and expertise required to build sophisticated AI applications. What once took a team of engineers weeks to build (a chatbot with persistent memory, document retrieval, and tool use) can often now be prototyped in a matter of hours. Expect a wave of new applications in fields like education (personalized tutors), healthcare (AI-powered diagnostic assistants), and finance (automated financial report analysis).

For example, a legal tech firm can now use the Assistants API with Retrieval to build an internal tool that allows lawyers to “chat” with thousands of pages of case law documents, asking complex questions and getting cited answers in seconds. This application, which would have been a major R&D project a year ago, is now within reach for smaller development teams.
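The core idea behind such a tool is retrieval-augmented generation: split documents into chunks, find the chunks most relevant to a question, and send only those chunks, labeled with their sources, to the model so that it can cite them. The sketch below swaps in simple term-overlap scoring where a real system would typically use embeddings. It does not describe how the Assistants API’s Retrieval tool works internally, and `chunk`, `top_chunks`, and `build_prompt` are hypothetical helpers.

```python
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(doc_id: str, text: str, size: int = 50) -> list:
    """Split a document into fixed-size word windows, remembering where each one came from."""
    words = text.split()
    return [
        {"source": f"{doc_id}#{i // size}", "text": " ".join(words[i:i + size])}
        for i in range(0, len(words), size)
    ]


def top_chunks(question: str, chunks: list, k: int = 2) -> list:
    """Rank chunks by how many question-term occurrences they share (term-overlap scoring)."""
    query = Counter(tokenize(question))

    def score(c: dict) -> int:
        counts = Counter(tokenize(c["text"]))
        return sum(min(query[t], counts[t]) for t in query)

    ranked = sorted(chunks, key=score, reverse=True)
    return [c for c in ranked[:k] if score(c) > 0]


def build_prompt(question: str, hits: list) -> str:
    """Prepend source-tagged excerpts so the model can cite where each claim comes from."""
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in hits)
    return ("Answer using only the excerpts below and cite their [source] tags.\n"
            f"{context}\n\nQuestion: {question}")


corpus = (chunk("smith_v_jones", "The court held that the contract was void for lack of consideration.")
          + chunk("doe_v_roe", "The appeal concerned a negligence claim over a slip and fall."))
print(build_prompt("Why was the contract void?", top_chunks("Why was the contract void?", corpus, k=1)))
```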

The Legal and Ethical Landscape: Copyright Shield and Safety

As AI becomes more integrated into business workflows, questions of intellectual property and liability have become paramount. The introduction of Copyright Shield, a commitment to step in and defend ChatGPT Enterprise and API customers, and pay the costs incurred, if they face legal claims of copyright infringement, is a landmark development for legal tech and AI regulation. It provides a crucial layer of assurance for enterprises that have hesitated to adopt generative AI because of legal risk, and it signals a maturing market in which platform providers take on more responsibility for the outputs of their systems. It also feeds into ongoing discussions of AI ethics and safety by establishing a clearer framework for accountability in the AI ecosystem.

Section 4: Best Practices and Recommendations for Adoption

Navigating this new landscape requires a strategic approach. Developers and business leaders should consider how to best leverage these new tools to maximize value while mitigating risks.

Choosing the Right Tool for the Job

With a growing array of options, selecting the appropriate tool is crucial for successful deployment and cost optimization.

- Custom GPTs: Ideal for non-developers or for creating quick, task-specific internal tools and public-facing agents without the overhead of API development. Perfect for personal productivity or specific team workflows.

- Assistants API: The go-to choice for developers building complex, stateful applications. If your application requires long-running conversations, file retrieval, or tool use (like code execution), this API will save significant development time.

- Chat Completions API (with GPT-4 Turbo): Still the best option for stateless, single-turn tasks or when you need maximum control over the conversation flow and state management. Because it returns responses directly rather than through asynchronous runs, it typically has lower latency, which makes it suitable for real-time applications.

- Fine-Tuning: Reserve this for when you need the model to learn a very specific style, tone, or format that is difficult to achieve through prompting alone. It’s a powerful but more resource-intensive option compared to retrieval-augmented generation (RAG), which should be the first choice for knowledge-based tasks.
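When fine-tuning is the right choice, the training data for chat models is a JSONL file with one `{"messages": [...]}` object per line. Each object contains an example conversation whose assistant turn shows the desired style or format. The sketch below writes such lines and runs a few basic sanity checks before upload. `to_training_line` and `validate_jsonl` are hypothetical helpers, and the checks are illustrative rather than the provider’s full validation rules.

```python
import json


def to_training_line(system: str, user: str, assistant: str) -> str:
    """One chat-format fine-tuning example, serialized as a single JSONL line."""
    return json.dumps({"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
        {"role": "assistant", "content": assistant},
    ]})


def validate_jsonl(text: str) -> list:
    """Return human-readable problems found in a chat-format JSONL training file."""
    problems = []
    for n, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            example = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {n}: invalid JSON ({exc.msg})")
            continue
        messages = example.get("messages") if isinstance(example, dict) else None
        if not isinstance(messages, list):
            problems.append(f"line {n}: missing 'messages' list")
            continue
        roles = [m.get("role") for m in messages if isinstance(m, dict)]
        if "assistant" not in roles:
            problems.append(f"line {n}: no assistant message to learn from")
    return problems


style = "You write in Acme's house style: short, upbeat, no jargon."
data = "\n".join([
    to_training_line(style, "Describe our notes app.", "Acme Notes keeps your ideas tidy."),
    to_training_line(style, "Announce a maintenance window.", "Quick heads-up: we're tuning things up Sunday!"),
])
print(validate_jsonl(data))  # []
```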

Tips for Implementation

When integrating these new features, keep the following in mind:

- Start with RAG: Before jumping to fine-tuning, use the Retrieval feature in the Assistants API to provide your model with specific knowledge. It’s often more effective, cheaper, and easier to update.

- Leverage Reproducibility: Use the `seed` parameter in your development and staging environments to create reliable tests for your application’s logic.

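- Monitor Costs: While prices have dropped, the 128k context window of GPT-4 Turbo can still lead to high costs if not managed carefully. A single prompt that fills the window costs about $1.28 in input tokens at $0.01 per 1K tokens. Implement robust logging and monitoring to track token usage, especially for long-running assistant threads, whose accumulated history counts toward the input tokens of each new run.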

- Prioritize User Experience: The power of these tools can be overwhelming. Focus on building intuitive interfaces that guide the user and clearly communicate the AI’s capabilities and limitations.
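A simple way to act on the cost-monitoring tip is to accumulate the `usage` block (`prompt_tokens`, `completion_tokens`) that each Chat Completions response returns, keyed by conversation, and flag conversations that exceed a budget. The sketch below defaults to GPT-4 Turbo’s launch prices and an arbitrary $1.00 budget, both of which are assumptions you would adjust. `UsageTracker` is a hypothetical helper. The example shows why long conversations get expensive: when the whole history is re-sent each turn, prompt tokens grow with every call.

```python
from collections import defaultdict


class UsageTracker:
    """Accumulates token usage per conversation and flags those over a cost budget."""

    def __init__(self, input_price_per_1k: float = 0.01, output_price_per_1k: float = 0.03,
                 budget_usd: float = 1.00):
        self.input_price = input_price_per_1k   # assumed GPT-4 Turbo launch price
        self.output_price = output_price_per_1k  # assumed GPT-4 Turbo launch price
        self.budget = budget_usd
        self.totals = defaultdict(lambda: [0, 0])  # conversation id -> [prompt, completion]

    def record(self, conversation_id: str, usage: dict) -> float:
        """Add one response's usage block; return the conversation's running cost in USD."""
        totals = self.totals[conversation_id]
        totals[0] += usage["prompt_tokens"]
        totals[1] += usage["completion_tokens"]
        return self.cost(conversation_id)

    def cost(self, conversation_id: str) -> float:
        prompt, completion = self.totals.get(conversation_id, (0, 0))
        return prompt / 1000 * self.input_price + completion / 1000 * self.output_price

    def over_budget(self) -> list:
        return [cid for cid in self.totals if self.cost(cid) > self.budget]


tracker = UsageTracker()
# Three turns of one conversation: the re-sent history makes each prompt larger.
for prompt_tokens in (20_000, 40_000, 60_000):
    running = tracker.record("thread_a", {"prompt_tokens": prompt_tokens, "completion_tokens": 500})
print(f"${running:.3f}", tracker.over_budget())  # $1.245 ['thread_a']
```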

Conclusion: The Dawn of the AI Application Layer

The latest wave of updates marks a pivotal moment in the evolution of generative AI. The focus has decisively shifted from the raw power of large language models to the creation of a robust, accessible, and versatile application layer. The introduction of the Assistants API, Custom GPTs, and a more powerful, cost-effective GPT-4 Turbo model democratizes the ability to build sophisticated AI agents. For developers, this means less time spent on foundational plumbing and more time dedicated to creating innovative user experiences. For businesses, it unlocks a vast new territory of potential applications, from hyper-personalized customer service to automated creative workflows. As this ecosystem matures, the defining factor for success will no longer be access to the technology itself, but the creativity and vision with which it is applied. This is not just an update; it’s the beginning of a new chapter in the story of AI integration.