The Engine of Intelligence: A Deep Dive into GPT Hardware News and Trends

Introduction: The Silicon Powering the AI Revolution

The seemingly magical capabilities of large language models (LLMs) like GPT-4 are not born from software alone. Beneath the surface of every eloquent response, creative story, and line of code generated lies a vast and powerful foundation of specialized hardware. The evolution of Generative Pre-trained Transformers is intrinsically linked to, and often limited by, the raw computational power available. As we follow the latest GPT-4 News and speculate on future GPT-5 News, it’s crucial to understand the silicon engines driving this progress. This symbiotic relationship between advanced algorithms and cutting-edge hardware is the central theme of today’s GPT Hardware News.

The hardware story is a tale of two distinct but related challenges: the monumental task of training these models and the global-scale demand for efficient inference. Training requires colossal data centers, packed with thousands of interconnected accelerators, consuming megawatts of power. Inference, on the other hand, must be fast, scalable, and energy-efficient to serve billions of queries in real time. This article delves into the critical hardware landscape, exploring the technologies, key players, and optimization strategies that are shaping the future of AI, from massive cloud infrastructure to emerging GPT Applications in IoT News.

The Foundation: Why Hardware Matters for GPTs

At its core, a GPT model is a massive collection of numerical parameters (weights) that are adjusted during training to learn patterns from data. The dominant operations are matrix multiplications, which map naturally onto the massively parallel architecture of modern accelerators. The choice of hardware directly determines the speed, cost, and feasibility of developing and deploying these models, making it a cornerstone topic across GPT Ecosystem News.
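To make the "matrix multiplication" point concrete, here is a minimal pure-Python sketch. It shows that every output cell of a matrix product is an independent dot product (which is why the work parallelizes so well) and that an (m × k) by (k × n) product costs m·n·k multiply-adds, or 2·m·n·k floating-point operations. The layer sizes in the example are hypothetical; real frameworks call vendor-tuned kernels (such as cuBLAS) rather than Python loops.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply; each output cell is an independent dot product."""
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError(f"inner dimensions differ: {k} vs {k2}")
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):          # every (i, j) pair could run on a separate core
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]   # one multiply-add
            out[i][j] = acc
    return out

def matmul_flops(m: int, k: int, n: int) -> int:
    """(m x k) @ (k x n) needs m*n*k multiply-adds, i.e. 2*m*n*k FLOPs."""
    return 2 * m * k * n

# Hypothetical sizes: 2048 tokens passing through a 4096 -> 16384 projection.
print(matmul_flops(2048, 4096, 16384))   # 274877906944 (about 2.7e11 FLOPs)
```

A single projection like this already costs hundreds of billions of operations, and a transformer performs many of them per layer, per step.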

From Training to Inference: Two Sides of the Hardware Coin

Understanding the hardware landscape begins with differentiating between the two primary phases of a model’s lifecycle. The requirements for each are vastly different, driving innovation in separate but complementary directions.

Training: The Realm of Brute-Force Computation

Training a foundational model is an exercise in scale. It involves processing trillions of tokens of training data over weeks or months, requiring immense computational power. Key hardware requirements include:

- Massive Parallelism: Thousands of accelerator chips working in concert. This is where GPT Scaling News becomes critical, as architectural choices determine how effectively a model can be distributed across nodes.

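- High-Bandwidth Memory (HBM): During training, accelerators must hold not only a model's parameters, which can number in the hundreds of billions, but also gradients, optimizer states, and activations. HBM provides the capacity and bandwidth needed to keep the processing cores fed with data, preventing bottlenecks.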

- High-Speed Interconnects: Technologies like NVIDIA’s NVLink and NVSwitch or InfiniBand are essential for rapid communication between chips within a server and across the data center. They underpin distributed training, one of the techniques most often discussed in GPT Training Techniques News.
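The scale of these requirements can be estimated with two widely used rules of thumb: training compute of roughly 6 FLOPs per parameter per token, and roughly 16 bytes of model state per parameter for mixed-precision training with the Adam optimizer (the accounting popularized by the ZeRO work). The sketch below assumes both approximations; it ignores activations, communication buffers, and memory fragmentation, and the model and dataset sizes are hypothetical.

```python
import math

def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6.0 * n_params * n_tokens

def training_state_bytes(n_params: float) -> float:
    """Approximate model-state memory under mixed-precision Adam:
    2 B FP16 weights + 2 B FP16 grads + 4 B FP32 master weights
    + 4 B + 4 B FP32 Adam moments = 16 B per parameter.
    Activations and buffers are NOT included."""
    return 16.0 * n_params

def min_accelerators(total_bytes: float, bytes_per_device: float) -> int:
    """Lower bound on devices, assuming states are sharded perfectly evenly."""
    return math.ceil(total_bytes / bytes_per_device)

n_params, n_tokens = 70e9, 2e12          # hypothetical model and dataset sizes
print(f"{training_flops(n_params, n_tokens):.2e} FLOPs")                # 8.40e+23 FLOPs
print(f"{training_state_bytes(n_params) / 1e9:.0f} GB of model states")  # 1120 GB of model states
print(min_accelerators(training_state_bytes(n_params), 80e9), "x 80 GB devices, at minimum")
```

Even this lower bound spreads a single model's state across many accelerators, which is why interconnect speed matters as much as raw compute.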

Inference: The Pursuit of Latency and Efficiency

Once trained, a model is deployed to perform tasks, a process called inference. Here, the priorities shift. A user chatting with a service like ChatGPT, a regular subject of ChatGPT News, expects a near-instant response. Key hardware requirements include:

- Low Latency: The time taken to generate a response must be minimal for real-time applications like those featured in GPT Chatbots News.

- High Throughput: The system must handle thousands or millions of concurrent user requests efficiently. This is a major focus of GPT Latency & Throughput News.

- Power Efficiency: At scale, the energy cost per query is a significant operational expense. Efficient hardware reduces the total cost of ownership.
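The tension between latency and throughput can be sketched with a simple bound. When generating text at small batch sizes, each new token typically requires streaming all of the model's weights from memory, so memory bandwidth, not arithmetic, sets the minimum time per token. Batching lets many requests share each weight read, raising aggregate throughput while each user still waits at least one step per token. This is a simplified model under stated assumptions: it ignores KV-cache traffic, compute limits, and kernel overheads, and the 7B-parameter FP16 model and 2 TB/s bandwidth are hypothetical figures.

```python
def decode_step_seconds(n_params: float, bytes_per_param: float,
                        mem_bandwidth_bytes_per_s: float) -> float:
    """Lower bound on one decoding step: every weight is read from memory once.
    Ignores KV-cache reads, compute time and kernel launch overheads."""
    return n_params * bytes_per_param / mem_bandwidth_bytes_per_s

def tokens_per_second(step_s: float, batch_size: int) -> float:
    """Aggregate throughput if the whole batch shares each weight read.
    Only valid while the step remains memory-bound."""
    return batch_size / step_s

step = decode_step_seconds(7e9, 2, 2e12)   # hypothetical 7B FP16 model, 2 TB/s
print(f"{step * 1e3:.1f} ms/token at batch 1")                    # 7.0 ms/token at batch 1
print(f"{tokens_per_second(step, 1):.0f} tok/s at batch 1")       # 143 tok/s at batch 1
print(f"{tokens_per_second(step, 32):.0f} tok/s at batch 32")     # 4571 tok/s at batch 32
```

In this idealized picture, batching multiplies throughput without changing the per-token floor; in practice, larger batches eventually become compute-bound and per-request latency rises.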

The Role of Software Frameworks in Hardware Abstraction

Software frameworks like PyTorch and TensorFlow, along with higher-level platforms, provide a crucial layer of abstraction. They allow developers to write code without needing to manage the intricate details of the underlying hardware. This hardware-agnostic approach accelerates research and development, as seen in the broader GPT Platforms News. However, this abstraction is not perfect. Peak performance is only achieved when the software stack, including NVIDIA’s CUDA platform and the libraries built on it such as cuBLAS and cuDNN, is finely tuned for the specific hardware it’s running on. This synergy between hardware and software is a recurring theme in all GPT Tools News and GPT Integrations News.
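Real frameworks implement this abstraction with elaborate machinery (operator dispatch keyed on device type, compiled vendor kernels), but the core idea can be shown in a few lines: user code calls one operation, and a registry routes the call to a device-specific implementation. Everything here, including the registry, the device names, and the `dot` function, is hypothetical and is not the API of PyTorch, TensorFlow, or any other library.

```python
from typing import Callable, Dict, List

Vector = List[float]
Kernel = Callable[[Vector, Vector], float]

# Hypothetical registry mapping a device name to its backend-specific kernel.
_KERNELS: Dict[str, Kernel] = {}

def register(device: str) -> Callable[[Kernel], Kernel]:
    """Decorator that records a kernel implementation for one device."""
    def wrap(fn: Kernel) -> Kernel:
        _KERNELS[device] = fn
        return fn
    return wrap

@register("cpu")
def _dot_cpu(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

@register("fake_accel")
def _dot_fake_accel(a: Vector, b: Vector) -> float:
    # Stand-in for a vendor-tuned kernel: same math, different implementation.
    acc = 0.0
    for x, y in zip(a, b):
        acc += x * y
    return acc

def dot(a: Vector, b: Vector, device: str = "cpu") -> float:
    """User-facing op: the call site is identical whatever hardware runs it."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    if device not in _KERNELS:
        raise ValueError(f"no kernel registered for device {device!r}")
    return _KERNELS[device](a, b)

print(dot([1.0, 2.0, 3.0], [4.0, 5.0, 6.0], device="fake_accel"))   # 32.0
```

The caller never changes, but how fast `dot` runs depends entirely on how well each registered kernel is tuned for its hardware, which is the limit of abstraction the paragraph describes.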

The Titans of Training: A Look at Data Center Hardware

The insatiable demand for more powerful models has ignited a hardware arms race. At the center of this are the specialized chips designed to accelerate the mathematical operations that form the bedrock of the transformer architecture, a topic frequently covered in GPT Architecture News.

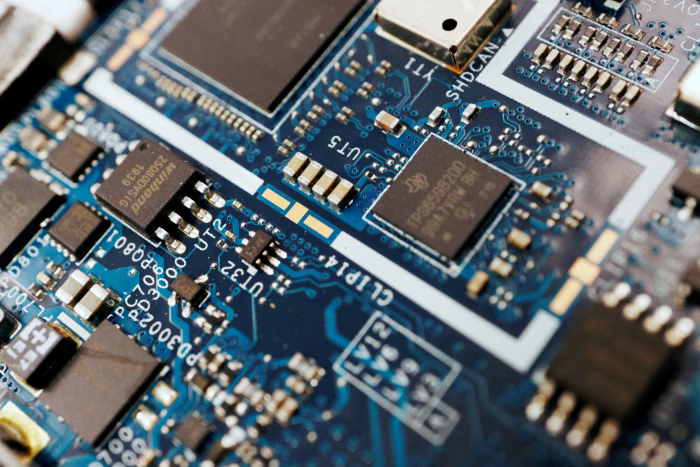

NVIDIA’s Dominance and the GPU Revolution

It’s impossible to discuss AI hardware without highlighting NVIDIA’s commanding position. Their GPUs, particularly the data center-focused A100 and H100 Tensor Core GPUs, have become the de facto standard for training large-scale models. Their success is built on several key features:

- Tensor Cores: These are specialized processing units within the GPU designed to dramatically speed up the matrix operations used in deep learning.

- CUDA Ecosystem: NVIDIA’s mature software platform provides a rich set of libraries and tools that make it easier for developers to leverage the full power of the hardware.

- High-Capacity HBM: The H100 SXM, for example, comes with 80 GB of HBM3 stacked alongside the GPU die in the same package, allowing larger models or model segments to be held close to the compute units and reducing data-movement delays.
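Tensor Cores commonly take low-precision inputs (for example FP16) and accumulate results at higher precision (for example FP32). The sketch below emulates that numeric behavior on a CPU using the half-precision `"e"` format of Python's `struct` module; it is an illustration of the arithmetic, not of how the hardware works internally, and the input vectors are hypothetical. The contrast function shows why a wide accumulator matters: if the running sum is also kept in FP16, it stops growing once the gap between representable values exceeds twice the addend.

```python
import struct
from typing import List

def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def mixed_precision_dot(a: List[float], b: List[float]) -> float:
    """FP16 inputs with a wide accumulator, loosely mirroring an
    FP16-in / FP32-accumulate mode. Python floats are 64-bit, so the
    accumulator here is even wider than FP32."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return acc

def fp16_accumulate_dot(a: List[float], b: List[float]) -> float:
    """For contrast: the running sum is rounded to FP16 after every step."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))
    return acc

a = [0.1] * 4096   # hypothetical inputs; the exact dot product is 409.6
b = [1.0] * 4096
print(mixed_precision_dot(a, b))   # 409.5 (error comes only from rounding 0.1 to FP16)
print(fp16_accumulate_dot(a, b))   # far lower: the FP16 running sum stalls
```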

Large clusters of these GPUs are widely reported to have made the training of models like GPT-4 possible, and credible OpenAI GPT News about future models typically involves discussions of securing tens of thousands of these powerful chips.

The Rise of Competitors and Custom Silicon

While NVIDIA leads, the market is far from static. The high demand and cost of top-tier GPUs have created a significant opportunity for competitors and new approaches, a key trend in GPT Competitors News.

- Google’s TPUs: Tensor Processing Units are Google’s custom-designed ASICs (Application-Specific Integrated Circuits) built specifically for its machine learning workloads, including models like LaMDA and PaLM. They are programmed through the XLA compiler, most commonly from TensorFlow or JAX.

- AMD’s Instinct Series: AMD is making significant inroads with its MI-series data center GPUs, offering competitive performance and an open-source software stack (ROCm) as an alternative to CUDA.

- Cloud-Specific Silicon: Major cloud providers are developing their own chips to reduce reliance on third parties and optimize for their specific infrastructure. Examples include AWS’s Trainium (for training) and Inferentia (for inference) chips.

- AI Startups: A new wave of companies like Cerebras, SambaNova, and Groq are building novel architectures, from wafer-scale engines to streaming processors, aiming to solve AI’s computational challenges in unique ways.

Beyond Brute Force: The Push for GPT Efficiency

As models grow larger, the costs of training and inference are becoming prohibitive. This has spurred a massive push towards efficiency, ensuring that the power of GPTs can be deployed more widely and sustainably. This focus on optimization is a central theme in GPT Efficiency News and is crucial for enabling everything from GPT Custom Models News to deployment on local devices.

Software-Based Optimization Techniques

Before the hardware even runs a single calculation, significant performance gains can be achieved through software. These techniques are vital for making models practical for real-world GPT Applications News.

- Quantization: As covered in GPT Quantization News, this involves reducing the numerical precision of a model’s weights (e.g., from 32-bit floating-point to 8-bit integers). This shrinks the model’s memory footprint (by 4x in that example) and can dramatically speed up calculations on hardware that supports lower-precision arithmetic, often with only a small loss in accuracy.

- Distillation: The latest GPT Distillation News highlights a process where a large, powerful “teacher” model is used to train a much smaller “student” model. The student learns to mimic the teacher’s output, resulting in a compact model that retains much of the original’s capability.

- Pruning and Compression: These techniques, often discussed in GPT Compression News, involve identifying and removing redundant or unimportant parameters from a trained model, further reducing its size and computational requirements.
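Two of the techniques above, quantization and pruning, can be illustrated on a plain list of weights. This is a minimal sketch assuming symmetric, per-tensor INT8 quantization and unstructured magnitude pruning; production toolkits typically add per-channel scales, calibration data, and hardware-friendly sparsity patterns, none of which are modeled here. The weight values are hypothetical.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ≈ scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [scale * v for v in q]

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of
    weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

w = [0.02, -0.5, 1.27, -1.27, 0.3]   # hypothetical FP32 weights (4 bytes each)
q, s = quantize_int8(w)              # INT8 codes take 1 byte each, plus one scale
print(q)                             # [2, -50, 127, -127, 30]
print(magnitude_prune(w, 0.4))       # [0.0, -0.5, 1.27, -1.27, 0.0]
```

Rounding to the nearest code bounds each reconstruction error by half the scale, which is why accuracy often survives quantization when weights are well distributed.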

These methods are essential for successful GPT Deployment News, particularly for resource-constrained environments, a key topic in GPT Edge News.
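Of the techniques listed above, distillation is the least intuitive to picture. It is usually implemented as a loss term that pushes the student's output distribution toward the teacher's. The sketch below assumes one common formulation, from Hinton et al. (2015): both sets of logits are softened with a temperature T, the KL divergence from teacher to student is measured, and the result is scaled by T². In practice this term is usually combined with an ordinary cross-entropy loss on ground-truth labels. The logits are hypothetical.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

teacher = [4.0, 1.0, 0.5]            # hypothetical logits over a 3-token vocabulary
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # True
```

The temperature exposes the teacher's relative preferences among wrong answers, which carries more information for the student than a single hard label.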

Hardware-Accelerated Inference

To squeeze every last drop of performance from the silicon, specialized software optimizes a trained model for a specific hardware target. This is the domain of inference engines and compilers such as NVIDIA’s TensorRT, Intel’s OpenVINO, and the open-source Apache TVM. These tools analyze a model’s computation graph and apply optimizations such as “operator fusion,” where multiple computational steps are combined into a single kernel so that intermediate results need not be written to and re-read from memory. The results of these optimizations are frequently analyzed in GPT Benchmark News, which compares the performance of different models on various hardware setups, providing crucial data for the GPT Inference News community.
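Operator fusion can be illustrated without any GPU. The hypothetical functions below compute the same element-wise scale, bias-add, and ReLU sequence twice: once as three separate passes that each materialize a full intermediate buffer, and once as a single fused pass. On real hardware the fused version saves memory traffic and kernel launches; this sketch shows only the restructuring, not the engines' actual graph rewrites, and it uses element-wise operations for brevity.

```python
from typing import List

Vector = List[float]

def unfused(x: Vector, w: Vector, b: Vector) -> Vector:
    """Three separate 'kernels', each writing a full intermediate buffer."""
    t1 = [xi * wi for xi, wi in zip(x, w)]    # kernel 1: scale
    t2 = [ti + bi for ti, bi in zip(t1, b)]   # kernel 2: bias add
    return [max(0.0, ti) for ti in t2]        # kernel 3: ReLU

def fused(x: Vector, w: Vector, b: Vector) -> Vector:
    """One pass: each element is loaded once and no intermediate buffers
    are created (on a GPU, the intermediates would stay in registers)."""
    return [max(0.0, xi * wi + bi) for xi, wi, bi in zip(x, w, b)]

x, w, b = [1.0, -2.0, 3.0], [0.5, 0.5, 0.5], [0.0, 0.25, -2.0]
print(unfused(x, w, b) == fused(x, w, b))   # True
```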

The Road Ahead: Future Hardware and Deployment Strategies

The trajectory of AI is inextricably tied to the future of hardware. As we look toward the horizon, several trends and strategic considerations emerge for developers, researchers, and businesses aiming to leverage the power of GPTs.

The Future of GPT Hardware: What to Expect

The relentless demand for more computation will continue to drive innovation. Key trends highlighted in GPT Future News include:

- Advanced Packaging and Chiplets: Instead of building one giant monolithic chip, manufacturers are moving towards combining smaller, specialized “chiplets” in a single package. This can improve yield, reduce cost, and allow for more customized designs.

- Memory and Interconnect Bottlenecks: The biggest challenge is no longer just raw compute; it’s getting data to the processors quickly enough. Future hardware will feature even faster and larger HBM, as well as novel interconnect technologies like optical I/O to break through current bandwidth limitations.

- Specialization Continues: We will see more hardware designed specifically for transformer architectures, and even more specialized hardware for multimodal workloads like those covered in GPT Vision News, which combine language with image processing.
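The yield argument for chiplets can be made concrete with the classic first-order Poisson yield model, Y = e^(-D·A), where D is the defect density and A is the die area. The sketch assumes that model and uses hypothetical values for defect density and die size. Because chiplets can be tested before packaging, a single defect scraps one small die instead of one large one; packaging yield and die-to-die interconnect costs are not modeled.

```python
import math

def poisson_yield(defects_per_cm2: float, area_cm2: float) -> float:
    """First-order die yield model: Y = exp(-D * A)."""
    return math.exp(-defects_per_cm2 * area_cm2)

D = 0.1          # hypothetical defect density, defects per cm^2
BIG_DIE = 8.0    # hypothetical monolithic die area, cm^2
N_CHIPLETS = 4   # the same total area split into four equal chiplets

mono = poisson_yield(D, BIG_DIE)
chiplet = poisson_yield(D, BIG_DIE / N_CHIPLETS)
print(f"monolithic die yield: {mono:.1%}")      # monolithic die yield: 44.9%
print(f"per-chiplet yield:    {chiplet:.1%}")   # per-chiplet yield:    81.9%
```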

These advancements are what will ultimately enable the capabilities speculated about in early GPT-5 News.

Strategic Considerations for Developers and Businesses

Navigating the hardware landscape requires a strategic approach. It’s not just about buying the most powerful chip; it’s about building a cohesive stack. Best practices include:

- Cloud vs. On-Premise: For many, the cloud offers the easiest access to state-of-the-art hardware without massive capital expenditure. However, for applications in sensitive fields like those covered by GPT in Healthcare News or GPT in Finance News, on-premise deployment may be necessary for data privacy and security, a topic closely related to GPT Privacy News and GPT Regulation News.

- A Full-Stack View: An effective strategy considers the entire pipeline. The choice of model architecture, the optimization techniques applied (such as the quantization methods tracked in GPT Optimization News), and the final deployment target must all be aligned. A model destined for an edge device requires a different approach than one running in a massive data center.

- Cost-Performance Analysis: The most expensive hardware is not always the best choice. For many inference workloads, older or less powerful GPUs, or even CPUs with specialized AI instructions, can provide a better price-to-performance ratio.
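One simple way to run the cost-performance comparison described above is to normalize every option to cost per million generated tokens. The sketch below assumes a flat hourly price and a sustained throughput for each option; the option names, prices, and throughputs are made-up placeholders, not quotes or benchmark results. In practice, throughput should be measured for your own model, batch size, and latency target, since a latency requirement can rule out an option that looks cheapest on paper.

```python
from typing import Dict, Tuple

def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Cost to generate one million tokens at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600.0
    return hourly_price_usd / tokens_per_hour * 1_000_000

# Hypothetical options: (hourly price in USD, sustained tokens per second).
options: Dict[str, Tuple[float, float]] = {
    "flagship GPU": (4.00, 2500.0),
    "older GPU": (1.00, 900.0),
}
ranked = sorted(options.items(), key=lambda kv: cost_per_million_tokens(*kv[1]))
for name, (price, tps) in ranked:
    print(f"{name}: ${cost_per_million_tokens(price, tps):.3f} per 1M tokens")
# older GPU: $0.309 per 1M tokens
# flagship GPU: $0.444 per 1M tokens
```

With these placeholder numbers, the slower, cheaper option wins on cost per token, which is exactly the situation the bullet above warns about.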

Conclusion: The Unseen Engine of the AI Revolution

The discourse around generative AI is often dominated by model capabilities and software applications. However, the silent, powerful engine driving this revolution is hardware. The tight coupling between GPT software and the underlying silicon means that progress in one domain directly fuels advancements in the other. From the GPU-powered data centers that give birth to foundational models to the efficiency techniques that let compact versions of them run on our phones, hardware is the ultimate enabler.

Understanding the latest GPT Hardware News is no longer a niche concern for engineers; it is a strategic imperative for anyone involved in building, deploying, or investing in AI. As we move forward, the innovations in chip design, memory systems, and interconnects will continue to define the boundaries of what is possible, unlocking new frontiers for GPT Agents News, multimodal AI, and a future where intelligent systems are more integrated into our world than ever before.