Scaling and Alignment: The Real Training Techniques Behind the GPT Revolution

The last few years have witnessed a seismic shift in the landscape of artificial intelligence, with models like GPT-3.5 and GPT-4 capturing the world’s imagination. Their ability to understand, generate, and reason about human language felt like a sudden, revolutionary leap. However, a closer look at how these models were built reveals a different story. This progress wasn’t born from a single, magical breakthrough but was the culmination of several years of dedicated research, combining and scaling proven techniques to an unprecedented degree. The true story of this AI explosion lies not in revolution, but in the synergy of two key methodologies: training on vast corpora of computer code and the painstaking process of Reinforcement Learning from Human Feedback (RLHF).

Understanding these core components is crucial for anyone following the rapidly evolving GPT ecosystem. It demystifies the technology, providing a clearer picture of not only how these models work but also where they are headed. This article delves into the technical underpinnings of modern GPT training, exploring how the fusion of logical structure from code and human-centric alignment from RLHF created the powerful, conversational AI we interact with today, and what this means for the future, including the much-anticipated GPT-5.

The Twin Pillars of Modern LLMs: Massive Scale and Diverse Data

At the heart of any Generative Pre-trained Transformer (GPT) model is the principle of scale. The foundational idea, supported by years of research, is that performance improves predictably with more data, larger model architectures (more parameters), and greater computational power. This relationship is often referred to as the “scaling laws” of neural language models. However, the quality and diversity of that data are just as critical as its sheer volume. While early models were trained primarily on web text, books, and articles, the inclusion of a different kind of data proved to be a pivotal moment in AI development.
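
To make “improves predictably” concrete, scaling-law studies typically fit a power law to loss as a function of model size. The sketch below assumes that functional form. The function name `power_law_loss` and the constants `n_c` and `alpha` are illustrative placeholders, not values reported for any GPT model.

```python
def power_law_loss(n_params: float, n_c: float = 1e14, alpha: float = 0.08) -> float:
    """Hypothetical scaling curve: loss = (n_c / n_params) ** alpha.

    n_c and alpha are made-up constants chosen only to show the shape
    of the curve; real values must be fitted to measured training runs.
    """
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return (n_c / n_params) ** alpha


if __name__ == "__main__":
    # Each 10x increase in parameters shrinks the loss by the same factor.
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"{n:.0e} parameters -> loss {power_law_loss(n):.3f}")
```

Under this assumed form, every tenfold increase in parameters multiplies the loss by the same constant factor, which is why such curves appear as straight lines on a log-log plot and why gains can be forecast before a larger model is trained.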

From Text to Logic: The Unsung Hero of Training on Code

One of the most significant, yet often overlooked, advancements in training data was the large-scale inclusion of source code. Training models on billions of lines of code from repositories like those hosted on GitHub appears to have an impact that extends far beyond writing software. Computer code is, by its nature, a language of logic, structure, and causality. To predict the next token in a line of Python or JavaScript, a model must learn about syntax, nested dependencies, algorithmic logic, and long-range context in a way that is far more rigorous than in most natural language.

This process is widely credited with giving the model a rudimentary but powerful “reasoning engine.” It learns to work in steps, to structure information hierarchically, and to maintain consistency over long sequences. This is why a model trained on code tends to be not just better at programming but also better at solving logic puzzles, outlining complex business plans, and deconstructing legal arguments. The abstract reasoning skills honed on code appear to transfer to a wide range of other domains. This has also influenced how models are designed, since they are now built with the expectation of processing such structured data.
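
The “nested dependencies” point can be shown with a toy example. This is not a language model, only a sketch of the constraint a model must learn implicitly: the one valid closing bracket at a given position is fixed by an opener that may sit many tokens earlier. The helper name `expected_closer` is hypothetical, and the sketch deliberately ignores brackets inside string literals and comments.

```python
from typing import Optional

PAIRS = {"(": ")", "[": "]", "{": "}"}
CLOSERS = set(PAIRS.values())


def expected_closer(prefix: str) -> Optional[str]:
    """Return the bracket needed to close the innermost open bracket
    in `prefix`, or None if every bracket is already closed."""
    stack = []
    for ch in prefix:
        if ch in PAIRS:
            # Remember which closer this opener will eventually need.
            stack.append(PAIRS[ch])
        elif ch in CLOSERS:
            if not stack or stack[-1] != ch:
                raise ValueError(f"unbalanced bracket {ch!r}")
            stack.pop()
    return stack[-1] if stack else None


if __name__ == "__main__":
    prefix = "result = f(data[0], {'k': g(x"
    print(expected_closer(prefix))        # innermost open call
    print(expected_closer(prefix + ")"))  # now the dict literal is innermost
```

A next-token predictor has no explicit stack, so to place the right closer it has to carry equivalent information forward across the whole prefix. That is the kind of long-range bookkeeping that natural-language text rarely demands so strictly.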

The Scaling Hypothesis in Action

GPT-4 is the quintessential example of the scaling hypothesis. While the exact parameter count is not public, it is widely believed to be significantly larger than GPT-3.5 and to have been trained on a more extensive and carefully curated dataset that includes a large volume of code. This is not just about making the model “bigger” but about providing it with enough capacity and data to internalize the complex patterns present in its training corpus. Current evidence suggests that this approach still yields significant performance gains, though it requires immense investment in hardware, reportedly relying on tens of thousands of specialized GPUs running for months. This scaling of both model size and high-quality, logic-rich data forms the base upon which further refinements are built.

Taming the Beast: The Critical Role of RLHF

A scaled-up, pre-trained base model is an incredibly powerful text predictor, but it is not inherently an assistant. Left on its own, it might complete a user’s prompt in unhelpful ways, generate factually incorrect or toxic content, or fail to follow instructions. The challenge, then, is to align this raw capability with human values and intent. This is where Reinforcement Learning from Human Feedback (RLHF) enters the picture, a technique that has dominated discussion of GPT fine-tuning and is central to the user experience of products like ChatGPT.

What is RLHF and How Does It Work?

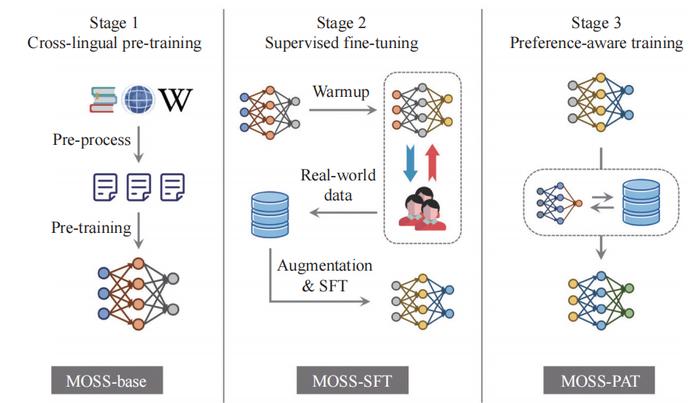

RLHF is a multi-stage fine-tuning process designed to make a model more helpful, harmless, and honest. It steers the model’s behavior to better match what users find useful. The process can be broken down into three core steps:

- Supervised Fine-Tuning (SFT): First, the base model is fine-tuned on a smaller, high-quality dataset of curated prompt-and-response pairs. These examples are created by human labelers who demonstrate the desired behavior, showing the model how to respond to various instructions in a helpful, conversational manner.

- Reward Modeling: This is the crucial step. For a given prompt, the model generates several different responses. Human labelers then rank these responses from best to worst. This comparison data is used to train a separate AI model, known as the “reward model.” The reward model’s job is not to generate text, but to look at a prompt and a response and output a score indicating how much a human would prefer that response.

- Reinforcement Learning Optimization: Finally, the SFT model is further fine-tuned using reinforcement learning. The model treats generating a response as a series of actions. For each response it generates, it receives a score from the reward model. Using an algorithm like Proximal Policy Optimization (PPO), the model’s parameters are adjusted to maximize this reward score. In essence, the model learns through trial and error to generate responses that the reward model—and by extension, the human labelers—would approve of.
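
The second and third steps above each rest on a small piece of math, sketched here on plain scalars rather than tensors. The first function is the pairwise ranking loss commonly used to train reward models from human comparisons, and the second is the per-sample clipped objective from PPO. The function names and the default `clip_eps` are illustrative assumptions, not details confirmed for any particular GPT model.

```python
import math


def pairwise_reward_loss(score_preferred: float, score_rejected: float) -> float:
    """Ranking loss for one human comparison:
    -log(sigmoid(score_preferred - score_rejected)).

    The loss is small when the reward model scores the human-preferred
    response well above the rejected one.
    """
    margin = score_preferred - score_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def ppo_clipped_objective(prob_ratio: float, advantage: float,
                          clip_eps: float = 0.2) -> float:
    """Per-sample PPO surrogate objective (to be maximized).

    prob_ratio is new_policy_prob / old_policy_prob for the sampled
    action; advantage says how much better than expected it turned out.
    Clipping removes the incentive to move the ratio far from 1.
    """
    clipped_ratio = min(max(prob_ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(prob_ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    print(pairwise_reward_loss(2.0, 0.0))   # ranking agrees with labelers
    print(pairwise_reward_loss(0.0, 2.0))   # ranking disagrees: larger loss
    print(ppo_clipped_objective(1.5, 1.0))  # gain is capped by the clip
```

In a real pipeline the advantage would be derived from the reward model’s score, often combined with a penalty for drifting too far from the SFT model, and both quantities would be averaged over large batches of sampled responses.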

This process is a cornerstone of current GPT safety work, as it’s a primary mechanism for reducing harmful outputs and mitigating problems of bias and fairness.

Why RLHF Was a Game-Changer

The impact of RLHF is hard to overstate. It transformed large language models from fascinating but unwieldy text completion engines into practical, interactive assistants. It taught the model the nuances of conversation, the importance of following instructions precisely, and the ability to refuse inappropriate requests. The difference between the raw GPT-3 and the original ChatGPT (based on a GPT-3.5 model) was due in large part to the application of RLHF, which has been central to ChatGPT’s reception since its launch.

The Multiplier Effect: Combining Logical Reasoning with Human Alignment

The true breakthrough behind models like GPT-4 is not just the application of training on code or the use of RLHF in isolation, but the powerful synergistic effect that emerges when they are combined. These two techniques amplify each other, creating a model that is far more capable and controllable than the sum of its parts.

Creating a More Capable and Controllable Model

Think of it this way: training on code provides the model with a powerful, latent reasoning capability—a “System 2” thinking engine. It learns to break down problems, structure arguments, and maintain logical consistency. However, without guidance, it doesn’t know when or how to apply these skills effectively in a conversation. RLHF provides that guidance. It acts as the “steering wheel,” directing the model to use its underlying reasoning abilities to be as helpful and aligned as possible with the user’s request.

This synergy is what enables the sophisticated behaviors we see today. When a user asks a complex question, the model can leverage its code-trained logic to structure a multi-step answer, while using its RLHF-trained alignment to present that answer in a clear, polite, and easy-to-understand format. This has major implications for the development of GPT agents, where autonomous systems must both plan logically and act in accordance with human preferences.

Real-World Applications of this Synergy

The practical applications of this combined approach are evident across numerous fields.

- In Legal Tech: A lawyer can ask the model to summarize a complex contract and identify potential clauses of concern. The model uses its logical reasoning to parse the document’s structure and its alignment to highlight the most relevant points in plain language.

- In Education: A student struggling with a math problem can get a step-by-step solution. The model’s code-trained reasoning breaks the problem down logically, while its RLHF tuning ensures it explains each step patiently, acting as a personalized tutor.

- In Content Creation: A marketer can request a blog post about a technical topic. The model structures the article logically, ensuring a coherent flow from introduction to conclusion, while using its alignment to write in an engaging and brand-appropriate tone.

What This Means for the Future: From GPT-4 to GPT-5 and Beyond

Understanding that today’s AI prowess comes from scaling and refining existing techniques provides a clear lens through which to view the future. The path forward is likely to follow a similar pattern, focusing on enhancing these core pillars rather than waiting for a completely new paradigm to emerge.

The Path Forward: More Scaling and New Paradigms

The development of GPT-5 and subsequent models is likely to continue this trend. We can expect:

- Even Greater Scale: Continued scaling of model size, data, and compute, pushing the boundaries of what is possible with current architectures.

- More Diverse, High-Quality Data: A focus on acquiring more proprietary and structured data beyond just code, including scientific papers, mathematical theorems, and other forms of logical information.

- More Sophisticated Alignment: Research is already moving beyond RLHF to techniques like Reinforcement Learning from AI Feedback (RLAIF), where an AI model helps supervise another AI, potentially scaling the alignment process itself. This is an active topic in discussions of AI ethics and regulation.

However, the industry is also grappling with challenges like the immense cost of training, potential data saturation, and the diminishing returns of simply adding more parameters. This is driving research into efficiency, with a focus on techniques like quantization and distillation to create smaller, faster, yet still powerful models.
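
As a rough illustration of one of these efficiency techniques, the sketch below shows symmetric 8-bit quantization of a list of weights in plain Python. It is a simplified, assumption-laden example: the function names are hypothetical, a single scale is shared by all weights, and production systems typically use more refined schemes.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is 0 (or the list is empty).
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    q, scale = quantize_int8(weights)
    print(q, scale)
    print(dequantize(q, scale))
```

Each weight is stored in 8 bits instead of 32, at the cost of a rounding error of at most half the scale per weight. That trade of a little precision for a much smaller memory footprint is the core idea behind quantization.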

The Rise of Multimodality and Specialization

The principles of scaling and alignment are now being applied to new domains. Multimodal models can process and reason about images, audio, and video, not just text, which is a form of scaling the diversity of the data. Similarly, the rise of custom models and platforms that allow easier fine-tuning enables the specialization of these powerful base models for specific industries, such as healthcare and finance, unlocking a new wave of practical applications.

Conclusion

The incredible advancements in AI, epitomized by GPT-3.5 and GPT-4, are not the product of an overnight discovery but of a deliberate strategy: combining the logical reasoning capabilities imparted by training on code with the human-centric alignment provided by RLHF, and scaling this combination to unprecedented levels. This dual approach of enhancing a model’s core capability while simultaneously steering its behavior has proven to be remarkably effective. It has transformed large language models from mere curiosities into widely used tools across many industries. Looking ahead, it is this foundational understanding of scaling and alignment that will continue to guide the development of ever more capable, useful, and safe artificial intelligence.