GPT Chatbots News: Beyond the Hype, The Silent Revolution of Specialized AI

In the constant stream of GPT Chatbots News, headlines are dominated by the latest feats of large-scale, general-purpose models. From GPT-4 News heralding new creative capabilities to speculative GPT-5 News promising unprecedented intelligence, the public imagination is captivated by conversational AI that can write poetry, summarize articles, and debate philosophy. This media focus, fueled by constant updates in OpenAI GPT News and the expanding world of ChatGPT News, paints a picture of an AI revolution led by a few monolithic, all-knowing giants.

However, beneath this surface-level excitement, a quieter but arguably more impactful transformation is underway. This is the era of the specialist. While general-purpose models are designed for broad appeal and attention, a new class of AI is being built for specific, high-stakes action. These are not chatbots; they are mission-critical engines fine-tuned to excel in a single, complex domain. From accelerating drug discovery in biology to automating compliance in finance, these specialized models are the unsung heroes quietly reshaping the foundations of science, industry, and the physical world. This article looks beyond the mainstream headlines to explore the architecture, applications, and strategic importance of these domain-specific AI powerhouses.

The Generalist vs. Specialist Dichotomy in AI

The current AI landscape is largely defined by two parallel, yet distinct, evolutionary paths: the well-publicized generalist and the industrious specialist. Understanding their differences is crucial for anyone looking to leverage AI effectively, as the latest GPT Trends News points towards a future where both approaches coexist and complement each other.

The Rise of the General-Purpose LLM

General-purpose Large Language Models (LLMs) like GPT-3.5 and GPT-4 are masterpieces of scale. They are built on the transformer architecture, and their power stems from pre-training on vast, internet-scale datasets that span a staggering breadth of human knowledge. This allows them to perform a wide array of tasks out of the box, from the content generation covered in GPT in Content Creation News to the versatile assistants featured in GPT Assistants News. Their accessibility through platforms and APIs has fueled a vibrant ecosystem, chronicled in GPT Ecosystem News, that lets developers rapidly prototype and deploy AI-powered features. The strength of these models lies in their versatility. They are the Swiss Army knives of AI, capable of handling novel requests and engaging in open-ended dialogue, which is why they feature so often in GPT Applications News.

However, this breadth comes with trade-offs. For highly specific or technical domains, generalist models can be a “jack of all trades, master of none.” They may lack the nuanced understanding of domain-specific jargon, occasionally “hallucinate” incorrect facts, and their sheer size can lead to higher operational costs and latency, a key concern in GPT Inference News. While they are excellent for broad applications, they often fall short where precision, reliability, and efficiency are paramount.

The Unsung Heroes: Domain-Specific Models

In contrast, specialized models are forged for depth, not breadth. These models often begin with a powerful foundation model (either proprietary or from the burgeoning GPT Open Source News community) and undergo a rigorous process of fine-tuning. This involves continuing their training on a curated, high-quality dataset specific to a particular domain. The latest GPT Fine-Tuning News highlights how this process imbues the model with expert-level knowledge, making it highly accurate and reliable for its designated task.

The core advantage is precision. A legal AI fine-tuned on millions of court filings will often outperform a generalist model at legal research. A financial model trained on decades of market data can be better at risk assessment than a model that has only seen general web text. These models are not built for casual conversation; they are built for decisive action. They represent a strategic shift from general intelligence to applied, functional expertise, a key theme in GPT Custom Models News.

From Foundation to Function: The Engineering of Specialized AI

Creating a high-performance specialized AI is a sophisticated engineering challenge that goes far beyond simply prompting a large model. It involves meticulous data curation, architectural optimization, and a deep understanding of the deployment environment. The latest GPT Training Techniques News and GPT Architecture News reveal a focus on efficiency and precision.

Fine-Tuning and Custom Data Curation

The heart of specialization is fine-tuning. This process adapts a pre-trained model to a new, specific task. For example, a healthcare startup might fine-tune a model on thousands of anonymized patient records and medical journals to create a diagnostic assistant. Dataset quality, a recurring subject in GPT Datasets News, is paramount here; the model is only as good as the data it learns from. Best practices involve rigorous data cleaning and labeling, and checking that the dataset is comprehensive and as free from bias as possible, a critical topic in GPT Bias & Fairness News.

This process allows the model to learn the specific vocabulary, context, and patterns of its domain. A model fine-tuned for software development, for instance, will understand the nuances of different programming languages and frameworks, leading to more accurate and useful outputs, as seen in the progress of GPT Code Models News.
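The cleaning step described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the `prompt` and `completion` field names, the `min_chars` threshold, and the sample records are all assumptions made for the example, and real curation would also need labeling review and bias checks that code like this cannot provide.

```python
import hashlib
import json
import re


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so near-identical records hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def curate(records, min_chars=20):
    """Drop incomplete, too-short, and duplicate records; return cleaned examples."""
    seen = set()
    cleaned = []
    for rec in records:
        prompt = (rec.get("prompt") or "").strip()
        completion = (rec.get("completion") or "").strip()
        if not prompt or not completion:
            continue  # incomplete example: nothing to learn from
        if len(prompt) + len(completion) < min_chars:
            continue  # too short to carry much domain signal (assumed threshold)
        digest = hashlib.sha256(
            normalize(prompt + "\n" + completion).encode("utf-8")
        ).hexdigest()
        if digest in seen:
            continue  # duplicate after normalization
        seen.add(digest)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned


def to_jsonl(examples) -> str:
    """Serialize one JSON object per line, a common layout for training files."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)


# Hypothetical raw records, including a near-duplicate, an empty label, and a trivial pair.
raw = [
    {"prompt": "What does HbA1c measure?",
     "completion": "Average blood glucose over roughly three months."},
    {"prompt": "what does  HbA1c measure?",
     "completion": "Average blood glucose over roughly three months."},
    {"prompt": "Define tachycardia.", "completion": ""},
    {"prompt": "Hi", "completion": "Hello"},
]
examples = curate(raw)
print(to_jsonl(examples))
```

Only the first record survives here: the second is a duplicate once case and spacing are normalized, the third has no completion, and the fourth falls below the length threshold.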

Architectural Innovations and Optimization for the Edge

Specialized models often don’t require the colossal parameter counts of their generalist cousins. The focus shifts from raw scale to efficiency. Model optimization techniques are critical for deploying AI in real-world scenarios, especially on devices with limited computational power. Key methods include:

- Distillation: Training a smaller, “student” model to mimic the behavior of a larger, more powerful “teacher” model. This transfers knowledge into a more compact and faster architecture.

- Quantization: Reducing the numerical precision of the model’s weights (e.g., from 32-bit floating-point numbers to 8-bit integers). This shrinks the model’s size and speeds up computation, often with only a small loss in accuracy.

- Pruning: Identifying and removing redundant or unimportant connections (weights) within the neural network, making it leaner and more efficient.
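The three methods above can each be reduced to a small, framework-free sketch. The code below is illustrative only and rests on several assumptions: weights are a flat list of floats, quantization uses a single symmetric scale for the whole list, pruning is unstructured and magnitude-based, and the distillation loss is a plain KL divergence on temperature-softened outputs (practical recipes usually combine it with a standard label loss). The function names are invented for this example.

```python
import math


def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] using one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions; lower means closer mimicry."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


weights = [0.82, -0.03, 0.41, -1.27, 0.004, 0.6]  # made-up example weights

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("int8 values:", q, "max round-trip error:", max_error)

pruned = prune_by_magnitude(weights, sparsity=0.5)
print("pruned:", pruned)

teacher = [4.0, 1.0, 0.5]
print("loss, identical student:", distillation_loss(teacher, teacher))
print("loss, different student:", distillation_loss(teacher, [1.0, 3.0, 0.5]))
```

The round-trip error of the quantizer is bounded by half the scale, which is the sense in which accuracy loss stays small when the weight range is modest. Pruning at 50% sparsity keeps the three largest-magnitude weights, and the distillation loss is zero only when the student reproduces the teacher's distribution.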

These techniques, covered in GPT Compression News and GPT Quantization News, underpin the edge deployments tracked in GPT Edge News. They enable capable AI to run directly on drones, factory robots, and IoT sensors without needing a constant connection to a data center. This can dramatically reduce latency and improves data privacy, because sensitive information is processed locally, a major topic in GPT Privacy News. The same optimizations drive the figures reported in GPT Latency & Throughput News and are often paired with the specialized chips featured in GPT Hardware News, such as NPUs (Neural Processing Units) on edge devices.
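A quick back-of-the-envelope calculation shows why lower precision matters on constrained hardware. The sketch below assumes a hypothetical 7-billion-parameter model and counts only weight storage; activations, caches, and runtime overhead are ignored, so real memory use will be higher.

```python
def model_size_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes), ignoring all overhead."""
    return num_params * bits_per_weight / 8 / 1e9


params = 7_000_000_000  # hypothetical 7-billion-parameter model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {model_size_gb(params, bits):.1f} GB")
```

Under these assumptions the weights take 28.0 GB at 32-bit, 14.0 GB at 16-bit, 7.0 GB at 8-bit, and 3.5 GB at 4-bit precision, which is the difference between needing a data-center accelerator and fitting on a much smaller device.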

Where Specialists Shine: Real-World Impact Across Industries

The true measure of specialized AI is its real-world impact. Across virtually every sector, these domain-specific models are moving from research labs to mission-critical operational roles, creating tangible value far from the public spotlight.

Advancing Science and Healthcare

In biotechnology, models trained specifically on protein data are reshaping drug discovery and disease research. ProtBERT, a language model trained on protein sequences, learns representations useful for predicting protein properties, while ESMFold predicts a protein’s 3D structure directly from its amino acid sequence, a task that can take months or years of experimental lab work. This is the kind of specialized progress that GPT Research News tracks as it accelerates scientific breakthroughs. In clinical settings, GPT in Healthcare News reports on vision models fine-tuned on medical scans (X-rays, MRIs) to help radiologists detect tumors and other anomalies more quickly and accurately.

Transforming Finance and Legal Tech

The finance industry relies on speed and accuracy, making it a perfect fit for specialized AI. GPT in Finance News covers models trained on real-time transaction data to detect fraudulent activity in milliseconds, far faster than humanly possible. Other models analyze market data to perform complex risk modeling and algorithmic trading. Similarly, GPT in Legal Tech News highlights how LLMs trained on vast libraries of case law and regulatory filings are automating legal research, contract analysis, and compliance workflows, saving thousands of hours of manual labor and reducing human error.

Powering Robotics and Geospatial Analysis

In the physical world, specialized models are giving machines unprecedented awareness. GPT Vision News is not just about identifying cats in photos; it’s about enabling industrial robots to spot defects on an assembly line and autonomous drones to navigate complex environments. Geospatial models, trained on petabytes of satellite imagery, can track deforestation, monitor crop health for precision agriculture, and provide real-time analysis during natural disasters to guide emergency responders. These are the high-stakes deployments covered in GPT Applications in IoT News, where efficiency and low latency are non-negotiable.

Enhancing Gaming and Creative Industries

Even in creative fields, specialists are making their mark. GPT in Gaming News discusses how AI is used to create dynamic, responsive NPCs (non-player characters) that interact with players in more realistic ways. Specialized models also power procedural content generation, creating vast, unique game worlds on the fly. In marketing, models fine-tuned on brand voice and performance data are a key part of GPT in Marketing News, helping to generate highly targeted and effective ad copy, moving beyond generic content.

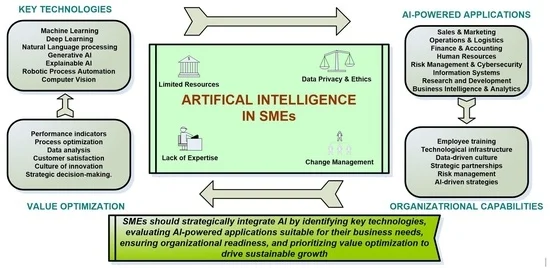

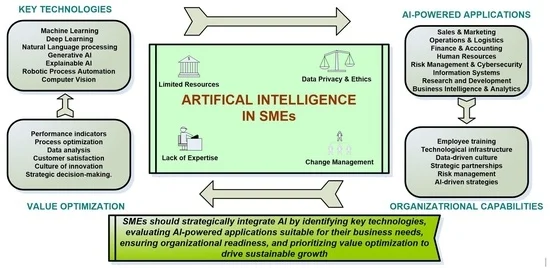

Strategic Considerations: Generalist API vs. Custom Specialist

For any organization looking to integrate AI, the central question becomes: when should we use a general-purpose API, and when should we invest in building a specialized model? The answer depends entirely on the use case, stakes, and strategic goals.

When to Use a General-Purpose API

Leveraging a powerful, off-the-shelf API from providers like OpenAI is an excellent strategy for several scenarios:

- Rapid Prototyping: Quickly test ideas and build minimum viable products without the overhead of training a custom model.

- General Content and Communication: Tasks like drafting emails, summarizing articles, or powering a customer service chatbot for common queries.

- Low-Stakes Applications: Situations where occasional inaccuracies are acceptable and do not pose a significant business or safety risk.

- Broad Knowledge: When the application needs to draw from a wide range of topics rather than deep expertise in one.

The vast ecosystem of GPT Tools News and GPT Integrations News makes this approach highly accessible for a wide range of developers.

When to Invest in a Specialized Model

Building or fine-tuning a custom model is a significant investment but becomes necessary when the requirements are more demanding:

- Mission-Critical Functions: Applications where accuracy, reliability, and consistency are non-negotiable, such as medical diagnostics or financial fraud detection.

- Deep Domain Expertise: When the task requires understanding highly specific jargon, complex rules, or nuanced context that a generalist model lacks.

- Efficiency and Low Latency: For on-device or edge computing scenarios where speed and low power consumption are critical.

- Data Privacy and Security: When sensitive data cannot leave the organization’s private infrastructure, making on-premises deployment, a regular subject of GPT Deployment News, a priority.

- Competitive Differentiation: A custom model trained on proprietary data can become a unique and defensible asset that competitors cannot easily replicate.

This path requires careful consideration of GPT Ethics News and GPT Safety News, ensuring the model is robust, fair, and aligned with its intended purpose.
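The two checklists in this section can be condensed into a simple decision helper. This is a deliberately crude sketch: the `UseCase` fields mirror the criteria listed above, but the class, the function name, and the rule that any single criterion is enough to justify a specialized model are assumptions made for illustration. A real decision would also weigh cost, team expertise, and data availability.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Flags mirroring the criteria for investing in a specialized model."""
    mission_critical: bool = False
    needs_deep_domain_expertise: bool = False
    needs_low_latency_or_edge: bool = False
    data_must_stay_private: bool = False
    proprietary_data_advantage: bool = False


def recommend(use_case: UseCase) -> str:
    """Return a recommendation plus the criteria that triggered it."""
    reasons = [name for name, flag in vars(use_case).items() if flag]
    if reasons:
        # Assumption: a single demanding requirement is treated as sufficient.
        return "specialized model (" + ", ".join(reasons) + ")"
    return "general-purpose API"


print(recommend(UseCase()))
print(recommend(UseCase(mission_critical=True, data_must_stay_private=True)))
```

A prototype with no demanding requirements falls through to the general-purpose API, while a use case that is both mission-critical and privacy-sensitive is routed to a specialized model with the triggering criteria listed.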

Conclusion

While the headlines surrounding general-purpose GPT Chatbots News will continue to capture the public’s imagination, the silent, steady progress of specialized AI models is where much of the tangible, industrial-scale revolution is happening. These are not flashy conversationalists; they are high-precision instruments of action, engineered for specific, high-stakes tasks. From decoding the building blocks of life to securing global financial systems and enabling autonomy on the edge, these models are delivering on the promise of AI in profound ways.

The future charted in GPT Future News is not about a single, all-powerful AI. Instead, it points to a diverse and dynamic ecosystem where powerful generalists serve as accessible platforms for innovation, while a growing army of highly trained specialists drives progress in the fields that matter most. For businesses, developers, and researchers, the key to success will be understanding this dichotomy and knowing when to embrace the breadth of a generalist and when to harness the focused power of a specialist.