The New Frontier: How Advanced GPT Models are Revolutionizing High-Stakes Finance and Government Operations

From Public APIs to Fortified Clouds: GPT’s Enterprise Journey

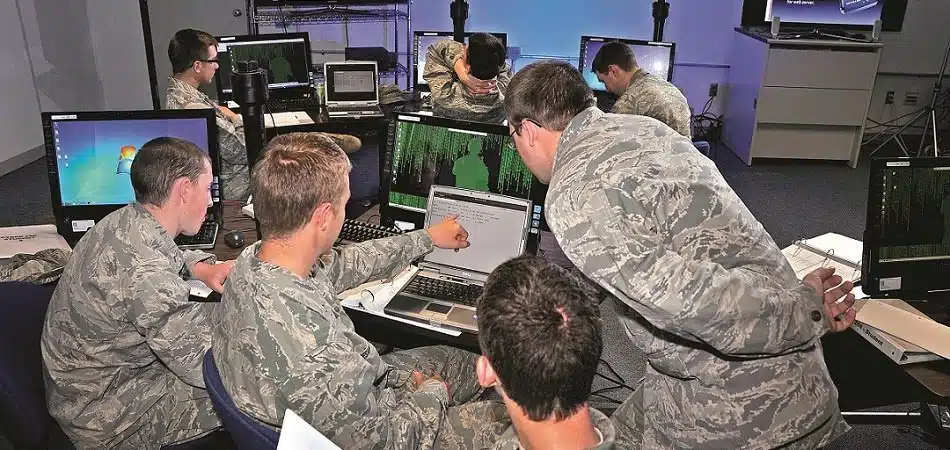

The conversation around Generative Pre-trained Transformers (GPT) has moved quickly from public fascination with ChatGPT to a more serious, strategic discussion within the world’s most secure and regulated industries. Early business adoption relied on public GPT APIs for marketing copy and customer-service chatbots, but recent developments point to a significant pivot. The focus now is on deploying powerful models such as GPT-4 inside highly secure, private cloud environments tailored for mission-critical operations in finance, defense, and intelligence. This marks GPT’s transition from a general-purpose novelty to a specialized tool for high-stakes decision-making.

This evolution is driven by a fundamental need: harnessing the reasoning and data-synthesis capabilities of large language models (LLMs) without compromising security, privacy, or regulatory compliance. News about GPT-4, and speculation about GPT-5, increasingly concerns not only performance but also control and deployability. For financial institutions managing trillions in assets, or government agencies handling classified information, sending data to a public API is a non-starter. The new paradigm integrates these AI systems into proprietary data platforms, which are often air-gapped. The result is a fortified ecosystem in which the model works directly on sensitive internal data, with applications ranging from financial crime detection to geopolitical risk analysis.

Early Adoption: Automating Routine Financial Tasks

The initial wave of GPT adoption in finance centered on efficiency gains for routine tasks. Analysts used tools built on GPT-3.5 to summarize lengthy earnings-call transcripts, draft market commentary, and gauge sentiment in financial news feeds. Most of this ran through public GPT APIs, which offered a quick, accessible way to experiment with the technology. These applications barely scratched the surface, however, and came with serious limitations. The risk of data leakage, model “hallucinations” that could produce inaccurate financial figures, and a lack of traceability made them unsuitable for core operations. ChatGPT showcased the technology’s power, but also its unsuitability for tasks that demand verifiable accuracy and ironclad data privacy.

The Shift to Secure, Domain-Specific Applications

The current trend is a direct response to these early challenges. Major technology and data-analytics firms are building solutions that bring the model to the data rather than the other way around. In these integrations, foundation models are deployed within a client’s own secure cloud infrastructure (e.g., Azure Government, AWS GovCloud). This approach addresses core privacy concerns and helps organizations comply with strict regulations such as the GDPR and other data-sovereignty laws. Within these secure enclaves, organizations can also build custom models. Through fine-tuning, a base model can be adapted to a firm’s financial jargon, internal reporting formats, and operational context, producing a specialized and secure analytical tool.
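Fine-tuning starts with preparing training examples from internal material. The sketch below assumes the chat-style JSON Lines format used by OpenAI’s fine-tuning API, where each line holds a `messages` list of system/user/assistant turns. Other providers may expect a different schema. The system prompt, the helper names `to_finetune_record` and `to_jsonl`, and the sample pair are all hypothetical.

```python
import json

# Hypothetical system prompt; substitute the firm's own instructions.
SYSTEM_PROMPT = "You are an internal research assistant. Follow the firm's reporting format."

def to_finetune_record(question: str, answer: str, system: str = SYSTEM_PROMPT) -> dict:
    """Wrap one question/answer pair in a chat-style 'messages' record."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(pairs) -> str:
    """Serialize (question, answer) pairs as JSON Lines: one JSON object per line."""
    return "".join(
        json.dumps(to_finetune_record(q, a), ensure_ascii=False) + "\n" for q, a in pairs
    )

# Hypothetical example pair drawn from internal terminology.
pairs = [
    ("Define 'watch-list exposure' as used in our reports.",
     "Watch-list exposure is the total committed credit to counterparties on the internal watch list."),
]
print(to_jsonl(pairs), end="")
```

In a secure deployment, the resulting file would be passed to whatever fine-tuning service runs inside the enclave. The point of the format is that the model learns from examples written in the firm’s own vocabulary and structure.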

Architecting Trust: Deploying Generative AI for Mission-Critical Tasks

Deploying a model like GPT-4 for sensitive financial or intelligence analysis requires more than a secure server; it demands rethinking the entire AI stack. The goal is a system that is not only powerful but also trustworthy, auditable, and controllable. Current architectural work therefore focuses on composite AI systems that pair the generative capabilities of LLMs with the structured reliability of traditional data platforms.

The Secure AI Stack: Combining Language Models with Data Platforms

The modern architecture for high-stakes AI is multi-layered. At its core is a powerful foundation model, wrapped in several layers of security and control provided by a data platform that governs every interaction with the model. The platform manages data-access permissions, ensuring the AI only “sees” data the human user is authorized to see. It logs every query and response, creating an immutable audit trail for compliance. Most importantly, it orchestrates workflows, turning the LLM from a simple chatbot into a sophisticated analytical engine or into one of several cooperating AI agents. This platform-centric design is fundamental to safety and to mitigating the risk of unauthorized data access or misuse.
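The two platform duties described above are enforcing permissions before the model sees data and logging every exchange. Both can be sketched in a few lines, under stated assumptions. `Document`, its numeric `clearance` field, `AuditLog`, and `answer_query` are hypothetical names. The `model` argument is a placeholder callable for the deployed LLM, not a real client API. The hash chain only makes tampering *detectable*; a truly immutable trail would additionally need write-once storage.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import Callable

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    clearance: int  # hypothetical: minimum clearance level needed to read this document

@dataclass
class AuditLog:
    """Append-only log; each entry stores the hash of the previous one (a hash chain)."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        digest = self._digest(prev_hash, record)
        self.entries.append({"prev": prev_hash, "record": record, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev"] != prev_hash or self._digest(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

def answer_query(user: str, user_clearance: int, query: str, corpus: list,
                 model: Callable[[str, str], str], log: AuditLog) -> str:
    # Access control happens here, in the platform, before the model sees anything.
    visible = [d for d in corpus if d.clearance <= user_clearance]
    context = "\n".join(d.text for d in visible)
    response = model(query, context)  # `model` is a stand-in for the deployed LLM
    log.append({
        "ts": time.time(),
        "user": user,
        "query": query,
        "doc_ids": [d.doc_id for d in visible],
        "response": response,
    })
    return response
```

The design choice is that the model never receives a document the user could not open directly. Because every call passes through `answer_query`, the log captures exactly which sources informed each answer.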

Fine-Tuning and RAG for Specialized Intelligence

To make a general-purpose model effective for specialized domains, two key techniques are employed: Fine-Tuning and Retrieval-Augmented Generation (RAG).

- Fine-tuning adapts a model’s style, tone, and domain knowledge. For a hedge fund, this could mean training the model on decades of its own proprietary research reports so that it learns the firm’s analytical framework.

- Retrieval-Augmented Generation (RAG) is arguably more critical for high-stakes applications. Instead of relying solely on its pre-trained knowledge, which can be outdated or inaccurate, a RAG system first retrieves relevant, up-to-date information from a trusted internal knowledge base (e.g., a vector database of compliance documents, market data feeds, or intelligence reports). It then passes this context to the LLM along with the user’s query. Grounding the answer in this verified material substantially reduces (though does not eliminate) hallucinations and lets the model cite its sources. That makes the output traceable and verifiable, a crucial requirement in finance and legal tech. Advances in dataset curation and tokenization also play a part in optimizing these RAG systems.
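The retrieve-then-generate flow can be illustrated with a deliberately small sketch. A production system would use learned embeddings and a vector database. This sketch swaps in bag-of-words cosine similarity, which is simpler but follows the same pattern: score documents against the query, keep the top `k`, and place them, tagged with source ids, ahead of the question so the model can cite them. The function names and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter

def _vectorize(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, knowledge_base: dict, k: int = 2) -> list:
    """Return the ids of the k documents most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(knowledge_base.items(),
                    key=lambda item: _cosine(q, _vectorize(item[1])), reverse=True)
    return [doc_id for doc_id, _ in ranked[:k]]

def build_prompt(query: str, knowledge_base: dict, k: int = 2) -> str:
    """Place retrieved passages, tagged with source ids, ahead of the user's question."""
    ids = retrieve(query, knowledge_base, k)
    context = "\n".join(f"[{doc_id}] {knowledge_base[doc_id]}" for doc_id in ids)
    return ("Answer using only the sources below and cite them by id. "
            "If the sources do not contain the answer, say so.\n\n"
            f"Sources:\n{context}\n\nQuestion: {query}")

# Hypothetical internal knowledge base.
kb = {
    "policy-7": "Wire transfers above 10000 require enhanced due diligence.",
    "memo-2": "The cafeteria menu changes weekly.",
    "sar-15": "Suspicious activity report filed for wire transfers to entity X.",
}
print(build_prompt("What due diligence applies to wire transfers?", kb))
```

The instruction to cite source ids, together with the ids embedded in the context, is what lets a reviewer trace each claim in the answer back to a specific document.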

Performance and Efficiency Considerations

Running models of GPT-4’s scale on-premise or in a private cloud presents significant computational challenges. Much current efficiency research aims to make these models smaller, faster, and cheaper to run. Techniques such as quantization (reducing the numerical precision of the model’s weights), distillation (training a smaller model to mimic a larger one), and other compression methods are vital. These optimizations are key to edge scenarios, where analysis may need to run on localized hardware. Meanwhile, new generations of GPUs and custom AI accelerators are lowering the barrier to private deployment, and improved inference engines promise lower latency and higher throughput, making real-time analysis feasible.
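To make “reducing the precision of the model’s weights” concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. Each weight is divided by a single scale (the largest absolute weight divided by 127) and rounded to an integer in [-127, 127]. The function names and toy weights are illustrative only. Real toolkits quantize per channel or per group, operate on tensors, and calibrate more carefully.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: one float scale, integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q, scale):
    """Recover approximate float weights from the stored integers and scale."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9]  # toy values, not real model weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_err)
```

Each weight now needs one byte instead of four (for float32), roughly a 4x memory reduction. The rounding error per weight is at most half the scale, which is the accuracy trade-off these techniques try to keep small.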

From Analysis to Action: Concrete Use Cases in Finance and Beyond

This architecture translates into concrete capabilities that could redefine professional workflows in high-stakes environments. The focus of GPT applications is shifting from simple content creation to complex reasoning and problem-solving, in fields ranging from healthcare to legal tech.

Financial Crime and Fraud Detection

Consider an anti-money laundering (AML) analyst investigating a complex web of shell corporations. Traditionally, this involves manually piecing together information from dozens of siloed databases, transaction logs, and unstructured reports.

- Real-World Scenario: Using a secure, RAG-enabled GPT agent, the analyst can now issue a natural-language query such as, “Summarize all transactions linked to entity X across our international branches in the last 180 days, cross-reference with public sanctions lists, and highlight any connections to individuals mentioned in our internal suspicious activity reports.” The agent can parse the structured transaction data, read the text of the reports, and generate a comprehensive summary within seconds, complete with links to the source documents. Such a system might even use the model’s vision capabilities to analyze corporate-structure diagrams in attached PDFs, a genuinely multimodal workflow.
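Behind a query like this, the agent would typically call a deterministic tool for the structured part and then use the LLM to narrate the result. The sketch below shows what such a tool might do: filter the entity’s transactions to a 180-day window and intersect its counterparties with a sanctions list and a set of SAR subjects. The `Transaction` fields, `screen_entity`, and the sample data are hypothetical. Exact-string matching on names is a simplification; real screening relies on fuzzy matching and entity resolution.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass(frozen=True)
class Transaction:
    tx_id: str
    booked: date
    sender: str
    receiver: str
    amount: float
    branch: str

def screen_entity(entity, transactions, sanctions, sar_subjects, as_of, window_days=180):
    """Gather the entity's transactions in the window and flag counterparties of interest."""
    cutoff = as_of - timedelta(days=window_days)
    linked = [t for t in transactions
              if entity in (t.sender, t.receiver) and cutoff <= t.booked <= as_of]
    counterparties = {t.receiver if t.sender == entity else t.sender for t in linked}
    return {
        "transactions": [t.tx_id for t in linked],
        "branches": sorted({t.branch for t in linked}),
        "sanctioned_counterparties": sorted(counterparties & set(sanctions)),
        "sar_counterparties": sorted(counterparties & set(sar_subjects)),
        "total_amount": sum(t.amount for t in linked),
    }

# Hypothetical data: t2 falls outside the window, t4 does not involve X.
txs = [
    Transaction("t1", date(2024, 5, 1), "X", "A", 5000.0, "LDN"),
    Transaction("t2", date(2023, 11, 1), "X", "B", 9000.0, "LDN"),
    Transaction("t3", date(2024, 6, 1), "C", "X", 2500.0, "SGP"),
    Transaction("t4", date(2024, 6, 2), "D", "E", 100.0, "NYC"),
]
print(screen_entity("X", txs, {"A", "B"}, {"C"}, as_of=date(2024, 6, 30)))
```

Keeping the filtering and matching in ordinary code means the numbers in the final summary come from the data, not from the model. The LLM’s job is to explain the result and link to the underlying records.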

Geopolitical Risk and Investment Strategy

For a global macro hedge fund, staying ahead of geopolitical events is paramount. The speed at which information can be synthesized and acted upon can mean the difference between profit and loss.

- Real-World Scenario: A custom GPT model is continuously fed a stream of real-time data: global news wires, satellite imagery analysis, shipping-lane data, and diplomatic communications. The model is fine-tuned to identify patterns that correlate with market volatility. It could flag a subtle shift in naval patrol patterns in a key strait, cross-reference it with a change in tone in state-sponsored media, and alert portfolio managers to a heightened risk of supply-chain disruption for specific commodities. This exemplifies the broader shift toward predictive, proactive intelligence gathering.
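The “cross-reference two weak signals” idea can be illustrated with a simple statistical stand-in. This is not the fine-tuned model described above. The sketch computes a z-score for each signal’s latest value against its own history and raises an alert only when at least two signals are anomalous at the same time. The signal names, toy series, threshold of 3.0, and `min_signals` of 2 are all hypothetical choices.

```python
from statistics import mean, stdev

def zscore_latest(series):
    """z-score of the most recent observation relative to the preceding history."""
    history, latest = series[:-1], series[-1]
    if len(history) < 2:
        return 0.0
    sd = stdev(history)
    return 0.0 if sd == 0 else (latest - mean(history)) / sd

def joint_alert(signals, threshold=3.0, min_signals=2):
    """Alert only when several independent signals are anomalous at once."""
    flagged = sorted(name for name, series in signals.items()
                     if abs(zscore_latest(series)) >= threshold)
    return {"alert": len(flagged) >= min_signals, "flagged": flagged}

signals = {
    "naval_patrols": [10, 11, 9, 10, 12, 10, 11, 25],            # toy daily counts
    "media_tone": [0.1, 0.0, 0.1, 0.05, 0.0, 0.1, 0.05, -0.9],    # toy sentiment scores
    "shipping_volume": [100, 102, 98, 101, 99, 100, 103, 101],    # toy daily volumes
}
print(joint_alert(signals))
```

Requiring agreement between independent sources is one simple way to trade a few missed events for far fewer false alarms reaching portfolio managers.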

The Rise of AI Agents and Autonomous Systems

The longer-term trajectory points toward sophisticated AI agents: not just chatbots, but systems that can carry out multi-step tasks. An agent might be tasked with monitoring a portfolio and, if a risk threshold is breached, automatically execute a pre-approved hedging strategy, generate a compliance report, and notify the relevant stakeholders. Building such systems demands close attention to ethics and safety, with robust human-in-the-loop oversight and clear operational boundaries to prevent unintended consequences. Bias and fairness become critically important here, since a flawed model embedded in automated financial decisions can propagate errors systemically.
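The “clear operational boundaries” idea can be sketched as a single monitoring step. The agent may act only when a threshold is breached. It may choose only from a fixed catalogue of pre-approved strategies. It passes through an approval gate before executing, and every outcome produces a notification. `HedgeAction`, `PRE_APPROVED`, and the `approve`/`execute`/`notify` callables are hypothetical placeholders for real trading, approval, and messaging systems. In a more automated setup, `approve` could be a pre-authorized policy for small, reversible actions rather than a person.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class HedgeAction:
    name: str
    notional: float

# Hypothetical catalogue of pre-approved strategies; the agent cannot invent new ones.
PRE_APPROVED = {
    "index_put_overlay": HedgeAction("index_put_overlay", 1_000_000.0),
}

def agent_step(risk_metric: float, threshold: float, strategy: str,
               approve: Callable[[HedgeAction], bool],
               execute: Callable[[HedgeAction], None],
               notify: Callable[[str], None]) -> Optional[dict]:
    """One monitoring cycle: check the threshold, then act only within fixed boundaries."""
    if risk_metric <= threshold:
        return None  # within limits: do nothing
    action = PRE_APPROVED.get(strategy)
    if action is None:
        notify(f"Risk {risk_metric:.2f} > {threshold:.2f}; '{strategy}' is not pre-approved. Escalating.")
        return {"executed": False, "reason": "strategy not pre-approved"}
    if not approve(action):  # human-in-the-loop gate
        notify(f"Reviewer declined {action.name}.")
        return {"executed": False, "reason": "declined by reviewer"}
    execute(action)
    report = (f"Compliance note: executed {action.name} (notional {action.notional:,.0f}) "
              f"after risk {risk_metric:.2f} exceeded threshold {threshold:.2f}.")
    notify(report)
    return {"executed": True, "report": report}
```

Because the strategy catalogue and the approval gate sit outside the model, a mistaken or manipulated model output can at worst trigger an escalation, not an unvetted trade.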

A Pragmatic Approach: Best Practices and Common Pitfalls

As organizations rush to adopt these powerful technologies, a pragmatic and cautious approach is essential. The difference between a successful deployment and a costly failure often lies in adhering to a set of core principles and being aware of potential pitfalls.

Best Practices for Secure Deployment

To navigate this complex landscape, organizations should prioritize several key areas. First, data governance is paramount: strict role-based access controls must be enforced by the platform, not the model. Second, rigorous model validation and continuous monitoring against internal benchmarks are necessary to test for accuracy, bias, and performance degradation. Third, prioritize explainability; systems built on RAG are inherently more transparent because their outputs can be traced back to source documents. Finally, always maintain meaningful human oversight. For critical decisions, the AI should serve as a co-pilot that augments human judgment rather than replacing it, a central theme in ongoing regulatory discussions.
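Continuous monitoring against an internal benchmark can be as simple as rerunning a fixed set of cases after every model or data change and comparing the score with a recorded baseline. The sketch uses normalized exact-match accuracy, a crude metric chosen for brevity. `EvalCase`, `check_regression`, the tolerance of 0.02, and the toy model are hypothetical. Bias testing would extend this by reporting the same score separately for each relevant subgroup of cases.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected: str

def exact_match_accuracy(model: Callable[[str], str], cases) -> float:
    """Share of cases where the model's answer matches the expected answer (case-insensitive)."""
    if not cases:
        raise ValueError("benchmark is empty")
    hits = sum(model(c.prompt).strip().lower() == c.expected.strip().lower() for c in cases)
    return hits / len(cases)

def check_regression(model, cases, baseline: float, tolerance: float = 0.02) -> dict:
    """Flag degradation when accuracy falls more than `tolerance` below the baseline."""
    acc = exact_match_accuracy(model, cases)
    return {"accuracy": acc, "baseline": baseline, "degraded": acc < baseline - tolerance}

# Toy benchmark and a lookup-table "model" standing in for the deployed LLM.
cases = [EvalCase("q1", "A"), EvalCase("q2", "B"), EvalCase("q3", "C"), EvalCase("q4", "D")]
answers = {"q1": "a", "q2": "B ", "q3": "C", "q4": "X"}
print(check_regression(answers.get, cases, baseline=0.80))
```

Running this in the deployment pipeline turns “performance degradation” from a vague worry into a concrete gate: a release that falls below the baseline is blocked or sent for review.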

Common Pitfalls to Avoid

Several traps can derail a high-stakes AI initiative. The most common is automation bias: the tendency of people to over-trust the output of an automated system. Another significant risk is data poisoning, in which bad or malicious data is fed into the system and leads to flawed or dangerous conclusions. Organizations must also avoid scope creep, keeping models specialized for specific tasks instead of trying to build a single, monolithic “do-everything” AI. Finally, ignoring the broader ecosystem, including competing model providers and the open-source community, can lead to technological dead ends. Staying informed about the entire landscape, from new tools to new research, is crucial for long-term success.
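One basic defense against data poisoning is to check provenance before anything enters the knowledge base. The sketch assumes, hypothetically, that each trusted source system publishes a SHA-256 digest alongside every document. It rejects documents from sources not on an allowlist and documents whose content does not match the published digest. `IncomingDoc`, `TRUSTED_SOURCES`, and `admit` are illustrative names. This catches unknown sources and tampering in transit. It does not catch a trusted source that publishes bad data, which still calls for content review and monitoring.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class IncomingDoc:
    source: str
    text: str
    sha256: str  # digest assumed to be published by the source system

# Hypothetical allowlist of systems permitted to feed the knowledge base.
TRUSTED_SOURCES = {"core-banking", "compliance-library"}

def admit(doc: IncomingDoc) -> tuple:
    """Return (accepted, reason); only provenance-checked, unaltered documents are indexed."""
    if doc.source not in TRUSTED_SOURCES:
        return False, "untrusted source"
    if hashlib.sha256(doc.text.encode("utf-8")).hexdigest() != doc.sha256:
        return False, "content does not match published digest"
    return True, "ok"
```

Rejected documents would go to a quarantine queue for human review rather than being silently dropped, so that a spike in rejections can itself be investigated.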

Conclusion: The Dawn of a New Analytical Paradigm

The integration of advanced GPT models into secure, enterprise-grade platforms represents a watershed moment for the finance and intelligence sectors. We are moving beyond the era of generic AI tools and into a new age of specialized, secure, and auditable AI co-pilots and agents. This convergence of cutting-edge AI with robust data governance is unlocking capabilities that were once the realm of science fiction, allowing for unprecedented speed and depth in analysis. The journey is complex, fraught with technical and ethical challenges, but the trajectory is clear. For organizations that can successfully navigate this new frontier, the ability to transform vast amounts of data into decisive, strategic action will provide a formidable competitive and operational advantage for years to come.