The New Silicon Brains: A Cambrian Explosion in Hardware for GPT Models

The Accelerating Arms Race for AI Dominance

We are living through a pivotal moment in the history of computing. The insatiable demand for larger, more powerful generative AI, from GPT-3.5 to the colossal scale of GPT-4 and the anticipated GPT-5, has ignited a fierce and fascinating hardware arms race. While NVIDIA’s GPUs have been the undisputed workhorses of the AI revolution, their dominance is now being challenged by a “Cambrian explosion” of novel architectures and specialized silicon. The computational cost of training and running these massive models has created a powerful incentive for innovation, pushing tech giants, agile startups, and research labs to rethink the very foundations of processing. This article surveys the latest GPT hardware news, exploring the emerging landscape of AI accelerators, the software ecosystems that enable them, and the implications for the future of artificial intelligence, from cloud data centers to the edge.

Section 1: The Reign of the GPU and the Seeds of Change

To understand where we’re going, we must first understand the current landscape. The rise of large language models is inextricably linked to the evolution of the Graphics Processing Unit (GPU). Originally designed for rendering complex 3D graphics in gaming, GPUs proved to be exceptionally well-suited for the parallel matrix multiplications and tensor operations that form the bedrock of deep learning.

NVIDIA’s CUDA-Powered Kingdom

NVIDIA’s strategic foresight in developing the CUDA (Compute Unified Device Architecture) platform more than a decade ago created a powerful moat. CUDA provided a relatively accessible programming model that allowed developers to unlock the parallel processing power of GPUs for general-purpose computing. This software ecosystem became the de facto standard for AI research and development. Chips like the A100 and the more recent H100 Tensor Core GPUs are marvels of engineering, packing thousands of cores and specialized hardware (Tensor Cores) designed specifically to accelerate the mixed-precision calculations common in GPT training. Key specifications that underscore their power include:

- HBM (High Bandwidth Memory): Modern GPUs use HBM2e or HBM3, providing memory bandwidth of roughly 2 to 3 TB/s. This is critical for feeding the processing cores with the massive datasets and model parameters that scaling GPT models requires.

- Interconnects: Technologies like NVLink and NVSwitch allow multiple GPUs to be connected into a single, massive accelerator, enabling the training of models with hundreds of billions or even trillions of parameters.

- Tensor Cores: These specialized units dramatically speed up matrix operations, which can account for over 90% of the computation in a Transformer model, the core of the GPT architecture.
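
To make the memory-bandwidth point concrete, here is a rough back-of-the-envelope sketch. It assumes a simplified model that is not from the article: when generating text for a single user, every model weight is read from memory once per token, so bandwidth divided by model size gives an upper bound on tokens per second. The function name and the example figures (70 billion parameters, 16-bit weights, 3 TB/s) are hypothetical, and the estimate ignores the KV cache, batching, and compute time.

```python
def max_decode_tokens_per_second(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Upper bound on single-stream decode speed when weight reads dominate.

    Simplifying assumption: each generated token requires reading every
    weight from memory exactly once, and nothing else limits throughput.
    """
    if min(num_params, bytes_per_param, bandwidth_bytes_per_s) <= 0:
        raise ValueError("all inputs must be positive")
    weight_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical example: 70e9 parameters stored in 16 bits (2 bytes) at 3 TB/s.
example = max_decode_tokens_per_second(70e9, 2, 3e12)
print(f"{example:.1f} tokens/s upper bound")
```

Under these assumptions the weights alone occupy 140 GB, more than a single 80 GB GPU can hold, which is one reason the interconnects listed above matter as much as raw bandwidth.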

However, this dominance comes at a cost. High demand, supply chain constraints, and premium pricing have created a significant bottleneck for many organizations. Furthermore, while GPUs are excellent general-purpose accelerators, the extreme specialization of LLM workloads has opened the door for more purpose-built, and potentially more efficient, hardware solutions.

Section 2: The Cambrian Explosion of AI Accelerators

The market is now fragmenting as new players enter the fray, each with a unique architectural philosophy aimed at solving the AI computation problem more efficiently. This diversification is creating a rich and competitive ecosystem, a true “Cambrian explosion” of silicon.

Custom ASICs from the Hyperscalers

Cloud giants, who operate LLMs at an unimaginable scale, were the first to move towards custom hardware. By designing their own Application-Specific Integrated Circuits (ASICs), they can optimize for their specific software stacks and workloads, driving down operational costs and reducing their reliance on third-party vendors.

- Google’s TPU (Tensor Processing Unit): Now in its fifth generation, the TPU is a prime example. TPUs are designed from the ground up for TensorFlow and JAX workloads. Their architecture, featuring a large systolic array for matrix multiplication, is hyper-optimized for neural network training and inference, and it has made waves in GPT benchmark news.

- Amazon’s Trainium and Inferentia: AWS has developed two distinct chip families. Trainium is focused on high-performance, cost-effective model training, while Inferentia is designed for high-throughput, low-latency inference. This specialization highlights a key trend: the divergence of hardware needs for training versus deployment.

- Microsoft’s Maia 100: Microsoft has also joined the race with its own AI accelerator, designed to power its Azure and OpenAI workloads, a significant development for anyone following OpenAI’s GPT models.
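
The systolic array mentioned in the TPU entry above can be illustrated with a toy simulation. This is a minimal sketch of one textbook variant (an output-stationary array, where each processing element keeps one output value and operands flow past it), not a description of Google’s actual design. The function name is hypothetical.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    Processing element (i, j) accumulates output c[i][j]. Rows of `a`
    enter from the left and move one step right per cycle; columns of
    `b` enter from the top and move one step down per cycle. Inputs are
    skewed in time so matching operands meet at the right element.
    """
    n, k_dim, m = len(a), len(b), len(b[0])
    a_reg = [[0] * m for _ in range(n)]  # values currently moving right
    b_reg = [[0] * m for _ in range(n)]  # values currently moving down
    acc = [[0] * m for _ in range(n)]    # per-element accumulators
    cycles = n + m + k_dim - 2           # cycles until the last operands meet

    for t in range(cycles):
        # Shift every row right; row i starts receiving data at cycle i.
        for i in range(n):
            feed = a[i][t - i] if 0 <= t - i < k_dim else 0
            a_reg[i] = [feed] + a_reg[i][:-1]
        # Shift every column down; column j starts receiving data at cycle j.
        top = [b[t - j][j] if 0 <= t - j < k_dim else 0 for j in range(m)]
        b_reg = [top] + b_reg[:-1]
        # Each element multiplies the two operands it currently holds.
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles)
```

The point of the design is that each operand is fetched from memory once and then reused as it passes from neighbor to neighbor, so the array does many multiply-accumulates per memory access.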

The Innovative Upstarts

Beyond the tech titans, a host of well-funded startups are introducing radical new designs that challenge conventional thinking.

- Cerebras Systems: Cerebras famously builds a “Wafer-Scale Engine” (WSE), a single chip the size of an entire silicon wafer. The WSE-3 boasts 4 trillion transistors and 900,000 AI-optimized cores. By keeping all compute and memory on a single piece of silicon, Cerebras aims to eliminate the data movement bottlenecks that plague multi-chip systems, offering a distinctive answer to the problem of scaling GPT models.

- Groq: Groq has developed the Language Processing Unit (LPU), an inference-specific chip designed for deterministic low latency. Unlike GPUs, which process work in parallel batches, the LPU operates more like a synchronized assembly line. This results in very high tokens-per-second performance for a single user, a critical metric for real-time GPT chatbots and agents. Groq’s public demos have shown staggering inference speeds, with direct consequences for GPT latency and throughput.

- SambaNova Systems: SambaNova’s reconfigurable dataflow architecture allows the hardware to adapt to the specific data flow of a given model, promising high performance across a variety of AI workloads, from GPT vision models to traditional language tasks.

The Next Frontier: Photonics and Analog Computing

Looking further ahead, some companies are exploring non-digital, non-electronic computing paradigms to shatter current efficiency barriers. Companies like Lightmatter and Lightelligence are developing photonic processors that compute using light instead of electrons. This approach promises orders-of-magnitude improvements in speed and power efficiency by sidestepping the electrical resistance and heat generation that limit silicon electronics. This research could shape the future of GPT models, potentially enabling AI at unprecedented scale and complexity.

Section 3: System-Level Implications and the Software Challenge

A revolutionary chip is useless without the software to run it and the system to support it. The battle for AI hardware supremacy is being fought as much in the compiler and the data center rack as it is on the silicon wafer.

Breaking the CUDA Monopoly

The biggest hurdle for any new hardware entrant is NVIDIA’s CUDA. The vast ecosystem of libraries, developer tools, and community expertise built around CUDA creates immense inertia. To compete, challengers must offer not just superior hardware, but also a compelling software stack. Open standards and frameworks are emerging as the primary weapons in this fight:

- OpenXLA and Mojo: OpenXLA is a compiler project that aims to make AI models portable across different hardware backends (CPUs, GPUs, TPUs, etc.). Mojo, a new programming language from Modular, aims to combine the usability of Python with the performance of C++, providing a powerful alternative for AI development.

- Triton: Originally from OpenAI, Triton is a Python-based language that enables researchers to write highly efficient GPU code, often matching the performance of hand-tuned libraries. Its growing adoption is a key development in the GPT ecosystem.

The success of these open initiatives is crucial for fostering a healthy, competitive hardware market and preventing vendor lock-in, a major concern for companies investing heavily in GPT deployment.

The Importance of Software-Hardware Co-Design

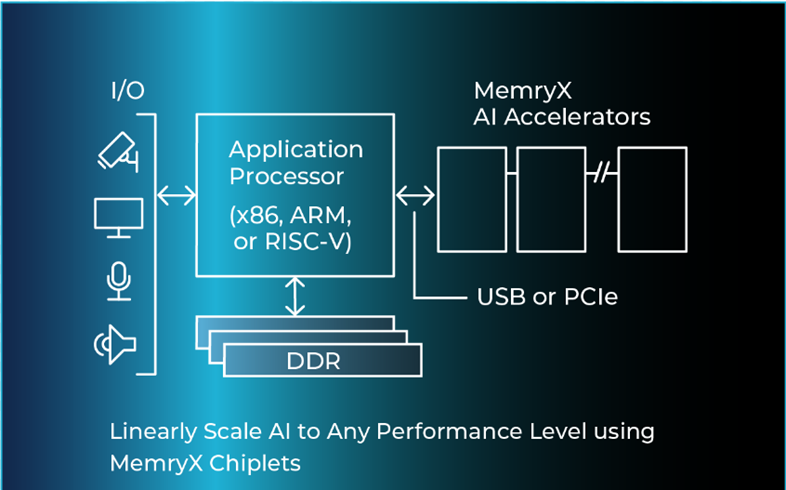

The most effective AI systems are born from a tight integration of hardware and software. This is where optimization techniques become critical. Techniques like quantization (reducing the precision of model weights, for example from 32-bit floats to 8-bit integers) and distillation (training a smaller “student” model to mimic a larger “teacher” model) can dramatically reduce a model’s memory footprint and computational requirements. These software optimizations allow massive models to run on smaller, more efficient hardware, a critical enabler for GPT at the edge and in IoT devices. The hardware itself is now being designed with these techniques in mind, with specialized support for low-precision arithmetic.
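
As a rough illustration of the quantization idea described above, the sketch below applies symmetric per-tensor 8-bit quantization to a small list of weights. This is one simple scheme chosen for clarity, and production toolchains typically use more elaborate variants such as per-channel scales or calibration data. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.42, -1.0, 0.003, 0.77]  # hypothetical 32-bit float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage cost: 4 bytes per float32 weight versus 1 byte per int8 weight.
print(q, f"worst-case error {worst_error:.5f}")
print(f"{4 * len(weights)} bytes as float32, {len(weights)} bytes as int8")
```

Each restored weight differs from the original by at most half the scale factor, which is the accuracy traded away for a fourfold reduction in memory.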

Section 4: Practical Considerations and Best Practices

With this explosion of options, how should developers, researchers, and businesses choose the right hardware? The answer depends entirely on the specific application and goals.

Training vs. Inference: A Tale of Two Workloads

The hardware requirements for training an LLM are vastly different from those for running it in production (inference).

- Training: This is a “scale-out” problem. It requires massive clusters of powerful accelerators with extremely high-speed interconnects and vast amounts of high-bandwidth memory. The goal is to minimize the time-to-train, since a single run can take weeks or months. This is the domain of NVIDIA’s H100 clusters, Google’s TPU Pods, and Cerebras’s CS-3 systems. It’s a critical consideration for teams fine-tuning GPT models or building custom ones.

- Inference: This is a serving problem: responding to millions of users concurrently while holding each request to a tight latency budget. The key metrics are latency (often measured as time to first token), throughput (tokens per second), and total cost of ownership (TCO). This is where specialized hardware like Groq’s LPU and Amazon’s Inferentia, as well as quantized models running on more modest hardware, shine. For many real-world GPT applications, from finance to healthcare, low-latency inference is non-negotiable.
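
The inference metrics named above can be computed from simple timestamps. The sketch below assumes you have recorded when a request was sent and when each output token arrived. The function name, the field names, and the choice to exclude the first token from decode throughput are illustrative assumptions, not a standard definition.

```python
def summarize_stream(request_start, token_times):
    """Compute basic latency and throughput figures for one streamed response.

    request_start: time the request was sent, in seconds.
    token_times:   arrival time of each output token, in seconds, ascending.
    """
    if not token_times:
        raise ValueError("need at least one token timestamp")
    time_to_first_token = token_times[0] - request_start
    total_latency = token_times[-1] - request_start
    # Decode throughput counts only tokens after the first, so the initial
    # prompt-processing delay does not distort the steady-state rate.
    decode_span = token_times[-1] - token_times[0]
    decode_tokens_per_s = (
        (len(token_times) - 1) / decode_span if decode_span > 0 else float("nan")
    )
    return {
        "time_to_first_token_s": time_to_first_token,
        "total_latency_s": total_latency,
        "decode_tokens_per_s": decode_tokens_per_s,
    }


# Hypothetical timestamps: the first token arrives after 0.5 s,
# then one token arrives every 0.1 s.
print(summarize_stream(0.0, [0.5, 0.6, 0.7, 0.8, 0.9]))
```

Reporting time to first token separately from decode speed matters because different hardware can be strong on one and weak on the other.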

Tips for Navigating the Hardware Maze

When evaluating hardware for your AI workloads, consider the following:

- Define Your Primary Workload: Are you primarily training, fine-tuning, or running inference? Your answer will immediately narrow the field of suitable hardware.

- Analyze the Software Ecosystem: Does the hardware support your preferred frameworks (PyTorch, TensorFlow, JAX)? How mature is its compiler and set of libraries? Migrating from the CUDA ecosystem can be a significant engineering effort.

- Benchmark for Your Model: Don’t rely on marketing claims. Benchmark performance on your specific model architecture and size. A chip that excels at one task (e.g., computer vision) may not be the best for another (e.g., language generation).

- Consider Total Cost of Ownership (TCO): Look beyond the initial hardware cost. Factor in power consumption, cooling, and the engineering resources required to support the platform. Power efficiency is a major driver of the push toward more efficient GPT hardware.

- Future-Proofing: Consider the hardware vendor’s roadmap. How will their architecture scale to support the next generation of models, such as the anticipated GPT-5?
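
The TCO tip above can be turned into a simple comparison figure: cost per million tokens served. The sketch below is a deliberately simplified model under stated assumptions: straight-line hardware cost over a fixed lifetime, constant power draw, cooling folded into a single overhead multiplier, and a one-off engineering cost. Every parameter name and every number in the example is hypothetical and should be replaced with your own measurements and quotes.

```python
def cost_per_million_tokens(
    hardware_cost,         # purchase price of the system
    lifetime_years,        # period over which the hardware is used
    power_watts,           # average electrical draw of the system
    price_per_kwh,         # electricity price
    cooling_overhead,      # multiplier on power for cooling (1.0 = none)
    tokens_per_second,     # measured throughput on your own model
    utilization,           # fraction of time spent serving traffic (0 to 1]
    engineering_cost=0.0,  # one-off cost of porting and support
):
    """Estimate total cost per million tokens over the hardware lifetime."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    hours = lifetime_years * 365 * 24
    energy_kwh = power_watts / 1000 * hours * cooling_overhead
    total_cost = hardware_cost + engineering_cost + energy_kwh * price_per_kwh
    total_tokens = tokens_per_second * utilization * hours * 3600
    return total_cost / total_tokens * 1_000_000


# Hypothetical comparison of two systems under the same workload.
system_a = cost_per_million_tokens(30000, 3, 700, 0.12, 1.4, 2500, 0.6)
system_b = cost_per_million_tokens(18000, 3, 400, 0.12, 1.4, 1200, 0.6, 20000)
print(f"A: {system_a:.4f} per million tokens, B: {system_b:.4f} per million tokens")
```

The throughput input should come from benchmarking your own model, as recommended above, because vendor figures rarely match a specific architecture and batch size.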

Conclusion: The Dawn of a New Computing Era

The era of a single, dominant architecture for AI is drawing to a close. The Cambrian explosion in GPT hardware is a direct response to the monumental success and computational appetite of large language models. We are moving from a world of general-purpose acceleration to one of purpose-built, highly specialized silicon designed for specific AI workloads. This intense competition is fantastic news for the entire field. It will drive down costs, democratize access to powerful AI, and unlock new applications we can only begin to imagine. From massive, wafer-scale training engines to hyper-efficient inference chips at the edge, the diversity of these new silicon brains will be the engine that powers the next wave of the AI revolution. Keeping a close watch on GPT hardware news is no longer just for chip designers; it is essential for anyone building, deploying, or investing in the future of artificial intelligence.