Breaking the Language Barrier: A Deep Dive into GPT’s Multilingual Revolution

The Dawn of a Truly Global AI: Understanding the Multilingual Leap in GPT Models

For decades, the dream of a universal translator has been a cornerstone of science fiction, promising a world without linguistic divides. Today, that fiction is rapidly becoming fact, driven by major advances in large language models (LLMs). We are witnessing a pivotal shift in artificial intelligence: away from models that simply translate between English and other languages, and toward models with a native, nuanced understanding of a wide spectrum of human communication. This evolution is more than an incremental update; it’s a foundational change that opens up new potential for global collaboration, commerce, and creativity. The latest GPT models signal a new era in which AI is not just bilingual but truly multilingual, capable of reasoning and generating content across diverse languages with remarkable speed and accuracy. This article explores the technical underpinnings of this revolution, its real-world applications, and the future it heralds for developers, businesses, and society at large.

Section 1: The Evolution of Multilingual AI: From Clunky Translation to Native Fluency

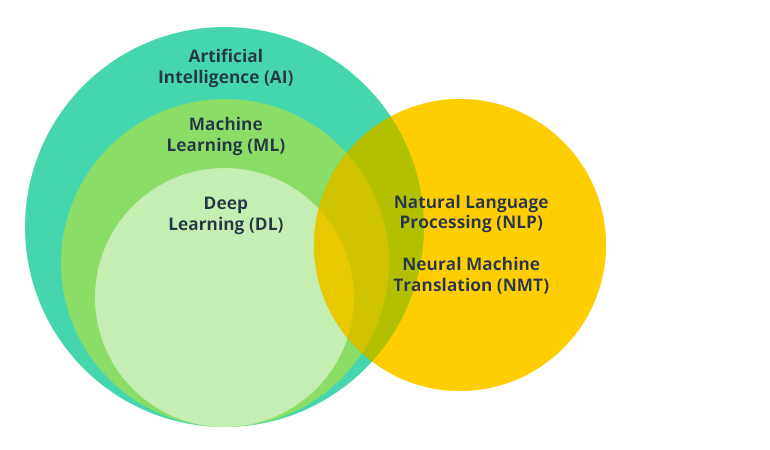

The journey to create truly multilingual AI has been long and complex. Early approaches were dominated by Statistical Machine Translation (SMT), which analyzed massive bilingual text corpora to estimate how likely a word or phrase in one language was to correspond to one in another. While functional, SMT often produced literal, grammatically awkward translations that lacked context and nuance. The advent of Neural Machine Translation (NMT), first with recurrent neural networks (RNNs) and later with the Transformer architecture, marked a significant improvement, leading to more fluid and context-aware translations.

From English-Centric to Globally Aware

Early generative models, including initial versions of GPT, were largely English-centric. Their training data was predominantly in English, and their tokenizers (the systems that break text into the numerical pieces a model can process) were optimized for English text in the Latin alphabet. This created significant inefficiencies when dealing with other languages. For example, a single character in a language like Japanese or Hindi might be broken into multiple tokens, making processing slower, more expensive, and less contextually accurate. GPT-3.5 and GPT-4 showed steady improvement in this area, but more recent models represent a much larger step forward.
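To make the character-splitting problem concrete, here is a minimal sketch. It assumes a byte-level tokenizer (the style used by early GPT tokenizers) that has learned no merges for a given character, so the character falls back to one token per UTF-8 byte. That is a worst case rather than a measurement of any real model, and the helper name `utf8_bytes_per_char` is invented for illustration.

```python
# Count the UTF-8 bytes behind each character. Under the assumption above,
# a character with no learned merges costs roughly one token per byte.
samples = {
    "English": "a",
    "Hindi": "न",
    "Japanese": "語",
}


def utf8_bytes_per_char(text: str) -> list:
    """Return the number of UTF-8 bytes used by each character in text."""
    return [len(ch.encode("utf-8")) for ch in text]


for language, text in samples.items():
    print(language, utf8_bytes_per_char(text))
# English [1]
# Hindi [3]
# Japanese [3]
```

A tokenizer trained on more non-English text can learn merges that collapse those bytes back into a single token, which is the improvement discussed in the rest of this section.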

The Tokenization Bottleneck and Its Solution

The core of this new multilingual proficiency lies in solving the tokenization problem. Newer models employ far more efficient tokenizers trained on a diverse, global dataset, so a sentence in Korean or Arabic now requires significantly fewer tokens than it did previously. This is not a minor tweak; it has profound implications:

- Lower Latency & Higher Throughput: Fewer tokens mean faster processing, which is critical for real-time applications like live translation or interactive chatbots. This makes global-scale applications more feasible.

- Reduced Costs: For developers using APIs, costs are often tied to token count. A more efficient tokenizer makes building multilingual applications on GPT APIs more economically viable.

- Improved Accuracy: When a model can represent complex characters and concepts with fewer, more meaningful tokens, its ability to grasp grammar, syntax, and idiomatic expressions in that language improves. This is a key driver behind recent gains in language support.
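The cost point above comes down to simple arithmetic. The sketch below assumes a flat per-1,000-token price; the price and the token counts are hypothetical placeholders chosen only to show the calculation, not figures from any provider or tokenizer.

```python
def estimate_cost(token_count: int, price_per_1k_tokens: float) -> float:
    """Cost of processing token_count tokens at a flat per-1,000-token price."""
    return token_count / 1000 * price_per_1k_tokens


def savings_ratio(old_tokens: int, new_tokens: int) -> float:
    """Fraction of tokens (and therefore cost) saved by the newer tokenizer."""
    return 1 - new_tokens / old_tokens


# Hypothetical example: the same sentence under an older and a newer tokenizer.
OLD_TOKENS = 120      # assumed count, for illustration only
NEW_TOKENS = 40       # assumed count, for illustration only
PRICE_PER_1K = 0.01   # assumed price in arbitrary currency units

old_cost = estimate_cost(OLD_TOKENS, PRICE_PER_1K)
new_cost = estimate_cost(NEW_TOKENS, PRICE_PER_1K)
print(f"old: {old_cost:.6f}  new: {new_cost:.6f}  "
      f"saved: {savings_ratio(OLD_TOKENS, NEW_TOKENS):.0%}")
```

Because the price is flat per token, the fraction of cost saved equals the fraction of tokens saved, whatever the actual price is.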

This shift from an English-first model with translation capabilities to a natively multilingual architecture is the fundamental change enabling the next generation of global AI applications.

Section 2: Under the Hood: The Architectural Shifts Driving Multilingual Proficiency

The enhanced multilingual capabilities of modern GPT models are not the result of a single innovation but a confluence of advancements in architecture, training techniques, and data processing. Understanding these technical details is crucial for appreciating the depth of this leap forward and for leveraging its full potential.

A Unified, Multimodal Architecture

A key architectural development is the move toward a single, unified model that natively processes information across text, audio, and images. This is a significant departure from older systems that would pipe the output of one model (e.g., speech-to-text) into another (text-to-text). By training on a vast dataset that includes spoken language, the model can learn not just the words but also the tone, rhythm, and emotional nuance inherent in speech. This allows it to understand and generate language with greater fidelity, capturing subtleties that are often lost in pure text. This integrated approach underpins recent advances in vision and audio processing, creating a more holistic understanding of human communication in any language.

Advanced Training Techniques and Global Datasets

The quality of an AI model is inextricably linked to the data it’s trained on. Recent training work reflects a deliberate focus on curating massive, high-quality, and diverse multilingual datasets. This goes beyond simply scraping the web. It involves sophisticated data filtering and balancing to ensure that less-resourced languages are adequately represented, mitigating some of the biases inherent in internet data. Furthermore, techniques like curriculum learning, where the model is first trained on simpler concepts before moving to more complex ones, are being applied across languages. This focus on data quality is a major theme in ongoing research and bears directly on bias and fairness, since it promotes more equitable performance across different linguistic and cultural groups.
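One widely used way to balance languages is exponent-smoothed sampling: each language is sampled in proportion to its share of the corpus raised to a power `alpha` between 0 and 1. The sketch below illustrates that general idea only; the article does not say which balancing method GPT models use, and the corpus sizes and the function name `smoothed_sampling_probs` are hypothetical.

```python
def smoothed_sampling_probs(sizes: dict, alpha: float) -> dict:
    """Sampling probability per language, proportional to (corpus share) ** alpha.

    alpha = 1.0 keeps the raw proportions; smaller values flatten the
    distribution, so low-resource languages are sampled more often.
    """
    total = sum(sizes.values())
    weights = {lang: (n / total) ** alpha for lang, n in sizes.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


# Hypothetical document counts per language, for illustration only.
corpus_sizes = {"en": 1_000_000, "hi": 50_000, "sw": 5_000}

for alpha in (1.0, 0.3):
    probs = smoothed_sampling_probs(corpus_sizes, alpha)
    summary = ", ".join(f"{lang}={p:.3f}" for lang, p in probs.items())
    print(f"alpha={alpha}: {summary}")
```

Lowering `alpha` trades some English exposure for more frequent sampling of the smaller languages; setting it too low risks repeating the same scarce documents many times.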

Efficiency and Optimization for Real-World Deployment

A powerful model is only useful if it can be deployed efficiently. Much current work focuses on making these colossal models faster and cheaper to run. Techniques like quantization (reducing the precision of the model’s numerical weights) and distillation (training a smaller, faster model to mimic a larger one) are becoming standard practice. These optimizations are critical for enabling real-world use cases. Faster inference means that complex multilingual reasoning can happen in near real-time, opening the door for applications on less powerful hardware, including edge devices. Combined with more capable hardware and new inference engines, these optimizations are making advanced AI accessible to a much broader audience.
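As a rough illustration of quantization, the sketch below applies symmetric 8-bit quantization to a short list of weights in plain Python. It is a toy version under simplifying assumptions (one scale for the whole list, no calibration data), not the scheme any particular GPT deployment uses; the function names and the sample weights are invented for the example.

```python
def quantize_int8(weights: list) -> tuple:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list, scale: float) -> list:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # illustrative values only
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per weight is bounded by half the scale.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, f"scale={scale:.6f}", f"max_error={max_error:.6f}")
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the price of a small, bounded rounding error.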

Section 3: Real-World Impact: Unleashing Global Potential with Advanced GPTs

The transition to natively multilingual GPT models is unlocking a wave of innovation across virtually every industry. By breaking down language barriers, these technologies are fostering global collaboration, personalizing user experiences, and creating entirely new markets. The range of emerging applications shows a landscape ripe with opportunity.

Transforming Global Industries

- Healthcare: In healthcare, clear communication can be a matter of life and death. Emerging applications let doctors communicate with patients in their native language via a real-time AI intermediary, supporting accurate symptom descriptions and treatment instructions. This can significantly improve patient outcomes in multicultural regions.

- Finance: Financial firms are using advanced models to analyze global market sentiment by processing news, reports, and social media in dozens of languages simultaneously, which can provide a significant competitive edge.

- Legal Tech: The complexity of international law is a major hurdle for global business. Legal tech tools can perform cross-lingual document review, comparing contracts written in different languages and flagging discrepancies, which saves substantial manual work.

Empowering Education, Creativity, and Marketing

- Education: The potential in education is immense. Personalized AI tutors can explain complex scientific concepts to students in their native tongue, adapting vocabulary and examples to each student’s cultural context.

- Content Creation & Gaming: For creators, this technology is a game-changer. A single piece of creative work can be quickly and idiomatically localized for global audiences. In gaming, real-time translation for in-game chat is under development, allowing players from around the world to strategize and socialize seamlessly.

- Marketing: Hyper-personalization is the holy grail of marketing. Companies can now move beyond simple translation to craft culturally resonant marketing campaigns that speak directly to the values and nuances of each local market.

Navigating Ethical and Societal Challenges

With this great power comes great responsibility. Researchers working on AI ethics and safety are actively discussing the challenges. How do we prevent these models from perpetuating cultural stereotypes found in their training data? How do we ensure user data is handled with care, a central privacy concern? As governments take notice, regulation will become increasingly important, shaping the legal framework for deploying these powerful tools responsibly.

Section 4: Navigating the Multilingual Landscape: Best Practices and Future Horizons

For developers, businesses, and researchers, capitalizing on this multilingual revolution requires a strategic approach. It’s not enough to simply plug into an API; achieving the best results involves understanding the nuances of the technology and anticipating its future trajectory.

Best Practices for Implementation

When developing multilingual applications, it’s crucial to move beyond literal translation. Developers should focus on “transcreation”: adapting content to a specific culture, not just a language. This involves careful prompt engineering that provides cultural context. For instance, when creating a marketing campaign, a prompt might specify a formal tone for a German audience and a more casual, enthusiastic tone for a Brazilian one. Keeping up with the wider GPT ecosystem and using specialized tools can provide frameworks and guardrails for building more culturally aware applications. Furthermore, custom models and fine-tuning allow organizations to train models on their own domain-specific, multilingual data for better performance.
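The tone example above can be captured in a small prompt-building helper. This is a minimal sketch: the locale codes, the tone table, the wording of the prompt, and the function name `build_transcreation_prompt` are all illustrative assumptions, and the resulting string would still need to be sent to whichever model API an application uses.

```python
# Hypothetical mapping from locale to the tone the campaign should take.
TONE_BY_LOCALE = {
    "de-DE": "formal and precise",
    "pt-BR": "casual and enthusiastic",
}


def build_transcreation_prompt(product_brief: str, locale: str) -> str:
    """Build a prompt that asks for cultural adaptation, not literal translation."""
    tone = TONE_BY_LOCALE.get(locale, "neutral")
    return (
        f"Write marketing copy for the locale {locale}.\n"
        f"Tone: {tone}.\n"
        "Adapt idioms, examples, and references to the local culture "
        "instead of translating word for word.\n"
        f"Product brief: {product_brief}"
    )


print(build_transcreation_prompt("A reusable water bottle", "de-DE"))
print()
print(build_transcreation_prompt("A reusable water bottle", "pt-BR"))
```

Keeping the tone table separate from the prompt text makes it easy for local marketing teams to review and adjust the cultural guidance without touching code.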

Looking Ahead: The Future of Global AI

The pace of innovation is relentless. Speculation about GPT-5 suggests that even more profound capabilities are on the horizon. The trend is toward proactive, autonomous GPT agents that can understand a user’s goal and execute complex, multi-step tasks across different languages and platforms. We can also expect a greater push for on-device processing at the edge, which will enhance privacy and enable powerful AI applications in environments with limited connectivity, such as IoT devices. The open-source community will also play a vital role, with open-source alternatives and complementary technologies from competitors driving competition and innovation.

Conclusion: A More Connected World, Powered by AI

The recent advancements in the multilingual capabilities of GPT models represent a watershed moment in the history of artificial intelligence. We are moving from a world where AI learned to translate languages to one where AI understands them natively. This fundamental shift, driven by architectural innovations, superior training data, and a focus on efficiency, is not just a technical achievement; it is a catalyst for global connection. From making healthcare more accessible and education more personalized to enabling seamless international business and fostering cross-cultural creativity, the applications are as vast as human language itself. As we continue to explore this new frontier, a commitment to ethical development and responsible deployment will be paramount. The future of AI is not just intelligent; it is multilingual, and it is here.