The Great Shrink: Why GPT Efficiency is the New Frontier in AI

The Unseen Arms Race in AI: Moving Beyond Bigger Models

For the past several years, the dominant narrative in artificial intelligence has been one of scale. The release of successively larger and more capable models, from GPT-3 to GPT-4 and beyond, has captured the public imagination and driven rapid innovation. This “bigger is better” philosophy, central to recent coverage of GPT models, has been fueled by the observation that increasing parameter counts often leads to emergent abilities and superior performance. However, a powerful counter-current is now gaining momentum, shifting the focus from sheer size to sophisticated efficiency. The future of AI may not be confined to massive, energy-hungry data centers; it may also be distributed across billions of devices at the network’s edge.

This paradigm shift is driven by a convergence of critical needs: reducing computational costs, minimizing latency for real-time applications, enhancing user privacy, and enabling powerful AI on personal devices. The conversation is no longer just about what a model can do, but how efficiently it can do it. This emerging field explores everything from novel model architectures that challenge the Transformer’s dominance to optimization techniques that make today’s powerful models leaner and faster. This article examines why AI efficiency matters, surveys the techniques making it possible, and considers what it means for the future of GPT applications and the broader technology landscape.

Section 1: The Inevitable Reckoning with AI’s Scale Problem

The success of large language models (LLMs) like those in the GPT series has come at a staggering cost. Training and running these behemoths requires vast server farms, consuming immense amounts of electricity and capital. This reality presents several fundamental challenges that are forcing a pivot towards efficiency.

The Triple Constraint: Cost, Latency, and Environment

The first and most obvious hurdle is economic. The computational resources needed for training and inference are a significant barrier to entry, concentrating power in the hands of a few tech giants. The operational costs of services like ChatGPT are widely reported to be substantial, driven by constant demand on powerful GPUs. For businesses building on GPT APIs, per-request inference costs can quickly add up, making large-scale deployment economically unviable for many use cases.

Second, latency (the delay between a user’s query and the model’s response) is a critical factor for interactive applications. A conversational AI, a real-time translation service, or a gaming NPC powered by an LLM must respond almost instantly. A round trip to a distant data center adds network delay on top of inference time, degrading the user experience. This pressure to cut latency and raise throughput is pushing developers to process data closer to the source.

Finally, the environmental impact of AI is a growing concern. By some published estimates, the carbon emissions from training a single large model are comparable to those of hundreds of passenger transatlantic flights. As AI becomes more integrated into society, energy consumption on this scale becomes increasingly hard to sustain. The push for “Green AI” is not just an ethical consideration but a practical necessity for long-term growth.

The Privacy and Autonomy Imperative

Beyond the operational constraints, centralizing AI in the cloud creates significant privacy and data security risks. When sensitive personal, financial, or health data must be sent to a third-party server for processing, it becomes vulnerable to breaches and misuse. Recent debates over AI privacy and regulation highlight a growing demand for on-device processing. Running models locally (on a smartphone, laptop, or IoT device) keeps data under the user’s control. This is the core premise of edge AI: bringing AI capabilities directly to the user, enabling autonomy, offline functionality, and a much higher standard of privacy.

Section 2: The Efficiency Toolkit: New Architectures and Optimization Methods

Addressing the scale problem requires a two-pronged approach: designing fundamentally more efficient model architectures and developing techniques to shrink and accelerate existing ones. This is currently one of the most active and exciting areas of AI research.

Beyond Transformers: The Search for a New Foundation

The Transformer architecture, which underpins GPT-3.5 and GPT-4, has a key bottleneck: its self-attention mechanism has a computational cost (and, in naive implementations, a memory cost) that scales quadratically with the length of the input sequence, so doubling the input length roughly quadruples the attention work. This makes processing very long documents, codebases, or high-resolution images extremely resource-intensive. Recent architecture research is filled with alternatives:

- State Space Models (SSMs): Models like Mamba have gained significant attention for their ability to model long sequences at a cost that scales linearly with sequence length. Like an RNN, they carry a fixed-size “state” forward through the sequence at inference time, but their structured state updates (input-dependent, or “selective,” in Mamba’s case) can be trained in parallel and capture long-range dependencies far better than traditional RNNs, without the quadratic overhead of attention.

- xLSTM (Extended Long Short-Term Memory): A recent proposal from Sepp Hochreiter, co-inventor of the original LSTM, and colleagues aims to revitalize LSTM-based architectures. By introducing exponential gating and modified memory structures (a scalar-memory sLSTM and a matrix-memory mLSTM), xLSTM targets known limitations of classic LSTMs, such as their difficulty revising earlier storage decisions, limited memory capacity, and poor parallelizability, while aiming for performance competitive with Transformers at linear cost in sequence length. This makes it a notable potential competitor, since it challenges the very foundation on which current LLMs are built.
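To make the quadratic-versus-linear contrast concrete, here is a toy pure-Python sketch, not production code. `naive_attention` builds the full n × n score matrix that standard self-attention computes, while `linear_ssm_scan` is a simplified diagonal linear state-space recurrence that visits each token once and carries a fixed-size state. Both function names and the plain diagonal recurrence are illustrative assumptions: Mamba’s actual selective SSM makes its state-update parameters depend on the input and computes the scan with a hardware-aware parallel algorithm.

```python
import math
from typing import List, Tuple

Vector = List[float]


def naive_attention(q: List[Vector], k: List[Vector], v: List[Vector]) -> Tuple[List[Vector], int]:
    """Single-head scaled dot-product attention over lists of vectors.

    Builds the full len(q) x len(k) score matrix, so for self-attention
    (q, k, v of length n) work and memory grow quadratically with n.
    """
    d = len(q[0])
    scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k] for qi in q]
    out = []
    for row in scores:
        peak = max(row)  # subtract the max before exp for numerical stability
        weights = [math.exp(s - peak) for s in row]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Each output is a softmax-weighted average of the value vectors.
        out.append([sum(weights[t] * v[t][j] for t in range(len(v))) for j in range(len(v[0]))])
    return out, len(q) * len(k)  # outputs and number of score entries materialized


def linear_ssm_scan(x: List[float], a: Vector, b: Vector, c: Vector) -> Tuple[List[float], int]:
    """Toy diagonal linear state-space recurrence, one step per token:

        h_t = a * h_{t-1} + b * x_t   (elementwise over the state)
        y_t = sum(c * h_t)

    Work grows linearly with sequence length; the state has a fixed size.
    """
    h = [0.0] * len(a)
    ys = []
    for xt in x:
        h = [ai * hi + bi * xt for ai, hi, bi in zip(a, h, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys, len(h)  # outputs and size of the carried state


for n in (4, 8, 16):
    tokens = [[float(i % 3), 1.0] for i in range(n)]
    _, entries = naive_attention(tokens, tokens, tokens)
    _, state = linear_ssm_scan([t[0] for t in tokens], a=[0.9, 0.5], b=[1.0, 1.0], c=[0.5, 0.5])
    print(n, entries, state)  # entries is n * n; state stays at 2
```

Doubling the sequence length quadruples the number of stored attention scores, while the state carried by the recurrence stays the same size however long the input grows.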
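The exponential gating mentioned for xLSTM can be illustrated with a single scalar cell. The sketch below follows our reading of the sLSTM equations in the xLSTM proposal: an exponential input gate, a normalizer state `n` that divides out the accumulated gate mass, and a running log-space stabilizer `m` that keeps `exp` from overflowing. It is a deliberately simplified assumption (no learned weights, a single unit, an exponential rather than sigmoid forget gate, and none of the matrix-memory mLSTM variant), not a reference implementation.

```python
import math
from typing import List, Tuple


def slstm_scalar(pre_acts: List[Tuple[float, float, float, float]],
                 stabilize: bool = True) -> List[float]:
    """Scalar sLSTM-style cell with exponential input and forget gates.

    pre_acts holds one (z_pre, i_pre, f_pre, o_pre) tuple per time step.
    In a real model these come from learned projections of the input and
    previous hidden state; here they are passed in directly.
    """
    c, n, m = 0.0, 0.0, 0.0  # cell state, normalizer state, log-space stabilizer
    hs = []
    for z_pre, i_pre, f_pre, o_pre in pre_acts:
        z = math.tanh(z_pre)                # candidate cell input
        o = 1.0 / (1.0 + math.exp(-o_pre))  # sigmoid output gate
        log_i, log_f = i_pre, f_pre         # gates are i = exp(i_pre), f = exp(f_pre)
        if stabilize:
            # Keep c and n scaled by exp(-m). Both share the factor, so c / n
            # is unchanged, and both exponents below are <= 0 (no overflow).
            m_new = max(log_f + m, log_i)
            i = math.exp(log_i - m_new)
            f = math.exp(log_f + m - m_new)
            m = m_new
        else:
            i, f = math.exp(log_i), math.exp(log_f)
        c = f * c + i * z  # gated cell update
        n = f * n + i      # normalizer accumulates the same gate mass
        hs.append(o * (c / n))
    return hs


print(slstm_scalar([(0.5, 0.2, -0.1, 0.3), (-1.0, 1.5, 0.4, -0.2)]))
```

Because the stabilizer rescales `c` and `n` by the same factor, the output is unchanged; without it, a large gate pre-activation such as 1000 would overflow `math.exp`.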

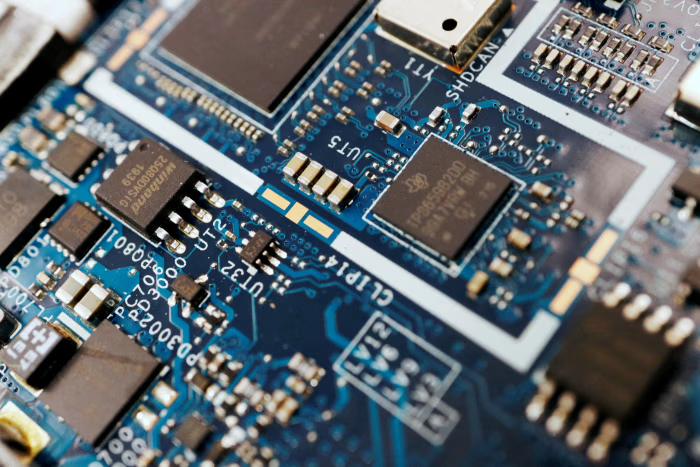

Making GPT Models Leaner: Compression and Optimization

While new architectures are being developed, a parallel effort is focused on optimizing the massive models we already have. These optimization techniques are crucial for deploying models on resource-constrained hardware.

- Quantization: This involves reducing the numerical precision of the model’s weights and activations. For example, converting 32-bit floating-point numbers (FP32) to 8-bit integers (INT8) cuts the memory needed to store the weights by 75% (each value shrinks from 4 bytes to 1) and often speeds up inference on hardware with fast integer arithmetic, usually with only a minor drop in accuracy. Quantization is a key tool for developers working on edge deployment.

- Pruning: This technique identifies and removes redundant or unimportant weights from the neural network, creating a “sparse” model, much like trimming unnecessary branches from a tree. The resulting model is smaller and can be faster, although unstructured sparsity only yields real speedups with sparse-aware kernels or hardware, and the pruned model usually needs careful fine-tuning to recover its performance.

- Knowledge Distillation: This involves using a large, powerful “teacher” model (like GPT-4) to train a much smaller “student” model. The student learns to mimic the teacher’s output distribution, effectively transferring the larger model’s “knowledge” into a more compact form. It is a popular approach for creating specialized, efficient models.
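The 75% saving for FP32 to INT8 follows directly from storage size. Below is a minimal sketch of symmetric per-tensor INT8 quantization using only the standard library; the helper names are hypothetical, and real toolchains typically add refinements such as per-channel scales, zero points for asymmetric ranges, and calibration of activation ranges.

```python
from array import array
from typing import List, Tuple


def quantize_int8(weights) -> Tuple[array, float]:
    """Symmetric per-tensor INT8 quantization: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    # Map the range [-max_abs, max_abs] onto the integer range [-127, 127].
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize_int8(q: array, scale: float) -> List[float]:
    """Recover approximate float weights from the INT8 codes."""
    return [value * scale for value in q]


weights = array("f", [0.12, -0.5, 0.33, 0.0, 0.91, -0.07])  # 32-bit floats
q, scale = quantize_int8(weights)
fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per weight
int8_bytes = len(q) * q.itemsize              # 1 byte per weight
print(fp32_bytes, int8_bytes, list(q))
```

Each restored weight lies within half a quantization step (`scale / 2`) of its original value, and the single FP32 scale stored per tensor adds negligible overhead.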
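Magnitude pruning, one common way to decide which weights are “unimportant,” zeros out the weights with the smallest absolute values. The sketch below assumes unstructured, one-shot pruning of a flat list of weights; the function name is illustrative.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest |w|.

    The result keeps its original shape (unstructured sparsity), so actual
    speedups depend on sparse-aware kernels or hardware.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices ordered by magnitude, smallest first; the stable sort breaks ties by index.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


print(magnitude_prune([0.8, -0.05, 0.3, 0.01, -0.6, 0.2], sparsity=0.5))
```

In practice the pruned model is then fine-tuned, often over several rounds of pruning, so the remaining weights can compensate for the removed ones.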
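One widely used formulation of the distillation objective, introduced by Hinton and colleagues, compares temperature-softened teacher and student distributions with KL divergence and scales the result by the squared temperature. The sketch below computes that term for a single example from raw logits; the function names and the default temperature are assumptions, and in practice this term is usually mixed with ordinary cross-entropy on ground-truth labels.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax over logits divided by a temperature (higher T = softer)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [3.0, 1.0, 0.2]
print(distillation_loss([2.5, 1.2, 0.1], teacher))  # small: student nearly agrees
print(distillation_loss([0.1, 0.2, 3.0], teacher))  # larger: student disagrees
```

The temperature exposes how the teacher ranks the wrong answers, which is much of the “knowledge” the student picks up beyond the top prediction.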

Section 3: Real-World Impact: GPT on the Edge

The convergence of efficient architectures and optimization techniques is unlocking a new wave of AI applications that run locally, without constant reliance on the cloud. This shift is transforming industries and creating entirely new possibilities.

Case Study: The Truly Smart Personal Assistant

Imagine a voice assistant on your smartphone that doesn’t need an internet connection to function. It can summarize your emails, schedule meetings, and draft messages, all while your personal data remains securely on your device. This is made possible by running a distilled and quantized version of a powerful LLM locally. Low latency enables natural, real-time conversation, and privacy is much stronger because personal data never has to be sent to a server. This is a prime example of how assistants and chatbots can move beyond cloud-dependent solutions.

Practical Applications Across Industries

- Healthcare: Edge AI is being explored for wearable devices that analyze biometric data in real time to flag anomalies that may signal a cardiac event or a diabetic emergency, providing instant alerts without sending sensitive health data to the cloud.

- Automotive: An autonomous vehicle cannot afford the latency of consulting a cloud server to decide whether to brake. Efficient on-board vision models are essential for processing sensor data from cameras and LiDAR within milliseconds to navigate complex traffic situations safely.

- Industrial IoT: In a smart factory, efficient models on edge devices can monitor machinery for predictive maintenance, analyze production quality in real time, and optimize energy consumption without overwhelming the central network with raw data streams.

- Content Creation: For artists and developers, a growing set of creative tools runs locally. This could mean real-time code completion from a code model running inside an IDE, or generative art plugins in a design program that work offline, providing a faster and more private creative workflow.

Section 4: Best Practices, Challenges, and Recommendations

While the move towards efficiency and edge AI is promising, it is not without its challenges. Developers and organizations must navigate a complex landscape of trade-offs and technical hurdles.

Navigating the Efficiency-Capability Trade-Off

The most significant challenge is the inherent trade-off between model size and performance. Aggressive compression can noticeably degrade accuracy or amplify model bias, a serious fairness concern. The goal is to find the sweet spot for a specific application.

Best Practices and Recommendations:

- Benchmark Rigorously: Don’t rely on a single metric. Evaluate models against a holistic set of criteria, including accuracy, latency, throughput, memory usage, and energy consumption, measured on the target hardware.

- Choose the Right Tool for the Job: A massive, general-purpose model is overkill for a specialized task. Use fine-tuning to create smaller, expert models; knowledge distillation is well suited to this.

- Leverage Specialized Hardware: Edge AI hardware is evolving rapidly. NPUs (Neural Processing Units) in smartphones and dedicated AI accelerators for edge devices are designed to run quantized models efficiently. Optimizing for this hardware is critical.

- Consider Hybrid Models: The future is not a binary choice between cloud and edge. A hybrid approach, where a small, fast on-device model handles most tasks and only escalates complex queries to a larger cloud model, can offer the best of both worlds—balancing privacy, latency, and capability.
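For the “Benchmark Rigorously” recommendation, a minimal timing harness might look like the following. It is a sketch that assumes the model is exposed as a plain Python callable (`fn` is a stand-in for real inference code) and measures only latency percentiles and throughput; memory and energy usage require platform-specific profiling tools on the target device.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def benchmark(fn: Callable[[Any], Any], inputs: Sequence[Any], warmup: int = 3) -> Dict[str, float]:
    """Time fn on every input and report latency percentiles and throughput.

    Needs at least two inputs so that percentiles can be computed.
    """
    for x in inputs[:warmup]:
        fn(x)  # untimed warm-up calls (lazy initialization, caches)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        fn(x)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "p50_ms": cuts[49] * 1000,  # median latency
        "p95_ms": cuts[94] * 1000,  # tail latency
        "throughput_per_s": len(inputs) / elapsed,
    }


print(benchmark(lambda n: sum(range(n)), [10_000] * 200))
```

Reporting a tail percentile alongside the median matters for interactive use, since occasional slow responses are what users notice.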
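The hybrid pattern can be sketched as a small router. This assumes the on-device model reports a confidence score alongside its answer; how that score is derived (for example, from token probabilities) is itself an assumption, and calibrating it well is hard in practice. `local_model`, `cloud_model`, and the 0.8 threshold are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class HybridRouter:
    """Try a small on-device model first; escalate to the cloud when it is unsure.

    local_model returns (answer, confidence in [0, 1]); cloud_model returns an answer.
    Both are hypothetical stand-ins for real inference code.
    """
    local_model: Callable[[str], Tuple[str, float]]
    cloud_model: Callable[[str], str]
    threshold: float = 0.8
    allow_cloud: bool = True  # e.g. False when offline or for sensitive queries

    def answer(self, query: str) -> Tuple[str, str]:
        text, confidence = self.local_model(query)
        if confidence >= self.threshold or not self.allow_cloud:
            return text, "local"
        return self.cloud_model(query), "cloud"


router = HybridRouter(
    local_model=lambda q: ("short answer", 0.95) if len(q) < 20 else ("unsure", 0.3),
    cloud_model=lambda q: "detailed answer",
)
print(router.answer("what time is it"))
print(router.answer("explain the tax implications of this contract"))
```

Keeping the `allow_cloud` switch explicit lets an application stay fully local when the device is offline or when a query contains data the user has not agreed to share.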

The Deployment and Maintenance Hurdle

Deploying and updating models across a diverse fleet of edge devices is a significant operational challenge. Unlike a centralized cloud service, an edge fleet spans different hardware capabilities, operating systems, and software versions, which demands robust MLOps (Machine Learning Operations) infrastructure. Standardized inference engines and model formats designed for edge deployment, such as ONNX Runtime or TensorFlow Lite, are essential for managing this complexity.
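One small piece of fleet management, choosing which model build to ship to a given device, can be sketched as a capability check. The variant names, memory figures, and the “largest variant that fits” policy below are hypothetical assumptions for illustration, not figures from any real deployment.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Variant:
    name: str
    precision: str  # e.g. "fp16", "int8", "int4"
    memory_mb: int  # approximate runtime memory footprint


@dataclass
class Device:
    free_memory_mb: int
    supported_precisions: Set[str]  # precisions the device's runtime/NPU can execute


def pick_variant(variants: List[Variant], device: Device) -> Optional[Variant]:
    """Return the largest variant the device can run, or None if nothing fits."""
    candidates = [
        v for v in variants
        if v.precision in device.supported_precisions and v.memory_mb <= device.free_memory_mb
    ]
    # Assumption: a larger footprint means a more capable model.
    return max(candidates, key=lambda v: v.memory_mb, default=None)


catalog = [
    Variant("assistant-fp16", "fp16", 6000),
    Variant("assistant-int8", "int8", 3000),
    Variant("assistant-int4", "int4", 1600),
]
phone = Device(free_memory_mb=3500, supported_precisions={"int8", "int4"})
print(pick_variant(catalog, phone))
```

Returning `None` rather than forcing a choice gives the deployment system a clear signal to fall back to a cloud-only path for devices that cannot host any variant.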

Conclusion: A Smarter, More Accessible AI Future

The relentless pursuit of scale has brought us the incredible power of models like GPT-4, but the next great leap in AI will be defined by efficiency. The shift towards leaner architectures, advanced optimization, and edge deployment is not merely a technical trend; it is a fundamental re-imagining of how and where artificial intelligence will create value. By making AI less dependent on centralized, resource-intensive infrastructure, we can build applications that are faster, more private, more accessible, and more sustainable.

The industry is moving from a monolithic to a distributed model of intelligence. The future AI ecosystem will be a rich tapestry of massive foundation models in the cloud working in concert with billions of small, hyper-efficient specialist models on the edge. This new frontier promises to democratize AI capabilities, placing unprecedented power directly into the hands of users and ushering in an era of truly personal and pervasive intelligence.