OpenAI’s Strategic Pivot: Deep Dive into New Open-Weight Safety Models and the Future of GPT Ecosystems

Introduction

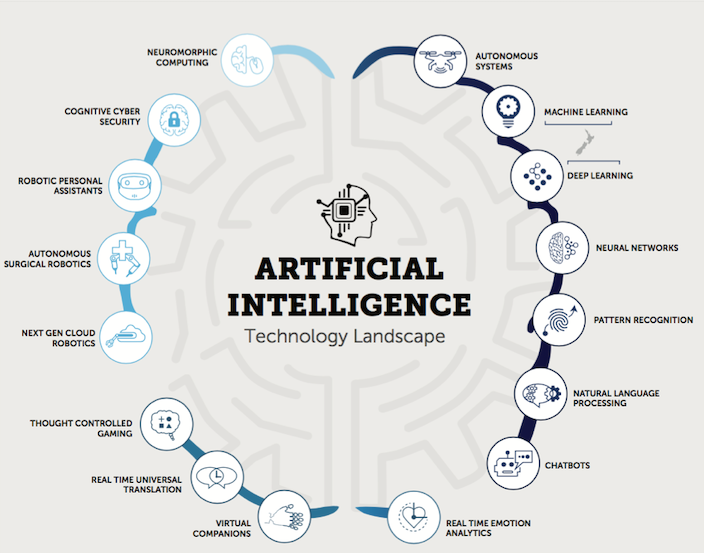

The landscape of artificial intelligence is undergoing a seismic shift, moving from a binary choice between closed-source APIs and open-source experimentation toward a nuanced hybrid ecosystem. In a significant development for **GPT Models News**, the AI community is buzzing about the release of new open-weight models specifically designed for safeguarding and reasoning. This move marks a departure from the traditional “black box” approach, offering developers and enterprises access to specialized models—specifically in the 20-billion and 120-billion parameter classes—aimed at fortifying AI applications.

For years, **OpenAI GPT News** has been dominated by the race for larger, more capable proprietary models like GPT-4. However, the introduction of accessible, open-weight safety models (often referred to in recent discussions as safeguard variants) signals a maturity in the industry. It addresses a critical bottleneck: how to deploy powerful generative AI while maintaining strict adherence to safety, privacy, and compliance standards without relying solely on external API filters. This article delves deep into the technical specifications, architectural implications, and real-world applications of these new tools, analyzing how they reshape **GPT Safety News** and the broader **GPT Ecosystem News**.

Section 1: The Architecture of Open Safety and Reasoning

The release of models in the 20b and 120b parameter range represents a strategic sweet spot in **GPT Architecture News**. Unlike massive general-purpose models, these specialized weights are tuned for specific tasks: reasoning through policies, detecting adversarial inputs, and ensuring output alignment.

Understanding the Parameter Classes

The bifurcation into two distinct sizes—20b and 120b—serves different tiers of infrastructure and latency requirements, a hot topic in **GPT Scaling News**.

* The 20b Model (Edge and Efficiency): This model is designed for high-throughput environments. In the context of **GPT Efficiency News**, a 20-billion parameter model is small enough to be quantized and run on consumer-grade hardware or edge servers. It acts as a first line of defense, capable of rapid classification and basic reasoning. It is ideal for real-time applications where latency and throughput, the focus of **GPT Latency & Throughput News**, are the primary KPIs.

* The 120b Model (Deep Reasoning): The larger variant addresses complex nuance. A recurring theme in **GPT Research News** is that smaller models tend to struggle with “gray area” safety policies. The 120b model uses its larger parameter count not just for knowledge, but for deeper semantic reasoning to adjudicate edge cases that the smaller model might miss.
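One way to combine the two tiers described above is a confidence-based cascade: the small model answers first, and only uncertain cases are escalated to the large one. The sketch below is illustrative only. `small_stub` and `large_stub` are hypothetical stand-ins for calls to the 20b and 120b models, and the `escalate_below` threshold is an assumed value, not a recommendation from the model authors.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class Verdict:
    label: str         # "allow" or "block"
    confidence: float  # 0.0 to 1.0, as reported by the classifier
    tier: str          # which tier produced the verdict

# A classifier maps text to (label, confidence).
Classifier = Callable[[str], Tuple[str, float]]

def cascade(text: str, small: Classifier, large: Classifier,
            escalate_below: float = 0.8) -> Verdict:
    """Ask the small model first; escalate only low-confidence cases."""
    label, conf = small(text)
    if conf >= escalate_below:
        return Verdict(label, conf, tier="small")
    label, conf = large(text)
    return Verdict(label, conf, tier="large")

# Hypothetical stubs so the sketch runs without any model installed.
def small_stub(text: str) -> Tuple[str, float]:
    if "attack" in text:
        return ("block", 0.95)
    if "poison" in text:
        return ("block", 0.55)  # ambiguous: might be a toxicology question
    return ("allow", 0.9)

def large_stub(text: str) -> Tuple[str, float]:
    if "toxicology" in text:
        return ("allow", 0.9)
    return ("block", 0.85)
```

With these stubs, a clearly benign prompt is settled by the small tier, while an ambiguous one such as a toxicology question is passed to the large tier for a second opinion.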

From Chatbots to Guardrails

These models differ fundamentally from standard **ChatGPT News** updates. They are not necessarily designed to be the “personality” of a chatbot but rather the “conscience.” This aligns with emerging trends in **GPT Agents News**, where multi-agent systems require a dedicated supervisor node. By releasing these as open weights, developers can fine-tune the safety parameters on their own datasets—a massive leap forward for **GPT Fine-Tuning News**.

This shift also impacts **GPT Multimodal News** and **GPT Vision News**. As these safety models evolve, they are expected to act as gatekeepers not just for text, but for analyzing multimodal inputs to prevent jailbreaks via image or audio vectors, ensuring a holistic safety net.

Section 2: Technical Breakdown, Optimization, and Deployment

Deploying these open-weight safeguard models requires a different technical approach compared to consuming a REST API. This section explores the mechanics of implementation, touching upon **GPT Deployment News** and **GPT Hardware News**.

Inference Engines and Quantization

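To make a 120b model viable for enterprise use without a massive GPU cluster, optimization is key. This brings **GPT Quantization News** to the forefront. Developers are compressing these models to 4-bit or even 3-bit precision, which cuts weight memory to roughly a quarter or less of a 16-bit baseline. The loss in reasoning capability is often small but not guaranteed, so quantized builds should be re-evaluated against the target safety policies before deployment.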
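To make the effect of quantization concrete, the following back-of-the-envelope calculation estimates weight storage at several precisions. It assumes the nominal 20-billion and 120-billion parameter counts used in this article and counts weights only; KV cache, activations, and per-block quantization metadata add to the real footprint.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9

# Nominal parameter counts from the article; real checkpoints may differ.
for name, params in [("20b", 20), ("120b", 120)]:
    for bits in (16, 8, 4, 3):
        print(f"{name} @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, 4-bit weights come to about 10 GB for the 20b model and about 60 GB for the 120b model, compared with 40 GB and 240 GB at 16-bit.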

Tools and platforms like Ollama and Hugging Face have become central to **GPT Platforms News**, allowing these models to be loaded locally. For instance, running the 20b safeguard model via `llama.cpp` allows for CPU-based inference, democratizing access for startups that cannot afford H100 clusters. This is a critical development in **GPT Inference Engines News**, as it reduces the barrier to entry for robust AI safety.

Integration Patterns: The Sidecar Pattern

A best practice emerging from **GPT Optimization News** is the “Sidecar” deployment pattern. In this architecture, the main application (perhaps calling GPT-4 or GPT-5 class models via API, the subject of most **GPT-4 News** and **GPT-5 News**) is paired with a local instance of the open-weight safeguard model.

1. Input Guarding: The user prompt is first sent to the local 20b safeguard model.

2. Sanitization: If the local model detects PII or malicious intent (relevant to **GPT Privacy News**), it rejects the prompt before it ever leaves the secure environment.

3. Output Verification: The response from the main LLM is checked by the safeguard model to ensure it meets company tone and safety guidelines.

This hybrid approach reduces the costs tracked in **GPT APIs News**, because rejected prompts never incur API token charges; the savings scale with the share of traffic the local model rejects. It also enhances privacy by keeping sensitive data filtering on-premise.
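The three sidecar steps can be sketched as a thin wrapper around the remote call. This is a minimal illustration under stated assumptions: `local_guard` is a hypothetical placeholder that uses a regex and a string match so the example runs offline, whereas a real deployment would query the local safeguard model there. `guarded_call` and the returned dictionary shape are likewise invented for this example.

```python
import re
from typing import Callable, Optional

# Placeholder check (assumption): a real sidecar would call the local
# safeguard model here instead of pattern matching.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def local_guard(text: str) -> Optional[str]:
    """Return a rejection reason, or None if the text passes."""
    if SSN_PATTERN.search(text):
        return "possible PII (SSN-like pattern)"
    if "ignore previous instructions" in text.lower():
        return "possible prompt injection"
    return None

def guarded_call(prompt: str,
                 remote_llm: Callable[[str], str],
                 guard: Callable[[str], Optional[str]] = local_guard) -> dict:
    # Steps 1 and 2: check the prompt locally; a rejected prompt
    # never reaches the remote API, so it incurs no token charges.
    reason = guard(prompt)
    if reason is not None:
        return {"status": "rejected_input", "reason": reason, "response": None}
    # The only step that leaves the local environment.
    response = remote_llm(prompt)
    # Step 3: verify the model output with the same local guard.
    reason = guard(response)
    if reason is not None:
        return {"status": "rejected_output", "reason": reason, "response": None}
    return {"status": "ok", "reason": None, "response": response}
```

Passing the remote model in as a plain callable keeps the wrapper independent of any particular API client, and makes it easy to test with a stub.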

Handling Context and Distillation

With **GPT Distillation News** gaining traction, we are seeing techniques where the reasoning capabilities of the 120b model are distilled into smaller 7b or 8b custom models for specific domain tasks. This allows organizations to create hyper-specialized safety nets (for example, a model trained specifically to detect medical misinformation), a trend covered under **GPT Custom Models News**.
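For readers unfamiliar with distillation, the classic soft-label formulation trains the student to match the teacher's temperature-softened output distribution. The sketch below computes that loss in plain Python for a single example. It illustrates the general technique only; nothing here is taken from how any particular safeguard model was trained, and the logits and temperature are made-up values.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2

# Made-up logits over two classes: ["safe", "unsafe"].
teacher = [2.0, 0.5]
print(distillation_loss(teacher, [2.0, 0.5]))  # student matches the teacher
print(distillation_loss(teacher, [0.5, 2.0]))  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what lets a small model inherit the larger model's judgments on a narrow domain.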

Section 3: Industry Implications and Real-World Scenarios

The availability of open-weight safety models transforms how specific verticals integrate Generative AI. This section explores the impact across various sectors, highlighting **GPT Applications News**.

Finance and Legal Tech: The Privacy Imperative

In **GPT in Finance News** and **GPT in Legal Tech News**, data sovereignty is paramount. Financial institutions often block the use of public LLMs due to the risk of data leakage.

* Scenario: A bank wants to use a coding assistant. By deploying the 120b safeguard model locally, they can scan every snippet of code for proprietary algorithms or customer data *before* it is sent to a cloud-based coding model. This local checkpoint, made possible by open weights, helps satisfy strict regulatory and compliance requirements, a major topic in **GPT Regulation News**. It is not a true air gap, since approved snippets still leave the network, but it keeps the filtering decision on-premise.

Healthcare: Ethical Reasoning

**GPT in Healthcare News** focuses heavily on accuracy and ethics. A generic safety filter might block a query about “poison” that is actually a valid toxicology question from a medical researcher.

* Scenario: A hospital fine-tunes the open-weight safeguard model on medical literature. This specialized guardrail understands the difference between self-harm queries and legitimate medical inquiry, allowing the system to function effectively for doctors while maintaining safety protocols. This is a prime example of **GPT Ethics News** in action.

Education: Protecting the Learning Environment

For **GPT in Education News**, the challenge is protecting minors while allowing creative freedom.

* Scenario: An EdTech platform uses the 20b model (optimized for low latency) to monitor student interactions in real-time. Because the weights are open, the platform can adjust the model to be more lenient on creative writing prompts while maintaining zero tolerance for bullying or harassment, offering a level of customization impossible with rigid API filters.
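Besides fine-tuning, one simple way to express this kind of leniency is a per-category threshold table applied to the classifier's scores. The sketch below assumes the safeguard model (or a wrapper around it) emits a score between 0 and 1 for each category. The category names, scores, and thresholds are all hypothetical.

```python
from typing import Dict, List

# Hypothetical policy: a score at or above the threshold is flagged.
# Lower threshold = stricter enforcement.
EDTECH_POLICY: Dict[str, float] = {
    "bullying": 0.2,            # near zero tolerance
    "harassment": 0.2,
    "fictional_violence": 0.9,  # lenient, to allow creative writing
}

def flagged_categories(scores: Dict[str, float],
                       policy: Dict[str, float],
                       default_threshold: float = 0.5) -> List[str]:
    """Return the sorted categories whose score meets its threshold."""
    return sorted(
        category for category, score in scores.items()
        if score >= policy.get(category, default_threshold)
    )

# Example: a dark short story containing a mildly unkind remark.
scores = {"bullying": 0.3, "fictional_violence": 0.7}
print(flagged_categories(scores, EDTECH_POLICY))
```

Here the story's fictional violence passes under the lenient threshold, while the lower bullying score is still flagged because that category is held to a much stricter bar.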

Marketing and Content Creation

In **GPT in Marketing News** and **GPT in Content Creation News**, brand safety is the priority. Companies can train the safeguard model to recognize and reject content that conflicts with specific brand values or tone of voice, effectively automating the editorial review process.

Section 4: The Strategic Landscape and Future Outlook

The release of these models is not just a technical update; it is a strategic maneuver that affects **GPT Competitors News** and the future of the AI market.

The Open Source vs. Closed Source Dynamic

This development blurs the lines in **GPT Open Source News**. By releasing safety weights, OpenAI acknowledges that while the “brain” (the frontier model) may remain closed, the “immune system” (safety) benefits from community stress-testing. This puts pressure on competitors who keep their safety mechanisms entirely opaque. It also sets a precedent for **GPT Bias & Fairness News**, allowing researchers to probe and test the weights directly to study how the model defines “unsafe” content.

Impact on the Tooling Ecosystem

This fuels a boom in **GPT Tools News** and **GPT Integrations News**. We can expect to see a new wave of enterprise software that wraps these open weights into easy-to-deploy containers (Docker/Kubernetes) for corporate IT environments. It also accelerates **GPT Edge News**, as hardware manufacturers will optimize their chips (NPUs) to run these specific parameter sizes efficiently.

Future Trends: Autonomous Agents and IoT

Looking ahead to **GPT Future News** and **GPT Applications in IoT News**, these lightweight safety models will likely reside directly on devices. Imagine a smart speaker or a robotic assistant that processes safety constraints locally without needing an internet connection. This is crucial for **GPT Assistants News**, ensuring that autonomous agents do not take harmful actions even if they lose connectivity to the cloud.

Conclusion

The release of open-weight safeguard models in the 20b and 120b classes is a watershed moment for the AI industry. It moves the conversation from “how smart is the model?” to “how safely and reliably can we deploy it?” By empowering developers with the tools to build local, private, and customizable guardrails, this development addresses the most critical barriers to enterprise adoption: privacy, latency, and control.

From moderating voice chat in games (a **GPT in Gaming News** staple) to securing high-stakes financial transactions, the range of applications is broad. As we monitor **GPT Trends News**, it is clear that the future of AI is hybrid: combining the raw power of massive cloud models with the agile, secure, and transparent oversight of open-weight safety systems. Developers and organizations should begin experimenting with these models now, integrating them into their data pipelines (a focus of **GPT Datasets News**) and inference workflows to build the next generation of robust AI applications.