Unlocking Efficiency: The State of GPT Quantization and the Future of Edge AI

Introduction: The Weight of Intelligence

The artificial intelligence landscape has been dominated by a single, overwhelming trend: scaling. From early language models to the massive architectures behind GPT-4 and its anticipated successors, the philosophy has largely been “bigger is better.” However, as parameter counts climb into the hundreds of billions and beyond, a critical bottleneck has emerged. The computational cost of running these models, specifically inference latency and memory requirements, has become prohibitive for many real-world applications. This is why quantization has become one of the most important topics in the industry.

Quantization is the process of reducing the numerical precision used to represent a model’s parameters (and sometimes its activations), compressing the model so that it runs on smaller hardware without a significant loss in output quality. While headlines often focus on scaling, much of the real engineering progress is happening in optimization. Recent advances in quantization are changing how models are deployed, reducing the dependence on massive server farms and moving toward a future where powerful AI runs on local devices.

In this article, we will explore recent developments in model compression, analyzing how quantization methods like GPTQ and AWQ, and file formats like GGUF, are reshaping the ecosystem. We will examine the implications for edge deployment, discuss the trade-offs between speed and accuracy, and look at how these technical advances are fueling innovation across finance, healthcare, and IoT.

Section 1: The Mechanics of Compression – Recent Breakthroughs

From FP16 to INT4: The Race to the Bottom

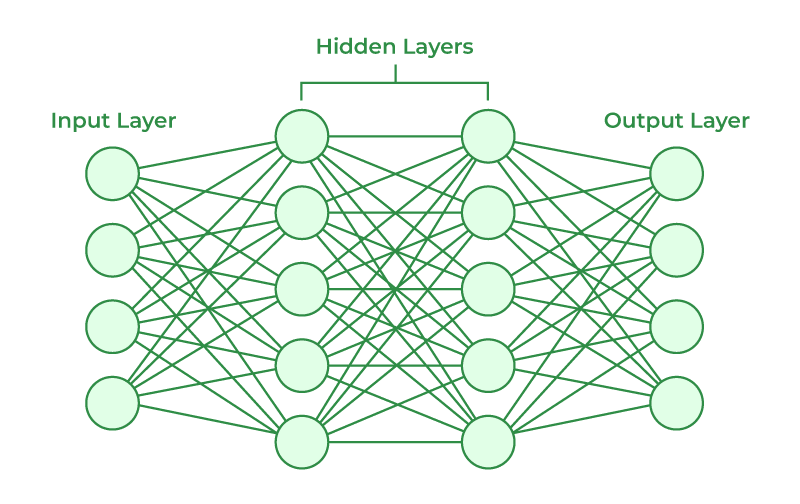

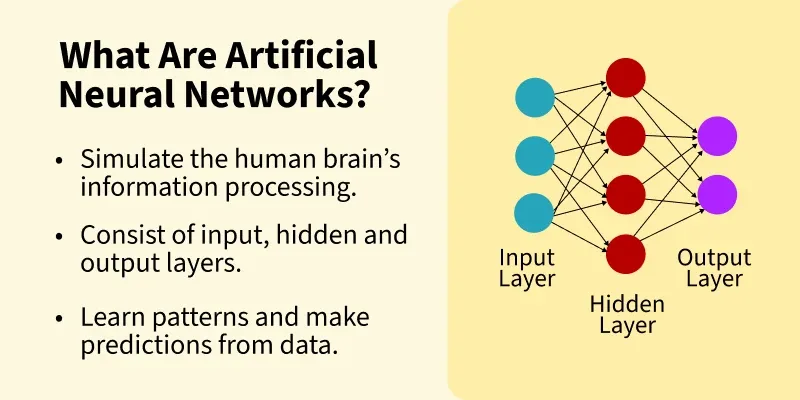

To understand the significance of recent compression work, one must understand the baseline. Large Language Models (LLMs) are typically trained and stored in 16-bit floating-point formats (FP16 or BF16) or in 32-bit (FP32). Every weight therefore occupies 2 or 4 bytes, and all of those bytes must be held in memory and streamed through the processor during inference. For a model the size of GPT-3 (175 billion parameters), FP16 weights alone take roughly 350 GB, which means hundreds of gigabytes of VRAM just to load the model, let alone run it.
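The arithmetic behind that baseline is simple enough to sketch. The helper below is a minimal illustration, not a sizing tool: the function name is made up for this example, it counts weights only (no activations, KV cache, or quantization metadata), and it uses 1 GB = 10^9 bytes.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Hypothetical helper for illustration; ignores activations, KV cache,
    and per-group scales that real quantized formats also store.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    gpt3_params = 175e9  # published parameter count for GPT-3
    for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
        print(f"{label}: {weight_memory_gb(gpt3_params, bits):.1f} GB")
```

Going from 16 bits to 4 bits per weight divides the weight footprint by four, which is where the often-quoted 75% reduction comes from.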

The latest optimization work highlights a shift toward 4-bit and even 3-bit quantization. Mapping 16-bit floating-point weights to lower-precision integers cuts weight storage by about 50% for INT8 and about 75% for INT4 (slightly less in practice, because scaling factors are stored alongside the integers). Recent research papers and open-source contributions have shown that 4-bit quantization, when done carefully, causes only a small increase in perplexity (a measure of how well the model predicts the next token; lower is better).
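To make the mapping concrete, here is a minimal sketch of the simplest scheme, symmetric round-to-nearest quantization with a single absmax scale. It is a baseline for illustration only: the function names are invented for this example, and production methods typically use one scale per small group of weights rather than one per tensor.

```python
def quantize_absmax(weights, bits=4):
    """Map floats to signed integer codes using one shared absmax scale."""
    qmax = 2 ** (bits - 1) - 1              # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest level, then clamp into the representable range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    original = [0.2, -1.0, 0.45]
    codes, scale = quantize_absmax(original, bits=4)
    print(codes, dequantize(codes, scale))
```

Each reconstructed weight lands within half a quantization step of the original, so the step size (set by the largest weight in the group) determines how much precision is lost.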

The Rise of GPTQ and AWQ

Two acronyms have dominated recent discussion of inference tooling: GPTQ and AWQ.

GPTQ (named for its target, generative pre-trained transformers) has become a standard method for post-training quantization. Unlike older methods that simply rounded each weight to the nearest level (which can cause “brain damage” to the model), GPTQ uses approximate second-order (Hessian) information, estimated from a small calibration set, to compensate for the error introduced by quantization. Working layer by layer, it quantizes weights in sequence and adjusts the remaining un-quantized weights to minimize the error in the layer’s output. As a result, very large models can now run on consumer-grade GPUs.
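The compensation idea can be shown on a toy case with just two weights. The sketch below is not the GPTQ implementation; it applies the underlying second-order update to a single quantization step, with a Hessian built from a handful of made-up calibration inputs. All function names and numbers are illustrative assumptions.

```python
def quantize_to_grid(value, step):
    """Round a value to the nearest multiple of `step`."""
    return round(value / step) * step


def output_error(w_new, w_ref, inputs):
    """Squared error of the layer output over the calibration inputs."""
    return sum(
        ((w_new[0] - w_ref[0]) * x0 + (w_new[1] - w_ref[1]) * x1) ** 2
        for x0, x1 in inputs
    )


def quantize_first_with_compensation(w, inputs, step):
    """Quantize w[0], then shift w[1] to absorb the resulting output error."""
    # Hessian of the squared output error: H = sum over samples of x x^T.
    h00 = sum(x0 * x0 for x0, _ in inputs)
    h01 = sum(x0 * x1 for x0, x1 in inputs)
    h11 = sum(x1 * x1 for _, x1 in inputs)
    det = h00 * h11 - h01 * h01
    inv00, inv10 = h11 / det, -h01 / det    # first column of H^-1 (2x2 case)
    q0 = quantize_to_grid(w[0], step)
    err = (w[0] - q0) / inv00               # quantization error, Hessian-weighted
    return [q0, w[1] - err * inv10]         # update the not-yet-quantized weight


if __name__ == "__main__":
    calib = [(1.0, 0.9), (0.5, 0.6), (-1.0, -0.8), (0.2, 0.1)]  # made-up inputs
    w = [0.37, -0.52]
    naive = [quantize_to_grid(w[0], 0.25), w[1]]
    compensated = quantize_first_with_compensation(w, calib, 0.25)
    print(output_error(naive, w, calib), output_error(compensated, w, calib))
```

Because the two inputs are correlated, nudging the second weight cancels most of the damage done by rounding the first. A full implementation repeats this across all columns of every layer and eventually quantizes the adjusted weights as well.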

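However, AWQ (Activation-aware Weight Quantization) is challenging GPTQ’s dominance. The insight behind AWQ is that not all weights are created equal. A small fraction of weight channels, on the order of 1%, matter far more to the model’s output than the rest, and they are identified by the magnitude of the activations that flow through them rather than by the weights themselves. Keeping those channels in higher precision would protect them, but mixed-precision storage is awkward for hardware. AWQ instead scales the salient channels up before quantization (and scales the matching activations down), which lowers their relative quantization error while every weight stays in the same low-bit format. The broader lesson is that treating weights non-uniformly is central to efficient inference.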
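One way to protect a salient channel without storing it in a separate format is per-channel scaling, which is the mechanism the AWQ authors describe. The toy example below illustrates only that mechanism: the weights, activations, and the scale factor of 2 are hand-picked assumptions, whereas the real method searches for scales using calibration data.

```python
def fake_quantize(weights, bits=4):
    """Round-to-nearest with one shared absmax scale; returns dequantized floats."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def layer_output(weights, activations):
    """Dot product of one weight row with one activation vector."""
    return sum(w * x for w, x in zip(weights, activations))


def scaled_quantized_output(weights, activations, channel_scales):
    """Scale weights up per channel, quantize, and scale activations down to match."""
    scaled_w = [w * s for w, s in zip(weights, channel_scales)]
    scaled_x = [x / s for x, s in zip(activations, channel_scales)]
    return layer_output(fake_quantize(scaled_w), scaled_x)


# Illustrative values: channel 0 sees much larger activations than the others.
weights = [0.2, 1.0, -0.7, 0.45]
activations = [8.0, 0.5, 0.4, 0.6]

exact = layer_output(weights, activations)
plain_error = abs(layer_output(fake_quantize(weights), activations) - exact)
scaled_error = abs(
    scaled_quantized_output(weights, activations, [2.0, 1.0, 1.0, 1.0]) - exact
)

if __name__ == "__main__":
    print(f"plain: {plain_error:.4f}  scaled: {scaled_error:.4f}")
```

Doubling the salient weight leaves the group maximum (and therefore the quantization step) unchanged, so that weight is effectively rounded on a grid twice as fine. In a deployed model the inverse scale is usually folded into the preceding operation instead of being applied to activations at run time.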

QLoRA: Democratizing Fine-Tuning

Perhaps the most impactful development in fine-tuning is QLoRA (Quantized Low-Rank Adaptation). Before QLoRA, fine-tuning a large model meant holding full-precision weights, gradients, and optimizer state in memory, which demanded massive compute resources. QLoRA freezes the base model in 4-bit precision and trains only small low-rank adapter matrices on top of it. This has energized the open-source ecosystem, allowing individual researchers and small startups to build custom models on a single GPU.
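A quick parameter count shows why adapters are so much cheaper to train. The sketch below assumes the standard low-rank adapter shape, two matrices of size rank x d_in and d_out x rank added beside a frozen d_out x d_in weight; the layer size and rank are illustrative choices, not values from any particular model.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a low-rank adapter: A is rank x d_in, B is d_out x rank."""
    return rank * d_in + d_out * rank


if __name__ == "__main__":
    d_in = d_out = 4096   # illustrative projection size
    rank = 16             # illustrative adapter rank
    full = full_finetune_params(d_in, d_out)
    adapter = lora_params(d_in, d_out, rank)
    print(f"full: {full:,}  adapter: {adapter:,}  ratio: {adapter / full:.2%}")
```

With these numbers the adapter trains under 1% of the parameters in that layer, and only those parameters need gradients and optimizer state, while the frozen base weights sit in 4-bit storage.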

Section 2: Detailed Analysis of Performance and Benchmarks

The Latency vs. Accuracy Trade-off

When reading quantization benchmarks, the key question is the balance between throughput (tokens per second) and quality, usually measured as perplexity. In the past, dropping to INT4 often meant the model would lose coherence or hallucinate more, a major concern for safety-sensitive uses. Today, the gap has narrowed significantly.
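Both metrics are easy to compute once you have per-token log-probabilities and a timer. The helpers below are a minimal sketch using the standard definition of perplexity (the exponential of the average negative log-likelihood, with natural logs); the function names are invented for this example.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative natural-log probability assigned to each true token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def tokens_per_second(num_tokens: int, elapsed_seconds: float) -> float:
    """Generation throughput over a measured wall-clock interval."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return num_tokens / elapsed_seconds


if __name__ == "__main__":
    # A model that gives every correct token probability 0.25 has perplexity 4.
    print(perplexity([math.log(0.25)] * 8))
    print(tokens_per_second(512, 8.0))
```

Comparing a quantized model with its full-precision original on the same evaluation text gives the perplexity gap, and timing both on the same hardware gives the throughput gain.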

Recent benchmarks show that a 70-billion-parameter model quantized to 4-bit precision often outperforms a 13-billion-parameter model running at full 16-bit precision, even though the two need a broadly similar amount of memory (roughly 35 GB versus 26 GB for the weights). This counter-intuitive finding suggests that, at around 4 bits, “size matters more than precision.” It is often better to have a larger, smarter brain compressed down than a smaller brain running at high fidelity. This insight is pushing research toward training larger models that are designed to be quantized later.

Hardware Acceleration and Inference Engines

Software is only half the battle; hardware plays a pivotal role. Many recent GPUs and AI accelerators include matrix units with native support for low-precision integer arithmetic such as INT8, though INT4 support varies by hardware generation. On the software side, specialized runtimes such as vLLM, TensorRT-LLM, and llama.cpp have risen to prominence.

llama.cpp, in particular, changed the efficiency picture, and its GGUF file format has become a common way to distribute quantized models. Together they allow LLMs to run efficiently on standard CPUs (like the one in your laptop) and on Apple Silicon, which benefits from unified memory. This shift is critical for IoT applications, as it enables smart assistants to run locally on devices without an internet connection to a central API.

The 1-Bit Era: BitNet

Looking ahead, researchers are experimenting with so-called 1-bit LLMs, such as BitNet b1.58. These models use ternary weights (-1, 0, 1), which carry about 1.58 bits of information each (log2 of 3). Because every weight is -1, 0, or 1, the multiplications inside weight matrix products reduce to additions and subtractions, which are computationally much cheaper. While still in the research phase, this suggests we are approaching a practical lower limit for weight precision, where models become far faster and more energy-efficient.
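The addition-only property is easy to see in code. The sketch below has two parts: a ternary dot product that never multiplies, and an absmean rounding step of the kind described for BitNet b1.58 (divide by the mean absolute weight, round, clip to [-1, 1]). Treat the rounding function as an assumption-laden illustration: in the published approach the model is trained with ternary weights from the start, not converted afterwards.

```python
def ternarize_absmean(weights, eps=1e-8):
    """Round weights to {-1, 0, 1} after dividing by their mean absolute value."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return codes, gamma


def ternary_dot(codes, activations):
    """Dot product with ternary weights using only additions and subtractions."""
    total = 0.0
    for code, x in zip(codes, activations):
        if code == 1:
            total += x
        elif code == -1:
            total -= x
        # code == 0 contributes nothing, so the activation is skipped entirely
    return total


if __name__ == "__main__":
    codes, gamma = ternarize_absmean([0.9, -0.05, -1.2, 0.3])
    print(codes, gamma)
    print(ternary_dot(codes, [2.0, 3.0, 4.0, 5.0]))
```

A single multiplication by the stored scale after the sum restores the output magnitude, so the per-weight work remains addition and subtraction.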

Section 3: Implications for Industries and Applications

Finance: The Need for Speed

In high-frequency trading and financial analysis, milliseconds matter. Financial firms are increasingly interested in how quantized models can process market sentiment and news feeds in near real time. By deploying quantized models on-premise (avoiding API round trips), institutions can analyze large volumes of unstructured data, such as tweets, earnings calls, and news articles, with much lower latency. This mirrors the general trend in which speed of insight is a primary competitive advantage.

Healthcare and Privacy

Healthcare and privacy are inextricably linked. Hospitals are hesitant to send patient data to cloud-based APIs like ChatGPT because of regulations such as HIPAA and GDPR. Quantization helps by making it practical to run capable models locally on hospital servers or even on diagnostic devices. A quantized medical LLM can provide diagnostic support to doctors without patient data leaving the facility, which addresses a major regulatory concern.

Education and Accessibility

Discussions of AI in education often return to the digital divide. If powerful AI is only accessible via expensive APIs or high-end hardware, it exacerbates inequality. Quantization is a democratizing force. It allows assistants to run on older smartphones and budget laptops, bringing personalized tutoring and educational content to students in developing regions. It also helps multilingual and cross-lingual work, as smaller, efficient models can be fine-tuned for specific local languages without requiring massive infrastructure.

Edge Computing and IoT

Edge computing and IoT are where quantization shines brightest. Imagine a smart home hub that processes voice commands and automates tasks using a local LLM, rather than sending voice data to the cloud. This reduces latency, improves reliability (it works offline), and enhances privacy. From smart fridges to industrial sensors, edge AI is moving from novelty to necessity.

Section 4: Strategic Recommendations and Best Practices

When to Quantize

Despite the benefits, quantization is not a silver bullet. For enterprises, the decision to quantize should be strategic. If the application requires the maximum available reasoning capability (for example, complex legal contract analysis or novel scientific discovery), keeping the model in FP16 might be necessary. However, for chatbots, summarization, and classification tasks, quantization is usually the right choice.

Navigating the Ecosystem

The ecosystem is fragmented. Developers must choose among formats such as GGUF for CPU and Apple Silicon inference, EXL2 for fast GPU inference, and AWQ for production serving. A key tip is to check which formats your target deployment platforms and serving frameworks support before committing to one. At present, serving a quantized model from a container running vLLM is a popular choice that offers a good balance of throughput and ease of use.

Addressing Bias and Drift

One under-discussed issue is how quantization affects model alignment. There is evidence that aggressive quantization can sometimes degrade the safety guardrails fine-tuned into a model. It is essential to evaluate a quantized model not just on perplexity but also on safety benchmarks, and to compare the results against the unquantized baseline. This in turn depends on having robust validation sets for testing quantized models.
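A before-and-after comparison of this kind can be very small. The sketch below is a hypothetical harness, not a real benchmark: `generate` stands for whatever function calls your model, `is_refusal` for whatever classifier or rule you trust to detect a refusal, and the prompt list for a vetted safety evaluation set.

```python
from typing import Callable, Iterable


def refusal_rate(
    generate: Callable[[str], str],
    prompts: Iterable[str],
    is_refusal: Callable[[str], bool],
) -> float:
    """Fraction of prompts for which the model's response counts as a refusal."""
    prompt_list = list(prompts)
    if not prompt_list:
        raise ValueError("need at least one prompt")
    refused = sum(1 for p in prompt_list if is_refusal(generate(p)))
    return refused / len(prompt_list)


def safety_regression(
    baseline_generate: Callable[[str], str],
    quantized_generate: Callable[[str], str],
    harmful_prompts: Iterable[str],
    is_refusal: Callable[[str], bool],
) -> float:
    """Drop in refusal rate on harmful prompts after quantization (positive = worse)."""
    prompt_list = list(harmful_prompts)
    before = refusal_rate(baseline_generate, prompt_list, is_refusal)
    after = refusal_rate(quantized_generate, prompt_list, is_refusal)
    return before - after
```

A result near zero suggests the guardrails survived quantization on that prompt set, while a clearly positive value is a signal to try a less aggressive bit width or a different quantization method before deploying.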

Conclusion: The Future is Condensed

The narrative of AI development is shifting. While headlines will continue to cover the release of ever-larger foundation models, the real utility will be defined by how well we can shrink them. Quantization is no longer just a compression technique; it is the bridge between theoretical AI capability and practical, real-world utility.

From enabling creative tools to run on an artist’s tablet to allowing game NPCs to generate dynamic dialogue on a console, the impact of these optimization techniques is broad. As we look toward the next generation of models, the most exciting metrics may not be parameter counts but tokens-per-watt and inference-per-dollar. The future of AI is not just smarter; thanks to quantization, it is faster, cheaper, and everywhere.

By staying abreast of developments in quantization and training techniques, developers and businesses can ensure they are not just watching the AI revolution, but actively deploying it efficiently and effectively.