The Democratization of Reasoning: How Emerging Open-Source Models Are Rivaling GPT-5 Performance

Introduction

The landscape of artificial intelligence is undergoing a seismic shift, and open source is at the center of it. For years, the narrative rested on a single assumption: that proprietary, closed-source models like those from OpenAI held an insurmountable lead in reasoning, coding proficiency, and multimodal understanding. Recent developments in the ecosystem suggest that this moat is drying up fast. We are witnessing the emergence of “thinking” models: open-weights models that use extended Chain of Thought (CoT) reasoning to rival, and on some specific benchmarks outperform, the anticipated capabilities of next-generation proprietary systems.

This article surveys the surge of high-performance open-source contenders that are challenging OpenAI’s dominance. As the gap between closed APIs and downloadable weights narrows, developers and enterprises gain new options for data privacy, customization, and cost efficiency. From the competitive landscape to the underlying architectural details, we will dissect what this paradigm shift means for the future of AI deployment.

Section 1: The Rise of “Thinking” Models in the Open Ecosystem

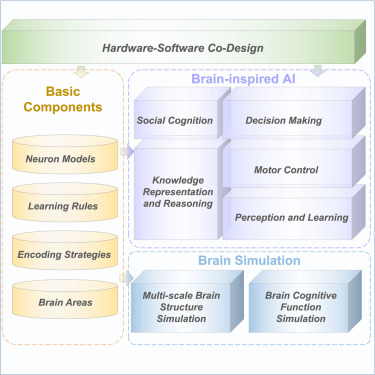

The most significant trend right now is the arrival of models capable of “System 2” thinking. Traditional large language models (LLMs) begin answering immediately; these newer models still generate text one token at a time, but they first produce an explicit reasoning phase before committing to a final answer. This mirrors the approach of OpenAI’s proprietary “o1”-style models but brings it into the open-source domain.
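To make the “distinct reasoning phase” concrete: many open reasoning models emit their trace between delimiter tags before the final answer. The sketch below assumes `<think>...</think>` delimiters (the exact markers vary by model, so check your model’s chat template) and splits a raw completion into reasoning and answer, which is useful when you want to log the trace but show users only the answer.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think>; other models use other markers.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(completion: str) -> tuple[str, str]:
    """Separate the reasoning trace from the final answer.

    Returns (reasoning, answer). If no think block is found, the whole
    completion is treated as the answer.
    """
    match = THINK_PATTERN.search(completion)
    if match is None:
        return "", completion.strip()
    reasoning = match.group(1).strip()
    # Everything after the closing tag is the user-facing answer.
    answer = completion[match.end():].strip()
    return reasoning, answer


if __name__ == "__main__":
    raw = "<think>17 * 2 = 34.</think>\nThe answer is 34."
    thoughts, final = split_reasoning(raw)
    print("reasoning:", thoughts)
    print("answer:", final)
```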

Breaking the Benchmark Ceiling

Recent benchmark reports indicate that new open-source entrants are not merely catching up; they are trading blows with state-of-the-art proprietary giants. By leveraging reinforcement learning and high-quality synthetic data, these models are posting strong scores in mathematics, coding, and complex logic puzzles. This is a critical development for anyone watching GPT-5, as it suggests that the performance leap expected from the next generation of closed models is already being approximated by the open community.

For example, in scenarios requiring multi-step logic, such as legal reasoning or complex debugging, these open models are demonstrating a tenacity and accuracy previously associated only with GPT-4-class systems. This democratization of high-level intelligence changes the calculus for API-based deployment, as developers no longer strictly require expensive API calls to access top-tier reasoning.

Architectural Innovations and Training

The secret sauce behind these advances lies in training technique. Developers are moving beyond simple next-token prediction objectives. Techniques such as “thinking” tokens, where the model outputs its internal monologue before generating a final answer, are becoming standard. The same idea extends to fine-tuning, where datasets are now curated to reward the *process* of finding an answer, not just the answer itself.
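The difference between rewarding the answer and rewarding the process can be sketched with two toy scoring functions. This is an illustration, not any lab’s training recipe: `outcome_reward` checks only the final answer, while `process_reward` aggregates hypothetical per-step scores (in practice these would come from a learned step verifier) using the minimum, so a single flawed step caps the whole chain. Mean or product aggregation are common alternatives.

```python
def outcome_reward(final_answer: str, reference: str) -> float:
    """Reward only the end result: 1.0 for a match, 0.0 otherwise."""
    return 1.0 if final_answer.strip() == reference.strip() else 0.0


def process_reward(step_scores: list[float]) -> float:
    """Reward the reasoning itself by aggregating per-step scores in [0, 1].

    Taking the minimum means one flawed step caps the reward for the chain.
    """
    if not step_scores:
        return 0.0
    return min(step_scores)


if __name__ == "__main__":
    # A lucky chain: the final answer is right, but step 2 was judged unsound.
    steps = [0.9, 0.2, 0.95]
    print("outcome:", outcome_reward("34", "34"))
    print("process:", process_reward(steps))
```

Under outcome-only reward the lucky chain scores perfectly; under process reward it is penalized, which is exactly the behavior process-focused datasets aim to discourage.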

Vision and language are converging as well. Several leading open models are no longer text-only; they are natively multimodal, processing images and text with a unified understanding that rivals Claude Sonnet 4.5-class performance. This integration is vital for autonomous agents, allowing them to navigate graphical user interfaces or analyze real-world visual data without relying on a central server.

Section 2: Technical Breakdown: Efficiency, Optimization, and Customization

While raw intelligence is headline-worthy, the practical viability of these models depends on efficiency. The open-source community is aggressively optimizing how these massive neural networks run on consumer and enterprise hardware.

Compression and Quantization

One of the barriers to adopting high-parameter models has always been VRAM. However, advances in compression and quantization are altering the landscape. Some models reportedly retain around 95% of their reasoning capability when quantized to 4-bit precision, and even 2-bit variants remain usable for some tasks. This has direct consequences for hardware budgets, as it allows powerful “thinking” models to run on single-GPU setups or even high-end consumer laptops (MacBook Pros with unified memory, for instance).
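The VRAM arithmetic behind these claims is simple: weight memory is roughly parameters × bits ÷ 8 bytes, before KV cache and activations. The sketch below computes that figure for an illustrative 70B-parameter model and shows a minimal symmetric “absmax” round-to-nearest quantizer for a single tensor. Production schemes (GPTQ, AWQ, llama.cpp’s k-quants) use per-group or per-block scales and smarter rounding, so treat this only as an illustration of the idea.

```python
def weight_memory_gib(num_params: float, bits: int) -> float:
    """Approximate memory for the weights alone (excludes KV cache and activations)."""
    return num_params * bits / 8 / 2**30


def quantize_absmax(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Symmetric round-to-nearest quantization with one scale for the whole tensor."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed integers
    peak = max(abs(w) for w in weights)
    if peak == 0:
        return [0] * len(weights), 1.0
    scale = peak / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map integer codes back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    for bits in (16, 4, 2):
        print(f"70B params at {bits}-bit: ~{weight_memory_gib(70e9, bits):.1f} GiB")
    w = [0.12, -0.5, 0.33, 0.05]
    q, s = quantize_absmax(w, bits=4)
    print(q, [round(x, 3) for x in dequantize(q, s)])
```

Each step down in precision divides weight memory proportionally, which is why 4-bit is often the difference between needing several GPUs and fitting on one.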

Distillation is also playing a major role. Researchers are taking the outputs of massive frontier models and using them to train smaller student models. These distilled models aim to offer GPT-3.5-level speed with GPT-4-level logic, a sweet spot for inference where latency and throughput are tuned for real-time applications.
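Training on a frontier model’s generated text, as described above, is sequence-level distillation. When the teacher’s full output distribution is available (for example with an open teacher model), the classic logit-matching variant applies instead: the student minimizes the KL divergence to the teacher’s temperature-softened distribution, scaled by T². A minimal pure-Python sketch of that loss for a single token position:

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """T^2-scaled KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [1.0, 2.0, 3.0]
    print(distillation_loss([1.0, 2.0, 3.0], teacher))  # student matches teacher
    print(distillation_loss([3.0, 2.0, 1.0], teacher))  # student disagrees
```

In a full training loop this term is usually mixed with ordinary cross-entropy on the ground-truth labels; the softened targets carry information about which wrong answers the teacher considers nearly right.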

The Edge Computing Revolution

The combination of efficient architectures and quantization fuels edge deployment. We are moving toward a world where capable language models run directly on IoT devices. Imagine a smart home hub that doesn’t just execute commands but understands context and nuance locally, without sending voice data to the cloud. This addresses major privacy concerns, as sensitive data never leaves the device.

Inference engines such as vLLM, llama.cpp, and Hugging Face’s Text Generation Inference (TGI) have drastically improved tokens-per-second generation rates. This turns latency and throughput into a competitive advantage for open source, as a well-provisioned self-hosted deployment can beat the variable latency of overloaded public APIs.
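vLLM and llama.cpp’s server both expose an OpenAI-compatible `/v1/chat/completions` route, and recent TGI versions offer a compatible Messages API, so one small client can compare several engines. The sketch below uses only the standard library; the base URL, port, and model name are placeholders for your own deployment, and it assumes the server returns the usual `usage.completion_tokens` field. Because the timer wraps the whole request, the tokens-per-second figure includes prompt processing and network overhead, not just decoding.

```python
import json
import time
import urllib.request

# Placeholders: point these at your own server and model.
BASE_URL = "http://localhost:8000/v1"
MODEL = "my-local-model"  # hypothetical model name


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def tokens_per_second(completion_tokens: int, elapsed_s: float) -> float:
    return completion_tokens / elapsed_s if elapsed_s > 0 else 0.0


def timed_completion(prompt: str) -> tuple[str, float]:
    """Send the request; return the reply text and end-to-end tokens/second."""
    req = build_chat_request(prompt)
    start = time.perf_counter()
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    elapsed = time.perf_counter() - start
    text = body["choices"][0]["message"]["content"]
    return text, tokens_per_second(body["usage"]["completion_tokens"], elapsed)
```

With a server running, `timed_completion("Explain quantization in one sentence.")` returns the reply and a throughput figure; repeating it under concurrent load gives a fairer comparison with a public API.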

Section 3: Industry Implications and Real-World Applications

The availability of open-source models that outperform legacy proprietary systems has profound implications across various verticals. The narrative is shifting from “how do we integrate ChatGPT?” to “how do we build our own sovereign AI?”

Healthcare and Finance

In highly regulated industries such as healthcare and finance, the need for data sovereignty dominates. With the new breed of open models, hospitals can deploy diagnostic assistants that analyze patient records within a secure, offline intranet. These models can be fine-tuned on specific medical literature, which can reduce hallucinations and improve adherence to medical protocols.

Similarly, financial institutions are building custom models to analyze market trends and process loan applications. By pairing open models with Retrieval-Augmented Generation (RAG), they ensure that proprietary financial data is never exposed to third-party API providers, mitigating regulatory risk.
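The RAG pattern itself is easy to sketch: retrieve the most relevant internal documents, then place them in the prompt so the model answers from them. In the minimal version below, bag-of-words cosine similarity stands in for the embedding model and vector store a real deployment would use; the point is that retrieval and prompt assembly can run entirely in-house, next to a self-hosted model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents with nonzero similarity, most similar first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the model in retrieved context rather than its own memory."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Loan applications require proof of income.",
        "The cafeteria opens at nine.",
        "Income verification uses two recent pay stubs.",
    ]
    print(build_prompt("What proof of income do loan applications need?", docs))
```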

Coding and Software Development

Code models are perhaps the most vibrant sector. Open-source coding assistants are now capable of handling entire repository contexts. Because these models can be self-hosted, companies can allow the AI to index their entire proprietary codebase without fear of IP leakage. This has led to a surge in IDE tools and integrations, where the AI acts as a pair programmer that truly understands the specific architectural patterns of the company.
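Indexing a codebase usually starts with splitting files into overlapping chunks that can then be embedded and stored locally. A minimal sketch, assuming line-based windows; the 40-line window, 10-line overlap, and suffix list are arbitrary illustrative defaults, and many tools chunk by function or syntax tree instead.

```python
from pathlib import Path


def chunk_lines(lines: list[str], window: int = 40, overlap: int = 10) -> list[tuple[int, list[str]]]:
    """Split lines into overlapping windows; returns (1-based first line, lines)."""
    if not 0 <= overlap < window:
        raise ValueError("overlap must be in [0, window)")
    step = window - overlap
    chunks = []
    for start in range(0, len(lines), step):
        chunks.append((start + 1, lines[start:start + window]))
        if start + window >= len(lines):
            break  # this window already reaches the end of the file
    return chunks


def index_repository(root: str, suffixes: tuple[str, ...] = (".py", ".ts", ".go"),
                     window: int = 40, overlap: int = 10) -> list[tuple[str, int, str]]:
    """Collect (relative path, first line, chunk text) records for matching files."""
    records = []
    root_path = Path(root)
    for path in sorted(root_path.rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="replace")
            for first_line, chunk in chunk_lines(text.splitlines(), window, overlap):
                records.append((str(path.relative_to(root_path)), first_line, "\n".join(chunk)))
    return records
```

The overlap keeps a function that straddles a window boundary visible in at least one chunk; each record would then go to a locally hosted embedding model, so the code never leaves the company network.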

Education and Creativity

In education, personalized tutors are a leading use case. Open models allow educational tech companies to build tutors that adapt to a student’s curriculum without the per-token costs that make scaling proprietary APIs prohibitive. Meanwhile, creative and content work is seeing a boom in locally run storytelling engines that help writers and game designers generate dynamic narratives and dialogue trees on the fly.

Section 4: Strategic Considerations: Pros, Cons, and Best Practices

While the allure of open-source “thinking” models is strong, navigating this ecosystem requires a strategic approach. It is not a plug-and-play replacement for ChatGPT; it requires infrastructure and ongoing maintenance.

The Pros: Control and Cost

- Data Privacy: Owning the model means owning the data flow. If you control the inference stack, no third-party provider ever sees your data, so none can use it for training.

- Cost Predictability: API pricing scales with usage and can spike with demand; self-hosting converts this into largely fixed hardware and operating costs.

- Customization: Open weights allow deep customization with parameter-efficient methods such as LoRA and QLoRA, going well beyond the limited fine-tuning APIs offered by major providers.
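Why LoRA makes deep customization affordable: instead of updating a full d_out × d_in weight matrix W, it trains two small matrices B (d_out × r) and A (r × d_in) and adds their scaled product, h = Wx + (α/r)·BAx, with W frozen. A pure-Python sketch of the parameter count and the forward pass (QLoRA applies the same adapters on top of a 4-bit quantized base model):

```python
def lora_param_counts(d_in: int, d_out: int, rank: int) -> tuple[int, int]:
    """Trainable parameters for one d_out x d_in layer: (full fine-tune, LoRA)."""
    full = d_in * d_out
    lora = rank * d_in + d_out * rank  # A is rank x d_in, B is d_out x rank
    return full, lora


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha: float, rank: int) -> list[float]:
    """h = W x + (alpha / rank) * B (A x). W is frozen; only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, rank=8)
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For a 4096 × 4096 projection at rank 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the full matrix. B is initialized to zero in the original LoRA formulation, so training starts from the unmodified base model.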

The Cons: Complexity and Responsibility

- Infrastructure Overhead: Optimizing inference and ensuring high availability requires a dedicated DevOps team.

- Safety Guardrails: Unlike OpenAI’s hosted models, which sit behind the provider’s own moderation layer, open weights often come with fewer safety filters. Organizations must implement their own content moderation, along with bias and fairness checks.

- Fragmented Ecosystem: The sheer volume of datasets and model variants can be overwhelming. Selecting the right model requires keeping constant watch on the ecosystem.
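A self-hosted stack needs its own guardrail layer around the model. The toy wrapper below screens both the prompt and the completion against a policy before anything reaches the user. The two regex rules (SSN-like numbers and private-key headers) are hypothetical placeholders; a real deployment would plug in a trained moderation classifier and policies reviewed for bias and fairness, but the check-in, check-out shape stays the same.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy rules for illustration only; swap in a real classifier.
POLICY = {
    "ssn_like_number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}

REFUSAL = "Sorry, I can't help with that request."


@dataclass
class ModerationResult:
    allowed: bool
    rule: str = ""  # name of the first rule that matched, if any


def moderate(text: str) -> ModerationResult:
    """Return the first policy violation found in text, if any."""
    for rule, pattern in POLICY.items():
        if pattern.search(text):
            return ModerationResult(allowed=False, rule=rule)
    return ModerationResult(allowed=True)


def guarded_generate(generate: Callable[[str], str], prompt: str) -> str:
    """Check the prompt, call the model, then check the output."""
    if not moderate(prompt).allowed:
        return REFUSAL
    output = generate(prompt)
    return output if moderate(output).allowed else REFUSAL
```

Here `generate` is any `prompt -> text` callable, such as a thin wrapper around a local inference server.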

Best Practices for Deployment

To successfully leverage these new models, organizations should adopt a hybrid approach, mixing self-hosted and managed deployments as needs dictate. If you lack on-premise hardware, evaluate managed hosting platforms for open models. Check how efficiently each model’s tokenizer represents your specific language or domain, which is crucial for multilingual and cross-lingual workloads. Finally, always benchmark using your specific use cases rather than relying solely on generic leaderboards.
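A use-case benchmark can start very small: a list of your own prompts with expected answers, scored against each candidate model. The sketch below assumes each model is wrapped as a plain `prompt -> text` function (for example around a self-hosted endpoint) and uses normalized exact match, which suits short factual answers; open-ended tasks need rubric- or test-based scoring instead.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is not scored."""
    return " ".join(text.lower().split())


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases where the model's answer matches the expected one."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    hits = sum(normalize(model(c.prompt)) == normalize(c.expected) for c in cases)
    return hits / len(cases)


def rank_models(candidates: dict[str, Callable[[str], str]],
                cases: list[EvalCase]) -> list[tuple[str, float]]:
    """Score every candidate on the same cases, best first."""
    scores = [(name, evaluate(fn, cases)) for name, fn in candidates.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

Keeping the cases fixed across candidates is the point: the same suite can compare an open model, its quantized variant, and a proprietary API on the work you actually need done.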

Conclusion

The recent surge in open-source AI performance is more than just a technical curiosity; it is a fundamental restructuring of the artificial intelligence market. As reports continue to surface of open models matching or beating GPT-5 on specific reasoning and coding benchmarks, the monopoly of closed-source labs is eroding. We are entering an era in which the line between “state-of-the-art” and “open-source” is rapidly blurring.

For developers, businesses, and researchers, this means unparalleled freedom. Whether it is building assistants for customer support, analyzing contracts in legal tech, or generating marketing copy automatically, the tools are now available to build sovereign, powerful, and cost-effective AI solutions. The future of AI is not just intelligent; it is open, distributed, and rapidly evolving.