The Diagnostic Duel: How GPT Models and Emerging Competitors Are Redefining Healthcare AI

Introduction

The intersection of artificial intelligence and medicine has historically been defined by cautious optimism, but recent developments have turned that relationship into a full-blown revolution. For professionals tracking GPT in healthcare, the narrative has shifted from simple administrative automation to high-stakes clinical reasoning. We are at a pivotal moment: generalist Large Language Models (LLMs) such as OpenAI’s GPT-4 now face fierce competition from specialized, domain-specific models designed explicitly for medical diagnostics.

Recent developments indicate that while general-purpose architectures have set the standard for reasoning and natural language understanding, the arrival of medically fine-tuned competitors, such as Google’s Med-Gemini, has ignited a new arms race. This competition is not merely about passing medical board exams; it is about multimodal understanding, processing vast electronic health records (EHRs), and providing reasoning that clinicians can trust. As the field evolves, it is becoming clear that the next generation of AI in healthcare will be defined by its ability to integrate visual data, long-context patient histories, and rigorous safety protocols.

Section 1: The Battle for Medical Supremacy – Generalists vs. Specialists

The Evolution of Clinical Reasoning

To understand where GPT-4 stands in medicine, we must look at the benchmarks. For a long time, the “gold standard” was passing the USMLE (United States Medical Licensing Examination). GPT-4 cleared this bar with remarkable proficiency, demonstrating that a generalist model could command domain-specific knowledge without medical-specific training. However, competitors are now raising the ceiling. Newer models use uncertainty-guided search strategies and advanced reasoning chains to outperform generalist models in complex diagnostic scenarios.
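One way to make “uncertainty-guided search” concrete is to sample several independent reasoning chains, measure how much their final answers disagree, and trigger a retrieval step only when disagreement is high. The sketch below uses Shannon entropy over the sampled answers as the uncertainty signal. It is a minimal illustration, not any vendor’s actual implementation; the answer strings and the `threshold_bits` value are hypothetical.

```python
import math
from collections import Counter


def answer_entropy(sampled_answers: list[str]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of sampled final answers."""
    counts = Counter(sampled_answers)
    total = len(sampled_answers)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def should_search(sampled_answers: list[str], threshold_bits: float = 1.0) -> bool:
    """Trigger retrieval when independently sampled answers disagree too much.

    threshold_bits is a hypothetical tuning knob, not a published value.
    """
    return answer_entropy(sampled_answers) > threshold_bits


# Hypothetical final answers from five independently sampled reasoning chains.
consistent = ["pneumonia"] * 5
split = ["pneumonia", "pulmonary embolism", "heart failure", "pneumonia", "pericarditis"]

print(should_search(consistent))  # False: the chains agree, answer directly
print(should_search(split))       # True: about 1.92 bits of disagreement, go search
```

When all chains agree the entropy is zero, so the model answers directly; a four-way split produces roughly 1.92 bits and crosses the assumed threshold.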

The core differentiator in recent research is the handling of nuance. Medical diagnosis is rarely binary; it involves probabilistic thinking. While standard GPT models excel at pattern matching, specialized architectures are being optimized to mimic the “differential diagnosis” process used by human doctors: weighing conflicting symptoms and requesting additional information before reaching a conclusion.
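The probabilistic side of a differential diagnosis can be illustrated with Bayes’ rule: start from prior probabilities for each candidate diagnosis, then reweight them by how likely each new finding is under each candidate. The sketch below assumes findings are conditionally independent (a naive-Bayes simplification), and every number in it is made up for illustration; none are clinical estimates.

```python
def update_differential(priors: dict[str, float],
                        likelihoods: dict[str, dict[str, float]],
                        findings: list[str]) -> dict[str, float]:
    """Naive-Bayes update of a differential: P(d | findings) ∝ P(d) * Π P(f | d).

    Assumes findings are conditionally independent given the diagnosis,
    a simplification that real clinical reasoning does not make.
    """
    posterior = dict(priors)
    for finding in findings:
        unnormalized = {d: p * likelihoods[d][finding] for d, p in posterior.items()}
        total = sum(unnormalized.values())
        posterior = {d: p / total for d, p in unnormalized.items()}
    return posterior


# Made-up numbers for illustration only; they are not clinical estimates.
priors = {"viral URI": 0.7, "bacterial pneumonia": 0.2, "pulmonary embolism": 0.1}
likelihoods = {
    "viral URI":           {"fever": 0.5, "hypoxia": 0.05},
    "bacterial pneumonia": {"fever": 0.9, "hypoxia": 0.40},
    "pulmonary embolism":  {"fever": 0.2, "hypoxia": 0.70},
}

posterior = update_differential(priors, likelihoods, ["fever", "hypoxia"])
for diagnosis, p in sorted(posterior.items(), key=lambda kv: -kv[1]):
    print(f"{diagnosis:20s} {p:.3f}")
```

With these toy numbers, a single finding that is unlikely under the leading prior (hypoxia under a viral URI) moves bacterial pneumonia to the top at roughly 0.70, which is the kind of “weighing conflicting symptoms” the paragraph describes.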

Multimodal Capabilities: Beyond Text

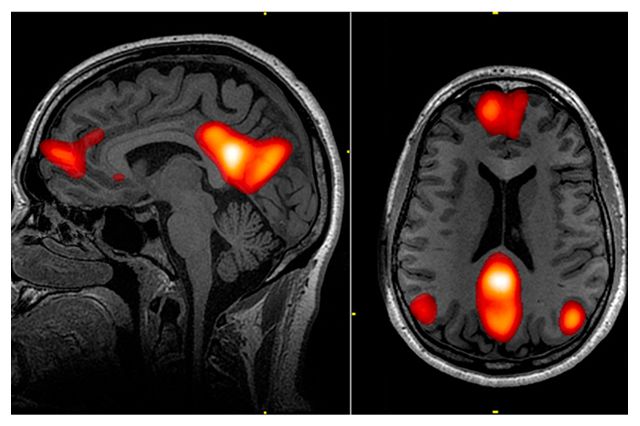

A significant leap forward in multimodal and vision capabilities is the integration of image analysis into the diagnostic workflow. Healthcare is inherently visual: radiology scans, dermatology photos, and pathology slides are as critical as text-based notes. The latest medical AI systems do not just read text; they analyze X-rays and CT scans.

For instance, pilot programs are testing models that analyze a patient’s history (text) alongside their latest MRI (vision) to flag potential anomalies. This convergence challenges the traditional text-only architecture of GPT models, which must now accommodate massive, mixed-media inputs without sacrificing inference speed or accuracy.

The Context Window Challenge

The context window is one of the most critical scaling constraints. A patient with a chronic illness may have thousands of pages of medical records spanning decades, and models with small context windows cannot ingest this long history in a single pass. Advances in long-context processing now allow newer models to ingest entire EHR histories and find the “needle in the haystack”, such as a drug allergy noted ten years ago, that a tired human clinician might miss.
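For models whose window is too small for a full record, a common workaround is to split the record into overlapping chunks and scan each one. The sketch below assumes whitespace-separated words as a crude stand-in for real model tokens, and uses a synthetic record with one allergy note buried among routine visits; `chunk_tokens` is a hypothetical helper, not a library function.

```python
def chunk_tokens(tokens: list[str], max_tokens: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of at most max_tokens, each sharing `overlap` tokens with the previous one."""
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must satisfy 0 <= overlap < max_tokens")
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):
            break  # this window already reaches the end of the record
    return chunks


# Synthetic record: a single allergy note buried in routine follow-ups.
routine = "Routine follow-up, no acute issues. " * 30
record = routine + "2014: penicillin allergy noted (hives). " + routine

# Whitespace splitting is a crude stand-in for a real model tokenizer.
tokens = record.split()
chunks = chunk_tokens(tokens, max_tokens=100, overlap=20)
hits = [i for i, chunk in enumerate(chunks) if any("allergy" in t.lower() for t in chunk)]
print(len(tokens), len(chunks), hits)  # 305 4 [1]
```

The overlap ensures that any fact spanning at most `overlap` tokens appears whole in at least one chunk. A true long-context model avoids this bookkeeping by reading the full record at once.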

Section 2: Technical Deep Dive – Architecture, Fine-Tuning, and RAG

Fine-Tuning vs. Prompt Engineering

The central debate in fine-tuning is how best to adapt LLMs for healthcare. Should hospitals use a massive, frozen model like GPT-4 and rely on prompt engineering, or should they invest in smaller, fine-tuned models? A hybrid approach appears to be emerging. Foundation models provide the reasoning engine, while fine-tuning on medical literature (such as PubMed) or de-identified proprietary hospital data adds a layer of domain specificity that can reduce hallucinations.
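Preparing hospital data for fine-tuning usually means scrubbing identifiers and converting each example into the format the training service expects. The sketch below writes examples in the chat-style JSON Lines format (one `{"messages": [...]}` object per line) used for fine-tuning OpenAI chat models. The two regex patterns, the system prompt, and the sample note are hypothetical and deliberately minimal; they fall far short of real de-identification.

```python
import json
import re

# Toy scrubbing patterns, assumed for illustration. Real de-identification
# (e.g., under HIPAA) covers many more identifier types and needs review.
PATTERNS = [
    (re.compile(r"\b\d{4}-\d{2}-\d{2}\b"), "[DATE]"),
    (re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE), "[MRN]"),
]


def scrub(text: str) -> str:
    """Replace each matched identifier with a placeholder."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


def to_chat_example(note: str, summary: str,
                    system_prompt: str = "You are a cautious clinical summarization assistant.") -> dict:
    """One training example in the chat-style "messages" format."""
    return {"messages": [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": scrub(note)},
        {"role": "assistant", "content": scrub(summary)},
    ]}


def write_jsonl(pairs, path: str) -> None:
    """Write (note, summary) pairs as JSON Lines: one example per line."""
    with open(path, "w", encoding="utf-8") as f:
        for note, summary in pairs:
            f.write(json.dumps(to_chat_example(note, summary)) + "\n")


example = to_chat_example("MRN: 483920 seen 2023-05-14 for persistent cough.",
                          "Persistent cough evaluated on 2023-05-14.")
print(example["messages"][1]["content"])  # [MRN] seen [DATE] for persistent cough.
```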

Training techniques matter as well, particularly Reinforcement Learning from Human Feedback (RLHF) sourced from medical professionals. Generic RLHF optimizes for helpfulness and safety in a broad sense, but medical RLHF prioritizes accuracy, caution, and the admission of ignorance: it teaches the model to say “I don’t know” rather than fabricate a diagnosis.
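Why would a model learn to say “I don’t know”? One way to see it is through the reward it is optimized against. The toy reward below (hypothetical values, not any lab’s actual scheme; real RLHF uses a learned reward model trained on human preference rankings) scores a correct answer at +1, an abstention at 0, and a wrong answer at −2. Under that assumption, answering has expected reward p·1 + (1 − p)·(−2) = 3p − 2, so it only beats abstaining when the model’s chance of being right exceeds 2/3.

```python
REWARD_CORRECT = 1.0
REWARD_ABSTAIN = 0.0
REWARD_WRONG = -2.0  # hypothetical: a confident wrong diagnosis costs more than a correct one earns

ABSTAIN = "I don't know"


def medical_reward(answer: str, correct_answer: str) -> float:
    """Score one answer so that correct > abstain > wrong."""
    answer = answer.strip()
    if answer == ABSTAIN:
        return REWARD_ABSTAIN
    return REWARD_CORRECT if answer.lower() == correct_answer.strip().lower() else REWARD_WRONG


def best_action(p_correct: float) -> str:
    """Under this reward, answering beats abstaining only when its expected reward exceeds 0."""
    expected_answer = p_correct * REWARD_CORRECT + (1 - p_correct) * REWARD_WRONG
    return "answer" if expected_answer > REWARD_ABSTAIN else "abstain"


print(medical_reward("Sarcoidosis", "sarcoidosis"))   # 1.0
print(medical_reward("I don't know", "sarcoidosis"))  # 0.0
print(medical_reward("Lymphoma", "sarcoidosis"))      # -2.0
print(best_action(0.6), best_action(0.8))             # abstain answer
```

Raising the penalty for wrong answers pushes the break-even confidence higher, which is one simple lever for trading helpfulness against caution.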

Retrieval-Augmented Generation (RAG) in Medicine

Among tools and integrations, Retrieval-Augmented Generation (RAG) has become a standard architecture for medical AI. Because medical knowledge changes daily with new research, a static model with a 2023 training cutoff is already falling out of date. RAG lets the model query live medical databases, trusted journals, and internal protocols in real time.
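The core RAG loop is to retrieve the most relevant documents for a question and then place them in the prompt with instructions to answer only from those sources and cite them. The sketch below uses simple term-overlap scoring as a stand-in for BM25 or embedding search, and a placeholder corpus whose IDs and text are hypothetical. The prompt wording is an assumption, not a prescribed template.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Rank documents by occurrences of query terms (a crude stand-in for BM25 or embedding search)."""
    query_terms = set(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        counts = Counter(tokenize(text))
        score = sum(counts[term] for term in query_terms)
        if score > 0:
            scored.append((score, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # highest score first, ties by ID
    return [doc_id for _, doc_id in scored[:k]]


def build_prompt(query: str, corpus: dict[str, str], doc_ids: list[str]) -> str:
    """Ground the model in retrieved text and ask it to cite sources by ID."""
    sources = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in doc_ids)
    return ("Answer using only the sources below and cite each claim by source ID in brackets. "
            "If the sources do not contain the answer, say so.\n\n"
            f"Sources:\n{sources}\n\nQuestion: {query}")


# Placeholder corpus; in practice this would be a live index of journals and protocols.
corpus = {
    "guideline-A": "Hypothetical guideline text on anticoagulation dosing in renal impairment.",
    "protocol-B": "Hypothetical hospital protocol for sepsis screening in the emergency department.",
    "journal-C": "Hypothetical journal abstract on renal dosing of anticoagulants in older adults.",
}
query = "anticoagulant dosing with renal impairment"
top = retrieve(query, corpus)
print(top)  # ['guideline-A', 'journal-C']
print(build_prompt(query, corpus, top))
```

Because the index can be updated independently of the model, new guidance becomes available to the model as soon as it is indexed, with no retraining required.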

This approach mitigates the risk of hallucination, a central safety concern. By grounding the AI’s response in retrieved, citable documents, healthcare providers can verify the source of the AI’s advice. This marks a crucial shift at inference time: the model goes from a “know-it-all” oracle to an intelligent research assistant that cites its sources.
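Citations are only useful if they point to documents the system actually retrieved. A lightweight post-check can compare the bracketed IDs in a model’s answer against the retrieved set and flag anything unverifiable for human review. The bracket-ID citation convention and the sample answer below are assumptions for illustration.

```python
import re


def check_citations(answer: str, retrieved_ids: list[str]) -> dict:
    """Compare bracketed citations in an answer against the IDs that were actually retrieved."""
    cited = set(re.findall(r"\[([^\[\]]+)\]", answer))
    retrieved = set(retrieved_ids)
    return {
        "verified": sorted(cited & retrieved),
        "unknown": sorted(cited - retrieved),  # cited but never retrieved: possible fabrication
        # Flag answers with no verifiable citation or with any unknown one.
        "needs_review": not (cited & retrieved) or bool(cited - retrieved),
    }


answer = "Reduce the dose [guideline-A]; see also [journal-C] and [journal-Z]."
print(check_citations(answer, ["guideline-A", "journal-C"]))
```

Here `journal-Z` was never retrieved, so the answer is flagged even though its other two citations check out.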

Handling Data Privacy and Ethics

Technical implementation cannot be separated from privacy and regulation. Deploying GPT models in healthcare requires strict adherence to HIPAA and GDPR, which makes the choice between cloud and edge deployment critical. Many institutions prefer edge solutions, where inference happens locally on hospital servers rather than sending sensitive patient data to the cloud. This has spurred interest in model compression and quantization, which shrink powerful models so they can run efficiently on secure, on-premise hardware.
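Quantization shrinks a model by storing weights at lower precision. In the simplest symmetric int8 scheme, each tensor gets one scale factor, s = max|w| / 127, and each weight is stored as the integer round(w / s). This cuts weight storage from 4 bytes (float32) to 1 byte per value at the cost of a rounding error of at most s/2. The sketch below shows that arithmetic on a few hypothetical weights. Production toolchains typically use per-channel or group-wise scales, lower bit widths, and calibration data, none of which are shown here.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)                                # [82, -127, 0, 50]
print(max_error <= scale / 2 + 1e-12)   # True: rounding error is at most half a step
```

Note that the small weight 0.003 rounds to zero; losses like this are why aggressive quantization needs careful evaluation before it is used in clinical settings.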

Section 3: Real-World Applications and Implications

Clinical Decision Support Systems (CDSS)

The most transformative application is the next generation of CDSS. Traditional systems were rule-based (if X, then Y); AI-driven systems can handle ambiguity. For example, an ambient-listening assistant might listen to a doctor-patient conversation, transcribe it, extract clinical codes, and suggest a differential diagnosis in real time. This could help reduce physician burnout, a major crisis in modern healthcare.
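To see what “rule-based (if X, then Y)” means in practice, and why it is brittle, consider a classic drug-allergy alert. The rule table below is a hypothetical, deliberately tiny example. The rule fires only on exact names it already knows, so a misspelled or free-text order slips through silently. Handling that kind of ambiguity is the gap that AI-driven systems aim to fill.

```python
# Hypothetical, deliberately tiny rule table: allergy class -> drugs that trigger an alert.
ALLERGY_RULES = {
    "penicillin": {"amoxicillin", "ampicillin", "penicillin v"},
}


def check_order(allergies: list[str], ordered_drug: str) -> list[str]:
    """Classic rule-based CDSS: if the patient has allergy X and the order is in X's class, alert."""
    drug = ordered_drug.strip().lower()
    return [
        f"ALERT: {ordered_drug} conflicts with documented {allergy} allergy"
        for allergy in allergies
        if drug in ALLERGY_RULES.get(allergy.lower(), set())
    ]


print(check_order(["Penicillin"], "Amoxicillin"))  # one alert
print(check_order(["Penicillin"], "Azithromycin"))  # []
print(check_order(["Penicillin"], "Amoxicilin"))    # [] : the misspelling evades the rule
```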

Automating Administrative Burdens

While diagnostics grab the headlines, healthcare administration is where the most immediate return on investment lies. Autonomous agents can handle prior authorization requests, code insurance claims, and manage scheduling. By automating the bureaucratic friction of healthcare, these tools let providers focus on patient care, and hospitals using these LLM-driven workflows have reported significant reductions in administrative overhead.

Education and Simulation

LLMs are also changing how medical students learn. Instead of relying on static textbooks, students can engage with “virtual patients”: GPT-powered avatars that simulate various conditions, react to treatments, and give feedback on the student’s bedside manner. This creates a safe learning sandbox that was previously difficult to scale.

Case Study: The Rare Disease Detective

Consider a patient who presents with a constellation of non-specific symptoms. A physician who sees common conditions the vast majority of the time might attribute them to a common virus. An AI model, however, can cross-reference the symptoms against a large database of rare diseases. There are reported instances in which AI tools flagged genetic disorders that human specialists had missed, simply because the model could draw on a broader range of “long-tail” medical literature instantly.
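The cross-referencing step can be sketched as ranking diseases by how well their known features overlap the patient’s findings. The example below uses Jaccard similarity (shared features divided by all features) over a hypothetical, simplified phenotype table. Real rare-disease tools typically work with structured ontologies such as the Human Phenotype Ontology and richer semantic-similarity measures; this is only a set-overlap stand-in.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the overlap divided by the size of the union (0 = disjoint, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_candidates(patient: set[str], phenotypes: dict[str, set[str]]) -> list[tuple[str, float]]:
    """Score every candidate disease and sort best-first (ties broken alphabetically)."""
    scores = [(name, jaccard(patient, feats)) for name, feats in phenotypes.items()]
    return sorted(scores, key=lambda item: (-item[1], item[0]))


# Hypothetical, simplified phenotype table for illustration only.
phenotypes = {
    "hypothetical disorder A": {"joint hypermobility", "skin hyperextensibility", "easy bruising"},
    "hypothetical disorder B": {"fatigue", "recurrent fever", "rash"},
    "common viral illness": {"fatigue", "fever", "cough"},
}
patient = {"joint hypermobility", "easy bruising", "fatigue"}
ranking = rank_candidates(patient, phenotypes)
for name, score in ranking:
    print(f"{score:.2f}  {name}")
```

The rare candidate scores 0.50 against 0.20 for the common illness, because two of the patient’s three findings match it while “fatigue” alone matches the others.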

Section 4: Challenges, Risks, and Future Outlook

The Bias and Fairness Conundrum

Training data is a critical concern for bias and fairness. If medical AI is trained primarily on data from specific demographics, it may perform poorly for underrepresented groups. Ethicists emphasize the need for diverse datasets to prevent algorithmic discrimination in healthcare outcomes, and developers must rigorously test models across different populations to ensure equity.
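A basic form of testing across populations is to break evaluation results down by subgroup rather than reporting a single overall score. The sketch below computes per-group accuracy on synthetic records and flags any group trailing the best one by more than a chosen gap. The 5-point threshold is an arbitrary assumption; real audits also examine sensitivity, calibration, and statistical significance.

```python
from collections import defaultdict


def accuracy_by_group(records) -> dict[str, float]:
    """records: iterable of (group, prediction, label). Returns {group: accuracy}."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for group, pred, label in records:
        totals[group] += 1
        correct[group] += int(pred == label)
    return {g: correct[g] / totals[g] for g in totals}


def flag_gaps(acc: dict[str, float], max_gap: float = 0.05) -> list[str]:
    """Groups whose accuracy trails the best group by more than max_gap (hypothetical threshold)."""
    best = max(acc.values())
    return sorted(g for g, a in acc.items() if best - a > max_gap)


# Synthetic (group, prediction, label) records for illustration only.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 0, 1), ("B", 0, 0), ("B", 1, 1),
]
acc = accuracy_by_group(records)
print(acc)             # {'A': 1.0, 'B': 0.75}
print(flag_gaps(acc))  # ['B']
```

An overall accuracy of 87.5% would hide the fact that group B is served noticeably worse.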

Hardware and Latency

For AI to be useful in an emergency room, it must be fast: a doctor cannot wait 30 seconds for a response. Latency and throughput requirements therefore drive demand for better hardware and inference engines. The industry is moving toward specialized chips (such as TPUs and LPUs) designed to accelerate transformer models, so that potentially life-saving insights arrive with minimal delay.
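Latency targets are usually stated as tail percentiles (for example p95) rather than averages, because a single slow response is what a clinician actually experiences. The sketch below computes a nearest-rank percentile over hypothetical response times and checks them against an assumed 1,000 ms budget.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_budget(latencies_ms: list[float], budget_ms: float, pct: float = 95) -> bool:
    """True if the chosen tail percentile stays within the latency budget."""
    return percentile(latencies_ms, pct) <= budget_ms


# Hypothetical end-to-end response times in milliseconds.
latencies = [420, 380, 510, 460, 2900, 440, 470, 390, 450, 480]
print(sum(latencies) / len(latencies))   # 690.0: the mean looks fine
print(percentile(latencies, 50))         # 450
print(percentile(latencies, 95))         # 2900
print(meets_budget(latencies, 1000))     # False: the tail breaks the budget
```

The mean of 690 ms sits comfortably under budget, yet one request in ten takes nearly three seconds, which is the kind of delay faster inference hardware is meant to eliminate.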

The “Human-in-the-Loop” Necessity

Despite the progress from GPT-3.5 to GPT-4 and the anticipation surrounding GPT-5, the safety consensus is that AI must remain a co-pilot, not an autopilot. “Automation bias”, where humans blindly trust the computer, is a significant risk. Best practice dictates that AI outputs should always be presented as suggestions requiring human validation, especially in high-stakes fields like oncology or surgery.
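One way to enforce “suggestion, not action” in software is to make clinician sign-off a hard precondition: an AI output cannot flow into downstream systems until a named reviewer approves it. The sketch below shows that gating pattern with a small dataclass. The `Suggestion` class, the `release` function, and the clinician ID are hypothetical names for illustration, not part of any real EHR API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    """An AI output that stays a suggestion until a clinician signs off."""
    text: str
    approved_by: Optional[str] = None

    def approve(self, clinician_id: str) -> None:
        self.approved_by = clinician_id

    @property
    def actionable(self) -> bool:
        return self.approved_by is not None


def release(suggestion: Suggestion) -> str:
    """Refuse to pass an unreviewed suggestion downstream (e.g., into order entry)."""
    if not suggestion.actionable:
        raise PermissionError("AI suggestion requires clinician sign-off before use")
    return suggestion.text


s = Suggestion("Consider a follow-up imaging study.")
try:
    release(s)
except PermissionError as err:
    print("Blocked:", err)
s.approve("clinician-0421")  # hypothetical clinician ID
print("Released:", release(s))
```

Recording who approved each suggestion also leaves an audit trail, which helps when reviewing whether automation bias crept into decisions.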

The Open Source Factor

Open-source models are also altering the landscape. While proprietary models like GPT-4 and Med-Gemini lead the pack, open-source medical models (often built on Meta’s LLaMA architecture) are gaining ground. This democratizes access to medical AI and reshapes the broader ecosystem, allowing researchers in resource-constrained settings to build tools tailored to their specific needs without paying high API fees.

Conclusion

The landscape of GPT in healthcare is shifting rapidly from experimental curiosity to clinical necessity. While generalist models like GPT-4 laid the groundwork, the emergence of specialized competitors and multimodal architectures suggests that the future of medical AI lies in depth, precision, and context. Looking toward GPT-5 and beyond, the focus will likely shift from raw intelligence to trust, integration, and verifiable safety.

For healthcare leaders and developers, the message is clear: the technology is ready, but implementation requires care. By integrating through well-designed APIs, adhering to ethical guidelines for safety, and optimizing for efficiency, the medical community can harness these powerful tools to enhance human capability rather than replace it. The ultimate winner in this technological arms race will not be a specific company or model, but the patients who benefit from faster, more accurate, and more accessible healthcare.