We Can Finally Run GPT-4V Class Models Locally (And It’s About Time)

I stopped paying for my cloud-based vision API subscription last week. It wasn’t a protest. It wasn’t about privacy, though that’s a nice bonus. It was simply because my phone can now do the same job faster, for free, without needing a single bar of signal.

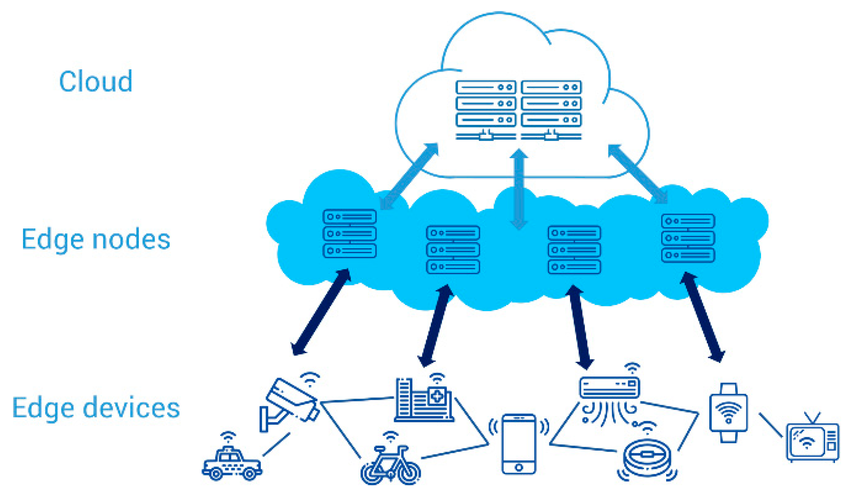

For the last two years, “Edge AI” has been this perpetually over-promised concept. We were told our fridges and watches would be smart, but in reality, we got stripped-down, lobotomized versions of models that hallucinated if you looked at them wrong. If you wanted real multimodal capabilities—understanding images, video, and complex context—you had to send your data to a server farm in Virginia and wait for the latency gods to smile upon you.

That era ended. Or at least, the door just slammed shut on it.

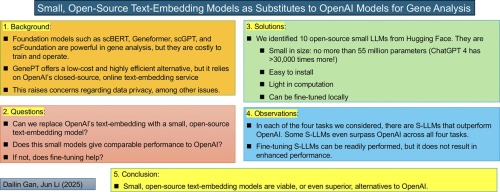

With the recent publication in Nature Communications detailing efficient multimodal LLMs for edge deployment, specifically regarding the MiniCPM-V architecture, the academic seal of approval has finally caught up to what the open-source community has been hacking together for months. We aren’t just talking about text anymore. We are talking about GPT-4V levels of visual understanding running on consumer hardware.

I’ve been testing these local multimodal models on a few devices—a high-end Android phone and a customized edge box I use for home automation. The difference is night and day compared to where we were in early 2025.

The “Toy Model” Myth is Dead

Here’s the thing that bugged me for ages. Whenever someone mentioned “local LLMs” or “mobile inference,” the immediate assumption was that you were compromising. You were trading intelligence for portability. You’d get a model that could maybe tell you a cat was in a picture, but ask it why the cat looked angry, and it would fall apart.

That trade-off is vanishing. The new architecture discussed in the recent paper—and deployed in the MiniCPM-V series—uses a much smarter way of stitching the visual encoder to the language model. Instead of just shoving image tokens into the context window and hoping the LLM doesn’t choke, they’ve optimized the cross-modal alignment.

Basically? It’s smarter about how it looks at pixels.

I ran a test yesterday. I pointed my phone at a messy circuit board I was debugging. Usually, I’d upload this to a frontier model in the cloud. This time, I let the local model handle it. It identified the blown capacitor and the specific trace that was corroded. No internet. No API cost. It just worked.

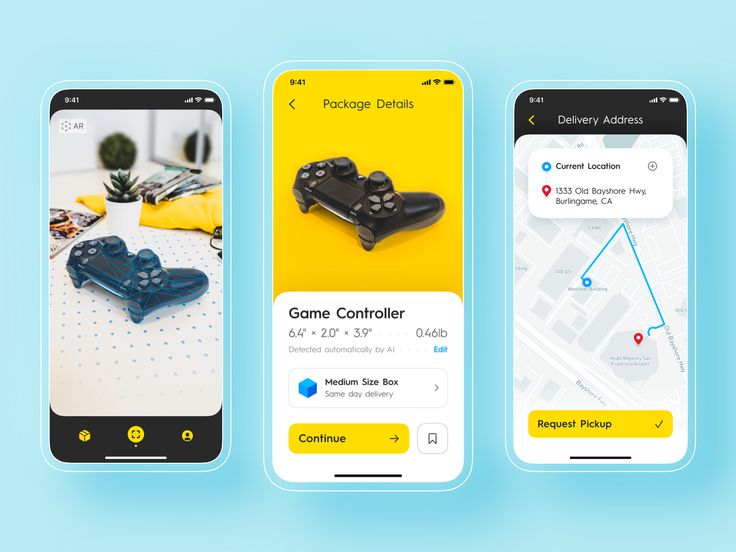

And I’m not the only one noticing this. The download numbers for these edge-optimized models have crossed 10 million. That’s not just researchers tinkering; that’s developers shoving these things into apps because they realize the cloud bill is the only thing stopping them from scaling.

Why “Efficient” is the Only Metric That Matters

Let’s get technical for a second (but I promise I won’t bore you with math). The bottleneck for edge AI has never really been compute—our phones have insane NPUs now. The bottleneck was memory bandwidth and energy consumption.

If running a query drains 5% of my battery, I’m not using it. Period.

The breakthrough here—and what the Nature publication validates—is the efficiency curve. We are seeing models that achieve high-resolution visual understanding while keeping the parameter count low enough to sit in RAM without killing the OS background processes.

I’ve seen plenty of “efficient” models before that were just aggressively quantized garbage. They were small, sure. But they were also stupid. The new approach preserves the reasoning capabilities of the larger parameter models by using high-quality data density during pre-training. They aren’t just shrinking the model; they are training it better from the start.

My Setup

For anyone curious, here is what I’m running right now to test this:

- Hardware: A standard flagship smartphone (2025 model) with 16GB RAM.

- Model: MiniCPM-V 2.6 (int4 quantized).

- Use Case: Real-time OCR and scene description for my visually impaired father.

The latency is under 500ms for a full description. A year ago, doing this locally took 4-5 seconds and heated the phone up enough to fry an egg.

The Privacy Argument Finally Holds Water

I’ve always been skeptical of the “privacy first” marketing pitch because, usually, privacy-first meant “feature-last.” But now that the performance parity is here, the privacy argument is actually compelling.

If I’m analyzing medical documents, personal photos, or proprietary code snippets from a whiteboard, I don’t want that leaving my device. With these new edge MLLMs, it doesn’t have to. The data stays on the silicon in my pocket.

This is huge for enterprise. I know a couple of CTOs who have banned cloud-based visual AI tools because of data leakage fears. They are now looking at deploying these efficient edge models on company tablets. The data never hits the public internet. That’s the kind of security posture that gets signed off on immediately.

What’s Next? (Looking at 2027)

So, we are in January 2026, and my phone is as smart as a 2024 server farm. Where does this go?

I suspect by early 2027, we’re going to see “hybrid inference” become the default in OS design. Your phone will handle 95% of queries locally—identifying objects, summarizing emails, sorting photos. It will only ping the cloud when you ask something that requires massive world knowledge or up-to-the-second news retrieval.

We’re also going to see a massive shift in robotics. If a $500 robot dog can have GPT-4V level vision running on an onboard chip, it can navigate a cluttered house without needing a constant Wi-Fi tether. That changes the utility of home robotics from “cute gimmick” to “actually useful.”

The Bottom Line

It’s easy to get jaded by AI news. Every week someone claims they’ve revolutionized something. But this shift to efficient, high-performance edge multimodal models is different. It’s tangible. You can download it today. You can run it on the hardware you already own.

The cloud isn’t going away, but for the first time, it’s not the default requirement for intelligence. We’ve cut the cord.