The Next Leap for AI: Unpacking OpenAI’s Game-Changing GPT Updates, Function Calling, and the Future of Intelligent Applications

A Paradigm Shift in AI Development: Decoding the Latest OpenAI GPT News

The world of artificial intelligence is in a constant state of rapid evolution, but certain moments mark a fundamental shift in what’s possible. We are currently in the midst of one such moment. Recent announcements from OpenAI have introduced a suite of powerful updates that are not merely incremental improvements but a foundational leap forward for developers, businesses, and the entire AI ecosystem. This wave of innovation brings more intelligent GPT models, a groundbreaking capability known as “function calling,” vastly expanded context windows, and dramatic cost reductions that democratize access to cutting-edge technology.

This article provides a comprehensive technical deep dive into these transformative updates. We will move beyond the headlines to explore the mechanics of function calling, analyze the specifications of the new models, and unpack the profound implications for everything from autonomous AI agents to enterprise-level applications. For developers, this is a roadmap to harnessing new powers; for business leaders, it’s a glimpse into the future of automated workflows and intelligent services. The trend is a clear move from conversational AI to actionable, integrated AI, and these updates are the engine driving that transition.

Decoding the Announcements: What’s New and Why It Matters

The latest batch of updates from OpenAI is a multi-faceted release that addresses power, accessibility, and cost simultaneously. Each component is significant on its own, but together, they create a synergistic effect that will unlock a new generation of applications. Let’s break down the core components of this major OpenAI GPT News release.

Function Calling: The API Gateway to the Real World

The most significant development is the introduction of native function calling capabilities in the GPT APIs. This is the core technology that powered the much-discussed ChatGPT Plugins, now made available to the entire developer community. In essence, function calling allows developers to describe their own functions (e.g., custom code, internal APIs, third-party services) to the model. The model can then intelligently determine when and how to use these functions, outputting a structured JSON object with the necessary arguments to execute the code. This is a monumental step up from previous methods that relied on brittle prompt engineering to coax structured data from the model. It provides a reliable, robust, and scalable way for AI to interact with external systems, transforming it from a text generator into a true reasoning engine that can take action. This is a pivotal development for anyone building interactive applications.

Model Upgrades: Smarter, Steerable, and More Expansive

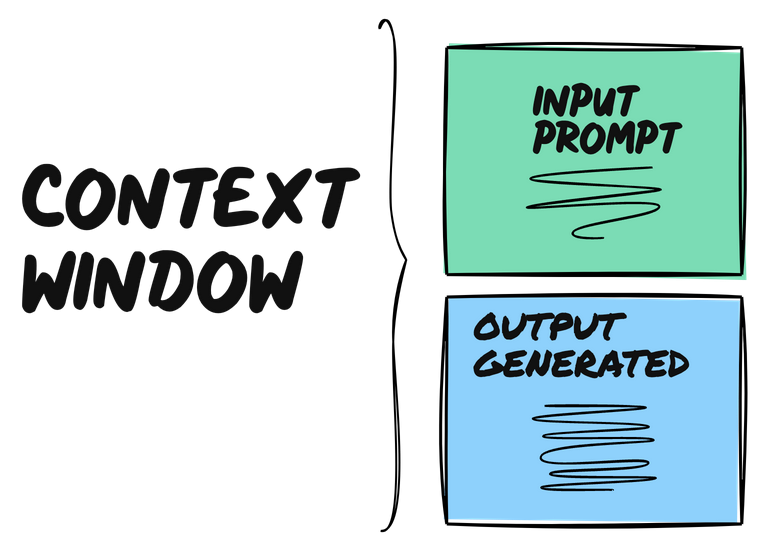

Underpinning these new capabilities are updated models. The new gpt-4-0613 and gpt-3.5-turbo-0613 have been specifically fine-tuned to excel at function calling, demonstrating higher accuracy and reliability in generating the correct JSON outputs. Perhaps even more impactful is the introduction of gpt-3.5-turbo-16k. This model quadruples the context window of its predecessor from 4,000 tokens to 16,000 tokens. This expansion means the model can process and reason over approximately 20 single-spaced pages of text in a single request. This is a game-changer for tasks involving long documents, extensive conversation histories, or complex codebases.

Economic Accessibility: Drastic Cost Reductions

Innovation is often constrained by cost. OpenAI has addressed this head-on with significant price reductions. The state-of-the-art text-embedding-ada-002 model, crucial for semantic search and retrieval-augmented generation (RAG), has seen a 75% price drop. Furthermore, the input token cost for the highly popular gpt-3.5-turbo has been reduced by 25%. These reductions dramatically lower the barrier to entry for startups and individual developers, making it economically viable to build more complex, data-intensive, and scalable applications. This move is set to accelerate growth across the GPT ecosystem, fostering wider adoption and experimentation.
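To make the percentages concrete, here is a small arithmetic sketch. The baseline price and the monthly token volume are illustrative placeholders, not official figures; only the 75% reduction comes from the announcement described above.

```python
def discounted_price(old_price_per_1k, percent_reduction):
    """Return the price per 1K tokens after a percentage reduction."""
    return old_price_per_1k * (1 - percent_reduction / 100)


def cost(tokens, price_per_1k):
    """Return the total cost of processing `tokens` at a per-1K-token price."""
    return tokens / 1000 * price_per_1k


# Hypothetical numbers for illustration only; check the current pricing page.
BASELINE_PRICE_PER_1K = 0.0004   # assumed pre-reduction embedding price (USD)
MONTHLY_TOKENS = 10_000_000      # assumed monthly embedding volume

before = cost(MONTHLY_TOKENS, BASELINE_PRICE_PER_1K)
after = cost(MONTHLY_TOKENS, discounted_price(BASELINE_PRICE_PER_1K, 75))
print(f"before: ${before:.2f}, after: ${after:.2f}")
```

Under these assumed numbers, a 75% reduction cuts the bill to a quarter of its previous value, which is what makes large embedding workloads for semantic search more affordable.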

Function Calling in Practice: A Technical Breakdown

To truly appreciate the power of function calling, it’s essential to understand how it works under the hood. It’s an elegant, multi-step process that bridges the gap between natural language intent and programmatic execution. This capability is central to the updated GPT APIs and is the key to building sophisticated GPT agents.

The Three-Step Dance: Define, Respond, Execute

The interaction between a developer’s application and the GPT model follows a clear, structured pattern:

1. Define Your Tools: The developer first defines a list of available functions in the initial API call. This is done by providing a JSON schema for each function that includes its name, a clear description of what it does, and the parameters it accepts (along with their types and descriptions). The quality of these descriptions is critical, as the model uses this metadata to decide which tool to use.

2. The Model’s Intelligent Request: When the user provides a prompt, the model analyzes it against the provided function definitions. If it determines that a function is needed to fulfill the request, it doesn’t generate a standard text response. Instead, it returns a structured object containing the name of the function to call and the arguments to use, extracted from the user’s query and encoded as a JSON string.

3. Execute and Synthesize: The developer’s application code receives this JSON object, parses it, and executes the specified local function with the provided arguments. This could involve calling a weather API, querying a customer database, or sending an email. The result of this function call (e.g., the weather data, the customer record) is then sent back to the model in a new API call. The model receives this new information and uses it to generate a final, natural-language response for the end-user.
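The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: `get_current_weather`, its schema, and the canned return value are hypothetical, and the model's reply is simulated with a hard-coded dictionary so the example runs without an API key.

```python
import json

# Step 1: describe the tool with a JSON schema (name, description, parameters).
# get_current_weather is a hypothetical function used only for illustration.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name, e.g. Paris"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    }
]


def get_current_weather(city, unit="celsius"):
    # Stand-in for a real weather API call; returns canned data.
    return {"city": city, "temperature": 21, "unit": unit}


# Local registry mapping function names to Python callables.
REGISTRY = {"get_current_weather": get_current_weather}


def execute_function_call(function_call):
    """Step 3: parse the model's request and run the matching local function."""
    name = function_call["name"]
    if name not in REGISTRY:
        raise ValueError(f"Unknown function: {name}")
    # The arguments arrive as a JSON-encoded string, not as a dict.
    arguments = json.loads(function_call["arguments"])
    return REGISTRY[name](**arguments)


# Step 2 (simulated): the shape of the request the model might return.
simulated_function_call = {
    "name": "get_current_weather",
    "arguments": "{\"city\": \"Paris\"}",
}

result = execute_function_call(simulated_function_call)
print(json.dumps(result))
```

In a real application, `FUNCTIONS` would be sent along with the user's messages, and `result` would be passed back to the model in a follow-up request so it can phrase the final answer.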

Real-World Scenario: Building a Smart Customer Support Chatbot

Imagine a customer support chatbot for an e-commerce company. Without function calling, the bot can only answer general questions based on its training data. With function calling, it becomes a powerful, active assistant. Let’s see how:

- Functions Defined: The developer defines two functions: `getOrderStatus(order_id: string)` and `initiateReturn(order_id: string, reason: string)`.

- User Prompt: A user types, “Hi, I’d like to check on my order #ABC-123. It hasn’t arrived yet.”

- Model’s Response: The model recognizes the intent and the order ID. It responds to the API with: `{"name": "getOrderStatus", "arguments": "{\"order_id\": \"ABC-123\"}"}`.

- Application Logic: The application server receives this, calls its internal order management API with the ID “ABC-123”, and gets back a status like “Shipped, estimated delivery: 2 days”.

- Final Synthesis: The application sends this status back to the model. The model then generates the final user-facing message: “I’ve checked on your order #ABC-123. It has been shipped and is expected to arrive in 2 days.”

This chatbot example showcases how the model can now reliably interact with proprietary, real-time data, dramatically increasing its utility in real-world business applications.
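The "Application Logic" and "Final Synthesis" steps of this scenario might look roughly like the sketch below. The `get_order_status` body is a hypothetical stand-in for the internal order management API, and the message layout (an assistant message carrying `function_call`, followed by a message with role `function`) reflects the chat format as understood at the time of these updates; treat it as an assumption and check the current API reference before relying on it.

```python
import json


def get_order_status(order_id):
    # Hypothetical stand-in for the internal order management API.
    return {"order_id": order_id, "status": "Shipped", "estimated_delivery_days": 2}


def build_followup_messages(user_prompt, function_call):
    """Run the requested function and build the messages for the second API call."""
    if function_call["name"] != "getOrderStatus":
        raise ValueError(f"Unsupported function: {function_call['name']}")
    args = json.loads(function_call["arguments"])
    result = get_order_status(args["order_id"])
    return [
        {"role": "user", "content": user_prompt},
        # Echo the model's own request so it sees the full exchange.
        {"role": "assistant", "content": None, "function_call": function_call},
        # The function result is passed back as a JSON string.
        {"role": "function", "name": function_call["name"], "content": json.dumps(result)},
    ]


model_request = {"name": "getOrderStatus", "arguments": "{\"order_id\": \"ABC-123\"}"}
messages = build_followup_messages(
    "Hi, I'd like to check on my order #ABC-123. It hasn't arrived yet.",
    model_request,
)
print(json.dumps(messages, indent=2))
```

Sending `messages` back to the model is what lets it turn the raw status record into the friendly sentence the customer finally sees.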

Beyond the Code: The Transformative Impact of These Updates

The implications of these updates extend far beyond simple API enhancements. They represent a fundamental shift in AI architecture and capability, paving the way for new categories of applications and reshaping how we think about human-computer interaction. The convergence of better models, tool use, and larger context windows is a catalyst for widespread disruption.

The Rise of Autonomous GPT Agents

Function calling is the bedrock upon which more autonomous AI agents will be built. An agent can now be given a high-level goal and a set of tools (functions). It can then reason, create a multi-step plan, and execute that plan by calling functions in sequence, using the output of one step as the input for the next. For example, a marketing agent could be tasked with “analyzing last week’s campaign performance and drafting a summary email.” It could use functions to pull data from Google Analytics, fetch ad spend from a different API, analyze the sentiment of social media comments, and finally, use a `sendEmail` function to deliver the synthesized report. This is a crucial development for agent research and points toward a future of sophisticated, goal-oriented AI assistants.
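The control flow of such an agent can be sketched as a simple loop: ask the model what to do, run the requested function, append the result, and repeat until the model answers in plain text. Everything below is hypothetical scaffolding: the two tools return canned data, and `scripted_model` is a stand-in that imitates the model's decisions so the loop can run offline.

```python
import json


def fetch_campaign_metrics(week):
    # Hypothetical tool: would normally query an analytics API.
    return {"week": week, "clicks": 1200, "spend": 300.0}


def send_email(to, body):
    # Hypothetical tool: would normally send a real email.
    return {"sent": True, "to": to}


TOOLS = {"fetch_campaign_metrics": fetch_campaign_metrics, "send_email": send_email}


def scripted_model(messages):
    """Stand-in for the model: requests one tool per step, then finishes."""
    results = [m for m in messages if m["role"] == "function"]
    if len(results) == 0:
        args = {"week": "last"}
        return {"function_call": {"name": "fetch_campaign_metrics", "arguments": json.dumps(args)}}
    if len(results) == 1:
        # Use the output of the first step as input to the second.
        metrics = json.loads(results[0]["content"])
        body = f"Clicks: {metrics['clicks']}, spend: {metrics['spend']}"
        args = {"to": "team@example.com", "body": body}
        return {"function_call": {"name": "send_email", "arguments": json.dumps(args)}}
    return {"content": "Report sent."}


def run_agent(goal, model, max_steps=5):
    """Loop until the model stops requesting functions or max_steps is reached."""
    messages = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        reply = model(messages)
        call = reply.get("function_call")
        if call is None:
            return reply["content"], messages
        result = TOOLS[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "assistant", "content": None, "function_call": call})
        messages.append({"role": "function", "name": call["name"], "content": json.dumps(result)})
    raise RuntimeError("Agent did not finish within max_steps")


final_answer, history = run_agent("Summarize last week's campaign and email it.", scripted_model)
print(final_answer)
```

The `max_steps` cap is a deliberate design choice: an agent that can call tools repeatedly needs a hard limit so a confused plan cannot loop forever.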

Democratizing Advanced AI Applications Across Industries

The combination of the 16k context window and lower costs unlocks use cases that were previously impractical. In legal tech, a contract of roughly 20 pages can now be loaded into the context in a single request for analysis, summarization, or risk identification. In healthcare, a model can be given a patient’s extensive medical history to identify potential drug interactions or suggest diagnoses. In finance, lengthy quarterly reports can be processed to extract key financial metrics and sentiment. This enhanced capacity for long-form content analysis will accelerate AI adoption in knowledge-intensive professional fields.

A Glimpse into Future GPT Architecture

These updates offer clues about the direction of future AI development, including the much-anticipated GPT-5. The focus is clearly shifting from models that are simply masters of text to models that are masters of tool use. This suggests that future training techniques will likely involve training models not just on vast datasets of text and code, but also on countless examples of successful tool execution. This evolution in GPT architecture is moving us closer to systems that can reason, plan, and act reliably in the digital world, blurring the lines between language model and operating system.

Navigating the New Landscape: Best Practices and Considerations

Harnessing these new capabilities effectively requires a thoughtful approach. While the potential is immense, developers must also be mindful of the challenges and responsibilities that come with building more powerful and autonomous systems.

Best Practices for Implementing Function Calling

- Write Descriptive and Unambiguous Functions: The model’s ability to use your tools correctly is directly proportional to how well you describe them. Use clear, concise names and detailed descriptions for both the function and its parameters. Think of it as writing documentation for an AI developer.

- Implement Robust Error Handling: The model can occasionally hallucinate arguments or attempt to call a function in an inappropriate context. Your application code must validate all inputs from the model before execution to prevent errors or unexpected behavior.

- Prioritize Security and Safety: This is paramount. The model suggests actions, but your code executes them. Never execute code received directly from the model. Sanitize all arguments and ensure the functions have the minimum necessary permissions. This is critical for both safety and privacy, as a poorly secured function could expose sensitive data or systems.
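As one way to put the validation and security advice above into practice, the sketch below checks a model-issued function call before anything is executed. The allow-list, the single `order_id` parameter, and the ID pattern (modeled on the “ABC-123” example) are assumptions made for illustration; a real application would encode its own rules.

```python
import json
import re

# Hypothetical ID format, modeled on the "ABC-123" example in this article.
ORDER_ID_PATTERN = re.compile(r"[A-Z]{3}-\d{3}")


def validate_order_call(function_call, allowed=("getOrderStatus",)):
    """Return parsed arguments if the call is safe to execute, else raise ValueError."""
    name = function_call.get("name")
    if name not in allowed:
        raise ValueError(f"Function not allowed: {name!r}")
    try:
        args = json.loads(function_call.get("arguments"))
    except (json.JSONDecodeError, TypeError) as exc:
        raise ValueError("Arguments are not valid JSON") from exc
    if not isinstance(args, dict):
        raise ValueError("Arguments must be a JSON object")
    # Reject missing or unexpected (possibly hallucinated) parameters.
    if set(args) != {"order_id"}:
        raise ValueError(f"Unexpected parameters: {sorted(args)}")
    order_id = args["order_id"]
    if not isinstance(order_id, str) or not ORDER_ID_PATTERN.fullmatch(order_id):
        raise ValueError("order_id has an invalid format")
    return args


good = {"name": "getOrderStatus", "arguments": "{\"order_id\": \"ABC-123\"}"}
print(validate_order_call(good))
```

Rejecting anything that is not explicitly expected, rather than trying to clean up bad input, keeps the model in the role of suggesting actions while the application decides what actually runs.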

Choosing the Right Model for the Job

With an expanded roster of models, selection becomes a key strategic decision. Here’s a simple guide:

- gpt-4-0613: The top choice for complex reasoning, high-stakes tasks, and applications requiring the highest degree of reliability in function calling.

- gpt-3.5-turbo-16k: The go-to for processing, summarizing, or querying long documents, codebases, or conversation histories where context is king.

- gpt-3.5-turbo-0613: Ideal for applications that require a balance of speed, cost-effectiveness, and strong capability, such as general-purpose chatbots and content creation tasks.

Analyzing benchmark results and your specific application’s needs regarding latency and throughput will be crucial for making the right choice and optimizing your deployment.
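The guide above could be turned into a rough selection helper like the one below. It is only a heuristic sketch: the four-characters-per-token estimate is a crude rule of thumb rather than a real tokenizer, the 4,000 and 16,000 token limits are the rounded figures quoted in this article, the `reply_budget` default is arbitrary, and the helper does not check context limits for gpt-4-0613.

```python
def estimate_tokens(text, chars_per_token=4):
    """Crude token estimate (assumed ~4 characters per token); not a tokenizer."""
    return -(-len(text) // chars_per_token)  # ceiling division


def choose_model(prompt_text, needs_complex_reasoning=False, reply_budget=500):
    """Pick a model name using the rough guide from this section."""
    if needs_complex_reasoning:
        # Context limits for this model are not checked in this sketch.
        return "gpt-4-0613"
    needed = estimate_tokens(prompt_text) + reply_budget
    if needed <= 4000:
        return "gpt-3.5-turbo-0613"
    if needed <= 16000:
        return "gpt-3.5-turbo-16k"
    raise ValueError("Input is likely too long; split or summarize it first")


print(choose_model("What is the status of my order?"))
print(choose_model("x" * 40_000))
```

A production system would replace the estimate with an actual token count and would also weigh latency and cost, but the basic shape of the decision stays the same.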

Conclusion: A New Chapter in Applied AI

The latest updates from OpenAI represent far more than just a new feature list; they signify a new chapter in the story of applied artificial intelligence. The introduction of function calling fundamentally changes the role of large language models, elevating them from sophisticated text predictors to active participants in digital workflows. By giving models the ability to interact with the world through developer-defined tools, we are unlocking a vast and previously inaccessible frontier of possibilities.

When combined with more powerful models, quadrupled context windows, and lower costs, the impact is magnified. These changes will accelerate the development of everything from smarter personal assistants to fully autonomous business process agents. For developers and businesses, the message is clear: the tools to build the next generation of truly intelligent, integrated, and actionable AI applications are now in your hands. The future of AI is not just about conversation; it’s about capability, and that future has just arrived.