Beyond Text: Unpacking the Latest GPT Vision News and the Future of Multimodal AI

The Visual Revolution: How GPT Vision is Reshaping Our Interaction with AI

For years, the frontier of artificial intelligence was largely defined by text. We marveled at large language models (LLMs) that could write poetry, debug code, and converse with human-like fluency. However, a profound shift is underway, moving AI from a purely linguistic realm into a rich, multimodal world where it can see, interpret, and understand visual data. This evolution is spearheaded by advancements in GPT Vision technology. The latest GPT Vision News isn’t just about AI identifying objects in a picture; it’s about creating a new paradigm of human-computer interaction where visual context and understanding are paramount. This article provides a comprehensive deep dive into the latest developments, exploring the underlying architecture, transformative real-world applications, technical challenges, and the exciting future that lies ahead. From healthcare to content creation, the ability of models to process visual information is unlocking unprecedented opportunities and forcing us to rethink the very definition of an intelligent system.

Section 1: The Architecture and Evolution of GPT Vision

The journey from text-only models to visually-aware AI is a story of architectural innovation and exponential scaling. Understanding how these systems work reveals the clever fusion of two distinct fields of AI research: natural language processing and computer vision. The latest OpenAI GPT News highlights how this integration is becoming increasingly seamless and powerful.

From Pixels to Prompts: How GPT Vision Works

At its core, a multimodal model like GPT-4 with Vision (GPT-4V) combines a powerful LLM with a vision encoder. OpenAI has not published GPT-4V’s exact architecture, but models of this kind typically work as follows. The process begins when a user provides an image. A vision encoder, often a variant of the Vision Transformer (ViT) architecture, divides the image into a grid of small, fixed-size patches, much as text is broken into tokens. Each patch is then converted into a numerical representation, or “embedding,” that captures its visual features. These image embeddings are projected into the same dimensional space as the text embeddings from the user’s prompt. This shared space is the critical “meeting point” where the model learns the relationship between visual concepts and words. The combined sequence of image and text tokens is then fed into the main transformer-based LLM, which processes the entire input to generate a coherent, context-aware response. The latest GPT Architecture News focuses on making this integration more efficient, allowing for faster and more nuanced visual reasoning.
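The patch-and-project pipeline described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not GPT-4V’s actual implementation: the function names (`patchify`, `image_to_llm_tokens`) are hypothetical, the image is a nested list of RGB values, and random weights stand in for the learned vision encoder and projection layer. A real ViT would also add position embeddings and run several transformer layers before the projection step.

```python
import random

def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Patches are read row by row (raster order), the ordering ViT-style encoders use.
    """
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0, "image must tile evenly"
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])  # append every channel value of this pixel
            patches.append(flat)
    return patches

def linear(vector, weights, bias):
    """Compute weights @ vector + bias for a single vector (weights is out_dim x in_dim)."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]

def random_matrix(rows, cols, rng):
    """Small random weights standing in for parameters a real model would learn."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

def image_to_llm_tokens(image, patch_size, vision_dim, text_dim, seed=0):
    """Turn an image into a list of vectors in the LLM's text-embedding space (hypothetical)."""
    rng = random.Random(seed)
    patches = patchify(image, patch_size)
    patch_dim = len(patches[0])
    # 1) Vision-encoder stand-in: one linear embedding per patch.
    w_embed, b_embed = random_matrix(vision_dim, patch_dim, rng), [0.0] * vision_dim
    # 2) Projector: map vision embeddings to the width of the LLM's text embeddings.
    w_proj, b_proj = random_matrix(text_dim, vision_dim, rng), [0.0] * text_dim
    return [linear(linear(p, w_embed, b_embed), w_proj, b_proj) for p in patches]

if __name__ == "__main__":
    image = [[[0.5, 0.5, 0.5] for _ in range(32)] for _ in range(32)]  # 32x32 gray RGB image
    image_tokens = image_to_llm_tokens(image, patch_size=16, vision_dim=64, text_dim=128)
    text_tokens = [[0.0] * 128 for _ in range(5)]  # placeholder embeddings for a 5-token prompt
    sequence = image_tokens + text_tokens          # one combined sequence for the LLM
    print(len(image_tokens), len(image_tokens[0]), len(sequence))  # prints: 4 128 9
```

A 32x32 image with 16-pixel patches yields four patch tokens, each projected to the (hypothetical) 128-dimensional embedding width, so they can sit in the same sequence as the prompt’s text embeddings.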

Key Milestones in GPT Vision Development

The evolution of GPT Vision has been rapid. Early attempts at multimodal AI were often limited to simple image captioning. However, the release of GPT-4 marked a significant leap, introducing a model that could perform complex visual reasoning. It could not only describe what was in an image but also explain why a meme was funny, translate handwritten notes into digital text, or identify the ingredients in a photo of a meal and suggest a recipe. The more recent GPT-4 News, particularly around models like GPT-4o, showcases the next frontier: real-time, low-latency visual interaction. These models can process a live video feed from a camera, responding to visual cues and environmental changes almost instantaneously. This progression from static image analysis to dynamic video understanding represents a monumental step towards creating truly interactive AI assistants. As we look toward the future, speculative GPT-5 News suggests that these capabilities will only deepen, potentially enabling AI to understand and interact with complex 3D environments.

Section 2: Real-World Applications and Industry Transformation

The theoretical advancements in GPT Vision are translating into tangible, high-impact applications across a multitude of industries. As developers gain access to more powerful multimodal capabilities through APIs, we are witnessing a Cambrian explosion of innovative tools and services. The latest GPT Applications News is filled with examples of how this technology is solving real-world problems and creating new efficiencies.

Transforming Industries with AI Sight

GPT Vision is not a one-size-fits-all solution; its power lies in its adaptability to specific industry needs. Here are a few key sectors being reshaped:

- Healthcare: According to recent GPT in Healthcare News, vision models are being trained to analyze medical imagery like X-rays, MRIs, and retinal scans to identify anomalies, potentially assisting radiologists in making faster and more accurate diagnoses. Another powerful application is accessibility; these models can power applications that describe the world to visually impaired individuals in real time.

- Education: The GPT in Education News highlights tools that can transform learning. A student can take a picture of a complex biological diagram or a historical map, and the AI can provide a detailed, step-by-step explanation. It can also solve handwritten math problems, showing the student the process, not just the answer.

- Marketing and E-commerce: In marketing, vision models analyze user-generated content on social media to gauge brand perception and identify trends. The GPT in Marketing News reports on e-commerce platforms using this tech to automatically generate rich, SEO-friendly product descriptions and alt-text directly from product images, saving countless hours of manual work.

- Content Creation: For creators, this technology is a game-changer. As reported in GPT in Content Creation News, artists can upload a sketch and get creative suggestions, while video editors can get automatic scene descriptions and content moderation flags.

Practical Examples in Action

To make this more concrete, consider these scenarios:

Case Study 1: The Code Assistant. A developer is struggling with a complex user interface layout. Instead of describing the problem in text, they take a screenshot of their app and their design mockup. They upload both images and ask, “What CSS adjustments do I need to make the UI on the left look like the design on the right?” The vision model compares the two images, identifies the visual discrepancies, and proposes the CSS changes needed to close the gap, which the developer can then test and refine. This is a prime example highlighted in GPT Code Models News.

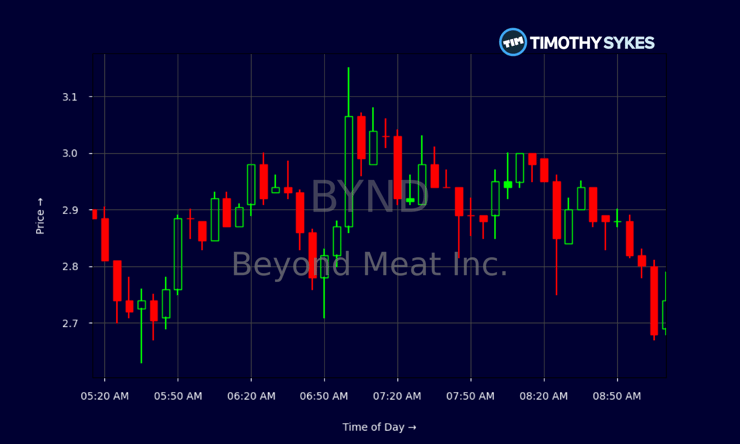
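For developers, the Code Assistant scenario maps onto a single multimodal request. The sketch below assumes OpenAI’s Chat Completions message format, in which a user message’s `content` is a list of `text` and `image_url` parts and local images are passed as base64 data URLs; the helper names and the `gpt-4o` default are illustrative choices, not requirements. It only builds the request body, so it runs without network access or an API key.

```python
import base64
import json

def image_part(png_bytes):
    """Wrap raw PNG bytes as an image content part using a base64 data URL."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{encoded}"}}

def build_ui_diff_request(screenshot_png, mockup_png, model="gpt-4o"):
    """Build a Chat Completions request body asking for CSS fixes between two images."""
    question = (
        "What CSS adjustments do I need to make the UI in the first image "
        "look like the design in the second image?"
    )
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    image_part(screenshot_png),  # current app screenshot
                    image_part(mockup_png),      # target design mockup
                ],
            }
        ],
    }

if __name__ == "__main__":
    # Placeholder bytes; in practice use open("screenshot.png", "rb").read(), etc.
    body = build_ui_diff_request(b"fake-screenshot", b"fake-mockup")
    print(json.dumps(body)[:120], "...")
```

With the official `openai` Python package installed, the body could then be sent with `OpenAI().chat.completions.create(**body)`. Because the images arrive as separate content parts rather than side by side, the prompt refers to them as “first” and “second” instead of “left” and “right.”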

Case Study 2: The Financial Analyst. An analyst is presented with a dozen complex financial charts in a PDF report. Instead of manually interpreting each one, they feed the document to a vision-enabled AI. Their prompt: “Summarize the key trends from these charts, identify any anomalies, and explain the Q3 revenue performance based on the bar chart on page 5.” The AI extracts the visual data, synthesizes the information, and provides a concise, data-driven summary, a trend covered in GPT in Finance News.

Section 3: Technical Deep Dive: Performance, Optimization, and Challenges

While the applications of GPT Vision are impressive, deploying these models effectively requires a deep understanding of their performance characteristics, optimization techniques, and inherent limitations. The latest GPT Research News and GPT Benchmark News provide crucial insights for developers and businesses looking to leverage this technology responsibly and efficiently.

Benchmarking Performance: Speed, Accuracy, and Cost

The performance of a vision model is a multi-faceted issue. Key metrics include:

- Latency: How quickly does the model respond after receiving an image? For real-time applications like interactive assistants, low latency is critical. The GPT Latency & Throughput News shows a major push by providers like OpenAI to reduce this delay to a few hundred milliseconds.

- Accuracy: How correctly does the model interpret the visual data? This is measured against standardized benchmarks like VQA (Visual Question Answering) and object recognition datasets.

- Cost: Images are processed as tokens, and a single high-resolution image can consume hundreds of tokens before the model reads a word of the prompt. The cost per API call is therefore a significant factor for businesses looking to scale their applications.
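Accuracy on VQA-style benchmarks is usually scored softly, because several human annotators answer each question (ten per question in the VQA dataset) and they do not always agree. The sketch below follows that scoring rule: an answer earns min(matches / 3, 1), averaged over every leave-one-out subset of the annotators. It assumes simplified answer normalization (lowercasing and trimming only), whereas the official evaluation script applies further normalization such as stripping punctuation and articles; the function names are hypothetical.

```python
from itertools import combinations

def normalize(answer):
    """Simplified normalization; the official VQA script does considerably more."""
    return answer.strip().lower()

def vqa_accuracy(predicted, human_answers):
    """VQA-style soft accuracy: full credit once at least 3 annotators agree with the answer.

    Scores min(matches / 3, 1) on each leave-one-out subset of annotators and averages them.
    """
    if len(human_answers) < 2:
        raise ValueError("need at least two human answers")
    pred = normalize(predicted)
    answers = [normalize(a) for a in human_answers]
    scores = []
    for subset in combinations(answers, len(answers) - 1):  # drop one annotator at a time
        matches = sum(1 for a in subset if a == pred)
        scores.append(min(matches / 3.0, 1.0))
    return sum(scores) / len(scores)

if __name__ == "__main__":
    answers = ["red", "red"] + ["maroon"] * 8  # 10 annotators, partial disagreement
    print(round(vqa_accuracy("red", answers), 3), vqa_accuracy("maroon", answers))  # prints: 0.6 1.0
```

Partial credit matters here: a minority answer like “red” still earns 0.6 rather than zero, which keeps the metric from over-penalizing questions that are genuinely ambiguous.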

These factors often involve trade-offs. A larger, more accurate model may have higher latency and cost. The ongoing GPT Scaling News focuses on developing architectures that improve accuracy without a proportional increase in computational demand.
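To make the cost side of this trade-off concrete: OpenAI has described image input for its GPT-4o-class models as a token count that depends on resolution and on a `detail` setting. The sketch below follows that published tile scheme as an assumption (85 base tokens, plus 170 tokens per 512-pixel tile in high-detail mode after the image is resized); the constants differ between models and may change, and it also assumes small images are never upscaled, so check the current pricing documentation before relying on these numbers. The function name is hypothetical.

```python
import math

BASE_TOKENS = 85       # assumed fixed cost per image (the whole cost in "low" detail mode)
TOKENS_PER_TILE = 170  # assumed cost of each 512 x 512 tile in "high" detail mode

def estimate_image_tokens(width, height, detail="high"):
    """Estimate prompt tokens for one image under the assumed tile-based scheme."""
    if detail == "low":
        return BASE_TOKENS
    # Step 1: scale down (never up) so the image fits within a 2048 x 2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: scale down (never up) so the shortest side is at most 768 px.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count the 512 px tiles needed to cover the resized image.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles

if __name__ == "__main__":
    print(estimate_image_tokens(1024, 1024))                # prints: 765
    print(estimate_image_tokens(2048, 4096))                # prints: 1105
    print(estimate_image_tokens(1024, 1024, detail="low"))  # prints: 85
```

Under these assumptions, a 1024x1024 screenshot costs nine times as many tokens in high-detail mode as in low-detail mode, which is exactly the kind of accuracy-versus-cost decision described above.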

Optimization and Deployment Strategies

To make these powerful models practical for widespread use, especially on devices with limited resources, several optimization techniques are crucial. The GPT Optimization News is rich with developments in this area:

- Quantization and Distillation: These are model compression techniques. GPT Quantization News reports on methods that reduce the precision of the model’s weights (e.g., from 32-bit floating-point values to 8-bit integers), significantly shrinking its size and speeding up inference, often with only a small loss in accuracy. GPT Distillation News covers the process of training a smaller “student” model to mimic the behavior of a larger “teacher” model, creating a more efficient version for deployment.

- Fine-Tuning: Instead of training a massive model from scratch, developers can take a pre-trained base model and further train it on a smaller, domain-specific dataset, a process tracked closely in GPT Fine-Tuning News. This is essential for creating specialized tools, such as a model skilled at identifying manufacturing defects or classifying skin conditions.

- Edge Deployment: The ultimate goal for many real-time applications is running the model directly on a device (e.g., a smartphone or IoT camera). This is the focus of GPT Edge News, which explores how model compression and specialized hardware such as new AI chips (a staple of GPT Hardware News) are making this increasingly feasible.
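The two compression ideas in the first bullet can each be sketched in plain Python. The example below assumes symmetric, per-tensor int8 quantization (one of several common schemes) and the standard temperature-softened distillation loss, in which the student is trained to match the teacher’s softened output distribution. Function names are hypothetical, and production toolkits operate on tensors rather than Python lists.

```python
import math

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Stored as int8 in practice; Python ints are used here for clarity.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights; the error per weight is at most scale / 2."""
    return [v * scale for v in q]

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2 as is conventional."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

if __name__ == "__main__":
    weights = [0.5, -1.27, 0.03, 1.0]
    q, scale = quantize_int8(weights)
    print("int8 values:", q, "scale:", scale)
    print("reconstructed:", dequantize(q, scale))
    print("distillation loss:", distillation_loss([0.2, 1.5, -0.3], [0.1, 2.0, -1.0]))
```

Quantization trades a bounded rounding error for a roughly 4x smaller weight footprint versus 32-bit floats, while the distillation loss is zero only when the student reproduces the teacher’s softened predictions exactly.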

Common Pitfalls and Ethical Considerations

Despite their power, vision models are not infallible. One of the most significant challenges is “visual hallucination,” where the model confidently misinterprets an image or invents details that are not there. Furthermore, the GPT Bias & Fairness News frequently discusses how biases present in the training data can lead to skewed or unfair interpretations, particularly concerning demographic attributes. The GPT Privacy News raises critical questions about how user-submitted images and videos are stored and used. Addressing these issues through improved training data, robust safety filters, and transparent policies is a central theme in the ongoing GPT Ethics News and GPT Safety News discourse.

Section 4: The Future of GPT Vision and the Broader Ecosystem

The current state of GPT Vision is merely a glimpse of what’s to come. The trajectory of development points towards more integrated, autonomous, and contextually aware AI systems that will blur the lines between the digital and physical worlds. The latest GPT Future News is less about incremental improvements and more about fundamental shifts in capability.

What’s Next? From Real-Time Interaction to Autonomous Agents

The next wave of innovation, hinted at in speculative GPT-5 News, will likely focus on a few key areas. First is the mastery of video. Instead of analyzing static frames, future models will understand the temporal context of video streams, tracking objects, interpreting actions, and predicting outcomes. This has profound implications for robotics, autonomous vehicles, and security. Second, we will see the rise of true AI agents, a central theme of GPT Agents News: systems that can not only see the world but also act within it. Imagine an agent that can watch a cooking tutorial video and then operate a robotic kitchen to replicate the recipe. This requires a deep fusion of vision, reasoning, and action planning. The overarching trend, as covered in GPT Trends News, is the move towards a more holistic, ambient form of AI that is constantly aware of its surroundings and ready to assist proactively.

The Competitive Landscape and Open Source Movement

OpenAI is a major player, but the field is far from a monopoly. The GPT Competitors News is buzzing with activity from companies like Google (with its Gemini models), Anthropic, and Meta, all of whom are pushing the boundaries of multimodal AI. This competition is a powerful catalyst for innovation. Simultaneously, the GPT Open Source News highlights a vibrant community building and sharing powerful vision models. These open-source alternatives provide greater transparency and customization, allowing researchers and smaller companies to build on the cutting edge without being locked into a single proprietary ecosystem. This dynamic interplay between corporate labs and the open-source community, as detailed in GPT Ecosystem News, is accelerating progress across the entire field.

Conclusion: A New Vision for Artificial Intelligence

The latest GPT Vision News confirms that we are at a pivotal moment in the history of artificial intelligence. The integration of sight has elevated LLMs from sophisticated text processors to versatile reasoning engines that can engage with the world in a far more human-like way. We’ve moved beyond simple classification to nuanced understanding, real-time interaction, and industry-specific problem-solving. While significant technical and ethical challenges remain—from mitigating bias to ensuring privacy and optimizing performance—the trajectory is clear. The future of AI is multimodal, and GPT Vision is at the vanguard of this revolution. As these technologies continue to mature, they will unlock new efficiencies, spark unprecedented creativity, and fundamentally reshape our relationship with the digital world, creating a future where AI not only understands our words but also sees our world.