The Secret Sauce of AI: A Deep Dive into the Evolving World of GPT Datasets

The Bedrock of Intelligence: Understanding the Evolving Landscape of GPT Datasets

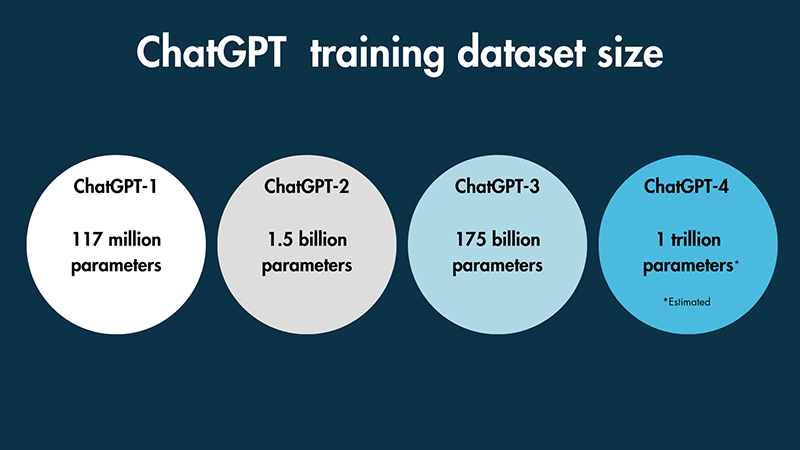

In the rapidly advancing world of artificial intelligence, models like GPT-4 have captured the public imagination with their astonishing ability to generate human-like text, write code, and even analyze images. This constant stream of GPT-4 News often focuses on model size and architectural breakthroughs. However, beneath these powerful algorithms lies the true engine of their intelligence: the data they are trained on. The latest GPT Datasets News reveals a critical shift in the AI community, moving from a purely model-centric view to a data-centric one. The quality, diversity, and strategic curation of datasets have become the primary drivers of innovation, safety, and real-world utility.

This article delves into the comprehensive world of datasets powering today’s most advanced generative AI. We will explore the journey from the colossal, web-scale corpora used for pre-training to the meticulously crafted, domain-specific datasets used for fine-tuning and alignment. We will also examine the revolutionary trend of using AI to generate synthetic data, creating a powerful feedback loop for model improvement. Understanding this data-driven ecosystem is no longer just for researchers; it’s essential for developers, businesses, and anyone looking to harness the true potential of GPT technology. This is the frontier of GPT Custom Models News, where data transforms a generalist tool into a specialized, high-performance asset.

From Trillions of Tokens to Multimodal Understanding: The Pre-training Foundation

The initial “pre-training” phase is what imbues a Large Language Model (LLM) with its broad understanding of language, reasoning, and world knowledge. This process involves feeding the model an unimaginably vast amount of data, allowing it to learn patterns, grammar, facts, and semantic relationships. The evolution of these foundational datasets is a key topic in GPT Training Techniques News.

The Scale and Composition of Foundational Datasets

Early models were trained on curated text collections like Wikipedia and Google Books. However, to achieve the capabilities seen in modern systems, the scale had to increase exponentially. Today’s pre-training datasets are often measured in trillions of tokens and are sourced from a wide swath of the internet. Datasets like Common Crawl, which contains petabytes of web crawl data, form a significant portion. Other notable examples include “The Pile,” a large, diverse, open-source dataset, and Google’s C4 (Colossal Cleaned Common Crawl). These datasets are not just raw dumps of text; they undergo extensive cleaning, filtering, and deduplication to remove low-quality content, boilerplate text, and personally identifiable information (PII), although this process is imperfect. This stage is crucial for the model’s core competency and is a major focus of OpenAI GPT News and research from its competitors.

The Leap to Multimodality: Integrating Vision and Text

One of the most exciting developments in GPT Multimodal News is the expansion of models beyond text. GPT-4’s ability to analyze images stems from its training on massive datasets that pair images with descriptive text. Datasets like LAION-5B, containing over 5 billion image-text pairs scraped from the web, have been instrumental in this progress. By learning the connections between visual information and textual descriptions, models develop a form of “vision.” This allows for groundbreaking applications, from describing a complex scene in a photograph to interpreting charts and diagrams. This trend in GPT Vision News is pushing the boundaries of what AI assistants and creative tools can achieve, enabling seamless integration of different data types.

The Inherent Challenges: Bias, Copyright, and Ethics

The “train on the internet” approach is not without significant challenges. Web-scale datasets inevitably mirror the biases present in human society, which can lead to models perpetuating harmful stereotypes. This is a central theme in GPT Bias & Fairness News. Furthermore, the use of copyrighted material in training data has sparked intense legal debates, raising questions about fair use and intellectual property. The latest GPT Regulation News reflects a growing global effort to address these ethical and legal gray areas, pushing for greater transparency and accountability in data sourcing and model training. These issues of GPT Ethics News and GPT Privacy News are paramount as the technology becomes more integrated into our daily lives.

The Art of Specialization: Fine-Tuning with Custom and Synthetic Datasets

While pre-training creates a powerful generalist, the true business value of GPT models is often unlocked through specialization. Fine-tuning is the process of taking a pre-trained model and further training it on a smaller, high-quality, domain-specific dataset. This adapts the model to a particular task, style, or knowledge base, making it a core topic in GPT Fine-Tuning News.

Crafting High-Quality Custom Datasets for Niche Applications

Imagine a healthcare provider wanting an AI assistant that can summarize patient-doctor conversations into clinical notes. A general-purpose model like GPT-4 might perform adequately, but it would lack the specific terminology and formatting required. By fine-tuning the model on a custom dataset of thousands of anonymized conversation transcripts and their corresponding clinical notes, the provider can create a highly accurate and reliable tool. This is a prime example of GPT in Healthcare News.

The process involves several key steps:

- Data Collection: Gathering relevant data, such as internal documents, customer support logs, or legal contracts.

- Data Annotation: Labeling or structuring the data into a format the model can learn from, often in a prompt-response or instruction-following format.

- Data Cleaning: Ensuring the data is consistent, accurate, and free of sensitive information.

This data-centric approach is transforming industries. In finance, models are fine-tuned on market reports (GPT in Finance News), and in law, they are trained on case law and legal documents (GPT in Legal Tech News), creating powerful, specialized GPT Agents News.

The Synthetic Data Revolution: Using AI to Train AI

What if you don’t have a large, high-quality dataset? A groundbreaking trend, and a major piece of GPT-4 News, is the use of powerful models like GPT-4 to generate synthetic training data. This technique is a game-changer for overcoming data scarcity and reducing manual annotation costs. For instance, to build a chatbot for a new software product, a developer can provide GPT-4 with the product’s documentation and ask it to generate thousands of diverse question-and-answer pairs that a user might ask. This synthetic dataset can then be used to fine-tune a smaller, more efficient model for deployment. This method not only accelerates development but also allows for greater control over the diversity and quality of the training examples, directly impacting the final model’s performance and safety.

Beyond Training: Datasets for Evaluation and Human Alignment

Creating a powerful model is only half the battle. Ensuring it is reliable, safe, and aligned with human intentions requires a different class of datasets focused on evaluation and alignment. This area of GPT Research News is critical for building trust and deploying models responsibly.

Benchmarking Performance with Complex Evaluations

To objectively measure and compare the capabilities of different models, the AI community relies on standardized benchmarks. These are not simple accuracy tests; they are complex evaluation datasets designed to probe a model’s reasoning, knowledge, and potential flaws. Key benchmarks in GPT Benchmark News include:

- MMLU (Massive Multitask Language Understanding): Tests knowledge across 57 subjects, from elementary mathematics to US history and law.

- HumanEval: A benchmark specifically for evaluating code generation capabilities, a key area of GPT Code Models News.

- HELM (Holistic Evaluation of Language Models): A comprehensive framework that evaluates models across a wide range of scenarios and metrics, including accuracy, fairness, and efficiency.

These benchmarks are crucial for tracking progress in the field, identifying weaknesses in current architectures, and providing transparency for users comparing different GPT Competitors News and platforms.

Aligning with Human Values: RLHF and DPO Datasets

A core challenge in AI development is ensuring that models behave in ways that are helpful, harmless, and honest. Techniques like Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO) are used to “align” models with human values. This process relies on unique datasets built from human interaction. Initially, human labelers write high-quality responses to various prompts. Then, the model generates multiple responses to a new set of prompts, and human labelers rank them from best to worst. This preference data—a dataset of human judgments—is used to train a “reward model” that guides the LLM’s behavior, teaching it to generate responses that humans are more likely to prefer. This alignment process is fundamental to the user experience of products like ChatGPT and is a cornerstone of GPT Safety News.

Navigating the Data-Centric Future: Best Practices and Recommendations

As the focus shifts towards data, developers and organizations must adopt new strategies for building and deploying AI systems. The future of GPT Applications News will be defined by those who master the art and science of data curation.

Best Practices for Dataset Management

- Prioritize Quality Over Quantity: For fine-tuning, a few thousand high-quality, diverse examples will almost always outperform a million noisy, low-quality ones. Focus on clean, well-structured, and relevant data.

- Embrace Iterative Refinement: Treat your dataset as a living asset, not a one-time creation. Continuously analyze your model’s failures, collect or generate new data to address those weaknesses, and retrain. This iterative loop is key to robust GPT Deployment News.

- Champion Ethical Sourcing: From the outset, consider the provenance of your data. Ensure you have the rights to use it, anonymize sensitive information, and proactively audit it for biases that could harm users or create legal risks.

- Invest in Data Tooling: As datasets become more central, so do the tools to manage them. Platforms for data annotation, versioning, and quality analysis are becoming essential components of the modern AI stack, as highlighted in GPT Tools News.

Future Trends in GPT Datasets

The landscape of GPT datasets continues to evolve at a breakneck pace. Key GPT Trends News to watch include the rise of datasets for hyper-personalization, enabling models to adapt to individual users, and the integration of real-time, streaming data for continuously learning systems. We will also see more complex datasets incorporating video, audio, and other sensory inputs. As models move to smaller devices, a new focus on GPT Edge News will drive the creation of highly efficient and compressed datasets for on-device fine-tuning and inference, a key area of GPT Efficiency News and GPT Quantization News.

Conclusion: Data as the New Frontier of AI Innovation

The narrative surrounding generative AI is maturing. While breakthroughs in model architecture and scale will continue to make headlines, the most significant and sustainable advancements will come from a deeper understanding and more strategic application of data. We have moved from an era of simply amassing web-scale data to a more sophisticated age of curating, synthesizing, and aligning datasets to build specialized, safe, and truly intelligent systems. The insights from GPT Datasets News make it clear: data is no longer just the fuel for AI; it is the blueprint for its future. For developers, researchers, and businesses, mastering the data-centric approach is the definitive path to unlocking the next wave of AI-driven innovation and creating real-world value.