The Next Leap in AI Reliability: How Advanced GPT Models Are Conquering Hallucinations

The Dawn of High-Fidelity AI: Moving Beyond Probabilistic Guesswork

The rapid evolution of generative AI has been nothing short of revolutionary, with large language models (LLMs) like those in the GPT series transforming industries from content creation to software development. However, a persistent shadow has loomed over their widespread adoption in high-stakes environments: the problem of “hallucination.” These confident, yet factually incorrect, outputs have been the primary barrier preventing AI from being fully trusted in critical domains like medicine, finance, and law. The latest GPT Models News, however, signals a monumental shift. Emerging research and development in next-generation architectures are directly targeting this fundamental flaw, introducing sophisticated reasoning mechanisms that dramatically enhance factual accuracy. This evolution marks a pivotal transition from models that are merely articulate to models that are genuinely reliable, paving the way for a new era of AI applications where precision and trustworthiness are paramount. This article explores the groundbreaking techniques reducing hallucination rates to unprecedented lows and analyzes their profound implications across various sectors.

Section 1: Understanding and Quantifying the Hallucination Problem

At its core, an AI hallucination is a response generated by a model that is nonsensical, factually incorrect, or disconnected from the provided source context. This phenomenon arises from the very nature of how LLMs are built. They are probabilistic models, trained on vast swaths of internet data to predict the next most likely word in a sequence. While this makes them incredibly fluent, it doesn’t endow them with a true understanding of facts or logic. The model might associate concepts that appear together frequently in its training data, leading it to invent plausible-sounding but false information. The latest GPT-4 News and ongoing research have highlighted that this is not just a bug, but a fundamental challenge of the underlying architecture.

The High Cost of Inaccuracy

In creative applications, a hallucination might be a harmless, even amusing, quirk. But in professional settings, the consequences can be severe. Consider the following scenarios:

- Healthcare: A medical chatbot incorrectly advises a user on drug interactions, leading to a dangerous health outcome. This is a major topic in GPT in Healthcare News.

- Legal Tech: An AI assistant misinterprets a legal precedent while summarizing case law for an attorney, potentially jeopardizing a legal strategy. This concern drives much of the discussion in GPT in Legal Tech News.

- Finance: A model generates a fabricated financial report on a company, leading an investor to make a poor decision. This risk is a central theme in GPT in Finance News.

These risks have fueled significant research into GPT Safety News and GPT Bias & Fairness News, as models can also hallucinate biased or unfair content. To combat this, the AI community has developed sophisticated evaluation metrics and benchmarks. Datasets like HealthBench, TruthfulQA, and others are designed specifically to measure a model’s propensity to hallucinate. By testing models against curated sets of questions with known answers, researchers can quantify their factual accuracy and track progress between generations, providing the hard data needed to validate claims of improved reliability.

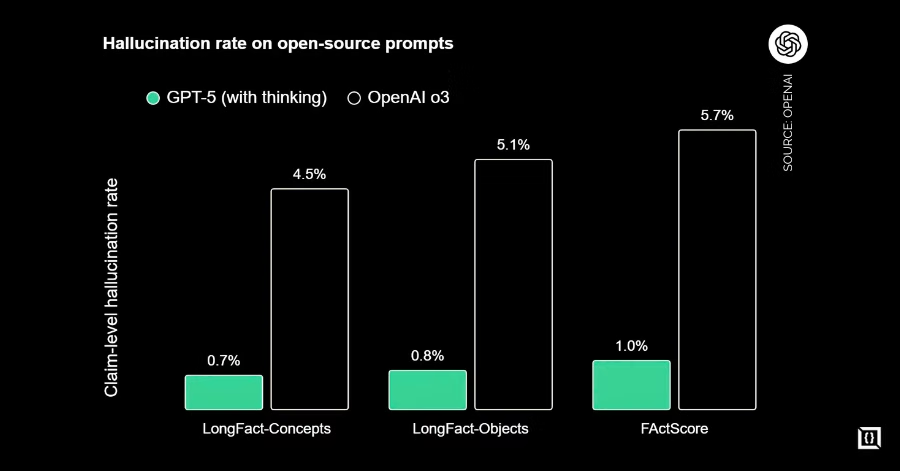

The Role of Benchmarks in Building Trust

The latest GPT Benchmark News reveals a clear trend: newer, more advanced models are demonstrating a marked improvement in these evaluations. While earlier models like GPT-3.5 might have struggled, newer iterations show a significant reduction in error rates. This progress isn’t accidental; it’s the result of targeted innovations in GPT Training Techniques News and GPT Architecture News, specifically designed to instill a more robust “world model” and reasoning capability within the AI. These benchmarks are crucial for building trust and are becoming a standard part of the GPT Ecosystem News, allowing developers and enterprises to make informed decisions about which models are suitable for their specific, risk-sensitive applications.

Section 2: “Thinking Before Speaking”: The Rise of Deliberative AI Architectures

The most significant breakthrough in reducing hallucinations is the shift from a purely reflexive response system to a more deliberative one. Instead of generating an answer in a single, forward pass, advanced models are being engineered with internal “thinking” or reasoning steps. This approach, often referred to as process supervision or chain-of-thought (CoT) reasoning, fundamentally changes how the model arrives at a conclusion. The latest OpenAI GPT News and research papers from competitors point towards this as the future of reliable AI.

How Deliberative Reasoning Works

Imagine asking an AI a complex medical question. A traditional model might immediately generate an answer based on patterns it recognizes. A model with deliberative reasoning would instead follow a structured internal monologue:

- Deconstruct the Query: The model first breaks down the question into its core components. “What are the contraindications for drug X in a patient with condition Y?” becomes: (1) Define drug X. (2) Define condition Y. (3) Search for known interactions and contraindications between X and Y.

- Formulate a Plan & Gather Information: The model internally queries its own knowledge base to retrieve relevant facts for each sub-question. It might identify the class of drug X and the physiological impact of condition Y.

- Synthesize and Verify: The model then synthesizes the retrieved information, cross-referencing facts to ensure consistency. It might check if drug X is known to exacerbate the symptoms of condition Y. This step is crucial for catching potential errors before they make it to the final output.

- Generate the Final Answer: Only after this internal verification process does the model compose the final, reasoned answer, often explaining its logic.

This “thinking” process significantly reduces the likelihood of a hallucination because it forces the model to justify its answer with a logical chain of steps, rather than a probabilistic guess. This is a hot topic in GPT Research News, with various techniques like self-consistency (where the model generates multiple reasoning paths and chooses the most common answer) further improving accuracy. This also has implications for GPT Inference News, as these methods can increase computational cost and latency, a trade-off for higher accuracy.

Architectural and Training Innovations

Achieving this level of reasoning isn’t just about clever prompting. It involves fundamental changes discussed in GPT Architecture News and GPT Training Techniques News. Models are being trained not just to predict the next word, but to predict and reward correct reasoning paths. This involves creating massive, high-quality GPT Datasets News that include step-by-step solutions to problems. Furthermore, techniques like Reinforcement Learning from Human Feedback (RLHF) are being refined to specifically penalize hallucinations and reward factual accuracy, making the models safer and more aligned with user intent. These advancements are critical for the development of more capable GPT Code Models News and GPT Agents News, which rely on logical consistency to perform complex tasks.

Section 3: Real-World Implications and the Expansion of AI’s Role

The dramatic reduction in hallucination rates is not merely an academic achievement; it is the key that unlocks a vast range of previously inaccessible, high-value applications. As models become more reliable, their role will evolve from creative assistants to trusted partners in critical decision-making processes. This trend is already beginning to dominate GPT Applications News and discussions about the GPT Future News.

Transforming High-Stakes Industries

- GPT in Healthcare News: In medicine, ultra-reliable AI could function as a diagnostic co-pilot for doctors. A physician could describe a patient’s symptoms and preliminary lab results, and the AI could generate a differential diagnosis, citing specific medical literature for each possibility with an extremely high degree of accuracy. This could also power patient-facing GPT Chatbots News that provide safe, verified health information, reducing the burden on clinics.

- GPT in Legal Tech News: Law firms can deploy AI for discovery and contract analysis with newfound confidence. An AI agent could scan thousands of documents, accurately identifying key clauses, potential risks, and relevant precedents without inventing or misrepresenting information, drastically accelerating a previously manual and error-prone process.

- GPT in Finance News: For financial analysts, these models can perform real-time analysis of market data, earnings reports, and geopolitical news to generate investment summaries. With hallucination rates nearing zero, the insights from these GPT Assistants News become actionable intelligence rather than speculative suggestions.

The Rise of Autonomous and Multimodal Agents

This newfound reliability is the bedrock for the next generation of AI: autonomous agents. As covered in GPT Agents News, these systems can perform multi-step tasks in digital or physical environments. A reliable agent could manage complex logistics, execute trading strategies, or even conduct scientific research by planning experiments, analyzing data, and writing reports. Furthermore, as these reasoning capabilities are integrated with other modalities, we see exciting developments in GPT Multimodal News and GPT Vision News. An AI could analyze a medical image (like an X-ray), cross-reference it with a patient’s electronic health record, and write a preliminary report for a radiologist, all with a verifiable chain of reasoning. This convergence of vision, language, and logic represents a quantum leap in AI capability.

Section 4: Best Practices, Recommendations, and Future Outlook

As organizations look to harness the power of these next-generation models, a strategic approach is essential. Simply swapping out an old API for a new one is not enough. Success requires a deep understanding of both the technology’s capabilities and its remaining limitations.

Recommendations for Implementation

- Always Maintain a Human-in-the-Loop for Critical Tasks: Despite drastically lower hallucination rates, no model is perfect. For applications in healthcare, law, or finance, AI should be used to augment, not replace, human expertise. The AI can serve as a powerful tool for drafting, research, and analysis, but the final decision must rest with a qualified professional. This is a core principle in GPT Ethics News.

- Leverage Structured Outputs and Verification: When using GPT APIs News, prompt the model to not only provide an answer but also to cite its sources or explain its reasoning step-by-step. This output can then be programmatically checked or more easily reviewed by a human expert.

- Invest in Fine-Tuning and Custom Models: For domain-specific applications, using a base model is often insufficient. The latest GPT Fine-Tuning News shows that training a model on your proprietary, high-quality data can further reduce hallucinations and align its behavior with your specific needs. This is a key part of building effective GPT Custom Models News.

The Road Ahead: Efficiency and Accessibility

A significant challenge discussed in GPT Latency & Throughput News is that the deliberative “thinking” processes can make models slower and more expensive to run. The future of GPT Optimization News will focus on techniques like GPT Distillation, GPT Quantization, and GPT Compression to create smaller, more efficient models that retain these advanced reasoning capabilities. This will be crucial for deploying powerful AI on edge devices (GPT Edge News) and in applications where real-time performance is critical. As the technology matures, we can expect to see a greater focus on GPT Regulation News and industry standards to ensure these powerful tools are deployed safely and responsibly.

Conclusion: Entering the Age of AI Reliability

The journey of large language models is reaching a critical inflection point. The concerted effort to combat and minimize hallucinations is transforming them from unpredictable savants into reliable, logical reasoning engines. The introduction of deliberative, “thinking” architectures represents a fundamental paradigm shift, pushing error rates in high-stakes domains to unprecedented lows. This breakthrough is not just an incremental improvement; it is the catalyst that will unlock the full potential of AI in the most critical sectors of our economy and society. While vigilance, ethical oversight, and a human-centric approach remain essential, the latest GPT Trends News clearly indicates that we are moving into an era where we can begin to trust AI with tasks that demand the highest standards of accuracy and reliability, heralding a future rich with intelligent collaboration.