Beyond 4-Bit: The New Frontier of Extreme GPT Quantization Explained

The Unseen Revolution: Making Giant AI Models Radically Smaller and Faster

In the world of artificial intelligence, the dominant narrative has been one of relentless growth. From GPT-3.5 to GPT-4 and the highly anticipated GPT-5, Large Language Models (LLMs) have been expanding in size and capability at a breathtaking pace. This scaling, however, comes with a steep price: enormous computational and memory requirements that confine these powerful tools to massive data centers and limit their accessibility. Headlines about GPT models often focus on bigger parameter counts, but a parallel, equally important revolution focused on efficiency is underway. Recent breakthroughs in model compression, specifically in a technique called quantization, are now pushing the boundaries to extreme, sub-2-bit levels. This isn’t just an incremental improvement; it’s a paradigm shift that promises to democratize AI, enabling powerful GPT models to run on everything from a standard laptop to a smartphone. This development in quantization is arguably one of the most significant trends shaping the future of AI deployment and accessibility.

Section 1: The Foundations of Extreme Model Quantization

To understand the significance of this leap, we must first grasp the core concept. Quantization is the process of reducing the numerical precision of a model’s weights and activations, which are traditionally stored as 32-bit floating-point numbers (FP32) or, in many modern LLMs, as 16-bit formats such as FP16 and BF16. By converting them to lower-precision formats, we can dramatically shrink the model’s footprint and speed up inference.

What is Quantization? A Quick Refresher

Imagine a model’s weight as a highly precise measurement, like 3.14159265. For many tasks, a less precise value, like 3.14, is sufficient. Quantization applies this logic at a massive scale. The most common forms have been:

- INT8 (8-bit Integer): Reduces model size by roughly 4x compared to FP32. It has been a standard for years in optimizing GPT inference for better latency and throughput.

- INT4 (4-bit Integer): A more aggressive technique, reducing model size by 8x compared to FP32. 4-bit quantization gained massive popularity with techniques like QLoRA (which stores the frozen base model in a 4-bit NormalFloat format, NF4), making fine-tuning of large models on consumer hardware a reality and marking a major milestone for GPT fine-tuning.
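To make the INT8 and INT4 schemes above concrete, here is a minimal Python sketch of symmetric “absmax” quantization, one common way to map floats onto a small integer grid. It assumes a single scale shared by the whole tensor; production toolkits typically use finer-grained (per-channel or per-group) scales, and the function names here are illustrative rather than taken from any particular library.

```python
def quantize_symmetric(weights, bits):
    """Quantize floats to signed integers with one shared (per-tensor) absmax scale."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Divide by the scale, round to the nearest integer, and clip to the valid range
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate float weights."""
    return [v * scale for v in q]


def size_reduction_vs_fp32(bits):
    """How many times smaller the weights get relative to 32-bit floats."""
    return 32 / bits


weights = [0.12, -0.5, 0.33, 0.9, -0.07]
for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"INT{bits}: codes={q}, max error={max_err:.4f}, "
          f"{size_reduction_vs_fp32(bits):.0f}x smaller than FP32")
```

Each step down in bit width shrinks the integer grid (127 levels per side for INT8, only 7 for INT4), so the worst-case rounding error, half of the scale, grows accordingly.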

The benefits are clear: a smaller model requires less VRAM, loads faster, and can perform calculations more quickly, especially on hardware with specialized support for low-precision arithmetic. Efficiency has become a central theme in GPT development, and it is critical for deploying models on a wider range of systems.

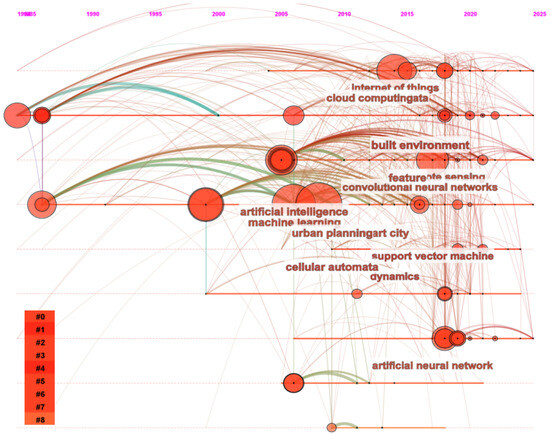

Pushing the Limits: The Rise of Sub-4-Bit Techniques

For a long time, 4-bit was considered the practical limit for quantization without causing a catastrophic drop in model performance. However, recent research has shattered this perception. Researchers are now successfully implementing quantization schemes that go far below this threshold, entering the realm of what can be called “extreme quantization.” We are now seeing viable models using:

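- Ternary (about 1.58 bits): Weights are restricted to three values, typically -1, 0, and 1. Identifying one of three values takes log2(3) ≈ 1.58 bits of information, although simple implementations often store each weight in 2 bits.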

- Binary (1-bit): The most extreme form, where weights are just -1 or 1.

- Fractional Bits (e.g., 1.58-bit): This might sound impossible, but it refers to an *average* bit rate per parameter. The 1.58-bit figure is the information content of a single ternary weight, log2(3) ≈ 1.58 bits, and clever packing can approach it in storage: five ternary weights have 3^5 = 243 combinations, which fit in one 8-bit byte, for 1.6 bits per weight. Other schemes reach fractional rates by grouping weights and using a shared codebook. For example, a group of four weights might be represented by a single 3-bit value, which can encode 2^3 = 8 unique states for that group. The average bit rate per weight is then 3 bits / 4 weights = 0.75 bits.
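The sketch below, assuming Python with only the standard library, illustrates both routes to fractional bit rates: snapping weights to ternary or binary values, and packing five ternary codes into a single byte. Using the mean absolute weight as the scale loosely follows the “absmean” idea described for BitNet b1.58, but this is a simplified illustration with hypothetical helper names, not that project’s code.

```python
import math


def ternary_quantize(weights, eps=1e-8):
    """Map weights to {-1, 0, +1} using the mean absolute value as the scale."""
    scale = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return q, scale


def binary_quantize(weights):
    """Map weights to {-1, +1} by sign, with the same mean-absolute scale."""
    scale = sum(abs(w) for w in weights) / len(weights)
    return [1 if w >= 0 else -1 for w in weights], scale


def pack_five_trits(trits):
    """Pack five ternary values into one byte: 3**5 = 243 states fit in 256."""
    value = 0
    for t in reversed(trits):
        value = value * 3 + (t + 1)     # shift {-1, 0, 1} to base-3 digits {0, 1, 2}
    return value


def unpack_five_trits(byte):
    """Inverse of pack_five_trits: read base-3 digits back out, lowest first."""
    trits = []
    for _ in range(5):
        trits.append(byte % 3 - 1)
        byte //= 3
    return trits


weights = [0.4, -0.05, -0.9, 0.2, 0.0]
q, scale = ternary_quantize(weights)
packed = pack_five_trits(q)
print("ternary codes:", q, "packed byte:", packed)
print("information per ternary weight:", round(math.log2(3), 3), "bits")
print("bits per weight with 5-per-byte packing:", 8 / 5)
print("bits per weight, one 3-bit code per 4 weights:", 3 / 4)
```

The scale is kept alongside the codes, so a dequantized weight is simply code × scale.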

Section 2: A Technical Deep Dive into Sub-2-Bit Quantization

Achieving such extreme compression without destroying the model’s intricate knowledge requires sophisticated techniques that go far beyond simple rounding. The challenge is to preserve the rich information encoded in the high-precision weights while representing them with an incredibly limited set of values.

The Mechanics of Extreme Compression

The latest methods often combine several strategies to maintain model fidelity. One of the most promising approaches is a form of vector quantization applied to groups of weights. Here’s a simplified breakdown:

- Weight Grouping: Instead of quantizing each weight individually, the model’s parameters are divided into small groups (e.g., 64 or 128 weights per group).

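- Codebook Learning: A small “codebook” of representative high-precision vectors is learned, typically shared across many groups (for example, all the groups in a layer) so that its storage cost is spread out. This codebook contains the values that best represent the recurring patterns within those groups.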

- Index Mapping: Each group of original weights is then replaced by a simple index pointing to the closest vector in its learned codebook.

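- Calculating Bit Rate: If a codebook has 16 entries, you only need 4 bits (2^4 = 16) to store the index for an entire group of, say, 32 weights. That works out to 4/32 = 0.125 bits per weight for the indices! The true average must also count the storage for the codebook itself, which stays small when one codebook is shared by many groups. Such an aggressive setting would lose a lot of detail, so practical schemes tune group size and codebook size to land on a target average, such as 1 or 2 bits per weight across the model.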
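The four steps above can be sketched in a few dozen lines of Python. This is a toy under stated assumptions: it learns one codebook shared by every group in the tensor using plain k-means (a simple stand-in for the more elaborate optimization used by published methods), uses groups of 4 rather than 32 so the reconstruction stays reasonable on random data, and assumes codebook entries are stored as 16-bit floats when counting overhead. All function names are hypothetical.

```python
import math
import random


def split_into_groups(weights, group_size):
    """Weight grouping: cut a flat weight list into equal-length vectors."""
    assert len(weights) % group_size == 0, "pad the weights so groups divide evenly"
    return [tuple(weights[i:i + group_size]) for i in range(0, len(weights), group_size)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(group, codebook):
    """Index mapping: position of the codebook vector closest to this group."""
    return min(range(len(codebook)), key=lambda k: sq_dist(group, codebook[k]))


def learn_codebook(groups, num_entries, iters=20, seed=0):
    """Codebook learning with plain k-means, initialized from randomly chosen groups."""
    codebook = random.Random(seed).sample(groups, num_entries)
    for _ in range(iters):
        buckets = [[] for _ in range(num_entries)]
        for g in groups:
            buckets[nearest(g, codebook)].append(g)
        for k, members in enumerate(buckets):
            if members:  # move each entry to the mean of the groups assigned to it
                codebook[k] = tuple(sum(col) / len(members) for col in zip(*members))
    return codebook


def bits_per_weight(num_weights, group_size, num_entries, codebook_value_bits=16):
    """Average storage cost: one index per group plus the shared codebook itself."""
    index_bits = math.ceil(math.log2(num_entries))
    num_groups = math.ceil(num_weights / group_size)
    codebook_bits = num_entries * group_size * codebook_value_bits
    return (num_groups * index_bits + codebook_bits) / num_weights


rng = random.Random(42)
weights = [rng.gauss(0.0, 0.02) for _ in range(1024)]
groups = split_into_groups(weights, group_size=4)
codebook = learn_codebook(groups, num_entries=16)
indices = [nearest(g, codebook) for g in groups]          # what gets stored
restored = [w for i in indices for w in codebook[i]]       # what inference sees
mse = sum((a - b) ** 2 for a, b in zip(weights, restored)) / len(weights)
print("reconstruction MSE:", mse)
print("bits/weight, this toy tensor:", bits_per_weight(len(weights), 4, 16))
print("bits/weight, 4096x4096 layer, groups of 32:", bits_per_weight(4096 * 4096, 32, 16))
```

On this deliberately tiny tensor the codebook costs as many bits as all the indices combined (2 bits per weight in total), which shows why sharing one codebook across a large layer matters; for a 4096 × 4096 layer with groups of 32, the same formula lands just above the 0.125-bit index cost.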

This approach is powerful because it preserves relationships between weights within a group, which is far more effective than quantizing them in isolation. Furthermore, it often involves Quantization-Aware Training (QAT) or a post-quantization fine-tuning step that lets the model adapt to its new, highly constrained representation.
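One common way to implement the quantization-aware step is the straight-through estimator: the forward pass uses quantized weights, while gradients update a hidden full-precision copy as if the rounding were not there. The source does not say which training recipe these methods use, so the following is only a toy sketch on a four-weight linear model with a fixed, assumed scale of 0.5 and hypothetical function names.

```python
def quantize_ternary(weights, scale):
    """Forward-pass quantizer: snap each weight to -scale, 0, or +scale."""
    return [scale * max(-1, min(1, round(w / scale))) for w in weights]


def qat_train(xs, ys, init_weights, scale=0.5, lr=0.1, steps=100):
    """Toy quantization-aware training of a linear model y = w . x.

    The forward pass and the loss use the quantized weights, but the gradient
    is applied to a full-precision "latent" copy (straight-through estimator).
    """
    latent = list(init_weights)
    n = len(xs)
    for _ in range(steps):
        wq = quantize_ternary(latent, scale)
        grad = [0.0] * len(latent)
        for x, y in zip(xs, ys):
            err = sum(w * xi for w, xi in zip(wq, x)) - y     # prediction error
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / n                   # d(MSE)/d(wq_j)
        # Straight-through: treat d(wq)/d(latent) as 1 and update the latent weights
        latent = [w - lr * g for w, g in zip(latent, grad)]
    return latent, quantize_ternary(latent, scale)


# Tiny synthetic task whose ideal weights happen to be exactly ternary * 0.5
target = [0.5, -0.5, 0.0, 0.5]
xs = []
for j in range(4):
    for sign in (1.0, -1.0):
        x = [0.0] * 4
        x[j] = sign
        xs.append(x)
ys = [sum(t * xi for t, xi in zip(target, x)) for x in xs]

latent, quantized = qat_train(xs, ys, init_weights=[0.1] * 4)
print("latent weights:", [round(w, 3) for w in latent])
print("deployed (quantized) weights:", quantized)
```

The latent weights drift until they cross a rounding threshold, at which point the quantized weights match the target and the gradient vanishes; only the quantized copy would be shipped.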

Maintaining Performance While Shedding Weight

The primary concern with any compression is performance degradation. How do these extreme methods avoid it? The key lies in intelligently managing the “quantization error”—the information lost during the process.

- Outlier Handling: A small number of weights often have disproportionately large magnitudes and are crucial for model performance. These “outliers” are sometimes kept in a higher-precision format (e.g., 8-bit or 16-bit) while the vast majority of other weights are aggressively quantized. This mixed-precision approach is a cornerstone of modern model compression.

- Quantization-Aware Fine-Tuning: After the initial quantization, the model undergoes a short period of fine-tuning. This allows it to adjust its parameters to compensate for the information lost. The process is crucial for recovering performance and is a hot topic among teams building custom GPT models.

- Data-Driven Quantization: The quantization scales and codebooks are not arbitrary; they are carefully calibrated based on the statistical distribution of the model’s weights and activations, often using a small, representative dataset.
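The outlier-handling idea can be illustrated with a small Python sketch: keep the largest-magnitude weights (here, an assumed top 1%) in full precision and compute the INT4 scale from the remaining weights only. The split rule and function names are illustrative assumptions; real systems choose outliers in different ways, for example per channel or from activation statistics.

```python
def absmax_roundtrip(values, bits, scale):
    """Quantize with a given scale and immediately dequantize."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def quantize_plain(weights, bits=4):
    """Baseline: one absmax scale for every weight, outliers included."""
    qmax = 2 ** (bits - 1) - 1
    scale = (max(abs(w) for w in weights) / qmax) or 1.0
    return absmax_roundtrip(weights, bits, scale)


def quantize_with_outliers(weights, bits=4, outlier_fraction=0.01):
    """Keep the largest-magnitude weights in full precision; quantize the rest."""
    n_outliers = max(1, int(len(weights) * outlier_fraction))
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    outliers = set(by_magnitude[:n_outliers])
    inliers = [w for i, w in enumerate(weights) if i not in outliers]
    qmax = 2 ** (bits - 1) - 1
    # The scale is set by the inliers only, so one huge value cannot wash them out
    scale = (max(abs(w) for w in inliers) / qmax) or 1.0
    quantized = iter(absmax_roundtrip(inliers, bits, scale))
    restored = [w if i in outliers else next(quantized) for i, w in enumerate(weights)]
    return restored, outliers


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


weights = [0.01 * (i % 7 - 3) for i in range(99)] + [5.0]   # 99 small weights, 1 outlier
plain = quantize_plain(weights)
mixed, outlier_idx = quantize_with_outliers(weights)
print("plain INT4 MSE:        ", mse(weights, plain))
print("INT4 + FP outliers MSE:", mse(weights, mixed))
print("outliers kept at full precision:", sorted(outlier_idx))
```

With plain absmax quantization, the single outlier sets a scale so coarse that every small weight rounds to zero; excluding it from the scale lets the small weights keep their distinctions, at the cost of storing one value (and its position) separately.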

The results are staggering. Recent benchmark reports describe models quantized to under 2 bits retaining over 99% of the performance of their full-precision counterparts, as measured by standard benchmarks like MMLU and by perplexity, a feat once considered impossible.

Section 3: The Ripple Effect: Implications Across the AI Ecosystem

This breakthrough is not just an academic curiosity; it has profound, real-world implications that will reshape the GPT ecosystem for years to come. The ability to run massive models with minimal resources unlocks a new frontier of applications and democratizes access to cutting-edge AI.

Democratizing AI: From Data Centers to Your Pocket

The most immediate impact is on accessibility. A 70-billion-parameter model needs about 140 GB just to hold its weights in FP16 (2 bytes per parameter), which means spreading it across multiple high-end GPUs. With 1.58-bit quantization, the same weights can be compressed to under 15 GB, bringing the model within reach of a single high-end consumer GPU. This is transformative for:

- Developers and Researchers: The ability to run and fine-tune state-of-the-art models on local machines drastically lowers the barrier to entry, fueling innovation in the open-source community.

- Edge Computing: This is the holy grail of edge AI. Powerful, responsive AI can now be deployed directly on devices like smartphones, laptops, cars, and IoT devices. This enables real-time applications without relying on a cloud connection, addressing both latency and privacy concerns. Imagine complex GPT-based assistants running entirely on your phone.
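The memory figures quoted above for a 70-billion-parameter model follow from simple arithmetic: parameters × bits per weight ÷ 8 gives bytes. This short Python sketch assumes decimal gigabytes (1 GB = 10^9 bytes) and counts the weights only; the helper name is illustrative.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Storage for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


num_params = 70e9
for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4), ("1.58-bit", 1.58)]:
    print(f"{label:>8}: {weight_memory_gb(num_params, bits):6.1f} GB")
```

Under these assumptions the 1.58-bit weights need roughly 13.8 GB. The key-value cache, activations, and any layers kept at higher precision come on top of that, so real-world memory use will be somewhat higher.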

Transforming Industries and Applications

This efficiency unlocks novel use cases across various sectors:

- Healthcare: Medical imaging analysis or diagnostic chatbots can run on local hospital hardware, ensuring patient data never leaves the premises.

- Finance: Sophisticated fraud detection algorithms can operate in real time on local banking terminals or ATMs without network lag.

- Gaming: In-game characters (NPCs) can be powered by complex LLMs running locally, allowing for truly dynamic and unscripted interactions that aren’t dependent on server-side processing.

- Content Creation: Powerful creative tools for writing, coding, and art generation can be integrated directly into desktop applications, offering a seamless and private user experience.

A New Paradigm for Hardware and Inference Engines

This trend will also steer future hardware design. Instead of focusing solely on massive FP16/FP32 compute power, chip designers will increasingly prioritize hardware acceleration for low-bit integer and mixed-precision operations. Similarly, inference engines such as NVIDIA’s TensorRT-LLM and open-source projects will need to be re-architected to fully exploit these ultra-low-precision data types, making them a key consideration for deployment.

Section 4: Navigating the Trade-offs: Best Practices and Considerations

While extreme quantization is revolutionary, it is not a silver bullet. Implementing it effectively requires a clear understanding of its trade-offs and potential pitfalls.

The Inevitable Compromise: Performance vs. Efficiency

Even with the best techniques, there is almost always a small, measurable drop in performance. For a general-purpose chatbot, a 1% reduction in benchmark scores might be imperceptible. However, for highly specialized tasks, this could be critical.

- Factual Recall & Reasoning: Extreme quantization can sometimes slightly degrade a model’s ability to recall specific facts or perform complex multi-step reasoning.

- Code & Math Generation: Tasks that demand exact outputs, such as generating code or solving math problems, may be more sensitive to quantization errors.

The key is to align the level of quantization with the application’s tolerance for error. This also raises ethical and safety questions: deploying a slightly degraded model in a high-stakes field like legal tech or healthcare requires careful validation.

Best Practices and Tips for Implementation

For teams looking to leverage these techniques, here are some actionable recommendations:

- Benchmark Rigorously: Always evaluate the quantized model on a comprehensive suite of tasks relevant to your specific domain, not just on generic benchmarks.

- Start Conservatively: Begin with a less aggressive method like 4-bit quantization. If performance is acceptable and you need more efficiency, then experiment with sub-2-bit techniques.

- Use Quantization-Aware Fine-Tuning (QAF): For maximum performance recovery, fine-tuning the model *after* quantization is almost always the best approach.

- Profile Your Model: Identify which layers or modules are most sensitive to quantization. Applying a mixed-precision strategy can often yield the best balance of efficiency and accuracy.
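The “Profile Your Model” step can start with something as simple as measuring how much each layer’s weights are distorted at each candidate bit width and giving every layer the smallest width that stays within an error budget. This Python sketch assumes symmetric absmax quantization, a relative-error budget of 10%, and two hypothetical layers; weight-space error is only a cheap proxy, so the resulting plan would still need the task-level benchmarking recommended above.

```python
def relative_error(weights, bits):
    """Relative L2 error after a symmetric absmax round trip at the given bit width."""
    qmax = 2 ** (bits - 1) - 1
    scale = (max(abs(w) for w in weights) / qmax) or 1.0
    restored = [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]
    num = sum((a - b) ** 2 for a, b in zip(weights, restored))
    den = sum(w * w for w in weights) or 1.0
    return (num / den) ** 0.5


def choose_bits(layers, candidate_bits=(2, 4, 8), max_rel_error=0.1):
    """Give each layer the smallest bit width whose error stays under the budget."""
    plan = {}
    for name, weights in layers.items():
        for bits in sorted(candidate_bits):
            if relative_error(weights, bits) <= max_rel_error:
                plan[name] = bits
                break
        else:
            plan[name] = 16      # nothing fit the budget: keep this layer in 16-bit
    return plan


layers = {
    "layer_a": [0.5, -0.5, 0.5, -0.5, 0.5, -0.5],             # well-behaved (hypothetical)
    "layer_b": [0.01 * i for i in range(1, 100)] + [10.0],    # one large outlier (hypothetical)
}
for name, weights in layers.items():
    print(name, {b: round(relative_error(weights, b), 3) for b in (2, 4, 8)})
print("bit plan:", choose_bits(layers))
```

The well-behaved layer survives at 2 bits, while the layer with a single large outlier needs 8 bits to stay within budget, which is exactly the situation where a mixed-precision plan pays off.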

Conclusion: The Future is Small, Fast, and Everywhere

The latest developments in extreme GPT quantization represent a monumental shift in the AI landscape. We are moving beyond the era where cutting-edge AI was the exclusive domain of hyperscalers and entering a new phase of accessibility and ubiquity. This breakthrough in efficiency signals that the future of AI is not just about building bigger models, but about building smarter, more efficient ones that can be deployed anywhere.

As these techniques mature and become integrated into popular GPT tools and platforms, they will supercharge the next wave of innovation. From on-device personal assistants that respect our privacy to powerful AI-driven features in our everyday applications, the 1.58-bit revolution is fundamentally changing who can build with, and who can benefit from, artificial intelligence. It is a core part of where GPT models are headed, and it promises a world where the power of large language models is truly in everyone’s hands.