The Vision API Revolution: A Technical Deep Dive into GPT’s New Multimodal Capabilities

A New Paradigm in AI: The Dawn of Accessible Multimodal Interaction

The evolution of artificial intelligence has been marked by distinct, transformative leaps. We moved from rule-based systems to machine learning, and then to the dominance of Large Language Models (LLMs) that mastered text. Today, we stand at the precipice of another monumental shift: the transition from purely linguistic intelligence to comprehensive, multimodal understanding. The latest GPT model releases mark a pivotal moment in this journey, as advanced vision capabilities, once the domain of specialized models and complex pipelines, are becoming directly accessible through mainstream APIs. This isn’t merely an incremental update; it’s the unlocking of a new sensory modality for AI, allowing it to see, interpret, and reason about the visual world in concert with text and audio.

This article provides a comprehensive technical analysis of these developments, focusing on the integration of vision into the latest generation of GPT models. We will explore how vision-enabled API requests are structured, dissect best practices for implementation, and examine the implications for industries ranging from healthcare to content creation. As developers gain the tools to build applications that can see and understand images and video streams, we are moving beyond simple chatbots and into an era of truly interactive, context-aware AI agents, setting the stage for the next wave of innovation in the AI ecosystem.

Unpacking the Omni-Modal Toolbox: What’s New for Developers

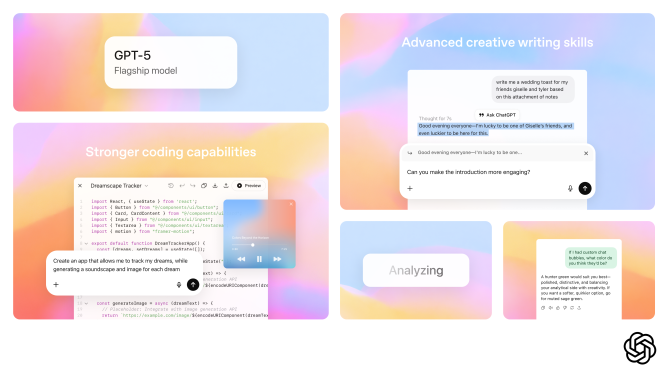

OpenAI’s latest releases center on a powerful “omni-modal” approach, in which a single model natively processes text, images, and audio. This unified architecture is a significant step forward, delivering substantial improvements in performance, cost, and developer experience.

GPT-4o: The Omni-Modal Powerhouse via API

The flagship announcement is the full availability of GPT-4o’s advanced capabilities through the API. Unlike previous approaches that often required chaining a text model with a separate vision model, GPT-4o handles multiple modalities within its core architecture. For developers, this translates into several key advantages. First, latency drops dramatically: by eliminating the overhead of passing data between separate models, visual queries return significantly faster, making real-time applications viable. Second, the unified model is more efficient, which has led to a substantial price reduction for multimodal tasks. This cost-effectiveness democratizes access to vision capabilities, enabling startups and individual developers to experiment with ideas that were previously cost-prohibitive. Together, these changes move the GPT-4 family from a powerful text generator toward a comprehensive multimodal reasoning engine.

A Holistic Ecosystem for Next-Generation Applications

Beyond the core vision features, the recent updates provide a suite of tools that create a more robust and integrated development environment. Enhanced voice models now offer higher-quality text-to-speech and speech-to-text at a lower price point, paving the way for more natural, human-like conversational agents. The integration of WebRTC (Web Real-Time Communication) is a game-changer for building these agents, allowing for low-latency, bidirectional streaming of audio and video directly between a client and the API. This is critical for applications like real-time translation, AI-powered customer support, and interactive tutoring. Furthermore, the release of official Go and Java SDKs broadens the ecosystem, making it easier for a wider range of developers to integrate these features. Finally, new options like preference fine-tuning give developers more control to align model behavior with specific brand voices or application requirements.

Technical Deep Dive: Implementing the GPT Vision API

Harnessing GPT’s vision capabilities requires understanding the nuances of the API and adopting new prompting strategies. Moving from text-only to multimodal inputs introduces a new set of considerations for application design and deployment.

API Architecture and Request Structure

Interacting with the vision-enabled API involves structuring your requests to handle both text and image data within a single call. The core concept is a “multi-part” message format. Instead of a simple string of text, the `content` of a message is now an array of objects, each with a specific type.

A typical request might include a text part and one or more image parts. Images can be provided in two ways:

- Image URL: You can pass a publicly accessible URL to the image. The model will fetch and process the image from that location. This is efficient for images already hosted online.

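- Base64 Encoding: For local images or those generated on the fly, you can encode the image data as a Base64 string and embed it directly in the API call as a data URL. This increases the request payload size (Base64 adds roughly a third to the raw byte count) but offers greater flexibility, since the image never has to be hosted at a publicly accessible URL.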
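Both options can be sketched with Python’s standard library alone. The helper names below (`bytes_to_data_url`, `image_to_data_url`, `user_message`) are hypothetical; what they produce is the multi-part message described above, with a Base64 image passed in the `url` field as a `data:<mime>;base64,<payload>` data URL.

```python
import base64
import mimetypes
from pathlib import Path


def bytes_to_data_url(data: bytes, mime: str) -> str:
    """Encode raw image bytes as a Base64 data URL."""
    return f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"


def image_to_data_url(path: str) -> str:
    """Read a local image file and encode it as a data URL, guessing its MIME type."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"Cannot infer an image MIME type for {path!r}")
    return bytes_to_data_url(Path(path).read_bytes(), mime)


def user_message(text: str, *image_urls: str) -> dict:
    """Build one user message: a text part followed by one image part per URL.

    Each URL may be a public http(s) URL or a data URL from image_to_data_url().
    """
    parts = [{"type": "text", "text": text}]
    parts += [{"type": "image_url", "image_url": {"url": u}} for u in image_urls]
    return {"role": "user", "content": parts}
```

For example, `user_message("What is in this photo?", image_to_data_url("photo.jpg"))` (with a hypothetical local `photo.jpg`) yields a message that can be placed directly in the `messages` array.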

A simplified JSON payload for a visual query looks like this:

```json
{
  "model": "gpt-4o",
  "messages": [
    {
      "role": "user",
      "content": [
        {
          "type": "text",
          "text": "Describe the architectural style of this building and suggest when it might have been built."
        },
        {
          "type": "image_url",
          "image_url": {
            "url": "https://example.com/images/building.jpg"
          }
        }
      ]
    }
  ]
}
```
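One way to send this payload is a plain HTTPS POST to the Chat Completions endpoint with a Bearer token. The sketch below uses only Python’s standard library so it stays self-contained; the official `openai` package wraps the same call as `client.chat.completions.create(...)`. Reading the key from an `OPENAI_API_KEY` environment variable and the 60-second timeout are assumptions for illustration.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

PAYLOAD = {
    "model": "gpt-4o",
    "messages": [
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Describe the architectural style of this building "
                    "and suggest when it might have been built.",
                },
                {
                    "type": "image_url",
                    "image_url": {"url": "https://example.com/images/building.jpg"},
                },
            ],
        }
    ],
}


def build_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated POST to the Chat Completions endpoint."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(payload: dict) -> str:
    """Send the request and return the assistant's text reply.

    Requires network access and an OPENAI_API_KEY environment variable.
    """
    request = build_request(payload, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping `build_request` separate from `send` makes the outgoing request easy to inspect or unit-test without network access.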

Best Practices for Effective Visual Prompting

Crafting effective prompts is even more critical in a multimodal context. The quality of the output depends heavily on the synergy between the image and the accompanying text.

- Be Specific: Avoid vague questions like “What is this?” Instead, guide the model’s focus. For a picture of a circuit board, ask, “Identify the microcontroller unit (MCU) on this PCB and list any visible capacitors.”

- Provide Context: Use the text portion of the prompt to provide context that isn’t visually apparent. For a graph, you might say, “This chart shows our Q3 sales figures. Please summarize the key trends and highlight any anomalies.”

- Guide the Output Format: Explicitly ask for the format you need. For an image of a whiteboard from a brainstorming session, you could request, “Transcribe the text from this whiteboard and organize it into a JSON object with ‘categories’ and ‘action_items’ as keys.” Structured output like this is especially useful for agents and automated pipelines that must parse the model’s response programmatically.
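Asking for JSON is only half the job; the reply should still be checked before anything downstream consumes it. The API also offers a JSON mode (`response_format={"type": "json_object"}`) that constrains the reply to valid JSON, but it does not enforce particular keys. A minimal validation sketch for the whiteboard example, where the function name and the defensive fence-stripping are assumptions rather than documented behavior:

```python
import json

REQUIRED_KEYS = ("categories", "action_items")
FENCE = "`" * 3  # a Markdown code fence marker


def parse_whiteboard_reply(reply: str) -> dict:
    """Parse the model's JSON reply and check that the requested keys are present."""
    text = reply.strip()
    # Models sometimes wrap JSON in a Markdown code fence; strip it defensively.
    if text.startswith(FENCE):
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object at the top level")
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"Reply is missing required keys: {missing}")
    return data
```

Raising on a missing key, rather than silently filling defaults, lets the caller retry the request or route the image to a person.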

Common Pitfalls and How to Avoid Them

As with any powerful tool, there are potential pitfalls, and knowing these limitations is central to using the API safely. Low-resolution or poorly lit images yield poor results. Ambiguous prompts lead to generic or incorrect answers. Furthermore, developers must be cautious about relying on the model for domain-specific, high-stakes tasks without a human-in-the-loop verification process, a critical aspect of ethical deployment.
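Low resolution is one pitfall that can be caught before a request is spent. The sketch below reads a PNG’s width and height directly from its header (the IHDR chunk, which the PNG specification requires to come first) and rejects images whose shorter side falls below a threshold. The 512-pixel minimum and the function names are hypothetical; choose a threshold by testing on your own images, and use an imaging library for formats other than PNG.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MIN_SHORT_SIDE = 512  # hypothetical threshold; tune it for your own use case


def png_dimensions(data: bytes) -> tuple[int, int]:
    """Return (width, height) from a PNG's IHDR chunk.

    Layout: 8-byte signature, 4-byte chunk length, b"IHDR",
    then width and height as big-endian unsigned 32-bit integers.
    """
    if len(data) < 24 or not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("Not a PNG file with a leading IHDR chunk")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def is_resolution_ok(data: bytes, min_short_side: int = MIN_SHORT_SIDE) -> bool:
    """True if the image's shorter side meets the minimum pixel count."""
    width, height = png_dimensions(data)
    return min(width, height) >= min_short_side
```

Only the first 24 bytes are needed, so the check is cheap even for large uploads.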

Real-World Applications and Transformative Industry Impact

The integration of vision into mainstream GPT APIs is not just a technical curiosity; it is a catalyst for widespread innovation across countless sectors. This wave of applications will redefine user experiences and automate complex visual tasks at an unprecedented scale.

Revolutionizing Accessibility and User Interfaces

One of the most immediate and impactful applications is in accessibility. Imagine an application for the visually impaired that uses a phone’s camera to describe the user’s surroundings in real time, read menus, or identify products on a shelf. For web development, these models can automatically generate highly descriptive alt text for images, making the internet a more inclusive space. The same capability extends to education, where visual aids can be instantly explained and contextualized for students.
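The alt-text use case can be sketched as two small steps: build a vision request for each image, then insert the model’s answer into an `<img>` element. Escaping matters here because model output is untrusted text. The prompt wording and its 125-character cap (a common alt-text rule of thumb, not an API requirement) are assumptions, as are the function names.

```python
import html

# Hypothetical prompt; the 125-character cap is a rule of thumb, not an API limit.
ALT_TEXT_PROMPT = (
    "Write concise alt text (at most 125 characters) for this image. "
    "Describe what a screen-reader user needs to know; do not start with 'Image of'."
)


def alt_text_request(image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions payload asking for alt text for one image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": ALT_TEXT_PROMPT},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def img_tag(src: str, alt_text: str) -> str:
    """Render an <img> element, escaping both attributes since model output is untrusted."""
    src_attr = html.escape(src, quote=True)
    alt_attr = html.escape(alt_text.strip(), quote=True)
    return f'<img src="{src_attr}" alt="{alt_attr}">'
```

Generated alt text is a good candidate for spot-checking by a person before publication, especially for images whose meaning depends on context the model cannot see.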

Driving Innovation in Specialized Verticals

- Healthcare: While not a replacement for expert diagnosis, the API can serve as a powerful assistant. There is potential for applications that analyze medical images such as X-rays or photos of skin lesions to highlight areas of interest for a radiologist or dermatologist to review, accelerating the diagnostic workflow.

- Finance and Insurance: The possibilities here are broad. For example, an insurance company can build a tool where users upload photos of a damaged vehicle and the AI provides an initial damage assessment and cost estimate, dramatically speeding up the claims process.

- Retail and E-commerce: A user can take a photo of an item they see in the real world, and an e-commerce app can instantly identify it or find visually similar products in its inventory, creating a seamless “search by sight” experience.

- Legal Tech: Vision capabilities can be used to scan, transcribe, and categorize evidence from photos or documents, assisting in the discovery process.
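The insurance scenario relies on a feature of the multi-part message format: a single message’s `content` array can hold several `image_url` parts, so the model can compare photos of the same vehicle taken from different angles. A minimal sketch, assuming a hypothetical function name and JSON output schema; its result should be treated as an initial estimate for a human adjuster, not a final decision.

```python
def damage_assessment_request(photo_urls: list[str], model: str = "gpt-4o") -> dict:
    """Build one request containing every claim photo so the model can cross-reference them."""
    if not photo_urls:
        raise ValueError("At least one photo is required")
    # Hypothetical output schema; adapt the keys to your claims system.
    prompt = (
        "These photos show the same damaged vehicle from different angles, "
        "numbered from 0 in the order given. List each visibly damaged part, "
        "rate its severity as minor, moderate, or severe, and note the photo "
        "index where it is clearest. Reply only with JSON of the form "
        '{"parts": [{"name": "...", "severity": "...", "photo_index": 0}]}.'
    )
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in photo_urls]
    return {"model": model, "messages": [{"role": "user", "content": content}]}
```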

Empowering Creativity and Content Creation

The impact on creative fields is immense. A marketing team can analyze a folder of user-generated images to understand how their product is being used in the wild. A video editor can use an AI assistant to automatically log footage, identifying objects, scenes, and even the sentiment of facial expressions. A game developer could let players import real-world objects into a game simply by taking a picture.

Strategic Considerations for Implementation

Integrating these new vision capabilities into products requires careful strategic planning, balancing performance with cost, and maintaining a strong ethical framework.

Performance vs. Cost: Choosing the Right Model

Efficiency also depends on choosing the right model, since the available models differ in performance and cost. While a state-of-the-art model like GPT-4o offers the strongest accuracy and reasoning capabilities, its cost per token (including the tokens each image consumes) might be prohibitive for high-volume, low-margin applications. Developers must analyze their specific use case. For a critical medical imaging analysis tool, maximum performance is non-negotiable. For an app that generates fun captions for social media photos, a smaller, faster, and cheaper model such as GPT-4o mini may be more appropriate. This is why benchmarking candidate models on representative data matters: it lets developers make informed decisions about optimization and resource allocation.
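Image token costs can be estimated before sending anything. The sketch below follows the tile-based formula OpenAI documented for GPT-4o at the time of writing: a flat 85 tokens in `"low"` detail; in `"high"` detail, the image is scaled to fit within 2048 × 2048, then scaled so its shortest side is 768 px, and each 512 px tile costs 170 tokens on top of the 85-token base. Other models, including GPT-4o mini, use different constants, and the formula may change, so treat this as an estimate and confirm against the current pricing documentation. Skipping upscaling for small images is an assumption of this sketch.

```python
import math

BASE_TOKENS = 85       # flat cost per image (the entire cost in "low" detail)
TOKENS_PER_TILE = 170  # cost per 512 x 512 tile in "high" detail
TILE = 512


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Estimate GPT-4o input tokens for one image of the given pixel size.

    "auto" lets the API pick the detail level; it is estimated here as
    "high", which gives an upper bound.
    """
    if detail == "low":
        return BASE_TOKENS
    # Step 1: scale down (never up) to fit within a 2048 x 2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: scale down (never up) so the shortest side is at most 768 px.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count the 512 px tiles needed to cover the resized image.
    tiles = math.ceil(w / TILE) * math.ceil(h / TILE)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles
```

For example, `image_tokens(1024, 1024)` resizes to 768 × 768, needs 4 tiles, and returns 765, which matches the worked example in OpenAI’s documentation. Multiplying such estimates by expected request volume is a quick way to compare models before committing to one.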

Ethical Guardrails and Responsible Deployment

With great power comes great responsibility. The ability of AI to see and interpret the world introduces significant ethical challenges that developers must proactively address. The potential for misuse in surveillance, facial recognition, and the generation of misinformation is real. This makes regulation and internal policy more important than ever. Developers must prioritize privacy by ensuring that user data, especially sensitive visual data, is handled securely and with consent. Implementing human-in-the-loop systems for sensitive applications is crucial to mitigate the risks of bias and unfair outcomes. Building transparent systems that clearly communicate when a user is interacting with an AI is a fundamental principle of responsible deployment.

Conclusion: A Clear Vision for the Future of AI

The recent expansion of GPT APIs to include robust, integrated, and affordable vision capabilities is a landmark event in the progression of artificial intelligence. We are officially moving beyond the era of text-only interaction and into a new, multimodal reality. For developers and businesses, this represents an unparalleled opportunity to build applications that are more intuitive, accessible, and intelligent than ever before. From assisting the visually impaired to streamlining complex industrial workflows, the potential applications are as vast as our own visual imagination.

This is more than just an update; it is the democratization of a fundamental sensory ability for AI. As current trends indicate, the fusion of language, sight, and sound into a single, cohesive model will be the driving force behind the next generation of AI-powered tools, assistants, and platforms, fundamentally reshaping how we interact with the digital world.