The Agentic Shift: Why the Future of AI Is Not Just Smarter Chatbots, But Autonomous GPT Agents

Beyond Conversation: The Dawn of the Autonomous AI Agent

For the past several years, the world has been captivated by rapid advances in large language models (LLMs). The release of models like GPT-3.5 and GPT-4 has democratized access to sophisticated conversational AI, making “chatbot” a household term. As the community eagerly awaits GPT-5, discussion often centers on measures of raw intelligence: larger parameter counts, better reasoning, and more human-like text generation. However, a more profound transformation is underway, a paradigm shift that redefines the very purpose of these powerful models. The future isn’t just about creating a more knowledgeable oracle; it’s about building autonomous AI agents: systems that can understand goals, devise plans, and execute tasks in the digital and physical world. This evolution from passive conversationalist to active participant marks the next major frontier in artificial intelligence, promising to automate complex workflows and fundamentally change how we interact with technology.

This article delves into this “agentic shift,” exploring the technical architecture that empowers GPT models to act, the real-world applications transforming industries, and the critical challenges and ethical considerations we must navigate. We will move beyond the hype of a “smarter” chatbot and examine the framework that allows AI to transition from simply talking to actively doing.

The Paradigm Shift: From Conversational AI to Autonomous Agents

Recent ChatGPT updates often add features that enhance its conversational prowess, but its core interaction remains a request-response loop. This model, while incredibly powerful, is fundamentally reactive: it requires continuous human guidance for every step of a process. This limitation marks the boundary between a sophisticated chatbot and a true AI agent.

The Limitations of a Chatbot

A state-of-the-art chatbot powered by GPT-4 can write code, draft a marketing plan, or explain a complex scientific theory, often with impressive accuracy. On its own, however, it cannot execute the code it writes, launch the campaign through an ad platform, or set up the experiment it designed. It is a brilliant consultant trapped behind a text interface. For any multi-step, real-world task, the user must act as the bridge, manually copying, pasting, and executing each component of the AI’s suggested plan. This dependency creates a bottleneck, limiting the model’s utility to advisory and content-generation roles. Recent GPT-4 updates have focused on multimodality, but the core interaction model has remained largely the same.

Defining the AI Agent

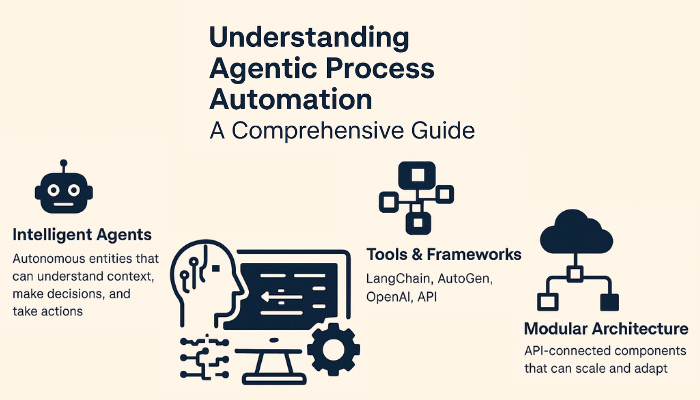

An AI agent, in contrast, is an autonomous system designed to achieve specific goals with minimal human intervention. It leverages an LLM not just for generation, but for reasoning and planning. The core components of an agent are:

- Perception: The ability to gather information from its environment. This isn’t just user text but can include reading files, browsing websites, or interpreting data from APIs. Vision-capable GPT models are extending this perception to images and video.

- Planning: The capacity to break down a high-level goal (e.g., “Plan a business trip to Tokyo”) into a sequence of actionable steps (e.g., 1. Search for flights. 2. Compare hotel prices. 3. Check calendar for availability. 4. Book flight and hotel. 5. Add to calendar).

- Action: The ability to execute these steps by interacting with external tools, APIs, and systems. This is where API features such as function calling, which lets the model return structured requests to invoke developer-defined functions, become a critical enabler.
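To make the planning component concrete, here is a minimal Python sketch of how an agent might track the Tokyo trip plan described above. It assumes the decomposition has already been produced (it is hard-coded here, where a real agent would ask the LLM to generate it), and the `Step` and `Plan` classes are hypothetical names for illustration, not part of any framework.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    description: str
    done: bool = False


@dataclass
class Plan:
    goal: str
    steps: list[Step] = field(default_factory=list)

    def next_step(self) -> Step | None:
        # Return the first step not yet completed, or None when the plan is finished.
        for step in self.steps:
            if not step.done:
                return step
        return None

    def is_complete(self) -> bool:
        return all(step.done for step in self.steps)


# Hypothetical: in a real agent, the LLM would produce this list from the goal.
trip = Plan(
    goal="Plan a business trip to Tokyo",
    steps=[Step(d) for d in [
        "Search for flights",
        "Compare hotel prices",
        "Check calendar for availability",
        "Book flight and hotel",
        "Add to calendar",
    ]],
)

while (step := trip.next_step()) is not None:
    # The Action component would call a tool here; this sketch only marks progress.
    print("Executing:", step.description)
    step.done = True

print(trip.is_complete())
```

Keeping the plan as explicit data, rather than only inside the model’s context, makes it easy to show progress to a user or resume after a failure.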

Think of it this way: a chatbot is a world-class navigator who can give you perfect turn-by-turn directions. An AI agent is the self-driving car that actually takes you to your destination. This shift from instruction to execution is the essence of the agentic revolution.

The Anatomy of a GPT-Powered Agent: Architecture and Core Components

Building a functional AI agent is more complex than simply putting a wrapper around an LLM. It requires an architecture that integrates the model’s reasoning capabilities with memory, tools, and a control loop. This architecture is a major focus of current research and of the wider GPT tooling ecosystem.

The “Brain”: The Foundational LLM

At the heart of every agent is a powerful foundational model, such as OpenAI’s GPT models. This LLM serves as the central reasoning engine: given a goal, it formulates a plan, decides which tool to use next, and processes the results of its actions. The quality of this “brain” is paramount. Advances in model architecture and training techniques aim directly at improving the multi-step reasoning and planning that complex agentic tasks require. The ongoing debate about whether bigger models are always better is particularly relevant here, because agentic tasks often require sustained reasoning over long contexts.

The “Senses” and “Memory”: Perception and State Management

An agent cannot act in a vacuum. It needs to perceive its environment and remember past actions.

- Perception: This is achieved through tools that allow the agent to access external information. A web search tool lets it read current events, a code interpreter lets it run calculations, and API connectors let it query databases. The rise of multimodal GPT models points to a future where agents can perceive through sight and sound, not just text.

- Memory: LLMs have a limited context window, which acts as short-term memory. For an agent to perform long-running tasks, it needs a long-term memory system. This is often implemented with vector databases (e.g., Pinecone, Chroma), which store embeddings of past interactions and documents so the agent can retrieve the most relevant context by similarity search when needed. Such memory layers are becoming a standard component of emerging agent platforms.
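The retrieval idea behind long-term memory can be sketched without any external service. The toy `MemoryStore` below is a hypothetical stand-in for a vector database: it uses word-count vectors in place of learned embeddings and ranks stored memories by cosine similarity to the query. Real systems use an embedding model and an approximate nearest-neighbor index, but the store-then-retrieve-by-similarity pattern is the same.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": a bag-of-words count vector. A real agent would call an
    # embedding model and store dense vectors in a vector database instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Minimal in-memory stand-in for a vector database (hypothetical API)."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the raw text alongside its vector so it can be returned later.
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Rank every stored memory by similarity to the query; return the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = MemoryStore()
memory.add("User prefers aisle seats on long flights")
memory.add("Quarterly report is due on the last Friday of March")
memory.add("Hotel budget for Tokyo is 200 USD per night")

print(memory.search("What is the hotel budget in Tokyo?"))
# prints ['Hotel budget for Tokyo is 200 USD per night']
```

The retrieved text would then be inserted into the model’s prompt, giving the agent access to facts that no longer fit in its context window.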

The “Hands”: Tool Use and Action Execution

This is what truly separates an agent from a chatbot. Tools are the agent’s “hands” for interacting with the world. ChatGPT plugins were an early step in this direction. Today, frameworks and projects such as LangChain and Auto-GPT provide a structured way for an agent to:

- Choose a Tool: Based on the current step in its plan, the LLM decides which tool is appropriate (e.g., `web_search`, `python_code_executor`, `send_email_api`).

- Generate Input: The LLM generates the necessary input for the chosen tool (e.g., the search query or the Python code).

- Execute and Observe: The framework executes the tool and returns the output (the search results, code output, or API response) to the LLM.
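The three steps above can be illustrated with a small tool registry. This sketch assumes the model emits its tool choice as a JSON object with `name` and `arguments` fields, a shape similar in spirit to function-calling APIs but not any specific vendor’s exact format; `web_search` and `send_email_api` are hypothetical stubs.

```python
import json
from typing import Callable

# Registry mapping tool names (as the LLM refers to them) to Python callables.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    # Decorator that registers a function under its own name.
    TOOLS[fn.__name__] = fn
    return fn


@tool
def web_search(query: str) -> str:
    # Stub: a real implementation would call a search API.
    return f"[stub results for '{query}']"


@tool
def send_email_api(to: str, subject: str) -> str:
    # Stub: a real implementation would call an email service.
    return f"[stub: email '{subject}' queued for {to}]"


def execute_tool_call(raw: str) -> str:
    """Parse a model-generated tool call, run it, and return the observation."""
    try:
        call = json.loads(raw)
        fn = TOOLS[call["name"]]            # 1. the tool the model chose
        return fn(**call["arguments"])      # 2-3. its generated input, executed
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Errors go back to the model as observations so it can correct itself.
        return f"Tool error: {err!r}"


# What the model might emit after choosing a tool and generating its input.
model_output = '{"name": "web_search", "arguments": {"query": "flights to Tokyo"}}'
print(execute_tool_call(model_output))
```

Returning errors as text, rather than raising, keeps the loop running and lets the model retry with a corrected call.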

This loop of “think, act, observe” continues until the agent determines that the initial goal has been achieved. Because every iteration requires at least one model call, inference speed and cost largely determine how usable an agent is in practice.
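Putting the pieces together, the “think, act, observe” loop might look like the following sketch. `scripted_model` is a hypothetical stand-in that follows a fixed decision rule instead of calling an LLM, and `get_flight_price` is a made-up tool backed by a hard-coded table. The point is the control flow, including a step cap (an assumption here, though a common safeguard) so the loop always terminates.

```python
from typing import Callable

Decision = dict[str, str]


def get_flight_price(city: str) -> str:
    # Hypothetical tool backed by a hard-coded table instead of a real API.
    prices = {"tokyo": "850 USD", "paris": "620 USD"}
    return prices.get(city.lower(), "unknown")


AGENT_TOOLS: dict[str, Callable[[str], str]] = {"get_flight_price": get_flight_price}


def scripted_model(history: list[str]) -> Decision:
    # Stand-in for an LLM call: it "thinks" by inspecting the history so far.
    observations = [h for h in history if h.startswith("Observation:")]
    if not observations:
        return {"tool": "get_flight_price", "input": "Tokyo"}
    price = observations[-1].split(": ", 1)[1]
    return {"final": f"The flight to Tokyo costs {price}."}


def run_agent(goal: str, model: Callable[[list[str]], Decision], max_steps: int = 5) -> str:
    history = [f"Goal: {goal}"]
    for _ in range(max_steps):  # hard cap so a confused model cannot loop forever
        decision = model(history)                           # think
        if "final" in decision:
            return decision["final"]
        tool = AGENT_TOOLS.get(decision["tool"])
        if tool is None:
            result = f"unknown tool {decision['tool']}"
        else:
            result = tool(decision["input"])                # act
        history.append(f"Action: {decision['tool']}({decision['input']})")
        history.append(f"Observation: {result}")            # observe
    return "Stopped: step limit reached without a final answer."


print(run_agent("Find the price of a flight to Tokyo", scripted_model))
```

Swapping `scripted_model` for a function that sends `history` to an LLM and parses its reply is what turns this skeleton into a working agent.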

Real-World Impact: GPT Agents Across Industries

The theoretical promise of GPT agents is beginning to translate into tangible applications across various sectors. This is where much of the most exciting work is happening, well beyond simple chatbots.

In Software Development and IT Operations

Imagine an agent integrated into a DevOps pipeline. It receives a bug alert from a monitoring system, accesses the relevant codebase, analyzes the error logs, writes a patch, runs it in a sandboxed test environment, and, upon success, submits a pull request for human review. This level of automation, powered by advanced code models, could dramatically accelerate development cycles, and it is a key focus for teams deploying GPT models in production.
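The workflow above can be sketched as a single function whose stages are passed in as callables. This is an illustrative assumption rather than any DevOps product’s API: `propose_patch`, `run_tests`, and `open_pull_request` are hypothetical hooks standing in for the LLM, a sandboxed CI run, and a code-hosting service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    service: str
    error_log: str


@dataclass
class Outcome:
    status: str
    detail: str


def handle_bug_alert(
    alert: Alert,
    propose_patch: Callable[[str], str],      # LLM step: error log -> candidate diff
    run_tests: Callable[[str], bool],         # sandboxed test run for the diff
    open_pull_request: Callable[[str], str],  # returns a PR reference; never merges
) -> Outcome:
    patch = propose_patch(alert.error_log)
    if not run_tests(patch):
        # A failing patch is escalated to a human rather than retried indefinitely.
        return Outcome("escalated", f"tests failed for {alert.service}; paging on-call")
    pr = open_pull_request(patch)
    # The agent stops at the pull request: merging remains a human decision.
    return Outcome("awaiting_review", pr)


# Usage with stub stages in place of the LLM, the CI sandbox, and the code host.
result = handle_bug_alert(
    Alert("checkout", "ZeroDivisionError in compute_discount"),
    propose_patch=lambda log: f"candidate fix for: {log}",
    run_tests=lambda diff: True,
    open_pull_request=lambda diff: "stub-pr-reference",
)
print(result)
```

The key design choice is that the agent’s authority ends at opening a pull request, so a human reviewer still approves every change that reaches production.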

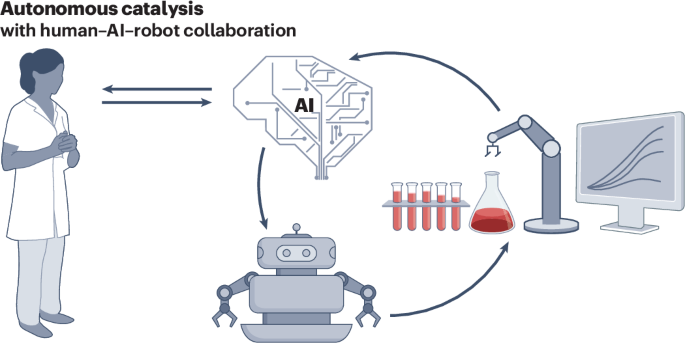

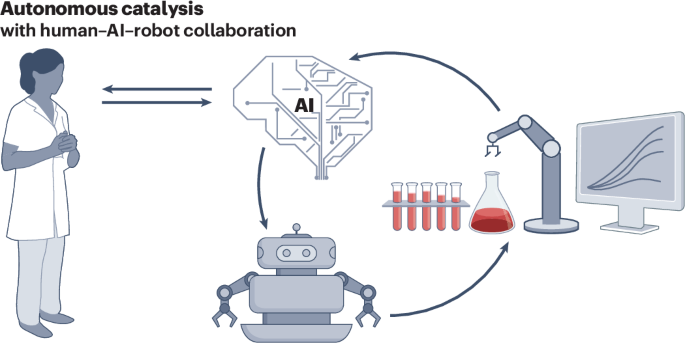

In Healthcare and Scientific Research

In life sciences, an agent could be tasked with accelerating drug discovery. It could autonomously scan the thousands of new research papers and clinical trial results published daily, cross-reference findings with internal genomic datasets, and identify promising molecular compounds for further investigation. Such an application would be a major breakthrough for GPT in healthcare, augmenting human researchers and potentially shortening research timelines significantly.

In Finance and Marketing

In finance, attention has turned to agents capable of complex market analysis. A financial agent could monitor real-time market data, news sentiment, and economic reports, executing trades based on a sophisticated, pre-approved strategy. Similarly, in marketing, agents could manage entire digital ad campaigns, from conducting audience research and generating ad copy to allocating budgets via platform APIs and optimizing spend based on performance metrics.

In Content Creation and Education

The creative fields are also being transformed. A content-creation agent could take a high-level prompt such as “create a short documentary about ancient Egypt” and proceed to research topics, write a script, generate a synthetic voiceover, source or create relevant visuals using multimodal models, and edit it all into a finished video. In education, agents could act as personalized tutors that create custom lesson plans, generate practice problems, and adapt to a student’s learning pace in real time.

Navigating the Agentic Future: Opportunities, Challenges, and Best Practices

The transition to an agentic AI paradigm is fraught with both immense opportunity and significant risk. Successfully navigating this future requires a clear-eyed view of the challenges and a commitment to responsible development practices, a topic central to AI ethics.

The Immense Opportunities

The primary benefit of AI agents is the automation of complex, multi-step digital tasks, freeing up human capital for more strategic, creative, and interpersonal work. This could lead to substantial productivity gains and entirely new services and business models. Ongoing efficiency improvements, through techniques such as quantization (storing model weights at lower numerical precision) and distillation (training a smaller model to mimic a larger one), will make these agents more accessible and cost-effective to deploy at scale.

Critical Challenges and Ethical Considerations

With great power comes great responsibility. The autonomy of AI agents introduces a new class of risks that must be proactively managed.

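- Security and Safety: An agent with access to APIs, file systems, and personal data is a prime target for malicious actors, for example through prompt injection, where instructions hidden in a web page or document the agent reads hijack its behavior. A compromised agent could exfiltrate data, execute fraudulent transactions, or cause system-wide damage. This is among the most pressing concerns in AI safety.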

- Reliability and Control: LLMs are prone to “hallucination.” When a chatbot hallucinates, it produces incorrect text. When an agent hallucinates, it could execute a flawed or destructive sequence of actions. Ensuring alignment and maintaining human oversight are critical challenges, and increasingly a subject of regulation.

- Bias and Fairness: Agents will inherit biases present in their training data. An agent used for resume screening or loan applications could perpetuate and amplify societal biases. Addressing this is a core focus of fairness research.

- Privacy: As agents become more integrated into our lives, they will handle vast amounts of sensitive information. Robust data protection and privacy-preserving techniques are non-negotiable.

Best Practices for Development and Deployment

To mitigate these risks, developers and organizations should adopt a set of best practices:

- Start with a Human-in-the-Loop: For any critical or irreversible action, the agent should require explicit human approval before execution.

- Implement Strict Sandboxing: Agents should operate in tightly controlled, isolated environments with the minimum permissions necessary to perform their tasks.

- Use Clear, Constrained Prompting: Define the agent’s goals, constraints, and available tools with extreme precision to limit ambiguity and unintended behavior.

- Maintain Comprehensive Logging: Keep detailed, auditable logs of an agent’s reasoning process, tool usage, and actions to facilitate debugging and incident response.

Conclusion: The Future is an Active Participant

The narrative of AI’s progress is moving beyond the simple pursuit of a higher score on a benchmark test. While the raw intelligence of future models like GPT-5 will undoubtedly be a crucial component, the real revolution lies in harnessing that intelligence to perform meaningful tasks in the world. The agentic shift represents the transition of AI from a passive tool for information retrieval and content generation to an active partner in execution and automation. This evolution promises to unlock unprecedented levels of productivity and innovation across every industry.

However, this powerful future demands a parallel evolution in our approach to safety, ethics, and control. Building trustworthy, reliable, and aligned AI agents is the central challenge of the next decade. As we follow what comes next for GPT models, the most important stories will not just be about how smart the models are, but about how effectively and safely we can empower them to act.