The Rise of Specialized GPT Assistants: Revolutionizing the Software Development Lifecycle

The Dawn of a New Era: From Generalist Chatbots to Specialized AI Co-pilots

The conversation around artificial intelligence has rapidly evolved from the novelty of general-purpose chatbots to a more profound, industry-specific dialogue. While models like ChatGPT demonstrated the immense power of large language models (LLMs), recent announcements of GPT-based assistants point to a pivotal shift towards specialization. We are entering an era where AI is no longer just a versatile tool but a collection of expert co-pilots, trained and integrated into specific professional workflows. The most significant and immediate impact of this trend is being felt within the software development lifecycle (SDLC). Companies are now launching sophisticated AI assistants designed not just to write code, but to support the entire ecosystem of development, from initial generation and quality assurance to complex DevOps and deployment pipelines. This evolution marks a critical turning point, promising to redefine productivity, efficiency, and collaboration for engineering teams worldwide.

This article explores this transformative trend, delving into the architecture, application, and implications of these new, specialized GPT-powered assistants. We will analyze how they differ from their generalist predecessors, break down their specific functions within the SDLC, and provide actionable insights for teams looking to harness this new wave of AI technology. Understanding this shift is no longer optional; it is essential for staying competitive in a rapidly changing technological landscape.

Section 1: The Great Specialization: Why Niche GPT Assistants Are Outpacing General Models

The initial excitement surrounding models like GPT-3.5 and GPT-4 was rooted in their incredible versatility. They could write poetry, draft emails, and generate code snippets with remarkable fluency. However, for professional, high-stakes environments like software engineering, this “jack-of-all-trades” approach has inherent limitations. Recent releases from OpenAI and its competitors highlight a clear move towards creating models and agents with deep, contextual understanding of a specific domain. This specialization is driven by several key factors.

Limitations of General-Purpose LLMs in Technical Workflows

Generalist models lack the specific context required for complex engineering tasks. For example, when asked to generate a CI/CD pipeline configuration, a general model might produce a syntactically correct but functionally naive script. It won’t know your organization’s specific security protocols, preferred cloud infrastructure, or internal coding standards unless that information is supplied to it. This “context gap” forces developers to spend significant time editing and adapting the AI’s output, diminishing the productivity gains. Furthermore, benchmark comparisons often show specialized models outperforming general ones on domain-specific tasks, with higher accuracy and relevance.
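One way to picture the context gap is to compare the prompt a generalist chatbot receives with the prompt a workflow-aware assistant could assemble. The sketch below is illustrative only: `OrgContext`, `build_prompt`, and the sample rules are hypothetical names and values invented for this example, not part of any real product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OrgContext:
    """Hypothetical container for organization-specific constraints."""
    cloud_provider: str
    security_rules: list[str] = field(default_factory=list)
    coding_standards: list[str] = field(default_factory=list)


def build_prompt(task: str, ctx: OrgContext | None = None) -> str:
    """Return the bare task, or the task preceded by organizational context."""
    if ctx is None:
        return task
    lines = [
        f"Target cloud: {ctx.cloud_provider}",
        "Security rules:",
        *[f"- {rule}" for rule in ctx.security_rules],
        "Coding standards:",
        *[f"- {std}" for std in ctx.coding_standards],
        "",
        f"Task: {task}",
    ]
    return "\n".join(lines)


task = "Generate a CI/CD pipeline configuration for a Node.js service."
# Sample values are assumptions chosen for illustration.
ctx = OrgContext(
    cloud_provider="AWS",
    security_rules=["No long-lived credentials in pipeline variables"],
    coding_standards=["Pin every container image to a digest"],
)

generic_prompt = build_prompt(task)          # what a generalist model sees
contextual_prompt = build_prompt(task, ctx)  # what a specialized assistant could send
print(contextual_prompt)
```

The model receiving `generic_prompt` has to guess at everything the second prompt states explicitly, which is where much of the manual rework comes from.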

The Power of Domain-Specific Data and Fine-Tuning

Specialized assistants are built on a foundation of curated, high-quality data specific to their domain. An AI assistant for DevOps, for instance, might be trained on millions of lines of configuration files (like YAML and Terraform), technical documentation for cloud services (AWS, Azure, GCP), and large repositories of incident reports and resolution logs. This focused training, often involving fine-tuning and techniques like Reinforcement Learning from Human Feedback (RLHF) with expert DevOps engineers, gives the model a nuanced understanding of best practices, common pitfalls, and complex interdependencies. It reflects a broader shift in training techniques, away from massive, generic datasets and towards smaller, higher-quality, domain-specific ones.
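As a rough illustration of what curated, domain-specific training data can look like, the sketch below turns question-and-answer pairs written by engineers into chat-style JSONL records. The `messages` layout is a common convention for fine-tuning chat models, but the exact schema depends on the provider, so treat the field names as an assumption. The helper name and the sample pairs are invented for this example.

```python
import json


def to_training_record(question: str, expert_answer: str) -> str:
    """Serialize one expert-reviewed example as a single JSON line."""
    record = {
        "messages": [
            {"role": "system", "content": "You are a DevOps assistant."},
            {"role": "user", "content": question},
            {"role": "assistant", "content": expert_answer},
        ]
    }
    return json.dumps(record)


# Hypothetical examples; a real dataset would come from reviewed incident logs.
examples = [
    (
        "Why is my pod stuck in CrashLoopBackOff?",
        "Inspect the previous container's logs with "
        "`kubectl logs <pod> --previous` and check the exit code.",
    ),
    (
        "How do I preview infrastructure changes before applying them?",
        "Run `terraform plan` and review the proposed diff.",
    ),
]

jsonl = "\n".join(to_training_record(q, a) for q, a in examples)
print(jsonl)
```

A few thousand records of this kind, each checked by a domain expert, illustrate the "smaller, higher-quality" data the paragraph above describes.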

Seamless Integration: Moving from Chat Interface to Workflow Native

Perhaps the most crucial differentiator is integration. A general chatbot operates in a separate window, requiring developers to copy and paste information back and forth. Specialized assistants, however, are deeply embedded within the tools developers already use. They exist as plugins in IDEs (like VS Code), extensions in CI/CD platforms (like Jenkins or GitLab), and bots within communication channels (like Slack or Microsoft Teams). This native integration allows the AI to access real-time context, such as the codebase, build logs, and error messages, and to take action directly within the workflow. This transforms the AI from a passive consultant into an active, participating GPT agent.

Section 2: A Technical Breakdown of AI Assistants Across the SDLC

To truly appreciate the impact of these specialized tools, it’s essential to break down their functionality across the key stages of the software development lifecycle. These assistants are not a single entity but a suite of interconnected agents, each an expert in its field.

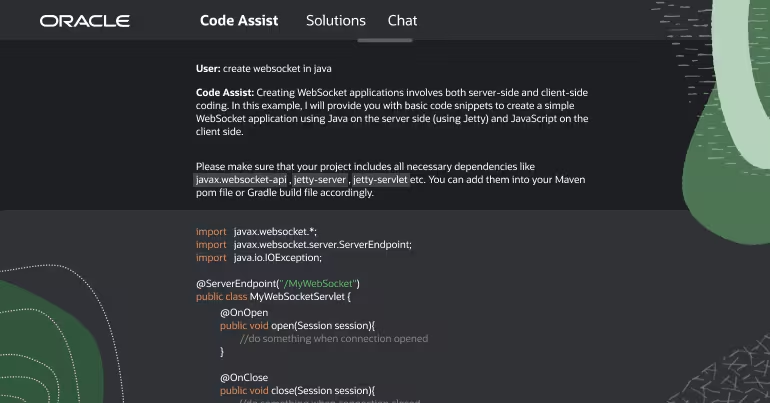

Code Generation and Development Assistance

Modern AI code assistants go far beyond the simple autocompletion seen in early tools. The latest code models point to assistants that can take the context of an entire repository into account.

- Full-Function Scaffolding: Instead of generating single lines, these assistants can scaffold entire functions, classes, or microservices based on a high-level natural language prompt, automatically including error handling, logging, and adherence to the project’s coding style.

- Automated Unit & Integration Testing: A developer can highlight a complex function, and the AI will generate a comprehensive suite of unit tests, covering edge cases and potential failure points they might have missed. This directly improves code quality and reduces the manual testing burden.

- Intelligent Refactoring: By analyzing a block of code, the AI can suggest performance optimizations or refactor it for better readability and maintainability, explaining its reasoning based on established software design principles.
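To make the test-generation point concrete, here is the kind of suite an assistant might propose for a small helper. Both the `chunk` function and the tests are hand-written for illustration, not actual model output. The point is the edge cases (empty input, remainders, invalid sizes) that are easy to overlook.

```python
from __future__ import annotations

import unittest


def chunk(items: list, size: int) -> list[list]:
    """Split items into consecutive lists of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


class ChunkTests(unittest.TestCase):
    def test_even_split(self):
        self.assertEqual(chunk([1, 2, 3, 4], 2), [[1, 2], [3, 4]])

    def test_remainder_goes_in_last_chunk(self):
        self.assertEqual(chunk([1, 2, 3], 2), [[1, 2], [3]])

    def test_empty_input(self):
        self.assertEqual(chunk([], 3), [])

    def test_size_larger_than_input(self):
        self.assertEqual(chunk([1], 5), [[1]])

    def test_non_positive_size_rejected(self):
        with self.assertRaises(ValueError):
            chunk([1, 2], 0)


# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ChunkTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Generated tests still need review: a suite that merely restates the implementation's current behavior will pass even when that behavior is wrong.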

Quality Assurance (QA) and Testing Automation

QA is a traditionally labor-intensive process that is being dramatically streamlined by AI.

- Test Case Generation from Requirements: A QA assistant can read a user story or a requirements document and automatically generate a detailed list of positive, negative, and exploratory test cases. Progress in vision-capable models suggests future versions could even analyze a UI mockup or wireframe to generate relevant tests.

- Log Analysis and Bug Triage: When a test fails, the assistant can parse thousands of lines of logs in seconds, surface the most likely root cause of the error, and even suggest a potential fix. It can then automatically create a bug ticket with all the relevant context and route it to the right team.

- Performance and Security Testing: Specialized QA agents can generate scripts for load testing and flag common security vulnerabilities (like SQL injection or cross-site scripting) in the code before it reaches production.
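The log-triage step can be sketched without any AI at all, which helps show what the assistant automates. The example below assumes a simple `timestamp LEVEL message` log format and groups error lines by a normalized signature. The format, the `triage` function, and the sample log are all assumptions made for illustration.

```python
import re
from collections import Counter

# Assumed log format: "<timestamp> <LEVEL> <message>"
ERROR_LINE = re.compile(r"^(?P<ts>\S+) (?P<level>ERROR|FATAL) (?P<msg>.*)$")


def triage(log_text: str) -> dict:
    """Summarize error lines: the earliest one, plus counts per signature."""
    first = None
    counts = Counter()
    for line in log_text.splitlines():
        match = ERROR_LINE.match(line)
        if not match:
            continue
        # Replace digit runs so similar messages group under one signature.
        signature = re.sub(r"\d+", "N", match["msg"])
        counts[signature] += 1
        if first is None:
            first = {"timestamp": match["ts"], "message": match["msg"]}
    return {"first_error": first, "signatures": counts.most_common()}


SAMPLE_LOG = """\
2024-01-01T10:00:00Z INFO starting test run
2024-01-01T10:00:05Z ERROR connection to db-1 timed out after 30s
2024-01-01T10:00:09Z ERROR connection to db-2 timed out after 45s
2024-01-01T10:00:10Z FATAL test suite aborted
"""

report = triage(SAMPLE_LOG)
print(report["first_error"])
print(report["signatures"])
```

Note that the earliest error is only a candidate for the root cause. A summary like this is a starting point for a ticket, which an engineer or a language model would then need to interpret.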

DevOps and CI/CD Pipeline Management

This is arguably where specialized assistants provide the most immediate and significant value, as DevOps tasks are often complex and error-prone.

- Declarative Pipeline Generation: A DevOps engineer can describe their desired deployment process in plain English (e.g., “Create a three-stage pipeline that builds my Node.js app, runs tests, and deploys to a Kubernetes cluster on AWS EKS if the main branch is updated”). The AI assistant then generates a complete YAML configuration file for the engineer to review before it is used in production.

- Real-time Failure Diagnosis: When a deployment fails, the assistant can immediately analyze the pipeline logs, cross-reference them with cloud monitoring data, and provide a diagnosis and recommended solution directly in a Slack channel. For example: “Deployment failed at the ‘apply-manifest’ step. Reason: The IAM role associated with the Kubernetes service account lacks the ‘iam:PassRole’ permission. To fix, add this permission to that IAM role.”

- Cost and Performance Optimization: By analyzing cloud usage patterns, the assistant can recommend infrastructure changes to reduce costs, such as switching to more efficient instance types or implementing auto-scaling policies.
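The three-stage pipeline from the plain-English request above can be represented as structured data before it is rendered into any platform's syntax. The sketch below uses a made-up, platform-neutral schema (`Stage`, `render_pipeline`, and the `only_branch` key are hypothetical and do not match Jenkins, GitLab, or any other real CI format). It shows the kind of intermediate structure and validation a generator might apply.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    commands: list[str]
    only_branch: str | None = None  # None means the stage runs on every branch


def render_pipeline(stages: list[Stage]) -> dict:
    """Turn stage definitions into a plain dictionary, checking basic rules."""
    if not stages:
        raise ValueError("a pipeline needs at least one stage")
    names = [stage.name for stage in stages]
    if len(set(names)) != len(names):
        raise ValueError("stage names must be unique")
    pipeline = {"stages": names, "jobs": {}}
    for stage in stages:
        job = {"stage": stage.name, "script": stage.commands}
        if stage.only_branch is not None:
            job["only_branch"] = stage.only_branch
        pipeline["jobs"][stage.name] = job
    return pipeline


# The "k8s/" manifest directory is an assumed path for illustration.
pipeline = render_pipeline([
    Stage("build", ["npm ci", "npm run build"]),
    Stage("test", ["npm test"]),
    Stage("deploy", ["kubectl apply -f k8s/"], only_branch="main"),
])
print(json.dumps(pipeline, indent=2))
```

Keeping an explicit intermediate structure makes the output easier to validate and review than free-form generated text, whichever CI platform it is finally translated to.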

Section 3: Strategic Implications and Best Practices for Adoption

The introduction of specialized GPT assistants is more than a technological upgrade; it’s a strategic shift that requires careful planning and a change in mindset. The potential benefits are enormous, but so are the risks if implemented poorly.

The Productivity Multiplier Effect

The primary implication is a massive boost in developer productivity and velocity. By automating tedious, repetitive, and complex tasks, these assistants free up senior engineers to focus on high-value activities like system architecture, complex problem-solving, and innovation. A junior developer, guided by an AI co-pilot, can become productive much faster, effectively democratizing expertise across the team. This also leads to faster time-to-market for new features and a more resilient, reliable infrastructure, as AI can monitor and troubleshoot issues 24/7.

Best Practices for Successful Implementation

Adopting these tools effectively requires a thoughtful approach:

- Start with a Pilot Program: Identify a single, well-defined pain point (e.g., CI/CD pipeline configuration) and introduce an AI assistant to a small, dedicated team. Measure key metrics before and after, such as deployment frequency, failure rate, and mean time to recovery (MTTR).

- Emphasize the “Human-in-the-Loop” Model: Position the AI as an assistant, not an autonomous replacement. All critical outputs, especially generated code and infrastructure configurations, must be reviewed and approved by a human expert. This is a central tenet of responsible AI deployment.

- Invest in Training and Education: Teams need to be trained not just on how to use the tool, but how to prompt it effectively and critically evaluate its output. This new skill, often called “AI whispering,” is becoming essential for modern developers.

- Address Data Privacy and Security: Be clear about what data the AI assistant can access. When using cloud-based GPT APIs, ensure that proprietary code and sensitive data are not being used to train public models. Many enterprise-grade assistants now offer on-premise or VPC deployment options to address these privacy concerns.
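The pilot metrics named in the first practice above (deployment frequency, failure rate, and MTTR) are simple to compute once deployments are recorded. The sketch below is a minimal version under stated assumptions: the `Deployment` record, the `pilot_metrics` function, and the sample data are invented for illustration, and MTTR is taken as the mean recovery time across failed deployments.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    failed: bool
    minutes_to_recover: float = 0.0  # only meaningful when failed is True


def pilot_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Compute deployment frequency, failure rate, and MTTR for one period."""
    total = len(deployments)
    failures = [d for d in deployments if d.failed]
    return {
        "deployments_per_week": total * 7 / period_days,
        "failure_rate": len(failures) / total if total else 0.0,
        "mttr_minutes": (
            sum(d.minutes_to_recover for d in failures) / len(failures)
            if failures else 0.0
        ),
    }


# Hypothetical two-week sample: eight deployments, two of which failed.
sample = (
    [Deployment(date(2024, 1, d), failed=False) for d in (1, 2, 4, 8, 10, 12)]
    + [
        Deployment(date(2024, 1, 5), failed=True, minutes_to_recover=30),
        Deployment(date(2024, 1, 11), failed=True, minutes_to_recover=90),
    ]
)

metrics = pilot_metrics(sample, period_days=14)
print(metrics)
```

Running the same function on a period before the pilot and a period after it gives the before-and-after comparison the pilot is meant to produce.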

Common Pitfalls and How to Avoid Them

Organizations should be wary of several potential pitfalls. Over-reliance on AI can lead to a gradual deskilling of the workforce, where engineers lose the ability to perform tasks manually. Another risk is the introduction of subtle but critical bugs or security flaws in AI-generated code that go unnoticed. Rigorous code reviews and a strong security-first culture are non-negotiable. Finally, as ongoing work on bias and fairness in language models highlights, it’s crucial to ensure that the AI’s training data doesn’t perpetuate biases that could affect its recommendations or code generation.

Section 4: The Future Landscape: Autonomous Agents and a Hyper-Competitive Ecosystem

The current wave of specialized assistants is just the beginning. The trajectory of GPT development points towards an even more integrated and autonomous future, fundamentally altering the nature of software development.

The Rise of Autonomous Software Engineering Agents

The next logical step, and a topic of intense discussion among researchers, is the evolution from assistant to autonomous agent. Instead of merely suggesting a fix for a failed deployment, a future AI agent could be granted the authority to automatically write the code for the fix, test it in a staging environment, and deploy it to production, all while keeping the human team informed. This vision of self-healing, self-optimizing systems is moving from science fiction towards reality, driven by advances in model scaling and more efficient inference engines.
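The propose, test, and deploy sequence described above can be sketched as a small control loop. This version keeps an explicit human approval gate, in line with the human-in-the-loop practice recommended earlier. A fully autonomous agent would omit that step. Every name here (`remediate`, `Outcome`, and the callables passed in) is hypothetical, and the callables stand in for real model and infrastructure calls.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    DEPLOYED = "deployed"
    REJECTED_BY_TESTS = "rejected_by_tests"
    REJECTED_BY_HUMAN = "rejected_by_human"


def remediate(
    failure_report: str,
    propose_fix: Callable[[str], str],
    passes_staging: Callable[[str], bool],
    human_approves: Callable[[str], bool],
    deploy: Callable[[str], None],
    notify: Callable[[str], None] = print,
) -> Outcome:
    """Propose a fix, verify it in staging, and deploy only after approval."""
    patch = propose_fix(failure_report)
    notify(f"Proposed fix: {patch}")
    if not passes_staging(patch):
        notify("Fix failed in staging; nothing deployed.")
        return Outcome.REJECTED_BY_TESTS
    if not human_approves(patch):
        notify("Reviewer declined the fix; nothing deployed.")
        return Outcome.REJECTED_BY_HUMAN
    deploy(patch)
    notify("Fix deployed to production.")
    return Outcome.DEPLOYED


# Stub collaborators stand in for a model, a staging run, and a reviewer.
deployed: list[str] = []
outcome = remediate(
    failure_report="apply-manifest step failed",
    propose_fix=lambda report: f"patch for: {report}",
    passes_staging=lambda patch: True,
    human_approves=lambda patch: False,
    deploy=deployed.append,
    notify=lambda message: None,
)
print(outcome, deployed)
```

Passing the collaborators in as functions keeps the gate logic testable in isolation and makes it obvious which single step would change if a team later chose to grant the agent more authority.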

A Burgeoning Ecosystem and the Open Source Movement

The market for these tools is growing rapidly. Established tech giants are integrating AI assistants into their platforms, while a vibrant ecosystem of startups is building highly specialized solutions for niche problems. Concurrently, the open-source community is playing a vital role. Projects built on open models provide greater transparency and customizability, allowing organizations to build their own specialized assistants tailored to their unique needs. This competition is fueling rapid innovation and driving down costs, benefiting the entire industry. Ongoing work on model architecture, especially around smaller and more efficient models, will further accelerate this trend, enabling powerful AI to run on edge devices.

Looking Ahead: Multimodality and Beyond

Future assistants will be multimodal, capable of understanding more than just text. As recent multimodal GPT models preview, an AI could watch a video of a user interacting with an application, identify a bug from their frustrated clicks, and automatically generate the code to fix it. It could analyze architectural diagrams and performance graphs to suggest infrastructure improvements. This holistic understanding of the entire development context will make these assistants indispensable partners in creating the next generation of software.

Conclusion: Embracing the Inevitable AI-Powered Future

The emergence of specialized GPT assistants for the software development lifecycle represents a paradigm shift. We are moving beyond generic AI tools and into an era of expert, integrated co-pilots that amplify human capability at every stage, from code generation to QA and DevOps. These tools offer a clear path to higher levels of productivity, reliability, and innovation. However, realizing this potential requires a strategic, human-centric approach to adoption that prioritizes security, privacy, and continuous learning. This shift is not just about a new category of software; it’s about a new way of building software. For engineering leaders and developers, the message is clear: the future of development is a collaborative partnership between human creativity and artificial intelligence, and the time to start building that future is now.