The Next Frontier in AI Customization: A Deep Dive into GPT-4o Fine-Tuning

The Dawn of Hyper-Specialized AI: Unpacking GPT-4o Fine-Tuning

The artificial intelligence landscape is in a constant state of rapid evolution, with each new development pushing the boundaries of what’s possible. For years, developers and organizations have leveraged the power of large language models (LLMs) like GPT-3.5 and GPT-4 through sophisticated prompt engineering and Retrieval-Augmented Generation (RAG). While effective, these methods often felt like giving instructions to a brilliant generalist. The latest development in GPT Models News marks a paradigm shift: OpenAI has now enabled fine-tuning for its flagship model, GPT-4o. This move transcends mere instruction-giving, allowing for the creation of truly bespoke, specialized AI models that learn from custom data.

This advancement is more than an incremental update; it represents a significant leap in the democratization of cutting-edge AI. By allowing businesses to imbue GPT-4o with their unique domain knowledge, brand voice, or specific operational logic, the door opens to a new class of hyper-specialized applications. This article provides a comprehensive technical deep dive into the world of GPT-4o fine-tuning, exploring its mechanics, strategic implications, and the vast potential it unlocks across industries. From GPT Training Techniques News to real-world GPT Applications News, we will cover the essential knowledge needed to harness this powerful new capability.

Section 1: The Evolution of AI Specialization: From GPT-3.5 to GPT-4o

To fully appreciate the significance of GPT-4o fine-tuning, it’s essential to understand the journey of AI customization. The ability to tailor a model’s behavior is the key to unlocking its true business value, and this capability has evolved dramatically.

What is Fine-Tuning? A Technical Refresher

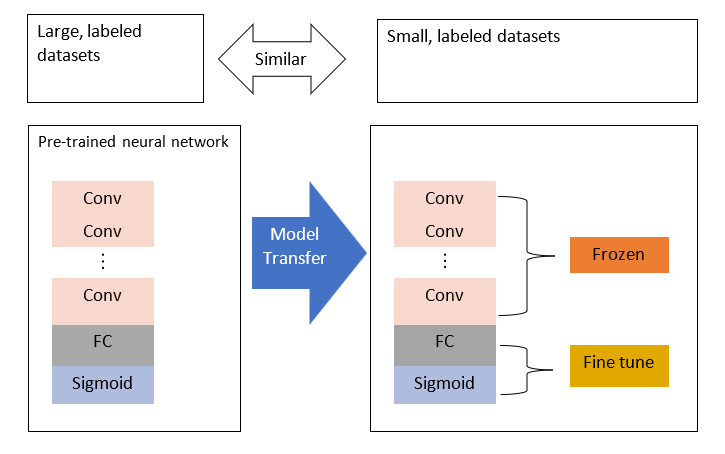

At its core, fine-tuning is the process of taking a pre-trained foundation model (like GPT-4o) and further training it on a smaller, domain-specific dataset. The base model has already learned grammar, reasoning, and a vast amount of general world knowledge from its initial training on internet-scale data. Fine-tuning doesn’t teach it these fundamentals again. Instead, it adjusts the model’s internal weights and biases to specialize in a particular style, format, or knowledge domain. This process is a core topic in GPT Research News, as it allows for efficient knowledge transfer without the prohibitive cost of training a model from scratch.

The primary goals of fine-tuning are to:

- Improve Steerability: Make the model more reliable at following complex instructions or adhering to a specific format.

- Enhance Reliability in Output Formatting: Ensure the model consistently produces outputs in a desired structure, such as valid JSON, which is crucial for GPT APIs News and integrations.

- Master a Custom Tone or Voice: Train the model to adopt a specific personality, from a formal legal advisor to a witty marketing copywriter.

Key Advancements with GPT-4o Fine-Tuning

While fine-tuning was available for older models like GPT-3.5 Turbo, its extension to GPT-4o is a game-changer. GPT-4o, OpenAI’s most advanced and efficient model, brings a superior baseline of reasoning, knowledge, and multimodal understanding. Although current fine-tuning focuses on text, the potential for future GPT Multimodal News and GPT Vision News is immense. Fine-tuning this more capable foundation means the resulting custom models start from a much higher performance ceiling. They can grasp more nuanced patterns in the training data and exhibit more sophisticated behavior, leading to significant improvements in task performance. This development is a major headline in OpenAI GPT News and sets a new standard for GPT Custom Models News.

Comparing the Old and the New: GPT-3.5 vs. GPT-4o

The decision to use GPT-3.5 or GPT-4o for fine-tuning involves a trade-off between cost, speed, and performance. Here’s a comparative breakdown:

| Feature | GPT-3.5 Turbo Fine-Tuning | GPT-4o Fine-Tuning |

|---|---|---|

| Base Model Capability | Strong general capabilities, good for simpler tasks. | State-of-the-art reasoning, knowledge, and instruction-following. |

| Task Complexity | Ideal for classification, summarization, and tone adoption. | Excels at complex, multi-step tasks, nuanced content generation, and specialized reasoning. |

| Training Cost | Lower cost per token, more accessible for initial experimentation. | Higher initial training cost, reflecting the more powerful architecture. |

| Inference Cost | Relatively low, suitable for high-volume applications. | Higher than GPT-3.5, but optimized for better price-performance than previous GPT-4 models. |

| Potential ROI | Good for tasks where “good enough” is sufficient. | Higher potential ROI for mission-critical tasks where accuracy and quality are paramount. |

This latest GPT-4 News confirms that for applications demanding the highest level of performance, the investment in GPT-4o fine-tuning is likely to yield substantial returns.

Section 2: A Technical Guide to GPT-4o Fine-Tuning

Successfully implementing fine-tuning requires a disciplined approach to data preparation, training, and evaluation. This section breaks down the technical workflow and highlights best practices and common pitfalls.

The Fine-Tuning Workflow: A Step-by-Step Guide

The process of creating a custom GPT-4o model follows a clear, API-driven workflow, a key topic in GPT Platforms News and GPT Tools News.

- Data Preparation: This is the most critical step. You must assemble a high-quality dataset of example conversations formatted in the chat completions API format. Each example should include a `system` message (defining the AI’s role), a `user` message (the prompt), and an `assistant` message (the ideal response).

- Dataset Upload: The prepared dataset, typically in a `.jsonl` file, is uploaded to OpenAI’s servers using their API.

- Fine-Tuning Job Creation: You initiate a fine-tuning job via the API, specifying the uploaded dataset file ID and the base model (`gpt-4o-2024-05-13`). You can also configure hyperparameters like the number of epochs.

- Monitoring and Completion: The job is queued and processed. OpenAI provides status updates, and upon completion, the name of your new custom model is provided (e.g., `ft:gpt-4o-2024-05-13:…`).

- Deployment and Inference: You can now use your custom model name in standard API calls, just as you would with a base model. This is a crucial aspect of GPT Deployment News, as it integrates seamlessly into existing infrastructure.

Crafting the Perfect Dataset: Best Practices

The quality of your fine-tuned model is directly proportional to the quality of your training data. This is a central theme in GPT Datasets News.

- Quality over Quantity: While OpenAI recommends at least 50-100 high-quality examples, a few hundred or thousand well-curated examples are far more effective than tens of thousands of noisy ones.

- Demonstrate, Don’t Just Tell: Your examples should actively demonstrate the desired behavior. If you want the model to be concise, provide concise assistant responses. If it needs to adopt a certain persona, write in that persona.

- Diverse and Comprehensive Coverage: Your dataset should cover the full range of inputs you expect the model to handle in production. Include edge cases, common user errors, and varied phrasing to build a robust model.

- Review and Refine: Have human experts review the dataset for accuracy, consistency, and potential biases. This step is critical for managing GPT Bias & Fairness News and ensuring GPT Safety News.

Common Pitfalls to Avoid

Navigating the fine-tuning process requires avoiding several common traps:

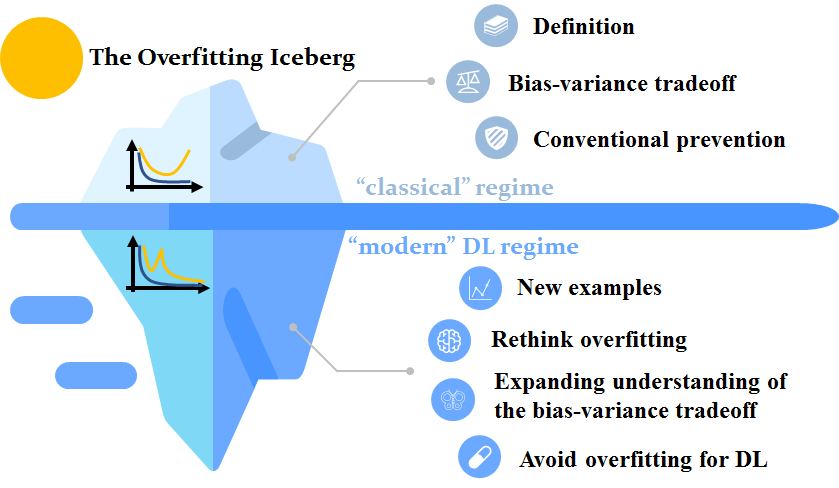

- Catastrophic Forgetting: Over-specializing the model on a narrow task can cause it to “forget” its general reasoning abilities. To mitigate this, include some diverse, general examples in your dataset.

- Overfitting: If your dataset is too small or lacks diversity, the model may simply memorize the examples rather than learning the underlying patterns. This leads to poor performance on new, unseen inputs.

- Data Contamination: Ensure your validation data is completely separate from your training data. Using overlapping data will give you an inflated sense of the model’s performance. This is a key principle in GPT Benchmark News.

- Ignoring Cost Implications: Both training and inference for fine-tuned models carry costs. A failure to model these costs can lead to budget overruns, especially in high-throughput applications. This relates to GPT Latency & Throughput News.

Section 3: Real-World Applications and Industry Impact

The ability to fine-tune GPT-4o unlocks powerful, domain-specific applications across a multitude of sectors. These are not just theoretical possibilities; they are practical solutions that can drive efficiency, quality, and innovation.

Case Study 1: GPT in Legal Tech News

A large law firm needs an AI assistant to help paralegals draft discovery requests. The requests must adhere to specific formatting, use precise legal terminology unique to the firm, and reference internal case management codes.

- Challenge: A general model like GPT-4o can draft legal documents but often misses the firm’s specific jargon and formatting nuances, requiring significant manual correction.

- Fine-Tuning Solution: The firm creates a dataset of several hundred anonymized, high-quality discovery requests previously drafted by senior paralegals. They fine-tune GPT-4o on this data.

- Result: The resulting custom model, `ft:gpt-4o-firm-legal-d1`, generates drafts that are 95% compliant with the firm’s standards, reducing drafting time by 70%. This is a landmark example for GPT in Legal Tech News.

Case Study 2: GPT in Healthcare News

A healthcare provider wants to automate the process of converting doctor-patient conversations into structured Electronic Health Record (EHR) notes in the SOAP (Subjective, Objective, Assessment, Plan) format.

- Challenge: Standard transcription services can capture the dialogue, but a general LLM struggles to accurately categorize the information into the correct SOAP sections and often misunderstands complex medical terminology.

- Fine-Tuning Solution: The provider uses a dataset of 1,000 anonymized transcripts paired with the corresponding gold-standard SOAP notes written by medical professionals.

- Result: The fine-tuned model becomes an expert medical scribe. It accurately identifies and sorts patient complaints, physician observations, diagnoses, and treatment plans, directly integrating with the EHR system. This breakthrough in GPT in Healthcare News significantly reduces administrative burden on clinicians.

Case Study 3: GPT in Marketing & Content Creation News

An e-commerce brand with a very distinct, quirky, and youthful brand voice wants to scale its content creation for product descriptions, social media posts, and email campaigns.

- Challenge: Prompting a base model with “write in a quirky and youthful tone” produces generic and inconsistent results that don’t match the brand’s unique style.

- Fine-Tuning Solution: The marketing team compiles a dataset of their best-performing content, including hundreds of examples of emails, tweets, and product descriptions.

- Result: The fine-tuned model, `ft:gpt-4o-brand-voice-v3`, perfectly captures the brand’s persona. It can generate on-brand copy instantly, allowing the marketing team to focus on strategy instead of routine writing tasks. This is a powerful application for GPT in Marketing News and GPT in Content Creation News.

Section 4: Strategic Considerations and Future Outlook

While technically accessible, adopting GPT-4o fine-tuning is a strategic decision that requires careful consideration of its costs, benefits, and alternatives.

When to Fine-Tune vs. When to Use Prompt Engineering or RAG

Fine-tuning is a powerful tool, but it’s not always the right one. Here’s a simple decision framework:

- Use Prompt Engineering when: Your task is relatively simple, you need to control the model’s behavior on a case-by-case basis, and you don’t have a large dataset of examples.

- Use RAG when: Your task requires the model to access and reason over specific, up-to-date, or proprietary documents (e.g., a knowledge base, product manuals). RAG is excellent for reducing hallucinations and citing sources.

- Use Fine-Tuning when: You need to teach the model a new skill, style, or format that is difficult to articulate in a prompt. It’s ideal for deeply embedding a specific persona or structural requirement into the model itself.

In many advanced systems, these techniques are combined. For instance, a fine-tuned model could be used within a RAG pipeline to better formulate queries or synthesize retrieved information in a specific format.

The Broader GPT Ecosystem: Looking Ahead

The release of GPT-4o fine-tuning is a significant event in the ongoing GPT Trends News. It signals a move towards more accessible and powerful AI customization. Looking forward, we can anticipate several developments:

- Multimodal Fine-Tuning: The next logical step is allowing fine-tuning on images and audio, which would revolutionize fields like medical imaging analysis and custom voice assistants. This remains a hot topic for GPT Future News.

- Increased Efficiency: As research into GPT Efficiency News, GPT Compression News, and GPT Quantization News continues, the cost of both training and inference for custom models will likely decrease, making them even more accessible.

- The Road to GPT-5: The learnings from how developers use GPT-4o fine-tuning will undoubtedly inform the architecture and capabilities of future models. Speculation around GPT-5 News suggests that built-in, efficient customization will be a core design principle.

Conclusion: A New Era of AI Partnership

The introduction of GPT-4o fine-tuning is a pivotal moment in the evolution of artificial intelligence. It marks the transition from using AI as a versatile but generic tool to creating a true, specialized partner deeply integrated with an organization’s unique knowledge and processes. This capability empowers developers and businesses to build more intelligent, reliable, and personalized applications than ever before. However, harnessing this power requires a strategic approach, a commitment to data quality, and a clear understanding of its costs and benefits.

By mastering the art and science of fine-tuning, organizations can move beyond simply prompting an AI and begin to truly shape its core behavior, unlocking unprecedented value and building a durable competitive advantage in an increasingly AI-driven world. The news of GPT-4o fine-tuning isn’t just another feature release; it’s an invitation to build the future of specialized intelligence.