Beyond the Monopoly: GPT-5, o3, and the Surge of High-Performance Competitors

Introduction

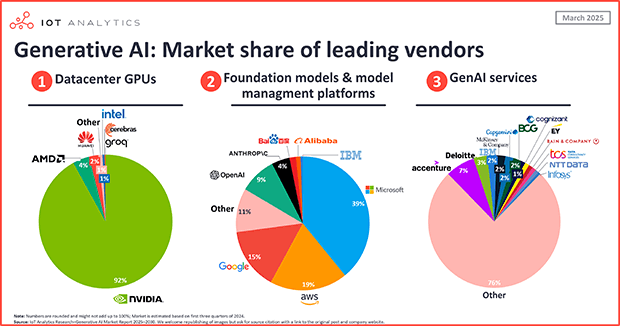

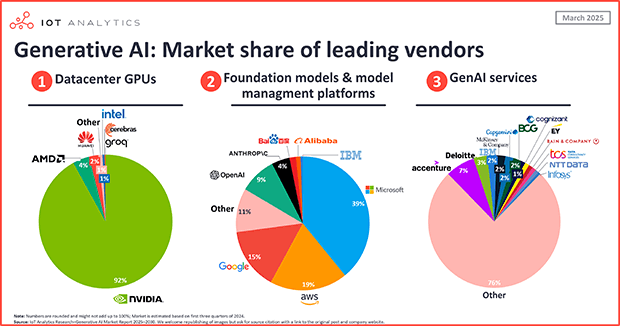

The landscape of artificial intelligence is undergoing a seismic shift. For years, OpenAI was widely regarded as the clear leader in high-performance Large Language Models (LLMs), with each iteration of the Generative Pre-trained Transformer setting the de facto standard for the industry. However, recent developments indicate that the era of a single dominant model is coming to an end. A closer look at the latest model releases reveals a more complex picture: while GPT-5 is a substantial upgrade over GPT-4, internal competition from reasoning-focused models like o3, combined with external pressure from formidable rivals, has fractured the market.

The narrative is no longer just about OpenAI versus the world; it is about the diversification of intelligence. Developers and enterprises are discovering that for specific tasks, particularly code generation and complex reasoning, competitors like Anthropic’s Claude and open-source champions are chipping away at OpenAI’s former lead. This article analyzes this new competitive dynamic: who the competitors are, why reasoning models are on the rise, and what the future holds for the broader ecosystem.

Section 1: The New Hierarchy of AI Intelligence

To understand the current state of play, we must look beyond simple version numbers. GPT-5 suggests a continued bet on large-scale training, yet the arrival of “o-series” models (like o1 and o3) has introduced a split in how models are built and used. We are witnessing a divide between “instinctive” fast thinkers and “deliberate” deep thinkers.

The Internal Battle: GPT-5 vs. o3

For a long time, progress in GPT models came from increasing parameter counts and training data size. GPT-5 follows this tradition, offering a generalized intelligence boost. However, research has pivoted toward “system 2” thinking: models that spend extra computation “thinking” before they answer. The o3 model represents this paradigm shift. While GPT-5 might offer broader knowledge and smoother conversation, o3 excels in logic, math, and scientific reasoning.

This creates a unique dilemma for anyone following OpenAI: the flagship model (GPT-5) may not actually be the “smartest” model for every task. For users requiring deep analytical capabilities, the reasoning models (o3) often outperform the generalist models, even if the generalist models are newer. This internal cannibalization is a key trend to watch.

The External Siege: Claude and the Coding Wars

While OpenAI diversifies internally, external competitors have struck gold in vertical specializations. Most notably, discussion of coding models is now dominated by comparisons to Anthropic’s Claude 3.5 Sonnet and Claude 3.7 Sonnet. Developers have increasingly migrated to Claude for software engineering tasks. A common view in the engineering community is that while GPT models are excellent generalists, competitors have optimized their models to better understand repository context, refactor complex codebases, and minimize syntax hallucinations.

This erosion of dominance in the coding sector is critical because developers are the kingmakers of the AI industry. If the builders choose a competitor’s API for their backend, the application layer follows suit. The shift is already visible in the API market, where integration platforms are rushing to offer model-agnostic solutions rather than remaining OpenAI-exclusive.

The Open Source Tsunami

Parallel to the proprietary wars, open-source development continues to accelerate. Meta’s Llama series and Mistral’s models are proving that you do not need a trillion-parameter model behind a paywall to achieve strong results for many use cases. Distillation has become a hot topic: the capabilities of massive models are transferred into smaller, efficient open-weights models that can run locally. This challenges the very business model of giant proprietary APIs.

Section 2: Detailed Analysis of Capabilities and Benchmarks

In this section, we break down the technical nuances that separate the current generation of models. The competition is no longer just about “vibes”; it is about latency, context windows, and multimodal capabilities.

Code Generation and Reasoning

As mentioned, the battle over coding models is fierce. In practical scenarios, such as refactoring a legacy Python codebase or converting React components to TypeScript, competitors like Claude have demonstrated strong “needle-in-a-haystack” retrieval and logic adherence.

Real-World Scenario: Consider a DevOps team automating infrastructure. GPT-4 was often praised for its ability to write scripts. However, when the context spans 50 files, newer competitor models often maintain state better, remembering variable definitions from file A while editing file Z. This reliability is driving demand for models with massive, high-fidelity context windows.

Multimodal Capabilities: Vision and Voice

Multimodal capabilities, vision in particular, remain areas where OpenAI fights aggressively. The integration of native audio and vision in models like GPT-4o set a high bar. However, Google’s Gemini has countered with native multimodal understanding that can process long-form video content.

For healthcare applications, such as analyzing X-rays or transcribing doctor-patient consultations, the field is splitting. Some providers prefer the low latency of GPT-4o, while others prefer the massive context window of Gemini 1.5 Pro to process entire patient histories in one prompt.

Efficiency, Latency, and the Edge

Efficiency is driving the next wave of adoption. Not every task requires GPT-5 level intelligence. Quantization and compression, which shrink a model by storing its weights at lower precision or removing redundancy, are vital for running models on devices.
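To make the on-device argument concrete, here is a rough back-of-the-envelope sketch of how precision affects weight storage. The 7-billion-parameter figure is a hypothetical example rather than a reference to any specific model, and the estimate covers weights only; activations, KV cache, and runtime overhead are ignored.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


# Hypothetical 7-billion-parameter model at three common precisions.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts storage by a factor of four, which is often the difference between a model that fits in a laptop's or phone's memory and one that does not.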

At the edge, the trend is toward “Small Language Models” (SLMs). Apple and Microsoft are pushing for on-device models that handle privacy-sensitive data without hitting the cloud. Here, the competitors are not just other giant labs, but optimized versions of Llama and Phi. For real-time applications (voice bots, translation), “tokens per second” is becoming as important a metric as raw intelligence.
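As a minimal sketch of how “tokens per second” might be measured, the snippet below times any iterable that yields tokens. The `fake_stream` generator is a stand-in invented for illustration; in practice it would be replaced by whatever streaming interface your provider or local runtime exposes.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_stream(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Consume a token stream and report throughput and time to first token."""
    start = clock()
    first_token_at = None
    count = 0
    for _ in stream:
        now = clock()
        if first_token_at is None:
            first_token_at = now
        count += 1
    total = clock() - start
    return {
        "tokens": count,
        "time_to_first_token_s": (
            first_token_at - start if first_token_at is not None else None
        ),
        "tokens_per_second": count / total if total > 0 else 0.0,
    }


def fake_stream(n: int, delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a model: yields n tokens with a fixed delay."""
    for i in range(n):
        time.sleep(delay_s)
        yield f"tok{i}"


stats = measure_stream(fake_stream(20, 0.01))
print(stats)
```

Time to first token is tracked separately because, for voice and chat interfaces, the delay before anything appears is often what users notice first.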

Agents and Tool Use

The holy grail of AI is agency: models that can take action. While OpenAI pushed the “GPTs” store, the underlying reliability of function calling is where the war is won. Competitors are optimizing their models specifically for tool use (API calling). If a model can reliably query a database, format the data, and send an email without hallucinating a parameter, it wins the enterprise contract, regardless of whether it writes better poetry.
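One common defense against hallucinated parameters is to validate every tool call before executing it. The sketch below assumes the model emits a JSON object with `name` and `arguments` fields; that shape, the `TOOLS` registry, and the tool names are all hypothetical and chosen for illustration, not taken from any particular provider's API.

```python
import json
from typing import Tuple

# Hypothetical tool registry: tool name -> required parameters and their types.
TOOLS = {
    "send_email": {"to": str, "subject": str, "body": str},
    "query_orders": {"customer_id": int, "limit": int},
}


def validate_tool_call(raw: str) -> Tuple[bool, str]:
    """Check a model-produced tool call against the registry before running it."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"not valid JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "tool call must be a JSON object"

    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        return False, f"unknown tool: {name!r}"

    args = call.get("arguments")
    if not isinstance(args, dict):
        return False, "arguments must be a JSON object"

    schema = TOOLS[name]
    missing = sorted(set(schema) - set(args))
    if missing:
        return False, f"missing parameters: {missing}"
    extra = sorted(set(args) - set(schema))
    if extra:
        return False, f"unexpected parameters: {extra}"

    for key, expected in schema.items():
        value = args[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            return False, f"parameter {key!r} should be {expected.__name__}"
    return True, "ok"


print(validate_tool_call('{"name": "query_orders", "arguments": {"customer_id": 42, "limit": 5}}'))
print(validate_tool_call('{"name": "query_orders", "arguments": {"customer": 42, "limit": 5}}'))
```

Counting how often each candidate model fails this kind of check on your own prompts gives a simple, task-specific measure of tool-use reliability.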

Section 3: Implications for Industry and Society

The fragmentation of the model landscape has profound implications across various sectors. The days of “wrapping ChatGPT” are over; successful integration now requires a multi-model strategy.

The Rise of Model Routing

Across platforms and integrations, we are seeing the emergence of “AI Gateways.” These systems route each prompt to the best model for the job. Simple queries go to GPT-3.5-class models (or GPT-4o mini), complex creative writing goes to GPT-5, and heavy coding tasks might be routed to Claude. This commoditization of intelligence puts pressure on pricing.
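A gateway's routing decision can be sketched with a few keyword heuristics. Everything below is illustrative: the model names are placeholders rather than real API identifiers, and the keyword lists and the 30-word threshold are arbitrary assumptions. Production gateways typically use a trained classifier or a cheap model to make this decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str   # placeholder model name, not a real API identifier
    reason: str


# Hypothetical keyword hints; a real gateway would likely use a classifier.
CODE_HINTS = ("def ", "class ", "traceback", "refactor", "stack trace", "compile")
REASONING_HINTS = ("prove", "derive", "step by step", "calculate")


def route(prompt: str) -> Route:
    """Pick a backend for a prompt using simple, ordered heuristics."""
    text = prompt.lower()
    if any(hint in text for hint in CODE_HINTS):
        return Route("coding-model", "looks like a coding task")
    if any(hint in text for hint in REASONING_HINTS):
        return Route("reasoning-model", "needs multi-step reasoning")
    if len(text.split()) <= 30:
        return Route("small-fast-model", "short, simple query")
    return Route("general-flagship-model", "long or open-ended request")


for example in (
    "Refactor this module to remove the global state",
    "Prove that the sum of two even numbers is even",
    "What is the capital of France?",
):
    print(example, "->", route(example))
```

The order of the checks encodes priority: a short prompt that mentions refactoring still goes to the coding model rather than the cheap one.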

Sector-Specific Disruption

- Education: Educational tools are moving away from generic tutors to specialized fine-tuned models. Smaller, curriculum-aligned models can outperform massive generalist models in teaching specific subjects while reducing the risk of hallucinations.

- Finance: In fintech, privacy is paramount. Financial institutions are training proprietary custom models on secure data, often preferring open-source bases they can control over sending data to public APIs.

- Legal tech: Legal analysis requires extreme precision, and the hallucination rates of early models were a barrier. The new reasoning models (o3) and competitors with large context windows are making automated contract review far more viable.

- Marketing: Creativity is subjective, and marketers are finding that different models have different “voices.” Agencies increasingly use a mix of models to generate diverse copy variations.

Regulation, Safety, and Ethics

As competition heats up, safety and ethics are under the microscope. There is a tension between the race for capability and the need for safety. Bias and fairness remain critical concerns, as every model exhibits different cultural biases depending on the datasets it was trained on.

Regulation, such as the EU AI Act, is pushing providers to be more transparent. This favors open-source models in some respects, since open weights are easier to inspect, but challenges them in others, particularly around liability. The industry is also watching alignment research closely: how do we ensure these increasingly capable competitors remain aligned with human intent?

Section 4: Strategic Recommendations and Best Practices

For developers, CTOs, and enthusiasts navigating this saturated market, here are the pros, cons, and strategic recommendations.

Pros and Cons of the Multi-Model World

Pros:

- No Vendor Lock-in: You are no longer beholden to a single API provider’s uptime or pricing changes.

- Cost Optimization: You can route easy tasks to cheaper models and reserve expensive ones for work that needs them.

- Specialization: Access to the best coding model, the best creative model, and the best reasoning model simultaneously.

Cons:

- Integration Complexity: Managing multiple API keys, prompt formats, and tokenization schemes is difficult.

- Inconsistent Safety Filters: Different providers have different guardrails, making compliance harder to standardize.

- Moving Targets: The “best” model changes every month, requiring constant benchmarking.

Best Practices for Deployment

- Implement an Abstraction Layer: Never hard-code OpenAI’s SDK directly into your core business logic if possible. Use libraries or gateways that allow you to swap the backend model (e.g., from GPT-5 to Claude or Llama) via configuration.

- Focus on Evaluation (Evals): Published benchmarks are often closer to marketing than to measurement. Build your own internal evaluation datasets relevant to your specific use case. Test how GPT-5, o3, and competitors handle your data.

- Leverage RAG (Retrieval-Augmented Generation): Regardless of how smart the model is, it doesn’t know your private data. In practice, a good RAG pipeline with a mid-tier model often beats a top-tier model with no context.

- Watch Latency and Throughput: For chatbots and customer service, speed is a feature. Users abandon bots that take too long to “think,” even if the answer is smarter. Balance the o3 “reasoning time” against user patience.

- Prepare for the Edge: If your application involves IoT, plan for on-device inference. The future is hybrid: running small models locally on the device for privacy and speed, and calling the cloud only for heavy lifting.

Conclusion

The release of GPT-5 and the o3 reasoning models marks a pivotal moment in AI history, but perhaps not for the reasons we originally thought. It is not the consolidation of power, but the fracturing of it. The competitive landscape shows that the gap between the leader and the pack has largely closed. Whether it is Claude leading in coding, Google pushing the boundaries of multimodal context, or open-source models democratizing access, the ecosystem is healthier and more competitive than ever.

For the end-user, this is the best possible outcome. It drives down costs, accelerates innovation in hardware and inference engines, and forces providers to compete on utility rather than just hype. As cross-lingual support improves and global adoption grows, the question is no longer “Which model is the best?” but rather “Which combination of models best solves my specific problem?” The age of the AI monolith is over; the age of the AI ecosystem has begun.