The Hidden Cost of Intelligence: Navigating the New Era of GPT Benchmarks and Energy Efficiency

Introduction

The artificial intelligence landscape is undergoing a profound transformation, shifting its focus from raw capability to sustainable efficiency. As the industry anticipates the arrival of next-generation frontier models, the discourse surrounding GPT benchmarks has evolved. Historically, benchmarks focused almost exclusively on intellectual prowess, such as how well a model could score on the LSAT, write Python code, or generate creative fiction. However, as models grow rapidly in size and complexity, a new critical metric has emerged: resource intensity.

With major providers like OpenAI becoming increasingly guarded about the architectural specifics of upcoming iterations such as GPT-5, the technical community faces a “black box” dilemma. We are entering an era in which the energy consumption, carbon footprint, and inference costs of these models matter as much as their reasoning capabilities. This opacity has triggered a surge in independent research and third-party auditing aimed at estimating the environmental and economic costs of large language models (LLMs). For developers, enterprises, and researchers, understanding these hidden metrics is no longer optional; it is a prerequisite for responsible GPT deployment and strategic planning.

This article examines the changing world of AI evaluation, exploring how experts are developing new methodologies to benchmark energy use in the absence of official data, and what this means for the future of the GPT ecosystem.

Section 1: The Black Box Dilemma and the Rise of Efficiency Benchmarking

The Shift from Performance to Sustainability

For the past few years, news about OpenAI’s GPT models has been dominated by headlines touting massive leaps in parameters and training tokens. GPT-3.5 brought AI to the masses, and GPT-4 demonstrated reasoning capabilities that rivaled human experts on many professional exams. However, diminishing returns are beginning to collide with physical limitations. The computational power required to train and run these models is immense, which has driven a surge of interest in efficiency.

The core issue is a lack of transparency. Unlike the open research culture of the past, the specifications of state-of-the-art models are now closely guarded trade secrets. This includes architectural details, such as whether a model uses a dense architecture or a Mixture-of-Experts (MoE) design. Without this data, calculating precise energy consumption per token becomes a challenge. Consequently, competitors and open-source advocates are increasingly emphasizing transparency as a competitive advantage against closed providers.

Why Energy Benchmarks Matter

The demand for energy-focused GPT benchmarks is driven by three main factors:

- Cost Operationalization: For businesses integrating GPT APIs, energy usage feeds directly into the provider’s serving costs, which in turn shape API pricing. Models that are expensive to run tend to be expensive to use.

- Environmental Impact: AI ethics now encompasses environmental stewardship. The carbon footprint of training and inference is a growing concern for ESG-conscious corporations.

- Hardware Constraints: As the industry moves toward edge and on-device AI, understanding power draw is essential. GPT-class intelligence cannot be deployed on mobile devices if its energy requirements drain batteries in minutes.

This shift is also influencing regulation. Governments worldwide are beginning to draft frameworks that may require AI labs to disclose energy consumption data, treating compute resources much like industrial emissions.

Section 2: Methodologies for Benchmarking Opaque Models

Reverse-Engineering Resource Usage

How do experts benchmark the energy consumption of a model like GPT-4 or the anticipated GPT-5 when the weights are hidden? The answer lies in “black-box” testing techniques that analyze the model’s inference behavior from the outside.

Researchers use latency and throughput measurements as a proxy for model size and complexity. By measuring the Time To First Token (TTFT) and the inter-token latency under various load conditions, analysts can draw inferences about the underlying architecture. For instance, unusual latency patterns on certain kinds of tasks might hint at features such as expert routing in an MoE design, although batching and server load can produce similar effects, so such inferences remain tentative. By correlating these latency figures with the known power profiles of H100 or A100 GPU clusters, experts can derive estimated ranges of energy usage.
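The latency side of this approach can be sketched in a few lines. The sketch below assumes you have recorded when a streaming request was sent and when each token arrived; the energy figure simply multiplies the request’s assumed share of cluster power by its duration. The per-GPU wattage (700 W, roughly the rated maximum of an H100 SXM board), the GPU count, and the concurrency level are hypothetical inputs, since these are exactly the details closed providers do not publish.

```python
from dataclasses import dataclass


@dataclass
class StreamTiming:
    request_sent: float        # seconds: when the request left the client
    token_times: list[float]   # seconds: arrival time of each streamed token


def ttft(t: StreamTiming) -> float:
    """Time To First Token: delay between sending the request and the first token."""
    return t.token_times[0] - t.request_sent


def mean_inter_token_latency(t: StreamTiming) -> float:
    """Average gap between consecutive streamed tokens."""
    gaps = [b - a for a, b in zip(t.token_times, t.token_times[1:])]
    if not gaps:
        raise ValueError("need at least two tokens to measure inter-token latency")
    return sum(gaps) / len(gaps)


def estimated_joules_per_token(t: StreamTiming, assumed_watts_per_gpu: float,
                               assumed_gpus: int, assumed_concurrent_requests: int) -> float:
    """Rough attribution: this request's share of cluster power over its duration.

    All three hardware parameters are guesses about the provider's serving setup;
    the result is only as good as those assumptions.
    """
    duration = t.token_times[-1] - t.request_sent
    share_of_power = assumed_watts_per_gpu * assumed_gpus / assumed_concurrent_requests
    return share_of_power * duration / len(t.token_times)


# Hypothetical timings for one streamed response of five tokens.
timing = StreamTiming(request_sent=0.0, token_times=[0.50, 0.55, 0.60, 0.65, 0.70])
print(f"TTFT: {ttft(timing):.2f} s")
print(f"Mean inter-token latency: {mean_inter_token_latency(timing) * 1000:.0f} ms")
print(f"Estimated energy: {estimated_joules_per_token(timing, 700, 8, 32):.1f} J/token")
```

Because the wattage, GPU count, and concurrency are guesses, the output is best reported as a range by re-running the estimate over plausible values for each.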

The Role of Quantization and Compression

A significant area of focus in model optimization is quantization: reducing the numerical precision of a model’s parameters (e.g., from 16-bit floating point to 4-bit integers). Benchmarks now frequently compare the “full” model against its quantized counterparts to measure the trade-off between accuracy and energy efficiency.
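The core of quantization can be shown with a toy example. The sketch below uses symmetric per-tensor quantization to the signed 4-bit range, which is only one simple scheme (production methods such as GPTQ or AWQ are considerably more sophisticated); the weight values are invented for illustration.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to the signed 4-bit range [-8, 7]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # map the largest magnitude to +/-7
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floating-point weights from the 4-bit codes."""
    return [c * scale for c in codes]


# Hypothetical 16-bit weights from one tiny tensor.
weights = [0.12, -0.53, 0.91, -0.07, 0.33, -0.88]
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
fp16_bits = 16 * len(weights)
int4_bits = 4 * len(weights) + 32  # 4 bits per weight plus one 32-bit scale factor
print("codes:", codes)
print(f"max reconstruction error: {max_error:.4f}")
print(f"storage: {fp16_bits} bits -> {int4_bits} bits")
```

Rounding error per weight is bounded by half the scale, which is the accuracy cost benchmarks try to measure. On a six-weight toy tensor the shared scale factor eats much of the saving; on real tensors with millions of weights the storage ratio approaches 4x.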

Compression and distillation are also becoming headline topics. Distillation uses a massive “teacher” model (like GPT-4) to train a smaller, more efficient “student” model. Benchmarks of these student models often show that they can achieve roughly 80% of the teacher’s performance on targeted tasks at a fraction of the energy cost. This is particularly relevant for custom models, where enterprises fine-tune smaller models for specific tasks to avoid the overhead of generalist giants.
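Distillation is typically trained with a loss that pulls the student’s output distribution toward the teacher’s. The sketch below implements the widely used soft-target formulation of knowledge distillation in plain Python: a KL divergence between temperature-softened distributions, scaled by the squared temperature. It illustrates the general technique, not how any particular vendor distills its models, and the logits are made up.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    This is the soft-target term of knowledge distillation; in practice it is
    usually combined with an ordinary loss on ground-truth labels.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical next-token logits over a 4-token vocabulary.
teacher = [4.0, 1.5, 0.5, -1.0]
close_student = [3.5, 1.4, 0.6, -0.8]
far_student = [0.0, 2.0, 1.0, 1.0]
print(f"close student loss: {distillation_loss(teacher, close_student):.4f}")
print(f"far student loss:   {distillation_loss(teacher, far_student):.4f}")
```

The temperature exposes the teacher’s relative preferences among unlikely tokens, which is part of what lets a small student recover much of a large teacher’s behavior.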

Multimodal Complexity

The challenge of benchmarking is compounded by multimodality. When a model processes not just text but also images or audio, the computational load varies widely. A benchmark that only tests text generation fails to capture the energy spike associated with processing a high-resolution image. Current research efforts therefore focus on composite benchmarks that simulate real-world workflows, combining visual analysis, code execution, and creative writing, to provide a holistic view of energy consumption.
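One simple way to turn a composite benchmark into a single figure is to weight each workflow’s energy per request by its share of real traffic. The sketch below assumes you already have per-request energy estimates for each workflow; the traffic mix and joule values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    traffic_share: float        # fraction of requests of this type (shares sum to 1)
    joules_per_request: float   # measured or estimated energy for one request


def composite_joules_per_request(workloads: list[Workload]) -> float:
    """Traffic-weighted average energy per request across a workflow mix."""
    total_share = sum(w.traffic_share for w in workloads)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError(f"traffic shares must sum to 1, got {total_share}")
    return sum(w.traffic_share * w.joules_per_request for w in workloads)


# Hypothetical workflow mix and per-request energy figures.
mix = [
    Workload("text generation", 0.60, 100.0),
    Workload("image analysis", 0.25, 400.0),
    Workload("code execution", 0.15, 200.0),
]
text_only = mix[0].joules_per_request
composite = composite_joules_per_request(mix)
print(f"text-only benchmark: {text_only:.0f} J/request")
print(f"composite benchmark: {composite:.0f} J/request")
```

With these invented numbers a text-only benchmark reports 100 J per request while the traffic-weighted figure is 190 J, which is the kind of gap the paragraph above warns about.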

Section 3: Implications for Industry and Society

The Economic Divide in AI Adoption

The opacity of energy metrics creates an uneven playing field in the GPT ecosystem. Large tech conglomerates can absorb the high costs of inefficient inference, but startups and smaller institutions cannot. If the energy consumption of next-generation models remains undisclosed, smaller players risk building business models on GPT platforms that become economically unsustainable if energy prices fluctuate or if API prices rise to cover utility bills.
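This exposure can be stress-tested before committing to a platform. The sketch below assumes simple per-token API billing and shows how a plausible price increase can push a fixed workload past its budget; the request volume, tokens per request, the $10 per million tokens base price, and the budget are all hypothetical and do not reflect any provider’s actual rates.

```python
def monthly_api_cost(requests_per_day: int, tokens_per_request: int,
                     usd_per_million_tokens: float, days: int = 30) -> float:
    """Monthly spend for a workload billed per token."""
    tokens = requests_per_day * tokens_per_request * days
    return tokens / 1_000_000 * usd_per_million_tokens


def price_stress_test(base_price: float, increases_pct: list[int],
                      **workload) -> dict[int, float]:
    """Monthly cost after each hypothetical percentage increase in the per-token price."""
    return {
        pct: monthly_api_cost(usd_per_million_tokens=base_price * (1 + pct / 100), **workload)
        for pct in increases_pct
    }


# Hypothetical startup workload: 10,000 requests/day at 1,500 tokens each.
costs = price_stress_test(10.0, [0, 25, 50, 100],
                          requests_per_day=10_000, tokens_per_request=1_500)
monthly_budget = 6_000.0
for pct, cost in costs.items():
    status = "within budget" if cost <= monthly_budget else "OVER budget"
    print(f"+{pct:>3}% price: ${cost:,.0f}/month ({status})")
```

Under these assumptions the workload costs $4,500 a month today but exceeds the budget once prices rise by 50%, which is the sort of margin a smaller player would want to know in advance.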

This is particularly critical in sectors like education and healthcare, where schools and hospitals operate on tight budgets. An educational tool powered by a high-energy model might be too costly to deploy at scale. As a result, tooling in these sectors is increasingly built on “Small Language Models” (SLMs) that offer transparency and predictable operational costs.

Real-World Scenario: Financial Modeling

Consider a scenario in finance. A hedge fund wants to use an LLM to analyze market sentiment in real time. It has two choices: a proprietary “black box” model with state-of-the-art reasoning but unknown energy intensity, or an open-source model with slightly weaker reasoning but fully benchmarked resource usage.

If the firm chooses the proprietary model, it might face latency issues during peak trading hours due to the model’s heavy computational overhead, a risk that is hard to assess because the provider’s inference stack is not transparent. Conversely, by choosing a benchmarked, optimized model, the firm can verify that latency stays low and predictable, which is critical for time-sensitive trading decisions. This illustrates how benchmarking is not just an academic exercise but a critical business intelligence tool.
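Whether a model holds up at peak load is better judged by tail latency than by averages. The sketch below checks a nearest-rank p99 latency against a budget; the sample latencies and the 500 ms budget are hypothetical. A real pilot would collect hundreds of samples under realistic concurrency, since with only ten samples the p99 is simply the worst case.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_latency_budget(samples_ms: list[float], budget_ms: float, pct: int = 99) -> bool:
    """True if the pct-th percentile latency is within the budget."""
    return percentile(samples_ms, pct) <= budget_ms


# Hypothetical end-to-end latencies (ms) from a simulated peak-hour pilot.
proprietary = [420, 450, 480, 510, 530, 560, 900, 1_400, 2_100, 3_000]
optimized_open = [300, 310, 320, 330, 335, 340, 350, 360, 380, 410]
for name, samples in [("proprietary", proprietary), ("optimized open", optimized_open)]:
    print(name, "p99 =", percentile(samples, 99), "ms, meets 500 ms budget:",
          meets_latency_budget(samples, 500))
```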

Safety and Reliability Concerns

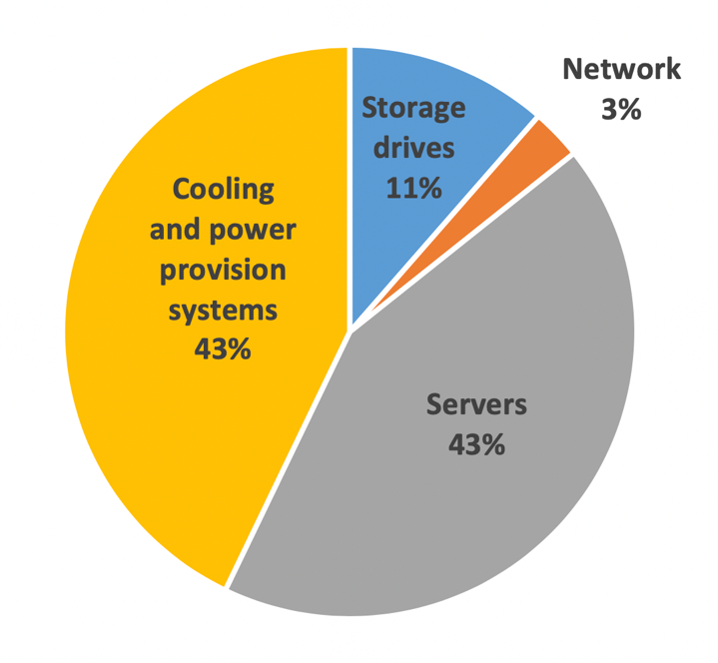

AI safety is closely linked to system stability. Heavy inference workloads generate substantial heat and stress data center infrastructure. In extreme cases, this can lead to thermal throttling, resulting in erratic response times or service outages. Bias and fairness also intersect here: if high-efficiency (low-cost) models are significantly less capable or more biased than high-energy models, we risk a two-tiered society in which only the wealthy can afford “unbiased” and “smart” AI.

Section 4: Strategic Recommendations for the AI Era

Best Practices for Model Selection

In light of these trends, organizations should adopt a “Green AI” strategy. Here are key recommendations for navigating the current landscape:

- Demand Transparency: When negotiating integrations with vendors, ask for energy metrics. If they cannot provide them, conduct independent pilot tests that measure latency and throughput as proxies for cost and energy.

- Right-Size the Model: Do not use a sledgehammer to crack a nut. For tasks like sentiment analysis or basic summarization, fine-tune a smaller model rather than querying the largest available frontier model. This is standard optimization practice.

- Leverage the Edge: For IoT applications, prioritize models capable of running on edge hardware. This reduces reliance on central data centers and minimizes network latency.

- Monitor Inference Engines: Track developments in hardware and serving software. The efficiency of a model depends heavily on the inference engine (e.g., vLLM, TensorRT-LLM) and the underlying hardware, and optimizing the software stack alone can yield substantial energy savings, potentially up to 50% depending on the workload.
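Pilot results like those recommended above can feed a simple right-sizing rule: among the models that clear an accuracy bar on your own task, pick the one with the lowest measured energy (or cost) per request. The sketch below assumes you already have those pilot numbers; the model names, accuracy scores, and energy figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str                   # hypothetical model label
    task_accuracy: float        # score on your own task-specific eval set, 0..1
    joules_per_request: float   # measured in your pilot, or estimated from latency


def right_size(candidates: list[Candidate], min_accuracy: float) -> Optional[Candidate]:
    """Pick the lowest-energy model that still clears the accuracy bar for the task."""
    eligible = [c for c in candidates if c.task_accuracy >= min_accuracy]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c.joules_per_request)


# Hypothetical pilot results for a sentiment-analysis task.
pilot = [
    Candidate("frontier-model", 0.94, 900.0),
    Candidate("mid-size-model", 0.92, 250.0),
    Candidate("small-fine-tuned-model", 0.91, 40.0),
]
choice = right_size(pilot, min_accuracy=0.90)
print("selected:", choice.name if choice else "none meets the bar")
```

The accuracy threshold is the business decision; the rule only makes explicit that any model above it is “good enough” and the remaining choice is about efficiency.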

Navigating the “Black Box” Future

Looking ahead, it is likely that the largest models will remain closed. However, the open-source community is rapidly catching up. Open-weight models like Llama 3 or Mixtral offer competitive performance and can be benchmarked directly on your own hardware. For many use cases, such as content creation or marketing, the open-source route provides a better balance of capability, cost, and control.

Furthermore, keep an eye on AI agents. As AI systems become agentic, performing multiple steps to achieve a goal, the compounding energy cost of chained prompts can grow quickly. Benchmarking the efficiency of agentic workflows will be the next frontier in AI research.
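The compounding effect is easy to quantify under one simplifying assumption: each step of the agent resends the entire conversation so far, with no prompt caching. The sketch below counts input tokens only; the prompt size and per-step growth are hypothetical.

```python
def agent_input_tokens(base_prompt_tokens: int, tokens_added_per_step: int, steps: int) -> int:
    """Total input tokens processed when every step resends the full, growing context.

    Assumes no prompt caching: step k (0-based) reprocesses the base prompt plus
    everything appended in the k earlier steps.
    """
    total = 0
    context = base_prompt_tokens
    for _ in range(steps):
        total += context
        context += tokens_added_per_step
    return total


# Hypothetical agent: 1,000-token prompt, each step appends ~500 tokens of tool output.
for steps in (1, 5, 10, 20):
    print(f"{steps:>2} steps -> {agent_input_tokens(1_000, 500, steps):,} input tokens")
```

Going from 10 to 20 steps raises input tokens from 32,500 to 115,000, roughly 3.5 times, because the total grows with the square of the step count. Prompt caching, truncating old context, or summarizing earlier steps can flatten this curve.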

Conclusion

The narrative surrounding generative AI is maturing. We are moving past the initial hype cycle, in which capability was the only metric that mattered. The emerging focus on benchmarking energy and resource efficiency signals a shift toward sustainable, scalable, and economically viable AI. While major players may withhold specific data about GPT-5 or other future iterations, the community’s resolve to benchmark these systems from the outside in is stronger than ever.

From privacy to cross-lingual performance, every facet of AI development is now being viewed through the lens of efficiency. For developers, businesses, and policymakers, the message is clear: the most powerful model is not always the best model. The best model is one that balances intelligence with the tangible constraints of our physical and economic reality. As the next wave of GPT applications arrives, staying informed about these underlying benchmarks will be a key competitive advantage in the AI-driven future.