The No-Code Revolution: How Deep GPT Integrations Are Reshaping Enterprise Workflows

The Dawn of a New Era: AI Integration Beyond the Chatbot

For the past year, the conversation around generative AI has been dominated by standalone applications and chatbots. A steady stream of ChatGPT news has shown businesses and individuals alike marveling at the power of large language models (LLMs) to generate text, write code, and answer complex questions. However, the true revolution isn’t happening in a separate browser tab; it’s unfolding quietly within the enterprise software we use every day. AI is shifting from a destination to a deeply embedded, contextual utility. Recent GPT integration news signals a move towards AI-ready platforms that let non-technical users build sophisticated automations. This democratization of AI is transforming workflows, raising productivity, and changing how businesses operate. Instead of requiring teams of data scientists and developers, companies can now use no-code and low-code tools to weave models like GPT-4 directly into their existing processes, from sales and marketing to customer support and internal operations. This article explores the trend, covering the technology, applications, and strategic considerations of this new wave of GPT integrations.

Section 1: The Rise of AI-Ready Platforms and Embedded Intelligence

The concept of an “AI-ready” platform represents a significant evolution in enterprise software architecture: a shift from systems of record to systems of intelligence. Platforms like CRMs, ERPs, and collaboration hubs are no longer just passive repositories for data; they are becoming active participants in the workflow, capable of understanding context, making suggestions, and automating complex tasks. This transformation is powered by the accessibility and maturity of generative AI, with recent GPT-4 news highlighting models that are more powerful, more nuanced, and better at following complex instructions than their predecessors.

What Defines an AI-Ready Platform?

An AI-ready platform is characterized by several key features that facilitate seamless AI integration for users of all technical abilities. The core components include:

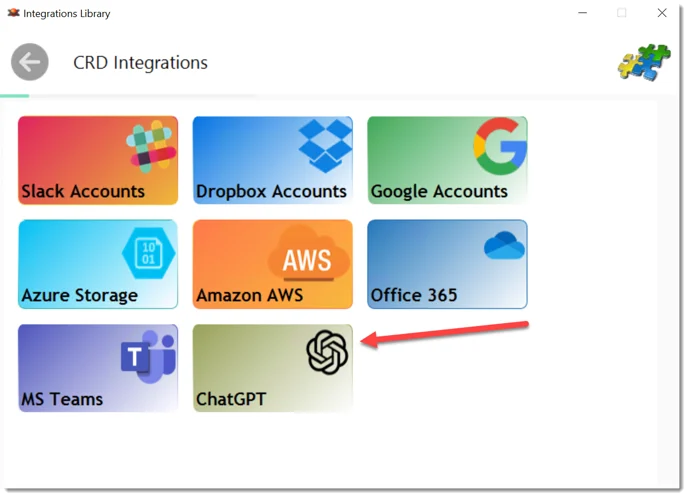

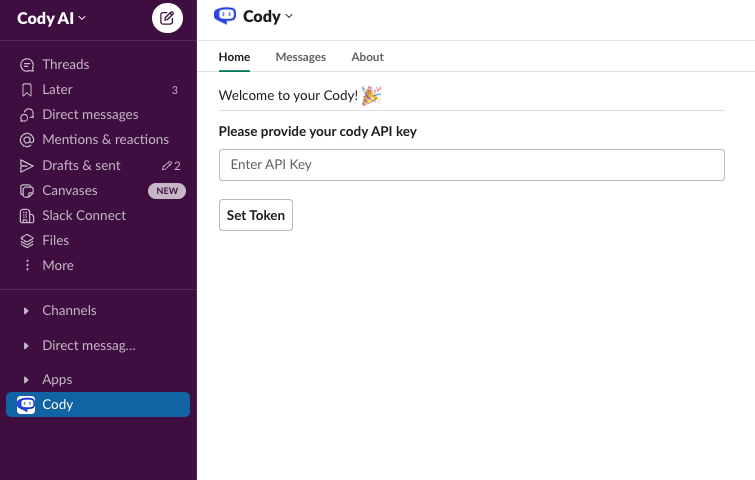

- Native Integration with LLMs: These platforms have built-in connectors or native capabilities that link directly to powerful models from OpenAI or other providers. This eliminates the need for complex, custom-built API connections, so businesses can put new model capabilities to work without extra engineering.

- No-Code/Low-Code Workflow Builders: The most critical element is a user-friendly interface, often a drag-and-drop workflow builder. This allows a business analyst, a marketing manager, or a sales lead to design an automation by defining triggers, actions, and logic without writing a single line of code.

- Prompt-Based Action Steps: Instead of configuring complex parameters, users can embed AI actions by writing simple, natural language prompts. For example, a step in a workflow could be, “Summarize the following customer feedback into three bullet points and identify the overall sentiment as positive, negative, or neutral.”

- Contextual Data Access: The platform provides the AI with secure, permission-based access to relevant business data. An AI action to draft a sales email can automatically pull the contact’s name, company, recent interactions, and relevant product information, making the output highly personalized and effective. This is a key area of development across the GPT ecosystem.
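To make the last two items concrete, here is a minimal Python sketch of how a prompt-based step might combine a user's instruction with permission-filtered record data. The `PromptStep` class, its fields, and the sample record are hypothetical illustrations, not the API of any particular platform.

```python
from dataclasses import dataclass
from string import Template


@dataclass
class PromptStep:
    """Hypothetical AI action: a user-written instruction plus the fields it may read."""
    instruction: str        # natural-language prompt written by the user
    allowed_fields: tuple   # fields the platform is permitted to expose to the model

    def render(self, record: dict) -> str:
        # Pass through only the fields this step is allowed to see.
        visible = {k: v for k, v in record.items() if k in self.allowed_fields}
        context = "\n".join(f"{k}: {v}" for k, v in sorted(visible.items()))
        return Template("$instruction\n\nContext:\n$context").substitute(
            instruction=self.instruction, context=context
        )


step = PromptStep(
    instruction="Draft a short sales email to this contact.",
    allowed_fields=("name", "company"),
)
# Made-up CRM record; the payment field is deliberately not in allowed_fields.
record = {"name": "Ada", "company": "Example Co", "payment_token": "tok_123"}
prompt = step.render(record)
print(prompt)
```

The point of the filter is that the user writes only the instruction, while the platform decides which data the model is allowed to see.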

This paradigm shift is about moving AI from a specialized tool to an ambient, ever-present assistant. The focus of GPT applications is no longer just on novel chatbots but on practical, ROI-driven enhancements to core business processes. Platforms are becoming intelligent layers that augment human capability at every step, creating a more efficient and responsive organization.

Section 2: Deconstructing the No-Code GPT Integration Workflow

While the front-end experience of a no-code AI workflow appears simple, a sophisticated technical orchestration happens behind the scenes. Understanding this process is crucial for effective implementation, troubleshooting, and appreciating the nuances of this technology. Let’s break down the components of a typical no-code GPT integration.

The Technical Underpinnings: APIs, Prompts, and Context

At its heart, every no-code integration is an abstraction layer built on top of powerful APIs. When a user designs a workflow, they are essentially creating a visual script that makes a series of calls to a generative AI model.

1. The Trigger: Every automation begins with a trigger, an event that initiates the workflow. This could be a new email arriving in a shared inbox, a customer support ticket being created, a deal stage changing in a CRM, or a new message posted in a specific channel.

2. Data Aggregation: Once triggered, the platform gathers the necessary context. This is a critical step. For a support ticket, it might pull the ticket description, customer history, and related knowledge base articles. The quality of this context directly impacts the quality of the AI’s output, which is why GPT API updates often focus on how developers can pass context efficiently.

3. The Prompt Assembly: The no-code platform then dynamically assembles a detailed prompt. This is more than just the user’s simple instruction. It combines a system-level directive (e.g., “You are a helpful customer support assistant”), the user’s specific prompt (e.g., “Draft a polite response acknowledging the issue”), and the aggregated contextual data. This complex, machine-generated prompt is what is actually sent to the LLM.

4. The API Call and Inference: The platform sends the assembled prompt to the GPT model (e.g., GPT-3.5 or GPT-4) via an API call. The model processes the input, a process known as inference, and generates a response. Latency and throughput are critical here, as responses need to be near-instantaneous to be useful in real-time workflows.

5. The Action: The platform receives the AI-generated text and performs the designated action. This could be posting the draft response in a collaboration tool for human review, updating a field in the CRM, or sending an automated email.
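The five steps above can be sketched in a few lines of Python. This is an illustrative outline, not any platform's actual implementation: the function names are hypothetical, the `role`/`content` message shape follows the convention used by chat-style LLM APIs, and `fake_model` stands in for the real API call so the example runs offline.

```python
SYSTEM_DIRECTIVE = "You are a helpful customer support assistant."


def aggregate_context(ticket: dict, history: list, articles: list) -> str:
    """Step 2: gather the data the model will need into one text block."""
    parts = [f"Ticket: {ticket['description']}"]
    parts += [f"Previous interaction: {h}" for h in history]
    parts += [f"Knowledge base: {a}" for a in articles]
    return "\n".join(parts)


def assemble_messages(user_prompt: str, context: str) -> list:
    """Step 3: combine the system directive, the user's instruction, and the context."""
    return [
        {"role": "system", "content": SYSTEM_DIRECTIVE},
        {"role": "user", "content": f"{user_prompt}\n\n{context}"},
    ]


def run_workflow(ticket, history, articles, user_prompt, call_model, act):
    """Runs steps 2-5 once a trigger (step 1, here a new ticket) has fired."""
    context = aggregate_context(ticket, history, articles)
    messages = assemble_messages(user_prompt, context)
    draft = call_model(messages)      # Step 4: inference via the provider's API
    return act(ticket["id"], draft)   # Step 5: e.g. post the draft for human review


def fake_model(messages):
    # Stand-in for a real API call (assumption: the real call returns text).
    return f"[draft based on {len(messages)} messages]"


def post_for_review(ticket_id, draft):
    return {"ticket_id": ticket_id, "status": "pending_review", "draft": draft}


result = run_workflow(
    {"id": 42, "description": "Order arrived damaged."},
    ["Asked about shipping times last month."],
    ["How to request a replacement"],
    "Draft a polite response acknowledging the issue.",
    fake_model,
    post_for_review,
)
print(result["status"])
```

Passing `call_model` and `act` in as parameters keeps the orchestration separate from the provider and the destination system, which mirrors how a no-code builder lets users swap triggers and actions without touching the prompt logic.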

Common Pitfalls and Strategic Considerations

While powerful, this process is not without its challenges. One major hurdle is prompt engineering: a poorly worded prompt can lead to generic, incorrect, or “hallucinated” responses. Best practices involve training users to be specific, provide examples, and define the desired tone and format. Data security is equally important. Sensitive customer data must be handled correctly and anonymized where necessary, and integrations must comply with regulations like GDPR and CCPA, a recurring theme in GPT privacy and regulation news. Finally, cost management is essential. Every API call has a cost based on token usage, and a poorly designed, runaway automation can quickly become expensive. Monitoring usage and setting budget alerts are crucial for sustainable GPT deployment.
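As a rough illustration of the cost point, the sketch below estimates per-call cost from token counts and applies a simple budget guard. The prices, the 80% alert threshold, and the `BudgetGuard` class are all assumptions made for this example; real pricing varies by provider and model.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; check your provider's actual pricing.
PRICE_PER_1K_INPUT = 0.01
PRICE_PER_1K_OUTPUT = 0.03


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of one API call based on token usage."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (
        output_tokens / 1000
    ) * PRICE_PER_1K_OUTPUT


@dataclass
class BudgetGuard:
    monthly_limit: float
    alert_fraction: float = 0.8   # assumed alert threshold
    spent: float = 0.0

    def record(self, input_tokens: int, output_tokens: int) -> str:
        cost = call_cost(input_tokens, output_tokens)
        if self.spent + cost > self.monthly_limit:
            return "blocked"      # refuse the call instead of overspending
        self.spent += cost
        if self.spent >= self.alert_fraction * self.monthly_limit:
            return "alert"        # allowed, but someone should be notified
        return "ok"


guard = BudgetGuard(monthly_limit=1.00)
statuses = [
    guard.record(1_000, 1_000),     # small call
    guard.record(60_000, 10_000),   # large call that crosses the alert threshold
    guard.record(10_000, 10_000),   # would exceed the limit
]
print(statuses)
```

Blocking at the limit rather than only alerting is a design choice: it stops a runaway loop automatically, at the price of occasionally pausing a legitimate workflow.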

Section 3: Real-World Applications and Transformative Business Impact

The true measure of any technology is its real-world impact. Embedded GPT integrations are already delivering tangible value across business functions, moving from theoretical possibilities to daily operational assets. Adoption is clearest in areas where communication and data synthesis are key.

Case Study 1: Supercharging Sales and Marketing

A B2B software company integrates its CRM with a generative AI workflow builder. Their goal is to accelerate the lead qualification and outreach process.

- The Workflow: When a new lead from a “Contact Us” form enters the CRM, a workflow is triggered.

  - The AI scans the lead’s provided information (company name, title, message).

  - It performs a quick web search to enrich the data with the company’s industry, size, and recent news.

  - Using this context, an AI step with the prompt, “Based on the following lead data, write a 150-word personalized outreach email. Reference their industry and our relevant case study. Adopt a helpful, consultative tone,” generates a draft email.

  - The draft is automatically saved in the CRM and a task is assigned to the sales representative to review, edit, and send.

- The Impact: This automation reduces the time spent on manual research and initial drafting from 15 minutes per lead to under 2 minutes for review. The sales team can engage more leads, faster, and with higher-quality personalization, which directly strengthens the sales pipeline. It is a prime example of GPT in marketing becoming a practical reality.
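The arithmetic behind that impact is easy to check. The short Python sketch below uses the two figures from the case study; the monthly lead volume is a hypothetical number chosen only to show the scale.

```python
MANUAL_MINUTES = 15   # research and drafting time per lead, from the case study
REVIEW_MINUTES = 2    # upper bound on review time per lead, from the case study


def minutes_saved(leads: int) -> int:
    """Minimum minutes saved across a number of leads."""
    return leads * (MANUAL_MINUTES - REVIEW_MINUTES)


reduction = (MANUAL_MINUTES - REVIEW_MINUTES) / MANUAL_MINUTES
leads_per_month = 200  # hypothetical volume, not from the case study

print(f"{reduction:.0%} less time per lead")
print(f"{minutes_saved(leads_per_month) / 60:.1f} hours saved per month")
```

Because review takes "under 2 minutes", these figures are a lower bound on the savings.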

Case Study 2: Revolutionizing Customer Support

An e-commerce company is struggling with high ticket volumes. They use an AI-ready helpdesk platform to improve efficiency and response quality.

- The Workflow: A new support ticket is created.

  - An AI action immediately reads the ticket and categorizes it (e.g., “Billing Inquiry,” “Shipping Status,” “Technical Issue”).

  - Based on the category, it routes the ticket to the correct team.

  - Another AI step summarizes the customer’s issue and previous interactions into a concise brief for the agent.

  - Finally, it searches the internal knowledge base and suggests a relevant article or a draft response, which the agent can use as a starting point.

- The Impact: First-response time drops sharply. Agents spend less time on administrative tasks like categorization and more time solving complex customer problems. The consistency and quality of responses improve, leading to higher customer satisfaction scores. This use of GPT assistants demonstrates how AI can augment, not replace, human agents.
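The categorize-and-route steps in this workflow can be sketched as follows. The queue names and the fallback queue are hypothetical; the key idea is that model output is free text, so the platform should validate it against the allowed labels before acting on it.

```python
# Category labels from the case study, mapped to hypothetical team queues.
CATEGORIES = {
    "Billing Inquiry": "billing-team",
    "Shipping Status": "logistics-team",
    "Technical Issue": "tech-support",
}
FALLBACK_QUEUE = "triage"  # hypothetical queue for replies that match no label


def classification_prompt(ticket_text: str) -> str:
    """Build a prompt that constrains the model to a fixed label set."""
    labels = ", ".join(CATEGORIES)
    return (
        f"Classify the support ticket into exactly one of: {labels}. "
        f"Reply with the label only.\n\nTicket: {ticket_text}"
    )


def route(model_reply: str) -> str:
    """Map the model's reply to a queue, tolerating case, quotes, and a trailing period."""
    cleaned = model_reply.strip().strip('".').lower()
    for label, queue in CATEGORIES.items():
        if cleaned == label.lower():
            return queue
    return FALLBACK_QUEUE


print(classification_prompt("Where is my package?"))
print(route("Shipping Status"))
print(route("Refund request"))
```

Sending unrecognized labels to a human triage queue, rather than guessing, keeps a misclassification from silently misrouting a customer.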

These examples extend to virtually every department. In finance, AI is being used to summarize earnings reports; in legal tech, to draft initial contract clauses. The core benefit is consistent: automating the synthesis of information and the generation of initial drafts, freeing up human experts for higher-level strategic work.

Section 4: Best Practices, Recommendations, and the Future Outlook

Successfully implementing embedded GPT integrations requires more than just access to the right tools. It demands a strategic approach that balances innovation with governance, and enthusiasm with pragmatism. As we look to the future, the capabilities of these systems are set to expand dramatically, making a solid foundational strategy even more critical.

Best Practices for Implementation

To maximize the benefits and mitigate the risks of no-code AI integrations, organizations should adhere to several best practices:

- Start Small, Think Big: Begin with a pilot project in a single department to address a specific, high-impact pain point. Use the learnings from this pilot to develop a broader, scalable strategy for the entire organization.

- Invest in “Prompt Literacy”: The prompt is the new user interface. Train your employees on the principles of effective prompt engineering. This includes being specific, providing context, defining the desired format, and iterating on prompts to improve results.

- Establish Clear Governance: Create a center of excellence or a dedicated team to oversee AI usage. This team should set clear guidelines on data privacy, ethics, and security. All automated workflows, especially those interacting with customers, should have a “human-in-the-loop” for review and approval, at least initially.

- Monitor and Optimize: Continuously monitor the performance, cost, and accuracy of your AI automations. Use A/B testing to refine prompts and workflows. Stay current with GPT benchmark results to check whether you are using the most efficient model for your task.
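As one way to approach the A/B testing mentioned above, the Python sketch below assigns each item to a prompt variant deterministically and tracks how often human reviewers approve the resulting draft. The variant texts, the hash-based split, and the `ReviewLog` class are illustrative assumptions, not a prescribed method.

```python
import hashlib
from collections import defaultdict

# Two hypothetical prompt variants under test.
PROMPT_VARIANTS = {
    "A": "Summarize the ticket in three bullet points.",
    "B": "Summarize the ticket in three bullet points. Use plain language "
         "and name the product involved.",
}


def assign_variant(item_id: str) -> str:
    """Stable, roughly even split: the same item always gets the same variant."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


class ReviewLog:
    """Tracks human-in-the-loop approvals per prompt variant."""

    def __init__(self):
        self.counts = defaultdict(lambda: {"approved": 0, "total": 0})

    def record(self, variant: str, approved: bool) -> None:
        self.counts[variant]["total"] += 1
        if approved:
            self.counts[variant]["approved"] += 1

    def approval_rate(self, variant: str) -> float:
        c = self.counts[variant]
        return c["approved"] / c["total"] if c["total"] else 0.0


log = ReviewLog()
log.record("A", approved=True)
log.record("A", approved=False)
log.record("B", approved=True)
print(log.approval_rate("A"), log.approval_rate("B"))
```

Using reviewer approval as the metric ties the experiment to the human-in-the-loop step recommended above; with real traffic, the sample size needs to be large enough for a difference in rates to be meaningful.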

The Road Ahead: Agents, Multimodality, and GPT-5

The current wave of integrations is just the beginning. Ongoing GPT research promises even more profound capabilities. Speculation about a potential GPT-5 points to models with vastly improved reasoning and a deeper understanding of context. Such models would enable more complex, multi-step automations that require less human guidance.

The emergence of GPT agents points to a future where we can assign a high-level goal (e.g., “Plan a marketing campaign for our new product launch”) and an autonomous agent will break down the task, execute the steps, and use various tools to achieve the objective. Multimodal GPT capabilities will be equally transformative. Imagine a workflow that can analyze an image of a damaged product from a customer, cross-reference it with inventory data, and automatically initiate a replacement order. The convergence of language, vision, and action will unlock a new frontier of automation, making GPT vision capabilities a core part of business process management.

Conclusion: From Tool to Teammate

The proliferation of no-code, AI-ready platforms marks a pivotal moment in the adoption of artificial intelligence. By embedding the power of large language models directly into the fabric of enterprise software, businesses are moving AI from a novel curiosity to an indispensable operational tool. This trend democratizes AI development, allowing the people closest to a business problem to design and implement their own automated solutions. The impact is a significant boost in productivity, efficiency, and the capacity for innovation.

However, this power must be wielded with foresight and responsibility. Success hinges on a strategic approach that prioritizes user training, robust governance, and a keen eye on ethical implications. As we look toward an even more capable future with advancements in AI agents and multimodality, the companies that build a strong foundation today will be best positioned to lead tomorrow. The direction of GPT integrations is clear: AI is no longer just a tool we use; it is becoming a teammate we collaborate with.