The Distillation Dilemma: Training AI on AI in the Generative Pre-trained Transformer Era

The Secret Sauce and Ethical Minefield of Modern AI Development

In the rapidly evolving landscape of artificial intelligence, the race to build more powerful, efficient, and specialized language models is relentless. While behemoths like GPT-4 dominate headlines with their staggering capabilities, a quieter, more controversial technique is shaping the next generation of AI: knowledge distillation. This process, where a smaller “student” model learns from the outputs of a larger “teacher” model, has become a critical tool for creating nimble, cost-effective AI solutions. However, it also sits at the epicenter of a complex debate surrounding data provenance, intellectual property, and the very terms of service that govern the use of foundational models.

This article explores GPT distillation: the technical underpinnings of the method, its real-world applications, and the significant ethical and legal challenges it presents. We will examine how distillation is democratizing AI development while simultaneously creating friction between open-source communities and the commercial giants that build the teacher models. Understanding this dynamic is crucial for anyone involved in the AI ecosystem, from developers and researchers to business leaders and policymakers navigating the future of this transformative technology.

Section 1: Understanding GPT Distillation: The Technical Foundations

At its core, knowledge distillation is a form of model compression and knowledge transfer. It is a technique designed to imbue a smaller, computationally cheaper model with the sophisticated capabilities of a much larger, more resource-intensive one. It is becoming a cornerstone of modern GPT training techniques, enabling the deployment of powerful AI on devices with limited resources.

What is Knowledge Distillation in the Context of GPTs?

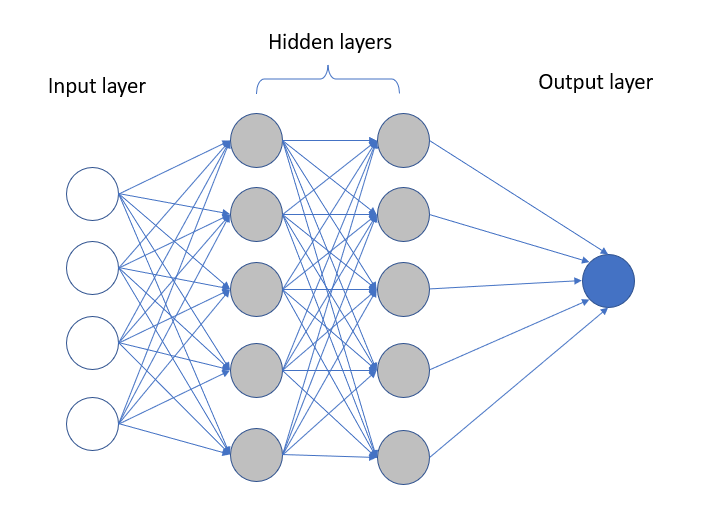

Imagine a master artisan (the “teacher” model, like GPT-4) and an apprentice (the “student” model, perhaps a smaller open-source model). The apprentice cannot replicate the master’s years of experience and intricate neural network, but it can learn by meticulously studying the master’s finished work. In AI terms, the “teacher” model processes a vast set of prompts and generates detailed, high-quality responses. This curated set of prompts and responses then becomes a synthetic, high-quality training dataset for the “student” model. The student learns the patterns, style, and reasoning abilities exhibited by the teacher, effectively inheriting a compressed version of its knowledge.
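The dataset-building step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the `teacher` argument stands in for whatever API client is actually used, and `build_synthetic_dataset`, `to_jsonl`, and `stub_teacher` are hypothetical names introduced here for illustration.

```python
import json
from typing import Callable, Iterable


def build_synthetic_dataset(
    prompts: Iterable[str],
    teacher: Callable[[str], str],
) -> list[dict[str, str]]:
    """Pair each prompt with the teacher's response."""
    records = []
    for prompt in prompts:
        response = teacher(prompt)
        # Skip empty responses so they never become training targets.
        if response.strip():
            records.append({"prompt": prompt, "response": response})
    return records


def to_jsonl(records: list[dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


def stub_teacher(prompt: str) -> str:
    # Hypothetical stand-in for a real teacher-model call.
    return f"Answer to: {prompt}"


dataset = build_synthetic_dataset(
    ["What is distillation?", "Define latency."], stub_teacher
)
print(to_jsonl(dataset))
```

Passing the teacher in as a plain callable keeps the sketch independent of any particular provider; in practice that callable would wrap a real API request, with retries and rate limiting.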

The Distillation Process: From Teacher to Student

The distillation process typically involves several key steps:

- Synthetic Data Generation: A developer uses the teacher model’s API to generate a large, diverse dataset. This involves crafting thousands of high-quality prompts across various domains, from coding and creative writing to financial analysis, and recording the teacher model’s outputs.

- Training the Student Model: The smaller student model is then trained on this synthetic dataset. Instead of learning only from the final “hard label” (the token the teacher actually emitted), advanced techniques often use “soft labels”: the probability distributions the teacher assigned to every candidate next token. Soft labels provide a much richer learning signal, teaching the student not just *what* the teacher said but *how confident* it was in the alternatives, which helps it capture more of the teacher’s nuanced behavior. Soft labels require access to the teacher’s output probabilities, which hosted APIs typically expose only partially, if at all, so API-based distillation usually falls back on hard labels.

- Evaluation and Refinement: The student model is rigorously evaluated against various benchmarks to see how well it has captured the teacher’s capabilities. Benchmarking distilled models is an active area in its own right, with new metrics regularly proposed to assess their quality. The process may be iterated to improve performance.
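The difference between hard and soft labels in the training step can be made concrete with a small sketch. It assumes access to raw teacher and student logits for a single token position and uses the conventional formulation: a KL divergence between temperature-softened distributions, scaled by the square of the temperature. The function names and example logits are illustrative assumptions, and a real training loop would compute this in a tensor library rather than pure Python.

```python
import math


def log_softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def hard_label_loss(student_logits: list[float], target_index: int) -> float:
    """Cross-entropy against the single token the teacher emitted."""
    return -log_softmax(student_logits)[target_index]


def soft_label_loss(
    teacher_logits: list[float],
    student_logits: list[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    log_p = log_softmax(teacher_logits, temperature)
    log_q = log_softmax(student_logits, temperature)
    kl = sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return kl * temperature ** 2


# Illustrative logits over a three-token vocabulary.
teacher_logits = [2.0, 1.0, 0.1]
student_logits = [1.5, 1.2, 0.3]

print("hard-label loss:", hard_label_loss(student_logits, target_index=0))
print("soft-label loss:", soft_label_loss(teacher_logits, student_logits))
```

The hard-label loss only looks at one vocabulary entry, while the soft-label loss penalizes every mismatch between the two distributions, which is the "richer signal" described in the list above.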

Why Distill? The Driving Motivations

The push for distillation is driven by powerful practical and economic incentives, which is why it features so heavily in work on model efficiency and compression.

- Reduced Inference Costs: Running a massive model like GPT-4 is expensive. A smaller, distilled model can answer queries for a fraction of the cost, making AI applications economically viable for a wider range of businesses.

- Lower Latency: Smaller models are faster. For real-time applications like chatbots or interactive coding assistants, reducing latency and improving throughput is critical for a good user experience.

- Deployment on the Edge: Distillation allows for the creation of models small enough to run on local devices like smartphones or IoT sensors, enhancing privacy and enabling offline functionality.

- Specialization: A company can distill a general-purpose model into a highly specialized expert for a specific domain, such as a legal-tech model that excels at contract analysis, without the overhead of the generalist’s broader knowledge.
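The cost argument in the list above comes down to simple arithmetic. The sketch below uses entirely hypothetical traffic figures and per-token prices, chosen only to show the shape of the calculation; they are not quotes from any provider, and real numbers should be substituted before drawing conclusions.

```python
def monthly_cost(
    requests_per_day: int,
    tokens_per_request: int,
    price_per_million_tokens: float,
    days: int = 30,
) -> float:
    """Estimated monthly inference spend in the same currency as the price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Hypothetical workload: 100,000 requests per day, 1,000 tokens each.
# Hypothetical prices: 30.0 per million tokens (teacher), 0.5 (student).
teacher_cost = monthly_cost(100_000, 1_000, price_per_million_tokens=30.0)
student_cost = monthly_cost(100_000, 1_000, price_per_million_tokens=0.5)

print(f"teacher: {teacher_cost:,.0f}")
print(f"student: {student_cost:,.0f}")
print(f"ratio:   {teacher_cost / student_cost:.0f}x")
```

Under these assumed figures the teacher costs 90,000 per month and the student 1,500, a 60x difference. The ratio depends only on the two prices, so it holds at any traffic level.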

Section 2: The Distillation Ecosystem in Practice: Applications and Case Studies

Knowledge distillation is not just a theoretical concept; it’s actively being used to build a new wave of AI applications. From open-source projects to enterprise-level custom models, this technique is democratizing access to high-performance AI.

Case Study: Creating a Specialized Coding Assistant

Consider a software development company that wants to build a custom coding assistant. While GPT-4 is an excellent general-purpose coder, the company needs a model that understands its proprietary codebase, follows its specific coding style, and can be deployed efficiently within its developers’ IDEs.

Instead of training a large model from scratch, they can use distillation.

- Data Generation: They use the GPT-4 API to generate thousands of examples. They prompt it with snippets of their codebase and ask it to explain, refactor, or document the code according to their internal standards.

- Student Model Selection: They choose a powerful yet efficient open-source model, perhaps a recent release, as their student.

- Fine-Tuning/Distillation: They fine-tune this student model on the synthetically generated dataset. The result is a specialized coding assistant that is fast, cheap to run, and closely aligned with their internal development practices.
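The data generation step of this case study could be organized roughly as follows. This is a sketch under assumptions: the three task templates mirror the explain, refactor, and document tasks named above, but their wording, the `TeacherRequest` type, and `build_requests` are hypothetical and would need to reflect the company's real standards.

```python
from dataclasses import dataclass

# Hypothetical prompt templates, one per task mentioned in the case study.
TASK_TEMPLATES = {
    "explain": "Explain what the following code does:\n\n{code}",
    "refactor": "Refactor the following code to follow our internal style guide:\n\n{code}",
    "document": "Write documentation for the following code:\n\n{code}",
}


@dataclass
class TeacherRequest:
    task: str
    prompt: str


def build_requests(snippets: list[str]) -> list[TeacherRequest]:
    """Expand every code snippet into one teacher request per task."""
    out = []
    for code in snippets:
        for task, template in TASK_TEMPLATES.items():
            out.append(TeacherRequest(task=task, prompt=template.format(code=code)))
    return out


teacher_requests = build_requests(["def add(a, b): return a + b"])
for request in teacher_requests:
    print(request.task, "->", request.prompt.splitlines()[0])
```

Each request would then be sent to the teacher, and the resulting prompt and response pairs would form the fine-tuning set for the student.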

This approach is being replicated across industries, leading to a surge of custom models and specialized applications in fields like healthcare, finance, and marketing.

The Role of APIs in Fueling the Distillation Fire

The proliferation of powerful APIs for models like GPT-3.5 and GPT-4 has been the primary catalyst for the distillation boom. These APIs provide easy access to state-of-the-art “teacher” capabilities, allowing developers and researchers to generate vast quantities of high-quality synthetic data that would be impractical to create manually. This has driven rapid growth in the surrounding ecosystem, with many new tools and platforms emerging to streamline the distillation workflow, from data generation to model evaluation.

Section 3: The Double-Edged Sword: Performance, Perils, and Prohibitions

Despite its immense benefits, GPT distillation is fraught with technical challenges and significant ethical and legal hurdles. Navigating this landscape requires a deep understanding of the trade-offs involved.

The Performance Question: Is Distilled Knowledge Second-Hand?

A key research question is whether a distilled model truly inherits the “reasoning” of its teacher or merely mimics its stylistic patterns. While many distilled models perform exceptionally well on standard benchmarks, they can sometimes fail on out-of-distribution or adversarial prompts where deeper reasoning is required. The student learns the “what” but may not fully grasp the “why.”

Furthermore, there is growing concern about “model collapse,” a degenerative process in which models trained exclusively on the output of other models gradually lose fidelity and diversity. Over successive generations of distillation, information quality can degrade, leading to a bland, homogeneous, and less capable AI ecosystem.
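The loss of diversity can be illustrated with a deliberately simplified toy model. It assumes that each generation slightly over-represents outputs its predecessor already considered likely, modeled here by raising probabilities to a power greater than one and renormalizing. This is an illustrative assumption, not the mechanism documented in any particular study, and the starting distribution and `sharpening` value are arbitrary.

```python
import math


def entropy(p: list[float]) -> float:
    """Shannon entropy in bits; higher means more diverse outputs."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def next_generation(p: list[float], sharpening: float = 1.5) -> list[float]:
    """Toy student: favors already-likely outputs, then renormalizes."""
    powered = [x ** sharpening for x in p]
    total = sum(powered)
    return [x / total for x in powered]


# Arbitrary starting distribution over four possible outputs.
distribution = [0.4, 0.3, 0.2, 0.1]
history = [entropy(distribution)]

for _ in range(5):
    distribution = next_generation(distribution)
    history.append(entropy(distribution))

for generation, bits in enumerate(history):
    print(f"generation {generation}: {bits:.3f} bits")
```

In this toy setting the entropy falls with every generation as probability mass concentrates on the most likely output, which is the homogenizing effect described above in miniature.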

The Ethical and Legal Minefield: Terms of Service and Data Provenance

This is where the practice of distillation becomes most contentious. The terms of service for most major AI API providers, including OpenAI, explicitly prohibit using their models’ outputs to develop models that compete with them. This creates a fundamental conflict. Many smaller companies and open-source projects that use distillation to build competing models are, by the letter of those agreements, in breach of the terms they accepted.

This raises profound questions central to AI ethics and regulation:

- Intellectual Property: Who owns the output of an AI model? Is it a derivative work of the model’s training data, or is it a new creation owned by the user who wrote the prompt?

- Data Provenance: As models are trained on the output of other models, the lineage of data becomes blurred. This makes it incredibly difficult to audit for bias, safety, or privacy issues, a critical concern for anyone working on bias and fairness.

- Competitive Landscape: Incumbent players argue that allowing unrestricted distillation devalues their massive investment in creating foundational models. Conversely, the open-source community argues that such restrictions stifle innovation and cement the dominance of a few large corporations.

This ongoing tension is a defining narrative in the competition between AI providers and will likely be a focal point for future AI regulation.

Section 4: Best Practices, Recommendations, and the Future Outlook

For those looking to leverage distillation responsibly and effectively, it’s crucial to adopt best practices and stay informed about the evolving technical and ethical landscape.

Best Practices for Responsible Distillation

When used for non-competitive, internal applications where it is permitted, distillation can be a powerful tool. To maximize its effectiveness:

- Focus on High-Quality Prompts: The quality of the synthetic data is paramount. “Garbage in, garbage out” applies at both stages: poor prompts produce poor teacher outputs, and the student then inherits those flaws.

- Ensure Data Diversity: Generate a wide variety of examples covering numerous topics, styles, and edge cases to prevent the student model from becoming too narrow.

- Combine with Real Data: Whenever possible, augment the synthetic dataset with high-quality, human-curated data. This can help ground the student model and mitigate the risk of model collapse.

- Rigorous Evaluation: Don’t just rely on standard benchmarks. Test the distilled model extensively on real-world tasks specific to your application to uncover its weaknesses.
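The advice to combine synthetic and real data can be sketched as a simple mixing step. The example assumes each record is a dictionary with a `source` field, keeps every synthetic example, and adds enough human-curated examples to approach a target fraction; `mix_datasets` and the 30 percent target are hypothetical choices for illustration, not recommended values.

```python
import random


def mix_datasets(
    synthetic: list[dict],
    human: list[dict],
    human_fraction: float,
    seed: int = 0,
) -> list[dict]:
    """Keep all synthetic records and add human records up to a target share."""
    if not 0.0 <= human_fraction < 1.0:
        raise ValueError("human_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve human / (human + synthetic) = f for the number of human records.
    n_human = round(human_fraction * len(synthetic) / (1.0 - human_fraction))
    # Never request more human records than are available.
    n_human = min(n_human, len(human))
    mixed = list(synthetic) + rng.sample(list(human), n_human)
    rng.shuffle(mixed)
    return mixed


synthetic = [{"source": "synthetic", "id": i} for i in range(70)]
human = [{"source": "human", "id": i} for i in range(50)]

mixed = mix_datasets(synthetic, human, human_fraction=0.3)
human_count = sum(1 for r in mixed if r["source"] == "human")
print(len(mixed), "records,", human_count, "human-curated")
```

If fewer human examples are available than the target requires, the function uses all of them and the achieved fraction falls short of the target, which is worth logging in a real pipeline.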

The Future of Distillation and the Road to GPT-5

The field of knowledge distillation is continuously evolving. New architectural techniques are making student models more adept at learning from teachers, and the future may bring “distillation-aware” models designed specifically to transfer knowledge efficiently.

The anticipated arrival of next-generation models such as GPT-5, a constant source of speculation, will raise the bar for teacher models, making the potential gains from distillation even greater. As models become multimodal, we will see distillation techniques applied not just to text but to images, audio, and video, driving innovation in vision and multimodal systems. The central challenge will be balancing the immense potential of these techniques with the need for a healthy, ethical, and legally sound AI ecosystem.

Conclusion: Navigating the Distillation Dilemma

Knowledge distillation stands as one of the most impactful and controversial techniques in the modern AI toolkit. It is a powerful engine for innovation, enabling the creation of efficient, specialized, and accessible AI models that are driving new applications across every industry. It fuels the vibrant open-source community and allows smaller players to build competitive products.

However, its practice is mired in a complex web of technical challenges, such as model collapse, and profound ethical and legal questions surrounding terms of service and data ownership. The resolution of this “distillation dilemma” will have lasting consequences for the future of AI. It will shape the competitive landscape, influence regulation, and ultimately determine whether the incredible power of generative models becomes a shared resource for innovation or a closely guarded asset of a select few. As the technology continues its relentless advance, navigating this issue with transparency and foresight will be one of the most critical tasks for the entire AI community.