The AI Arms Race: Deconstructing OpenAI’s Global Infrastructure Expansion and the Dawn of GPT-5

The Unprecedented Scale of AI: Charting the Course for Tomorrow’s GPT Models

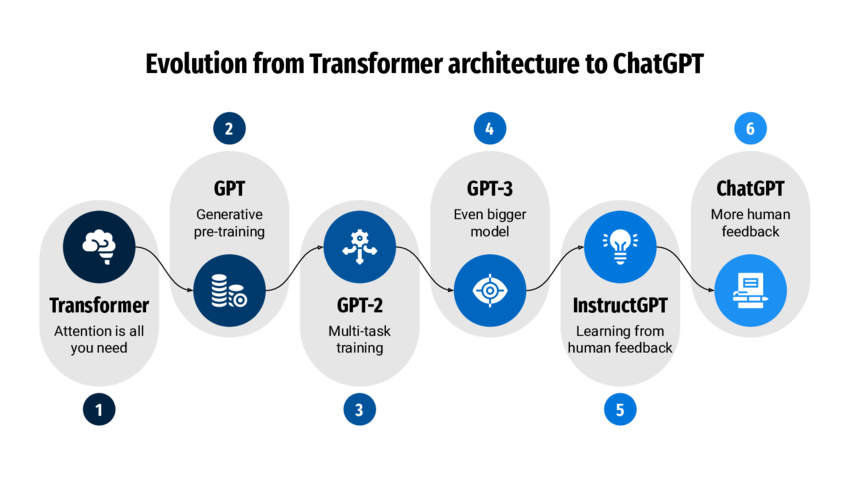

The world of artificial intelligence is no longer defined by algorithms alone; it is now a contest of colossal infrastructure, global strategy, and unprecedented capital investment. Recent developments signal a shift from theoretical advances to the physical construction of “AI factories” on a planetary scale. OpenAI, working with partners such as Microsoft, Oracle, and Google, is laying the groundwork for the next generation of generative models, one intended to far exceed the capabilities of GPT-4. This expansion is not merely an upgrade; it is a fundamental rethinking of the computational power required to push the boundaries of AI. This article examines the latest developments around GPT models: the massive infrastructure build-out, its technical underpinnings, and the implications for businesses, developers, and society at large as the GPT-5 era approaches.

At the heart of this transformation is the insatiable demand for computational power. Training state-of-the-art models requires supercomputers built from tens of thousands of accelerators, and serving those models to millions of users globally demands a resilient, low-latency inference network. We will dissect the strategic multi-cloud partnerships, the cutting-edge hardware powering these new data centers, and the global expansion aimed at broadening access and fostering localized AI ecosystems. Together, these offer insight into OpenAI’s recent moves and what they signal for the future of AI development and deployment.

Section 1: The Global Blueprint for AI Supremacy

The narrative of AI’s future is being written in concrete and silicon across the globe. OpenAI’s strategy reveals a multi-pronged approach focused on securing overwhelming computational superiority, diversifying its infrastructure backbone, and establishing a significant international footprint. This isn’t just about incremental upgrades; it’s about building a global machine to train and run models orders of magnitude more complex than what we see today, shaping how GPT-4 evolves and setting the stage for its successors.

The “Stargate” Initiative and the Multi-Cloud Imperative

The most ambitious piece of this puzzle is the “Stargate” supercomputer project. It was first reported as a joint effort with Microsoft valued in the billions, and was later announced as a joint venture whose partners include SoftBank and Oracle. The initiative aims to create dedicated AI data centers with power requirements measured in gigawatts, a scale typically associated with large metropolitan areas. The first phase of this project, reportedly taking shape in locations like Abilene, Texas, is already seeing the deployment of next-generation hardware. This move underscores a critical trend in how GPT models scale: the empirical relationship between computational power and model capability, often referred to as “scaling laws.” So far, the more data and compute a model is trained with, the more capable it has become, although each additional unit of compute buys a smaller improvement than the last.
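To make the scaling-law idea concrete, here is a minimal sketch. It assumes the parametric loss form L(N, D) = E + A/N^alpha + B/D^beta from the published Chinchilla study (Hoffmann et al., 2022), with constants approximately equal to that paper's reported fit, plus the common rule of thumb that training costs about 6 * N * D floating-point operations. None of these numbers describe OpenAI's models; they only illustrate the shape of the curve.

```python
def predicted_loss(n_params: float, n_tokens: float,
                   e: float = 1.69, a: float = 406.4, b: float = 410.7,
                   alpha: float = 0.34, beta: float = 0.28) -> float:
    """Chinchilla-style loss estimate. Constants are assumptions taken
    (approximately) from one published fit, not from any GPT model."""
    return e + a / n_params ** alpha + b / n_tokens ** beta


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    # Hypothetical model sizes, each trained on 20 tokens per parameter.
    for n in (1e9, 10e9, 100e9):
        d = 20 * n
        print(f"N={n:.0e}  D={d:.0e}  "
              f"FLOPs={training_flops(n, d):.2e}  loss={predicted_loss(n, d):.3f}")
```

Each tenfold increase in parameters and tokens costs a hundred times more compute in this model, while the predicted loss approaches the irreducible term `e` ever more slowly. That is the diminishing-returns pattern that makes gigawatt-scale facilities attractive to anyone chasing the next increment of capability.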

Simultaneously, OpenAI is aggressively pursuing a multi-cloud strategy to mitigate risks and leverage unique advantages from different providers. While the partnership with Microsoft Azure remains foundational—powering the training of models like GPT-4 in its Iowa facilities—OpenAI has expanded its supplier list to include Oracle and, more recently, Google Cloud. This diversification is crucial for several reasons:

- Resilience and Redundancy: Relying on a single provider creates a single point of failure. A multi-cloud approach ensures that services like ChatGPT and the GPT APIs remain available even if one provider experiences an outage.

- Access to Specialized Hardware: Different cloud providers may offer early access to specialized AI accelerators or have unique network architectures, giving OpenAI a competitive edge in both training and inference.

- Geographic Reach and Performance: Leveraging data centers from multiple providers allows OpenAI to place inference workloads closer to end-users, reducing latency and improving the user experience.
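The resilience and latency points above can be sketched in a few lines. This is an illustrative client-side router, not a description of how OpenAI routes traffic: the `Endpoint` type, the provider names, and the `send` callback are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Endpoint:
    name: str          # hypothetical provider/region label
    latency_ms: float  # most recent measured round-trip latency
    healthy: bool      # result of the most recent health check


def pick_endpoint(endpoints: Sequence[Endpoint]) -> Endpoint:
    """Return the healthy endpoint with the lowest measured latency."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        raise RuntimeError("no healthy endpoints available")
    return min(candidates, key=lambda e: e.latency_ms)


def call_with_failover(endpoints: Sequence[Endpoint],
                       send: Callable[[Endpoint], str]) -> str:
    """Try healthy endpoints from fastest to slowest until one succeeds."""
    errors = []
    ordered = sorted((e for e in endpoints if e.healthy),
                     key=lambda e: e.latency_ms)
    for endpoint in ordered:
        try:
            return send(endpoint)
        except ConnectionError as exc:
            errors.append(f"{endpoint.name}: {exc}")
    raise RuntimeError("all endpoints failed: " + "; ".join(errors))
```

The design choice worth noting is that health and latency are tracked per endpoint rather than per provider, so an outage at one provider simply removes its endpoints from the candidate list while traffic continues elsewhere.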

Global Expansion: Beyond Silicon Valley

The strategy extends far beyond U.S. borders. The establishment of international hubs, such as the “Stargate UAE” initiative and the opening of an office in New Delhi, India, signals a deliberate push for global integration. This expansion is not just about sales and marketing; it’s about building a worldwide GPT ecosystem. Plans for a major data center in India, with a potential power capacity exceeding 1 gigawatt, highlight the ambition to build localized infrastructure. This move addresses data sovereignty concerns, improves performance for a massive user base, and allows for the development of models better attuned to local languages, cultures, and contexts, which is vital for multilingual support and more inclusive AI.

Section 2: Under the Hood: The Technology Fueling the Next AI Wave

The immense scale of these new data centers is matched only by the sophistication of the technology within them. The leap from GPT-4 to what comes next requires a complete overhaul of the hardware and software stack, from the individual chips to the networking fabric that connects them. This section explores the technical details behind the latest GPT architectures and training methods.

The NVIDIA GB200 and the Future of AI Compute

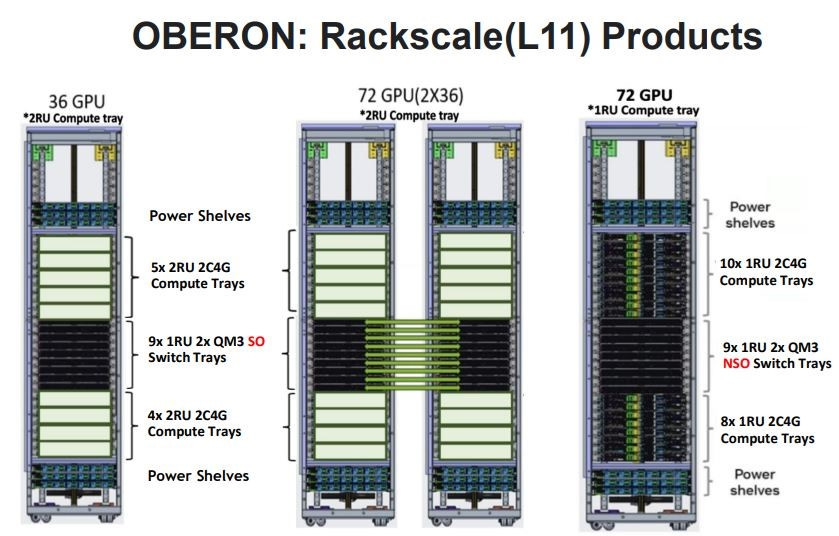

A central component of this new infrastructure is NVIDIA’s next-generation Grace Blackwell GB200 Superchip. Reports suggest that facilities like the one in Abilene could house tens of thousands of these powerful processors. The GB200 is not just an incremental improvement; it represents a paradigm shift in AI hardware. It combines two B200 GPUs with a Grace CPU, connected via an ultra-fast NVLink interconnect. This tightly integrated design is crucial for several reasons:

- Massive Memory Bandwidth: Training large language models is often bottlenecked by how quickly data can be moved to and from the GPU’s memory. The GB200’s architecture dramatically increases this bandwidth, allowing for larger models and faster training cycles.

- Enhanced Scalability: The NVLink fabric connects many GB200 superchips (72 Blackwell GPUs in NVIDIA’s rack-scale NVL72 configuration) into a single computational unit that behaves almost like one giant GPU, and these units are in turn linked by high-speed networking into clusters of thousands of chips. This is essential for the distributed training techniques required by future models.

- Improved Inference Efficiency: While built for training, the GB200 also promises significant gains in inference performance, which is critical for cost-effectively deploying powerful GPT-based agents and multimodal systems at scale.
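A back-of-the-envelope calculation shows why memory bandwidth matters so much. The sketch below assumes single-stream autoregressive decoding, where every generated token requires reading all model weights from memory once, and it ignores the KV cache, batching, and compute time. The model size and bandwidth figures in the demo are hypothetical placeholders, not GB200 specifications.

```python
def max_decode_tokens_per_second(n_params: float,
                                 bytes_per_param: float,
                                 bandwidth_bytes_per_s: float) -> float:
    """Upper bound on tokens/s when decoding is limited purely by how fast
    the weights can be streamed from accelerator memory."""
    model_bytes = n_params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    n_params = 70e9  # hypothetical 70B-parameter model
    for label, bytes_per_param in (("16-bit", 2.0), ("8-bit", 1.0)):
        for bandwidth in (2e12, 8e12):  # hypothetical 2 TB/s and 8 TB/s
            rate = max_decode_tokens_per_second(n_params, bytes_per_param, bandwidth)
            print(f"{label} weights, {bandwidth / 1e12:.0f} TB/s: "
                  f"at most {rate:.1f} tokens/s per stream")
```

Under this simplified model the bound scales linearly with bandwidth and inversely with weight size, which is why faster memory and lower-precision weights both translate directly into faster generation.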

This hardware upgrade will directly enable new model architectures. With more memory and compute, researchers can explore larger context windows, more complex mixture-of-experts (MoE) models, and natively multimodal models that process text, images, audio, and video as a single data stream.
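For readers unfamiliar with mixture-of-experts, the core mechanism is a router that sends each token to only a few expert sub-networks. The following is a minimal sketch of top-k routing in plain Python, assuming the router scores are already computed; real systems compute them from the token with a learned layer, and nothing here reflects the internals of any GPT model.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [v / total for v in exps]


def moe_forward(x: Vector,
                router_logits: Sequence[float],
                experts: Sequence[Callable[[Vector], Vector]],
                k: int = 2) -> Tuple[Vector, List[int]]:
    """Run only the k highest-scoring experts and mix their outputs,
    weighted by a softmax over the selected experts' router scores."""
    top = sorted(range(len(experts)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * v for o, v in zip(out, y)]
    return out, top
```

Because only `k` experts run per token, total parameter count can grow with the number of experts while per-token compute stays roughly constant. The trade-off is that all expert weights must still be held in memory, which is one reason larger memory capacity matters for this architecture.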

The Software and Networking Stack

Hardware alone is not enough. The efficiency of these AI supercomputers depends on a highly optimized software stack and a lightning-fast network. This involves advancements in several areas:

- Inference Engines: Custom inference engines are being developed to maximize the throughput of models on new hardware. Techniques like quantization (reducing the numerical precision of model weights) and distillation (training smaller, specialized models to imitate a larger one) will be key to making these powerful models accessible and affordable.

- Networking Fabric: The communication between thousands of GPUs is often the biggest bottleneck. High-speed networking solutions like NVIDIA’s Quantum InfiniBand are essential to ensure that the GPUs are constantly fed with data and not sitting idle, waiting for information from other nodes.

- Data Management: Training datasets are growing just as quickly. Managing and pre-processing petabytes of text, image, and video data requires a sophisticated data pipeline that can feed the training clusters without interruption.
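Of the techniques in the list above, quantization is the easiest to illustrate. Here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is one simple scheme chosen for clarity; production inference engines typically use per-channel or per-group scales and lower-precision formats, and the scheme used by any particular GPT deployment is not public.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(codes, scale, worst)
```

Storing one byte per weight instead of two or four cuts both memory footprint and the bandwidth needed per generated token, at the cost of a rounding error of at most half the scale per weight.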

Section 3: Real-World Implications: What This Means for Industries and Developers

This colossal investment in infrastructure is not an academic exercise. It is the foundation for a new generation of AI applications that will redefine industries and create entirely new markets. The capabilities unlocked by this computational power will translate directly into more powerful, versatile, and accessible AI tools, impacting everything from healthcare to content creation.

Case Study: The Future of AI in Healthcare

Consider the impact on healthcare. Current models can assist with summarizing patient notes or drafting communications. However, a future model trained on this new infrastructure could act as a true diagnostic and research assistant.

- Multimodal Diagnostics: A GPT-5-level model could analyze a patient’s entire record—including doctor’s notes (text), MRI scans (images), EKG readings (time-series data), and genetic sequences—to identify patterns and potential diagnoses that a human might miss.

- Drug Discovery: The computational power could be used to simulate protein folding and molecular interactions at an unprecedented scale, dramatically accelerating the drug discovery process.

- Personalized Medicine: By analyzing vast datasets, these models could help create truly personalized treatment plans based on an individual’s unique genetic makeup and lifestyle.

Transforming Development and Business Operations

For developers and businesses, this expansion signals several key trends:

- Hyper-Capable GPT APIs: The next generation of GPT APIs will be far more powerful. Expect models with much stronger code generation, more sophisticated reasoning for complex financial analysis, and the ability to autonomously execute multi-step tasks as true agents.

- Rise of Custom AI: While foundation models will become more powerful, the infrastructure will also support more efficient fine-tuning and the creation of custom models. Businesses will be able to train highly specialized models on their proprietary data for applications in legal tech, marketing, and more.

- New Creative Frontiers: In fields like gaming and content creation, this power will enable real-time generation of dynamic narratives, photorealistic assets, and interactive characters, reshaping how games and media are made.

Section 4: Navigating the Future: Recommendations and Ethical Considerations

This rapid, large-scale expansion is not without significant challenges and risks. The immense power consumption raises environmental concerns, while the concentration of such powerful technology in the hands of a few companies brings up questions of governance, bias, and safety. Navigating this new era requires a proactive and thoughtful approach.

Challenges and Risks

- Environmental Impact: Gigawatt-scale data centers have a massive energy and water footprint. The industry must prioritize sustainable energy sources and efficient cooling technologies to mitigate environmental harm.

- Ethical and Safety Concerns: As models become more powerful and autonomous, the importance of ethics and safety work grows with them. Robust guardrails, alignment research, and transparent governance are no longer optional but essential to prevent misuse.

- Geopolitical Tensions: The global distribution of AI infrastructure introduces geopolitical complexity. Data sovereignty, international regulation, and competition between nations will shape the future of AI deployment.

- Economic Disruption: The capabilities unlocked by these models will automate many tasks, leading to significant economic and labor market shifts that societies must prepare for.

Best Practices and Recommendations for Businesses

- Prepare for a Paradigm Shift: Do not view the next generation of GPT models as an incremental update. Begin planning now for how truly autonomous agents and hyper-intelligent systems could fundamentally reshape your business processes and products.

- Invest in Data Infrastructure: The value of these models is unlocked by high-quality, proprietary data. Businesses should focus on building clean, well-structured datasets to leverage future fine-tuning and custom model capabilities.

- Adopt a Multi-Platform Mindset: Just as OpenAI is diversifying its cloud providers, businesses should avoid vendor lock-in. Keep track of competing models and platforms, and be prepared to use the best one for each task.

- Prioritize Ethical Implementation: Establish a clear AI ethics framework within your organization. Address bias, fairness, and privacy proactively to build trust with customers and avoid regulatory pitfalls.
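As one concrete reading of the data infrastructure recommendation above, the sketch below checks a fine-tuning dataset before it is used. It assumes a chat-style JSONL layout, where each line is a JSON object with a `messages` list of `role`/`content` pairs ending in an assistant reply. That layout is an assumption for illustration, so check your provider's documentation for the exact schema it expects.

```python
import json
from typing import List

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl(text: str) -> List[str]:
    """Return a list of human-readable problems; an empty list means clean."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {lineno}: missing non-empty 'messages' list")
            continue
        for message in messages:
            ok = (isinstance(message, dict)
                  and message.get("role") in ALLOWED_ROLES
                  and isinstance(message.get("content"), str)
                  and message["content"].strip() != "")
            if not ok:
                problems.append(f"line {lineno}: malformed message {message!r}")
                break
        else:
            # Only reached when every message passed the checks above.
            if messages[-1]["role"] != "assistant":
                problems.append(f"line {lineno}: last message is not from the assistant")
    return problems
```

Running a check like this in a data pipeline catches malformed records early, before they cost training time or silently degrade a custom model.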

Conclusion: Building the Engine of Intelligence

The trend is clear: the future of artificial intelligence is being forged in massive, globe-spanning supercomputers. OpenAI’s strategic expansion, from the Stargate project in the U.S. to new hubs in the UAE and India, is a testament to the new reality where computational power is the primary driver of progress. The move to a multi-cloud strategy and the adoption of next-generation hardware like the NVIDIA GB200 are not just technical upgrades; they are the necessary steps to unlock models with capabilities that will redefine what we consider possible.

For businesses, developers, and researchers, this signals a period of unprecedented opportunity and challenge. The tools we will have at our disposal in the near future will enable solutions to problems once thought intractable. However, this power comes with immense responsibility. Staying informed, preparing strategically, and prioritizing ethical considerations will be the keys to successfully navigating the transformative era of AI that is dawning right before our eyes.