Beyond Brute Force: The New Era of GPT Efficiency and Performance Optimization

The artificial intelligence landscape has long been dominated by a singular pursuit: scale. For years, the prevailing wisdom was that bigger was unequivocally better. We witnessed a meteoric rise in parameter counts, with models like GPT-3 and GPT-4 pushing the boundaries of what was computationally possible. While this “era of scale” delivered breathtaking capabilities in language understanding and generation, it also created significant challenges related to cost, latency, and accessibility. Today, the narrative is shifting. The latest GPT Models News signals a pivotal move towards a new frontier: efficiency. This evolution isn’t about sacrificing power; it’s about making that power smarter, faster, more accessible, and sustainable. This comprehensive article delves into the critical trend of GPT efficiency, exploring the new multi-model strategies, the underlying technologies making it possible, and the profound impact this shift is having across industries.

The New Paradigm: A Multi-Model Strategy for a Diverse World

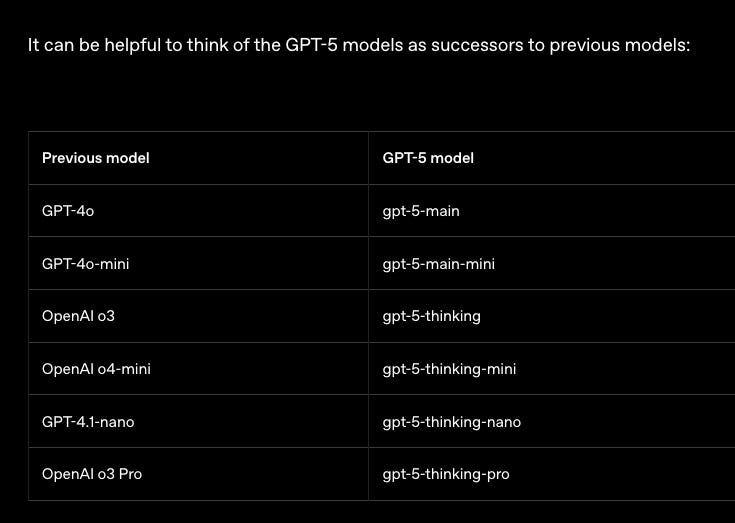

The one-size-fits-all approach to large language models (LLMs) is becoming a relic of the past. Leading AI labs are now championing a more nuanced, tiered strategy, often releasing a “family” of models instead of a single monolithic one. This approach acknowledges that not every task requires the full force of a 1-trillion-parameter behemoth. The latest OpenAI GPT News and developments from competitors highlight this trend, which is fundamentally about providing the right tool for the right job.

Understanding the Performance vs. Efficiency Trade-off

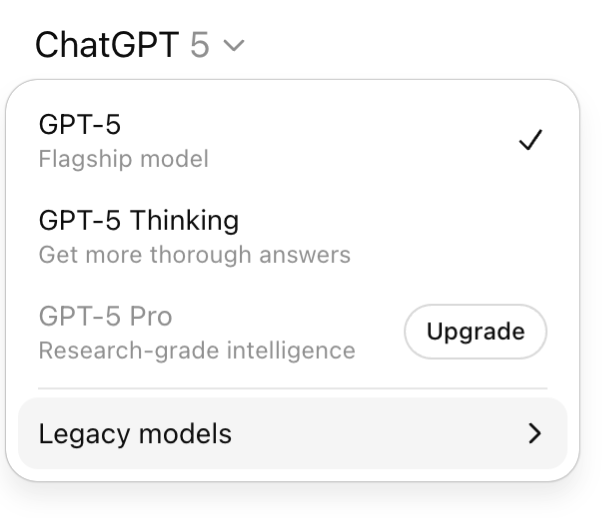

A multi-model ecosystem is typically structured around a core trade-off curve involving three key variables: capability, speed (latency), and cost (compute resources). A hypothetical next-generation “GPT-5” family might look something like this:

- A “Max” or “Ultra” Model: This is the flagship model, boasting the highest parameter count and the most advanced reasoning capabilities. It excels at complex, multi-step tasks like detailed scientific analysis, novel writing, or sophisticated code generation. However, it comes with the highest inference cost and the longest latency, making it suitable for high-value, non-real-time applications. This is the model that pushes the boundaries in GPT Research News and sets new records on the GPT Benchmark News circuit.

- A “Pro” or “Balanced” Model: This model represents the sweet spot for most mainstream applications. It offers a significant portion of the flagship’s power but at a fraction of the cost and with much lower latency. This is the workhorse for enterprise-grade GPT Chatbots News, advanced content creation tools, and complex GPT Agents News that need to balance performance with responsiveness.

- A “Lite” or “Fast” Model: Optimized for speed and cost-effectiveness, this model is designed for high-throughput and real-time applications. While its reasoning abilities are more constrained, it’s perfect for tasks like sentiment analysis, data classification, simple Q&A, and powering on-device GPT Assistants News. The development of such models is a major focus of GPT Edge News, as they can run on consumer hardware like smartphones and IoT devices.

This strategic diversification is a direct response to market demands. A startup building a real-time translation app has vastly different needs from a pharmaceutical company using AI for drug discovery. By offering a spectrum of options, AI providers are democratizing access and enabling a much wider range of GPT Applications News.

Under the Hood: Key Techniques Driving GPT Efficiency

The ability to create these smaller, faster, and cheaper models is not magic; it’s the result of years of research and engineering breakthroughs in model optimization. The latest GPT Efficiency News is filled with advancements in several key areas that work in concert to shrink and accelerate these powerful systems without catastrophically degrading their performance.

Model Compression and Optimization

At the heart of efficiency lies model compression. These techniques aim to reduce the model’s size (memory footprint) and computational requirements (FLOPs). The most prominent methods include:

- Quantization: This is one of the most impactful techniques, covered extensively in GPT Quantization News. It involves reducing the numerical precision of the model’s weights. Instead of storing weights as 32-bit or 16-bit floating-point numbers (FP32/FP16), quantization converts them to lower-precision formats like 8-bit integers (INT8) or even 4-bit integers. This dramatically reduces the model’s size and can significantly speed up calculations on modern hardware, which often has specialized units for integer math. The challenge is to perform this conversion without losing too much accuracy.

- Pruning: This technique is analogous to pruning a tree. It involves identifying and removing redundant or unimportant connections (weights) within the neural network. By setting these weights to zero, we can create a “sparse” model that requires less storage and, with the right hardware and software, fewer computations during inference.

- Knowledge Distillation: A fascinating approach detailed in GPT Distillation News, this involves using a large, powerful “teacher” model (like a GPT-4 class model) to train a much smaller “student” model. The student model learns to mimic the output distribution of the teacher, effectively inheriting its “knowledge” in a more compact form. This allows for the creation of highly specialized, efficient models for specific tasks.

Innovations in Inference and Architecture

Beyond compressing the model itself, there are significant gains to be had in how we run it. The latest GPT Inference News focuses on optimizing the process of generating output.

- Optimized Inference Engines: Software like NVIDIA’s TensorRT-LLM or open-source projects like vLLM are designed to maximize GPT Latency & Throughput News. They use techniques like kernel fusion (combining multiple operations into one), paged attention, and intelligent batching to squeeze every last drop of performance from the underlying GPT Hardware News.

- Architectural Shifts: The underlying GPT Architecture News is also evolving. The Mixture-of-Experts (MoE) architecture, for example, is a key innovation. In an MoE model, the network is divided into smaller “expert” sub-networks. For any given input token, a routing mechanism activates only a few relevant experts. This means that while the model may be massive in total parameter count, the actual computation performed for each token is much smaller, leading to faster and more efficient inference.

- Speculative Decoding: This is a clever technique where a much smaller, faster “draft” model proposes a sequence of several tokens at once. The larger, more powerful model then checks this draft in a single pass, accepting or rejecting it. Since accepting is much faster than generating token-by-token, this can lead to a 2-3x speedup in inference time.

–

Real-World Impact: Where Efficiency Meets Application

The push for efficiency is not merely an academic exercise; it is unlocking a new wave of practical, real-world AI applications that were previously infeasible due to cost or latency constraints. This is where the theoretical advancements in GPT Training Techniques News translate into tangible business value.

Transforming the Enterprise with Accessible AI

For businesses, the high cost of flagship model APIs has often been a barrier to large-scale deployment. More efficient models are changing this calculus. A company can now deploy a cost-effective model for its internal customer support chatbot, handling thousands of queries per day without breaking the bank. This is a major driver in GPT APIs News and the growth of the surrounding GPT Ecosystem News.

Case Study: AI in Financial Services

Consider a financial firm that needs to analyze thousands of news articles and earnings reports in real-time to inform trading decisions. Using a top-tier model for every document would be prohibitively expensive. Instead, they can use a fast, efficient “Lite” model to perform an initial triage—classifying documents, extracting key entities, and assessing sentiment. Only the most critical or ambiguous documents are then escalated to a more powerful “Pro” model for in-depth analysis. This tiered approach, a hot topic in GPT in Finance News, maximizes insight while carefully managing costs.

Powering the Edge and Enhancing Privacy

Perhaps the most exciting implication of efficiency is the ability to run powerful AI directly on user devices. When a model can run on a smartphone, it doesn’t need to send user data to the cloud for processing. This has massive implications for privacy and user experience, as covered in GPT Privacy News.

Real-World Scenario: On-Device Personal Assistants

Imagine a personal assistant on your phone that can summarize your emails, draft replies, and organize your calendar without any of that personal data ever leaving your device. This is made possible by highly compressed and quantized models. This trend is also revolutionizing GPT Applications in IoT News, enabling smart devices in homes and factories to perform complex tasks locally, with lower latency and greater reliability than cloud-dependent solutions.

Democratizing Innovation and Customization

Efficient models are a boon for the entire AI community. Smaller models are easier and cheaper to fine-tune, a key area of focus in GPT Fine-Tuning News. This allows startups, researchers, and even individual developers to create GPT Custom Models News tailored to specific domains, from legal document analysis (GPT in Legal Tech News) to creative writing assistance (GPT in Creativity News). The rise of powerful, efficient open-source models, a major theme in GPT Open Source News, further accelerates this trend, fostering a competitive and innovative landscape beyond the major AI labs.

Navigating the Efficient Frontier: Best Practices and Future Outlook

As the ecosystem of models diversifies, developers and businesses must become more sophisticated in their selection and deployment strategies. Making the right choice is crucial for building successful, scalable, and cost-effective AI products.

Tips for Choosing the Right Model

- Benchmark for Your Specific Task: General benchmarks are a good starting point, but always test models on your actual use case. A model that excels at creative writing may not be the best for structured data extraction. Define your key metrics—is it accuracy, latency, or cost per inference?

- Start Small and Scale Up: A common pitfall is defaulting to the largest, most powerful model available. Begin with a smaller, more efficient model. If it meets your performance requirements, you’ve just saved significant operational costs. Only scale up to a larger model if the task complexity truly demands it.

- Consider the Total Cost of Ownership (TCO): The API price is just one part of the equation. Factor in the development time, the cost of fine-tuning, and the infrastructure required for deployment. Sometimes, a slightly more expensive but easier-to-integrate model can have a lower TCO.

- Stay Informed: The field is moving incredibly fast. Keep up with the latest GPT Trends News and GPT Competitors News. A new model released next month might offer a 50% cost reduction for the same performance level you have today.

The Future is Smart, Not Just Big

Looking ahead, the focus on efficiency will only intensify. We can expect to see further breakthroughs in GPT Compression News, with techniques like 1-bit quantization becoming more viable. Hardware will become even more specialized, with chips designed specifically for running optimized transformer architectures. The ultimate future may lie in self-optimizing AI systems—models that can analyze a task and dynamically configure their own architecture and precision for maximum efficiency. This will move us further away from brute-force scaling and towards a future of elegant, efficient, and truly intelligent systems.

Conclusion

The conversation around generative AI is maturing. While the raw power of massive models continues to capture headlines, the real revolution is happening in the trenches of optimization and efficiency. The shift towards multi-model ecosystems, powered by sophisticated techniques like quantization, distillation, and optimized inference, is making AI more practical, accessible, and sustainable. This new era is not about diminishing capability but about democratizing it. By providing a spectrum of tools tailored to diverse needs, the AI community is unlocking a new wave of innovation, from privacy-preserving on-device assistants to cost-effective enterprise solutions. The latest GPT Future News is clear: the smartest path forward is not just about building bigger models, but about building smarter ones.