Beyond Chatbots: OpenAI’s New Frontier in Scientific Discovery with Advanced GPT Tools

The Dawn of a New Scientific Paradigm: AI-Powered Discovery

For years, the discourse around Generative Pre-trained Transformers (GPT) has been dominated by their remarkable ability to generate human-like text, write code, and power conversational agents. From the versatile GPT-3.5 to the more powerful GPT-4, coverage of GPT models has largely centered on democratizing AI for content creation, customer service, and software development. However, the landscape is shifting. We are now witnessing a pivotal evolution from general-purpose assistants to highly specialized, domain-specific instruments of discovery. The most significant recent development in GPT tooling signals a new chapter: a concerted effort to build sophisticated AI tools specifically designed to tackle the world’s most complex scientific challenges.

This strategic move represents a profound recognition that the next wave of innovation lies not just in making models bigger, but in making them smarter, more specialized, and deeply integrated into critical professional workflows. OpenAI is spearheading a new initiative focused on “AI for Science,” assembling a world-class team of scholars and researchers to build a new generation of GPT-powered tools. This program aims to augment human intellect, accelerate the pace of research, and unlock breakthroughs in fields ranging from medicine and materials science to climate change and astrophysics. It’s a declaration that the future of AI is not just about communication; it’s about profound, quantifiable discovery.

Core Objectives: The Scientific Co-Pilot

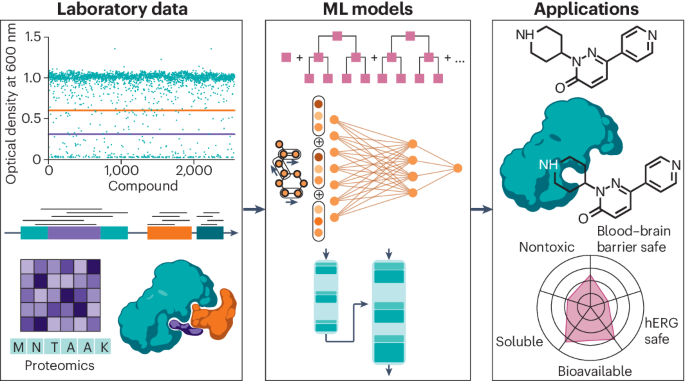

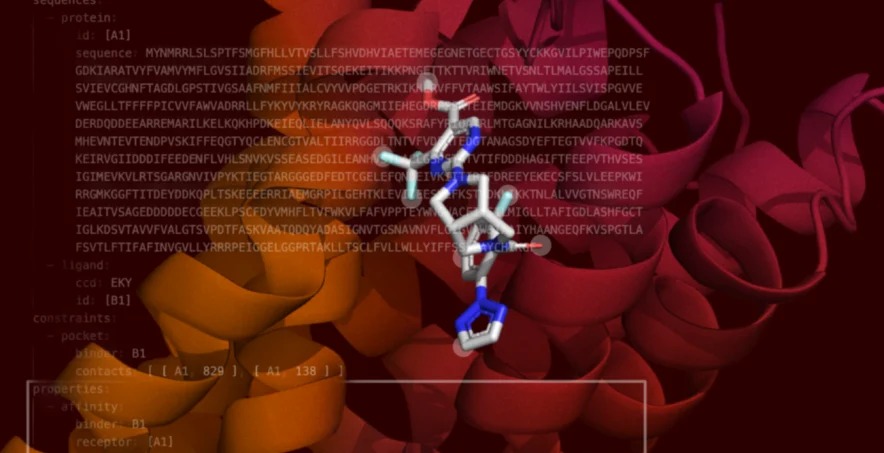

The primary mission of this initiative is not to replace scientists but to empower them with an intelligent “co-pilot.” Imagine a tool that can read, understand, and synthesize the entirety of published medical literature, identify hidden patterns across thousands of genomic datasets, or propose novel molecular structures for drug development. This is the vision behind OpenAI’s latest work. The collaboration with leading academics is crucial, ensuring that these tools are built with a deep understanding of scientific methodologies, validation processes, and the nuanced challenges specific to each domain. This fusion of state-of-the-art GPT research and deep domain expertise is the cornerstone of the program’s strategy.

From Generalist Models to Specialized Instruments

While models like GPT-4 are incredibly capable, their knowledge is broad rather than deep. Scientific research demands a high level of precision, contextual understanding, and reasoning. This is where custom GPT models become critical. The initiative will likely rely on advanced fine-tuning techniques, training base models on massive, curated scientific datasets, from peer-reviewed journals and clinical trial data to protein databanks and particle physics simulations. Furthermore, science is inherently multimodal. Future tools will need to interpret not just text but also microscopy images, spectral analysis charts, and 3D protein models, making multimodal and vision capabilities central to this endeavor. This specialization may even influence the foundation of future models, with speculation about GPT-5 pointing towards new architectures optimized for logical inference and causal reasoning, abilities paramount to the scientific method.

Engineering the Future of Research: A Technical Breakdown

Creating AI tools capable of contributing to scientific discovery is a monumental engineering challenge that extends far beyond standard model training. It requires a vertically integrated approach, encompassing data curation, novel training methodologies, autonomous agent development, and highly optimized deployment strategies. This section delves into the technical underpinnings of what it will take to build these revolutionary scientific co-pilots.

Data, Training, and Advanced Fine-Tuning

The adage “garbage in, garbage out” matters even more in a scientific context. The foundation of these tools will be meticulously curated datasets that go beyond what is available on the public internet. This involves ingesting and structuring petabytes of information from sources like PubMed, arXiv, chemical databases, and proprietary experimental results. A key advance in training techniques will be the development of methods to teach models the principles of scientific reasoning. This could involve Reinforcement Learning from Human Feedback (RLHF) where the “human” is a panel of leading domain experts, guiding the model’s understanding of complex topics. The models must learn to differentiate between correlation and causation, understand statistical significance, and recognize the hierarchy of evidence in scientific literature.
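To make the curation idea concrete, here is a minimal sketch of one filtering pass. It assumes each document already carries a study type and a peer-review flag. The tier values, the `Document` fields, and the `curate` function are all hypothetical, chosen for illustration rather than taken from any real pipeline.

```python
from dataclasses import dataclass

# Hypothetical evidence tiers (higher = stronger). A real ranking would be
# defined by domain experts, not hard-coded like this.
EVIDENCE_TIER = {
    "meta_analysis": 5,
    "randomized_trial": 4,
    "cohort_study": 3,
    "case_report": 2,
    "preprint": 1,
}


@dataclass(frozen=True)
class Document:
    doc_id: str
    study_type: str
    peer_reviewed: bool
    text: str


def curate(docs, min_tier=3):
    """Drop duplicates and weak evidence, then order the rest strongest first."""
    seen = set()
    kept = []
    for doc in docs:
        # Normalise case and whitespace so trivially different copies match.
        key = " ".join(doc.text.lower().split())
        if key in seen:
            continue
        seen.add(key)
        tier = EVIDENCE_TIER.get(doc.study_type, 0)  # unknown types rank lowest
        if doc.peer_reviewed and tier >= min_tier:
            kept.append((tier, doc))
    kept.sort(key=lambda pair: pair[0], reverse=True)  # stable sort by tier only
    return [doc for _, doc in kept]
```

A production pipeline would need far more than this (near-duplicate detection, retraction checks, licensing), but the shape is the same: filter on explicit quality signals before any training happens.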

The Rise of Autonomous GPT Agents in the Lab

The true power of these tools will be realized when they evolve from passive information processors to active research assistants. This is where GPT agents become transformative. A future scientific workflow might look like this:

- A biologist tasks a GPT agent with investigating cellular mechanisms of a specific disease.

- The agent scans millions of research papers, identifies a promising but underexplored protein interaction, and formulates a novel hypothesis.

- Using its code-generation capabilities, the agent writes Python scripts to analyze existing genomic data from a public repository, finding a correlation that supports its hypothesis.

- It then designs a series of lab experiments, generating a protocol and even interfacing with automated lab equipment via APIs.

- Finally, it analyzes the experimental results, drafts a preliminary report with visualizations, and presents it to the human researcher for validation and final interpretation.

This level of autonomy requires robust integrations, with models seamlessly connecting to laboratory information management systems (LIMS), data analysis software, and scientific instruments. The underlying connectivity will be powered by a new generation of specialized GPT APIs.
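The workflow above can be sketched as a small orchestration loop with a human approval gate in front of any step that touches the physical lab. Everything here is an assumption for illustration: the step functions are stubs, and names such as `ResearchState` and `run_workflow` do not correspond to any existing OpenAI API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class ResearchState:
    question: str
    notes: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


Step = Callable[[ResearchState], str]


def run_workflow(
    state: ResearchState,
    steps: List[Tuple[str, Step]],
    needs_approval: Set[str],
    approve: Callable[[str, ResearchState], bool],
) -> ResearchState:
    """Run steps in order, pausing for human sign-off before gated steps."""
    for name, step in steps:
        if name in needs_approval and not approve(name, state):
            state.log.append(f"{name}: blocked by reviewer")
            break  # stop the whole run; later steps depend on this one
        state.notes[name] = step(state)
        state.log.append(f"{name}: done")
    return state


# Stub steps standing in for model calls, data analysis, and lab APIs.
def search_literature(state: ResearchState) -> str:
    return f"papers relevant to: {state.question}"


def form_hypothesis(state: ResearchState) -> str:
    return "protein A may regulate protein B"


def run_experiment(state: ResearchState) -> str:
    return "protocol sent to lab automation"
```

Gating only the experiment step keeps the cheap, reversible work (reading, hypothesizing) fast while leaving the costly or risky actions under the researcher's control.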

Performance, Efficiency, and Deployment

Scientific computation is demanding. While large-scale model training will occur in the cloud, practical deployment requires high efficiency. Inference needs low latency and high throughput: researchers can’t wait minutes for a query response in the middle of an experiment. This will drive innovation in model optimization. Methods like quantization (reducing the numerical precision of model weights) and distillation (training a smaller, faster model to mimic a larger one) will be essential. This also opens the door to edge deployment, where optimized models could run on local servers or even directly on scientific instruments to process data in real time, improving data privacy and reducing reliance on cloud connectivity.
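Both techniques are easier to picture with a toy example. The sketch below, in plain Python with no ML framework, shows symmetric 8-bit quantization of a weight list and a temperature-softened distillation loss. It illustrates the general ideas only; the function names are made up for this article, and real systems use per-channel scales, calibration data, and tensor libraries.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The result is multiplied by temperature**2, a common convention that
    keeps gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

Quantization trades a bounded rounding error (at most half the scale per weight) for a roughly fourfold reduction in storage relative to 32-bit floats. The distillation loss is zero only when the student reproduces the teacher's softened output distribution.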

Transforming Industries: Potential Applications and Impact

The development of specialized GPT tools for science is not an academic exercise; it’s a catalyst for profound, industry-wide transformation. By drastically shortening research and development cycles, these technologies promise to deliver tangible breakthroughs that will impact everything from human health to global economic competitiveness. This is the most exciting aspect of GPT’s emerging applications.

Revolutionizing Healthcare and Drug Discovery

Perhaps no field stands to gain more than medicine. GPT applications in healthcare are poised for a revolution.

- Drug Discovery: Current processes can take over a decade and cost billions. AI tools can analyze complex biological systems, predict how molecules will interact with proteins, and identify promising drug candidates in a fraction of the time and at a fraction of the cost. This could lead to faster development of treatments for diseases like Alzheimer’s, cancer, and rare genetic disorders.

- Personalized Medicine: A GPT-powered tool could analyze a patient’s entire genomic profile, medical history, and lifestyle data to recommend hyper-personalized treatment plans, moving medicine from a one-size-fits-all approach to one tailored to the individual.

- Clinical Trial Analysis: These models can sift through vast amounts of clinical trial data in real time, identifying adverse events, spotting efficacy signals earlier, and optimizing trial design for faster, more reliable results.

Accelerating Materials Science and Climate Solutions

The search for new materials with novel properties is a cornerstone of technological progress. AI can act as a “materials discovery engine.” By understanding the fundamental principles of chemistry and physics, a specialized GPT model could propose new compounds for:

- Renewable Energy: Designing more efficient and cheaper materials for solar cells and batteries.

- Carbon Capture: Inventing novel polymers or metal-organic frameworks that can efficiently capture CO2 from the atmosphere.

- Sustainable Manufacturing: Creating biodegradable plastics or stronger, lighter alloys for more fuel-efficient vehicles and aircraft.

This directly addresses some of humanity’s most pressing challenges and will be a major focus of future GPT development.

The Competitive Landscape and Ecosystem Growth

OpenAI’s initiative places it in direct competition with other major players like Google DeepMind, whose AlphaFold model has already had major success in protein structure prediction. This competitive pressure will accelerate innovation across the board. Furthermore, this move will catalyze the entire GPT ecosystem. We can expect a Cambrian explosion of startups and academic labs building on top of these foundational science models, creating specialized tools for niche scientific domains. This will create a vibrant marketplace of ideas and applications, further solidifying the role of AI in research and development.

Navigating the Uncharted Territory: Ethics, Bias, and Practical Considerations

While the potential of AI in science is immense, its deployment is fraught with complex challenges that must be addressed proactively. The integrity of the scientific process is paramount, and integrating powerful but opaque AI tools requires a new framework for ethics, validation, and governance. Discussions of AI ethics and safety are especially critical in this domain.

Addressing Bias and Ensuring Reproducibility

A significant risk is that AI models will inherit and amplify existing biases present in their training data. If historical scientific literature underrepresents certain demographics or contains flawed theories, the AI may perpetuate these issues. This is a core concern in work on AI bias and fairness. To combat this, rigorous dataset auditing and bias mitigation techniques are essential.
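One simple form of dataset auditing is to compare how often each group appears in the data against a reference distribution. The sketch below does only that. The `representation_gaps` function, the `group` field, and the 5% tolerance are illustrative assumptions, and a real audit would look at many more dimensions than raw counts.

```python
from collections import Counter


def representation_gaps(records, reference_shares, key="group", tolerance=0.05):
    """Return groups whose share of the dataset falls short of the reference
    share by more than `tolerance`, mapped to the size of the shortfall."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)
    return gaps
```

For example, a study cohort with eight records from group A and two from group B, checked against an even 50/50 reference, would flag B as short by 0.3.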

Furthermore, reproducibility is the bedrock of science. If an AI proposes a groundbreaking hypothesis, researchers must be able to understand its reasoning. “Black box” models are unacceptable. Future tools must incorporate explainability features, allowing scientists to audit the AI’s “thought process” and validate its conclusions. Without transparency, trust in AI-driven discoveries will erode.
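Explainability is an open research problem, but the reproducibility half can start with plain bookkeeping: record exactly what went into each AI-generated claim so that it can be re-run and audited later. The following is a minimal sketch under that assumption. The field names and the `provenance_record` helper are hypothetical, and the fingerprint only detects later edits to the record; it says nothing about why the model produced its output.

```python
import hashlib
import json


def _fingerprint(body):
    # Canonical JSON (sorted keys, fixed separators) so equal content hashes equally.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def provenance_record(model_id, prompt, params, evidence_ids, output):
    """Bundle what is needed to re-run and audit one AI-generated hypothesis."""
    record = {
        "model_id": model_id,
        "prompt": prompt,
        "params": params,
        "evidence_ids": sorted(evidence_ids),
        "output": output,
    }
    record["fingerprint"] = _fingerprint(record)
    return record


def verify(record):
    """Check that the record has not been altered since it was created."""
    body = {k: v for k, v in record.items() if k != "fingerprint"}
    return _fingerprint(body) == record["fingerprint"]
```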

Data Privacy, Security, and Regulation

Scientific research often involves highly sensitive information, from confidential patient data in medical studies to proprietary intellectual property in corporate R&D. The handling of this data raises significant privacy concerns. Secure on-premises or private-cloud deployment models will be necessary. As these tools become more influential, they will inevitably attract regulatory scrutiny. The global conversation around AI regulation will need to evolve to include standards for validating and certifying AI tools used in critical applications like drug approval or climate modeling.
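Whatever the deployment model, one common precaution is to strip or pseudonymize direct identifiers before records reach a model at all. Here is a minimal sketch using a keyed hash from Python's standard library. The record layout, the `scrub` helper, and the choice of dropped fields are assumptions for illustration, and pseudonymization alone does not make a dataset anonymous.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash; the same key gives the same alias."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def scrub(record: dict, secret_key: bytes, drop_fields=("name", "address")) -> dict:
    """Drop direct identifiers and swap the patient ID for its pseudonym."""
    clean = {k: v for k, v in record.items() if k not in drop_fields}
    clean["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    return clean
```

Because the alias depends on a secret key held by the institution, records from the same patient can still be linked internally, while anyone without the key cannot recompute the mapping from raw IDs.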

Best Practices for Adoption

For research institutions and companies looking to leverage these emerging technologies, a strategic approach is key:

- Invest in Data: The quality of your proprietary data will be your biggest competitive advantage. Begin curating, cleaning, and structuring your internal research data now to prepare for fine-tuning.

- Prioritize Human-in-the-Loop Systems: Frame AI as an augmentation tool, not a replacement. Design workflows that combine AI’s computational power with human intuition, expertise, and critical judgment.

- Start with Focused Pilot Projects: Instead of attempting to solve everything at once, identify a specific, high-impact problem and build a proof-of-concept. This allows your team to build expertise and demonstrate value incrementally.

- Foster Cross-Disciplinary Collaboration: Success requires a tight feedback loop between AI developers, data scientists, and domain experts (biologists, chemists, physicists). Break down organizational silos to facilitate this collaboration.

Conclusion: Charting the Course for a New Era of Discovery

The deliberate pivot towards creating specialized GPT tools for science is more than just an interesting product update; it is a landmark event in the history of technology and research. It signals the maturation of AI from a novelty into a fundamental instrument of human progress. This initiative moves the conversation beyond general-purpose chatbots and into the realm of targeted, high-impact applications designed to solve humanity’s most complex problems. The fusion of advanced AI architectures, vast multimodal datasets, and deep domain expertise promises to create a powerful synergy, accelerating the pace of discovery in ways we are only beginning to imagine.

The path forward is filled with technical, ethical, and logistical challenges, but the potential rewards are immeasurable. As the future of GPT unfolds, it is clear that we are on the cusp of a new scientific revolution, one where the partnership between human intellect and artificial intelligence unlocks a deeper understanding of our world and universe. This is the next great frontier for AI, and its exploration has begun.