Beyond the API: How Internal GPT Tools Signal a Seismic Shift for Enterprise Software

The AI Platform’s Paradox: Innovate Internally, Disrupt Externally

In the rapidly evolving landscape of artificial intelligence, the most profound developments often begin quietly, behind closed doors. Recent insights into how leading AI research labs like OpenAI are using their own foundational models to build sophisticated internal tools have sent a clear signal to the global software market. This practice, often called “dogfooding,” is more than a testament to the technology’s capability; it is a blueprint for the future of enterprise software and a potential existential threat to a generation of SaaS companies. When the creator of a foundational model shows it can replicate complex, vertical-specific software for its own internal use, it fundamentally alters the “build versus buy” calculation for every enterprise and redefines what it means to have a competitive moat in the age of AI. This is not just about one company’s internal processes; it is a harbinger of industry-wide transformation, forcing businesses to re-evaluate their technology stacks, their competitive strategies, and the very nature of software itself.

Section 1: Unpacking the Internal AI Revolution

The core of this development lies in the creation of custom, internal AI systems that go far beyond the capabilities of publicly available chatbots or simple API integrations. These are not just productivity hacks; they are sophisticated, purpose-built applications designed to automate and augment complex business functions currently served by established SaaS players. The trend marks a shift in how GPT models are applied: from generalized assistants to specialized, high-impact business agents.

What Are These Internal Tools?

While specifics are often proprietary, the functions these internal tools perform mirror those of major software categories. We can infer their nature based on common enterprise needs and the capabilities demonstrated by advanced models like GPT-4.

- Contract Analysis and Legal Tech: Imagine an internal tool that can ingest thousands of vendor contracts, analyze clauses for non-standard terms, summarize risks, and compare them against legal playbooks in seconds. This directly challenges the core value proposition of legal tech companies that have spent years building similar solutions.

- Financial Planning & Analysis (FP&A): An AI agent with secure access to internal financial data could automate budget variance analysis, generate sophisticated forecast models, and answer executives’ natural language questions about business performance. Because accuracy and data privacy are paramount in finance, an internal solution is especially attractive here.

- Advanced Customer Support and Resolution: Instead of a simple chatbot that deflects tickets, these internal systems can function as capable AI agents. They can access customer history, analyze technical logs, draft and execute troubleshooting steps, and even process refunds or credits, all with minimal human oversight.

- Hyper-Optimized Coding Assistants: While tools like GitHub Copilot are publicly available, internal versions can be trained on a company’s entire private codebase, so they follow its coding standards and understand its proprietary libraries and architecture. That level of customization is something public tools cannot match.
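
The FP&A example above rests on a calculation that is simple but must be exact. Below is a minimal sketch, assuming line items arrive as budget/actual pairs, of the kind of deterministic variance check such an agent could call as a tool, leaving the model to explain the numbers rather than compute them. The `LineItem` schema, the 10% threshold, and the figures in the usage note are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LineItem:
    """One budget line (hypothetical schema): planned vs. actual spend."""
    name: str
    budget: float
    actual: float


def variance_report(items: list[LineItem], threshold_pct: float = 10.0) -> list[tuple[str, float, float, bool]]:
    """Return (name, variance, variance_pct, flagged) for each line item.

    variance = actual - budget, so a positive value means overspend.
    An item is flagged when |variance_pct| exceeds threshold_pct.
    """
    report = []
    for item in items:
        variance = item.actual - item.budget
        if item.budget:
            pct = variance / item.budget * 100.0
        else:
            # Zero budget: any nonzero actual is an unbounded deviation.
            pct = 0.0 if variance == 0 else math.inf
        report.append((item.name, variance, round(pct, 1), abs(pct) > threshold_pct))
    return report
```

For example, a cloud-compute line budgeted at 120,000 with 138,000 actual comes back as a 15.0% overspend and is flagged, while a travel line at 38,000 against a 40,000 budget (-5.0%) is not.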

The “Why” Behind the Internal Build-Out

Building these tools internally offers several compelling advantages. First is privileged access to the latest technology: internal teams often work with more advanced, unreleased, or fine-tuned versions of models, effectively previewing capabilities that later reach the public. Second, it addresses critical privacy and data-security concerns, as sensitive corporate data never has to leave the company’s secure environment. Finally, it allows a degree of customization and integration that is difficult or impossible to achieve with off-the-shelf software, enabling deeply embedded, workflow-native AI capabilities.

Section 2: The Technical Moat: Beyond a Simple API Wrapper

The disruptive potential of these internal platforms stems from their technical architecture, which is fundamentally more powerful than what most third-party applications, built as thin wrappers around public APIs, can achieve. Understanding these technical differentiators is key to grasping the magnitude of the shift underway across the GPT ecosystem.

Advanced Model Access and Customization

The most obvious advantage is access to proprietary models and cutting-edge architectures. Internal teams can use models with larger context windows, faster inference, or specialized multimodal capabilities long before they are commercially available. The real power, however, lies in deep customization.

- Strategic Fine-Tuning: This goes beyond basic prompt engineering. Internal platforms can be continuously fine-tuned on massive proprietary datasets, such as every customer support ticket ever resolved or every line of code ever written, producing models that behave like genuine experts in a company’s specific domain.

- Retrieval-Augmented Generation (RAG) at Scale: Internal systems can implement sophisticated RAG pipelines that connect the AI to large, continuously updated internal knowledge bases (e.g., Confluence, Jira, Salesforce). Retrieving relevant documents at query time helps ground the AI’s responses in factual, up-to-the-minute company data rather than in whatever the model memorized during training.

- Proprietary Training Techniques: Modern post-training often relies on methods such as reinforcement learning from human feedback (RLHF) and direct preference optimization (DPO). Internal teams can apply these techniques with their own expert employees as trainers, creating a feedback loop that rapidly improves model performance on specific tasks.
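
The retrieval step behind RAG can be sketched in a few lines. This is an illustration only: production pipelines typically use an embedding model and a vector store, whereas here a plain term-count cosine similarity stands in so the example runs with the Python standard library alone. The document IDs in the usage note imitate Confluence and Jira sources but are hypothetical, as is the prompt wording.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of up to k documents that share terms with the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(body))), doc_id) for doc_id, body in docs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the model by pasting retrieved passages, tagged with their IDs."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, k))
    return (
        "Answer using only the context below and cite source IDs.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

With a hypothetical knowledge base containing a VPN setup page (`confluence/vpn-setup`), a VPN incident ticket (`jira/OPS-1`), and an expense policy, the question “How do I set up the VPN?” retrieves the setup page first and the ticket second, and the unrelated expense policy never reaches the prompt. In a real deployment, the `docs` mapping would be replaced by an index refreshed from the source systems, which is what keeps answers current.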

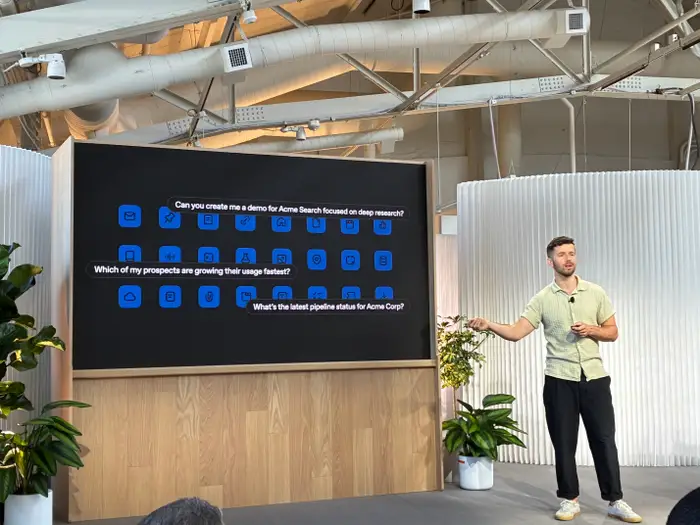
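
Of the training techniques mentioned above, DPO is compact enough to write out. Given a prompt with a preferred and a rejected response, it trains the policy to widen the gap between the two responses’ log-probability ratios relative to a frozen reference model. Below is a minimal per-example sketch, assuming the summed token log-probabilities of each response have already been computed; real training code batches this in a tensor framework, and `beta=0.1` is only an illustrative value.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-example DPO loss.

    loss = -log sigmoid(beta * [(log pi(y_w|x) - log ref(y_w|x))
                                - (log pi(y_l|x) - log ref(y_l|x))])
    Each argument is the summed token log-probability of a full response
    under the trainable policy (pi) or the frozen reference model (ref).
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(m)) == softplus(-m); this form avoids overflow for large |m|.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

At zero margin the loss is log 2 (about 0.693); it falls as the policy favors the preferred response more than the reference does, and rises when it favors the rejected one. In the internal setting described above, the preferred/rejected pairs would come from employees comparing model outputs.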

The Rise of Complex, Agentic Workflows

Perhaps the most significant leap is the move from single call-and-response interactions to complex, multi-step agentic systems. A public API call might summarize a document. An internal AI agent can perform a sequence of actions: receive an email with a new contract, use vision capabilities to extract its text and structure, compare it against a database of approved clauses, flag deviations, draft a summary email to the legal team, and schedule a review meeting on their calendars. This is the essence of agentic AI: systems that don’t just process information but take action within a business environment. Doing so requires sophisticated orchestration, tool-use integration, and self-correction capabilities that are difficult to build and maintain on top of a public API alone.
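
The contract workflow above can be sketched as a fixed sequence of tool calls with a simple self-check. This is a minimal illustration, not how any particular lab builds its agents: `extract_clauses` stands in for the vision/LLM extraction step by parsing `clause_type: wording` lines, the `APPROVED_CLAUSES` playbook is hypothetical, and a plain list stands in for a calendar API. In a real agent, a model would choose and sequence these calls rather than following hard-coded branches.

```python
# Hypothetical playbook: clause type -> approved wording.
APPROVED_CLAUSES = {
    "payment_terms": "Payment due within 30 days of invoice.",
    "liability_cap": "Liability is capped at fees paid in the prior 12 months.",
}


def extract_clauses(contract_text: str) -> dict[str, str]:
    """Stand-in for the vision/LLM extraction step (hypothetical).

    Parses lines of the form 'clause_type: wording'.
    """
    clauses = {}
    for line in contract_text.splitlines():
        if ":" in line:
            clause_type, wording = line.split(":", 1)
            clauses[clause_type.strip()] = wording.strip()
    return clauses


def find_deviations(clauses: dict[str, str]) -> list[str]:
    """Clause types whose wording differs from the playbook or is missing from it."""
    return sorted(k for k, v in clauses.items() if APPROVED_CLAUSES.get(k) != v)


def run_contract_agent(email_body: str, calendar: list[str]) -> tuple[str, list[str]]:
    """Run the tool sequence; return (message or status, steps taken)."""
    steps = ["extract"]
    clauses = extract_clauses(email_body)
    if not clauses:
        # Self-check: nothing was parsed, so escalate rather than guess.
        return "Escalated: no clauses could be extracted.", steps

    steps.append("compare")
    deviations = find_deviations(clauses)
    if not deviations:
        return "No deviations from the playbook.", steps

    flagged = ", ".join(deviations)
    steps += ["draft_email", "schedule_review"]
    calendar.append(f"Contract review: {flagged}")  # stand-in for a calendar API call
    return f"To legal: please review non-standard clauses ({flagged}).", steps
```

The early returns are the design point: the agent only drafts an email and books a meeting when it has both parsed the contract and found something worth a human’s time.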

Section 3: Market Tremors: Implications for SaaS, Enterprises, and Developers

The realization that foundational model providers can replicate the functionality of specialized SaaS products is more than a competitive threat; it is an earthquake reshaping the entire software landscape. The implications are far-reaching and affect every player in the ecosystem.

For SaaS Companies: The Great Value Re-evaluation

For years, the SaaS playbook involved identifying a niche workflow, building a user-friendly interface around it, and creating a subscription business. Today, if that workflow primarily involves processing text, data, or images, it is at risk of being commoditized by foundational models. SaaS companies that are merely “thin wrappers” around a GPT API are in a precarious position.

Case Study Scenario: “DocuSigner AI”

A hypothetical startup, “DocuSigner AI,” builds a tool that uses a public GPT API to summarize legal contracts for small businesses. Its value proposition is convenience and a slick UI. When an enterprise discovers it can build a more powerful, secure, and customized version of this tool internally, in a matter of weeks, using its own fine-tuned models, the startup’s $50/month subscription becomes difficult to justify. The startup’s “moat” was the UI, not the underlying intelligence, and that moat is rapidly evaporating. It is a critical lesson for legal tech startups.

For Enterprises: The “Build vs. Buy” Dilemma Intensifies

Chief Information Officers (CIOs) and Chief Technology Officers (CTOs) now face a strategic inflection point. The decision is no longer simply whether to buy a finished software product or build one from scratch. A third option has emerged: use a foundational AI platform (like those from OpenAI, Google, or Anthropic) as a base and build lightweight, customized solutions on top of it.

- Pros of Building: Unmatched customization, enhanced data security, potential for a significant competitive advantage, and potentially lower long-term costs than paying for dozens of niche SaaS subscriptions.

- Cons of Building: Requires significant in-house AI talent, raises safety and ethical-oversight challenges, and involves ongoing maintenance and model management.
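
The cost side of this trade-off reduces to break-even arithmetic. With an upfront build cost U, a monthly cost b to run and maintain the in-house tool, and monthly SaaS spend s on the subscriptions it replaces, building is cheaper from month ⌈U / (s − b)⌉ onward, provided b < s. A minimal sketch; the figures in the usage note are hypothetical.

```python
import math
from typing import Optional


def breakeven_month(build_upfront: float, build_monthly: float, saas_monthly: float) -> Optional[int]:
    """First month m at which cumulative build cost <= cumulative SaaS cost.

    Cumulative build cost after m months: build_upfront + build_monthly * m
    Cumulative SaaS cost after m months:  saas_monthly * m
    Returns None when the monthly running cost is not lower than the
    subscriptions being replaced, since the upfront cost is then never repaid.
    """
    monthly_saving = saas_monthly - build_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(build_upfront / monthly_saving)
```

With a hypothetical 150,000 upfront build, 8,000 per month to run it, and 18,000 per month in replaced subscriptions, `breakeven_month` returns 15. If ongoing maintenance and model management cost more than the subscriptions, it returns `None`: the pros of building then have to justify themselves on something other than cost.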

This shift is driving demand for new roles and platforms focused on model deployment and MLOps for large language models.

For Developers and the Ecosystem

The opportunity for developers is shifting from creating standalone AI apps to building the tools and infrastructure that let enterprises build their own solutions. The new frontier lies in creating robust integrations, developing specialized tools for agentic workflows, and consulting on custom models and fine-tuning strategies. Value is moving down the stack, closer to the model and the data.

Section 4: Strategies for the New AI-Native World

Navigating this new environment requires a proactive and strategic approach. Complacency is not an option for any business, whether it’s a software provider or a software consumer.

Recommendations for SaaS Providers

To survive and thrive, SaaS companies must build deep, defensible moats that AI cannot easily replicate. The focus must shift away from the AI itself and onto other sources of value.

- Proprietary Data & Network Effects: The most defensible moat is a unique dataset that you own and that improves your product over time. For example, a marketing automation platform’s value isn’t just its AI copywriter (a commodity) but its vast dataset on which email subject lines perform best in which industries.

- Deep Workflow Integration: Don’t just be a feature; be the entire workflow. Embed your tool so deeply into a customer’s critical business processes that ripping it out is prohibitively complex. This is the direction the strongest AI-powered marketing platforms are taking.

- Human-in-the-Loop & Vertical Expertise: Focus on hybrid models where AI handles 80% of the work and a human expert provides the critical final 20%. This is especially relevant in fields like healthcare and finance, where regulation and compliance are critical. Become the expert system for a specific vertical, not a general-purpose tool.
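
In practice, a hybrid split like this is usually implemented as a routing rule rather than a fixed ratio. Below is a minimal sketch, assuming each AI draft carries a confidence score (from the model or a separate verifier) and a flag for regulated content; the `Draft` fields and the 0.85 threshold are hypothetical. Raising the threshold sends more work to human experts, so the human share is a tunable outcome rather than a constant.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    """An AI-produced work item awaiting release (hypothetical schema)."""
    task_id: str
    confidence: float  # score in [0, 1] from the model or a separate verifier (assumed)
    regulated: bool    # e.g. touches a clinical note or a financial disclosure


def route(draft: Draft, threshold: float = 0.85) -> str:
    """Send regulated or low-confidence drafts to a human expert."""
    if draft.regulated or draft.confidence < threshold:
        return "human_review"
    return "auto_approve"


def human_share(drafts: list[Draft], threshold: float = 0.85) -> float:
    """Fraction of drafts routed to human review at a given threshold."""
    if not drafts:
        return 0.0
    routed = [route(d, threshold) for d in drafts]
    return routed.count("human_review") / len(routed)
```

For a batch of five drafts where four score 0.9 and one scores 0.6, `human_share` is 0.2 at the default threshold and 1.0 at a threshold of 0.95.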

Recommendations for Enterprises

Enterprises should not wait to be disrupted. They must actively develop an internal AI strategy to harness the power of these foundational models.

- Invest in an AI Center of Excellence: Create a dedicated internal team responsible for evaluating foundational models, developing best practices for fine-tuning and deployment, and identifying high-ROI use cases for internal tool development.

- Prioritize Data Governance: Before you can use AI effectively, your data must be clean, accessible, and well-governed. A strong data foundation is the prerequisite for any meaningful internal AI capability, and it also directly addresses major privacy concerns.

- Start with a Hybrid Approach: You don’t have to replace every SaaS tool overnight. Begin by identifying one or two critical, data-sensitive workflows (like contract analysis or internal Q&A) to build as a proof-of-concept. Use this experience to build skills and demonstrate value.

Conclusion: The Dawn of the AI Operating System

The news of OpenAI and other AI leaders building their own powerful business tools is more than just an interesting footnote in the story of AI; it is the central plot. It signals a fundamental transition where large language models are evolving from novelties or feature enhancements into true operating systems for the enterprise. For SaaS companies, this is a moment of reckoning, forcing a pivot from building AI features to building businesses on defensible moats like proprietary data and deep workflow integration. For enterprises, it is an unprecedented opportunity to build bespoke, intelligent systems that can create a durable competitive advantage. The shockwaves from this internal “dogfooding” will continue to ripple outwards, and the companies that understand and adapt to this new paradigm will be the ones to define the future of software.