Beyond Tokens: The Architectural Shift Redefining GPT and the Future of AI

The Unseen Bottleneck: Why GPT Tokenization is AI’s Next Great Challenge

In the rapidly evolving world of artificial intelligence, Large Language Models (LLMs) like those in the GPT series have become ubiquitous. From powering sophisticated chatbots to drafting complex legal documents, their capabilities seem to expand daily. Yet, beneath the surface of this incredible progress lies a foundational, often-overlooked component that is both an enabler and a significant bottleneck: tokenization. This process, which breaks down human language into pieces a machine can understand, has been a cornerstone of model architecture. However, recent breakthroughs and emerging research are challenging this paradigm, signaling a fundamental shift in how we build and scale the next generation of AI. This isn’t just an incremental update; it’s a potential revolution in GPT Architecture News that could solve some of the most persistent problems in efficiency, multilingual performance, and true multimodality. As we look toward the horizon of GPT-5 News and beyond, understanding this shift away from traditional tokenization is crucial for developers, researchers, and businesses aiming to leverage the full potential of AI.

Section 1: Deconstructing the Token: The Foundation and Flaws of Current GPT Models

To appreciate the magnitude of the coming changes, we must first understand the current state of affairs. Every interaction with models like ChatGPT or those accessed via GPT APIs News begins with tokenization. It’s the invisible translator between our world of words and the model’s world of numbers.

What Are Tokens and Why Do They Matter?

At its core, tokenization is the process of segmenting a piece of text into smaller units called tokens. An LLM doesn’t see words or sentences; it sees a sequence of numerical IDs, where each ID corresponds to a specific token in its predefined vocabulary. For example, the sentence “AI is transforming our world” might be tokenized into `[“AI”, ” is”, ” transform”, “ing”, ” our”, ” world”]`. These tokens are then converted into vectors for the model to process.

Most modern models, including those from OpenAI, use subword tokenization algorithms like Byte-Pair Encoding (BPE). This method is a clever compromise between word-level and character-level tokenization. It can represent common words as a single token (“world”) while breaking down rarer or more complex words into smaller, recognizable subwords (“transforming” -> “transform”, “ing”). This approach helps manage vocabulary size and allows the model to handle words it has never seen before. This has been a key component in the success described in GPT-3.5 News and GPT-4 News.

The Hidden Costs and Inefficiencies of Subword Tokenization

While BPE and similar methods have been effective, they introduce a host of subtle but significant problems that represent a major topic in current GPT Research News.

- Semantic Fragmentation: Breaking words into subwords can split their meaning. The word “unhappiness” is conceptually singular, but a tokenizer might see it as “un” and “happiness,” forcing the model to expend extra effort to reassemble the concept.

- Multilingual Inequity: Tokenizers are typically trained on a specific corpus, which is often predominantly English. Consequently, they are far less efficient for other languages. A sentence in German or Japanese might require two to three times as many tokens as its English equivalent. This not only degrades performance but also makes using GPT APIs more expensive for non-English users, a critical issue in GPT Multilingual News.

- Domain-Specific Blind Spots: In specialized fields like medicine or law, unique jargon and complex terms are often broken down into near-meaningless characters. This makes fine-tuning on specific domains, a hot topic in GPT Fine-Tuning News, less effective than it could be. This also impacts GPT Code Models News, as unconventional variable names or syntax can be poorly tokenized.

- The “Out-of-Vocabulary” Problem: While subword tokenization reduces the chance of a truly unknown word, it handles novel strings, slang, or even simple misspellings inefficiently by breaking them into individual characters or bytes, creating long and noisy sequences for the model to interpret.

These limitations have spurred a search for a better way, leading to one of the most exciting developments in the GPT Ecosystem News: the move toward tokenizer-free models.

Section 2: The Byte-Level Revolution: A New Architectural Paradigm

The most promising solution to the tokenization problem is to eliminate it almost entirely. A new wave of architectural research focuses on models that operate directly on raw data—specifically, on bytes. This represents a fundamental redesign of how LLMs ingest and process information.

From Tokens to Bytes: How It Works

Instead of a vocabulary of 30,000 or 50,000 subword tokens, a byte-level model works with a vocabulary of just 256—the number of possible byte values in standard encodings like UTF-8. Every piece of text, regardless of language, script, or content, is simply a sequence of bytes. This approach completely sidesteps the need for a pre-trained, language-specific tokenizer.

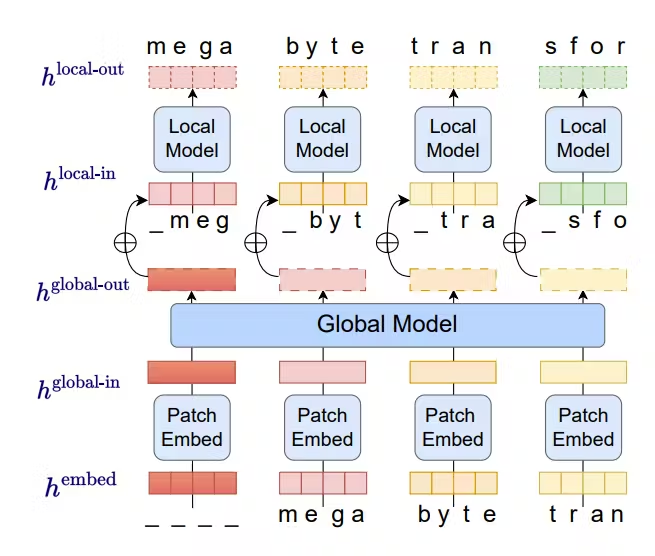

However, this creates a new challenge: sequence length. A single character can be 1 to 4 bytes, meaning a byte-level sequence is significantly longer than a token-level one. Processing these ultra-long sequences with standard Transformer architectures would be computationally prohibitive. The innovation, therefore, lies in new model architectures designed to handle this scale. One leading approach involves a hierarchical structure: a “local” model processes small patches or “megabytes” of the byte sequence, summarizing them into representations that a “global” model then processes to understand the broader context. This is a major breakthrough in GPT Scaling News and GPT Efficiency News.

Architectural Implications and Advantages

This byte-level approach offers profound advantages that are setting the agenda for GPT Future News.

- True Language Agnosticism: By operating on the universal substrate of bytes, these models are inherently multilingual. A single model could process English, Mandarin, Swahili, and Python code with equal, unbiased efficiency. This is a game-changer for GPT Language Support News and efforts to mitigate bias, a key topic in GPT Bias & Fairness News.

- Unified Multimodality: The most exciting implication is for multimodality. Text, images, audio, and video are all, at their core, just streams of bytes. A byte-level architecture provides a unified framework for processing any data type without needing separate encoders for each modality. This could accelerate progress in GPT Vision News and GPT Multimodal News, leading to models that can seamlessly understand a document containing text, charts, and embedded audio clips.

- Improved Robustness and Simplicity: By removing the tokenizer, the architecture becomes simpler and more robust. It can handle any string of characters, from chemical formulas and genetic sequences to previously unseen languages, without breaking a sweat. This has huge implications for GPT Applications in IoT News, where raw sensor data can be processed directly.

Section 3: Real-World Impact: What a Tokenizer-Free Future Means for Developers and Industries

The shift from subword tokens to byte-level processing is not merely an academic exercise. It has far-reaching, practical implications for everything from API costs to the development of novel AI applications across various sectors.

Economic and Performance Gains in the GPT Ecosystem

For developers using platforms like OpenAI’s, the most immediate impact will be on cost and performance. The “token tax” on non-English languages would disappear, making global applications more economically viable. This is significant GPT Platforms News for companies operating in international markets. Furthermore, by removing the tokenization step, a known source of latency, we could see improvements in GPT Inference News, leading to faster response times for GPT Assistants News and chatbots. This optimization is crucial for real-time applications and enhancing user experience.

Case Study: Transforming GPT Applications in Healthcare and Finance

Consider the real-world scenarios where current tokenization fails.

- In Healthcare: As covered in GPT in Healthcare News, models are used to analyze electronic health records (EHRs). These records are filled with complex medical terminology, abbreviations, and alphanumeric codes (e.g., “ICD-10-CM Z85.46”). A traditional tokenizer would mangle these codes, losing critical information. A byte-level model would process them as-is, preserving their exact structure and meaning, leading to more accurate analysis and summarization.

- In Finance: Financial reports often contain a mix of natural language, numerical data, and unique identifiers. A byte-level model could process a raw financial filing without misinterpreting ticker symbols or complex table structures, providing a more reliable foundation for analysis, a key development for GPT in Finance News. Similarly, in GPT in Legal Tech News, processing complex case law with precise citations becomes far more robust.

Unlocking New Frontiers in Creativity and Content Creation

The benefits extend to creative fields. For GPT in Content Creation News, a model that understands the nuances of poetic structure or the syntax of a rare dialect without being constrained by a tokenizer can generate more authentic and diverse content. In gaming, as discussed in GPT in Gaming News, this could lead to more believable non-player character (NPC) dialogue that can adapt to unique player-generated names and slang. This also opens doors for more powerful GPT Agents News that can process and act upon a wider variety of unstructured data from the real world.

Section 4: The Road Ahead: Challenges, Recommendations, and Future Trends

While the promise of tokenizer-free, byte-level models is immense, the path to widespread adoption is not without its challenges. The new architectures required are at the cutting edge of GPT Training Techniques News and demand significant computational resources.

Navigating the Hurdles

The primary challenge is managing the astronomical sequence lengths. Processing a sequence of one million bytes requires innovations in attention mechanisms and memory management that go far beyond current Transformer models. This will drive new research into GPT Optimization News and demand more powerful GPT Hardware. Furthermore, these models lose the “inductive bias” that subword tokens provide. A subword tokenizer gives the model a head start in understanding language structure. Byte-level models must learn all of this from scratch, which may require even larger datasets and more sophisticated training strategies, a core topic in GPT Datasets News.

Best Practices and Recommendations for the AI Community

For developers, data scientists, and business leaders, the key is to stay informed and be prepared for this architectural shift.

- Audit Your Current Tokenization Costs: Use tokenizer visualizer tools to understand how your data is being processed. Identify areas, particularly in multilingual or domain-specific applications, where tokenization is inefficient and costly. This will help you build a business case for adopting new models when they become available.

- Monitor GPT Research News: Keep a close watch on publications from major AI labs. The transition to byte-level models will likely be gradual, with hybrid approaches appearing first. Understanding the latest GPT Benchmark News will be crucial for evaluating when to make a switch.

- Focus on Data Quality: Regardless of the model architecture, high-quality data remains paramount. For future byte-level models, having clean, diverse, and extensive datasets will be even more critical as the model learns linguistic properties from the ground up.

- Consider Customization and Fine-Tuning: While new base models are on the horizon, continue to explore GPT Custom Models News. Fine-tuning existing models on your specific data can help mitigate some of the current tokenization issues by familiarizing the model with your domain’s vocabulary.

Conclusion: A More Fundamental Understanding of Data

The ongoing discourse around GPT tokenization is more than just a technical debate; it represents a pivotal moment in the evolution of artificial intelligence. For years, we have forced machines to see our world through the narrow lens of pre-defined tokens. The emerging paradigm of byte-level processing promises to shatter that lens, allowing models to interact with data in its most raw and fundamental form. This architectural leap forward will not only make AI more efficient and equitable across languages but will also pave the way for truly unified multimodal systems that can understand the world with unprecedented fidelity. While significant engineering and research challenges remain, the move beyond tokens is a critical step toward building more capable, robust, and intelligent systems. It is a foundational shift that will undoubtedly be a defining characteristic of the next generation of AI and a central theme in the ongoing story of GPT Trends News.