Breaking Language Barriers: A Technical Guide to Building a GPT-Powered Multilingual News Summarizer

Introduction

In our hyper-connected world, information is generated at an unprecedented rate across countless languages. For global businesses, researchers, and avid news consumers, this presents a significant challenge: how to efficiently monitor and comprehend global developments when they are fragmented by linguistic barriers. Large Language Models (LLMs) such as OpenAI’s GPT series offer a practical solution. These models not only understand and generate human-like text; they also have strong multilingual capabilities that can be harnessed to build sophisticated applications. This article is a technical guide to architecting, building, and deploying a multilingual news article summarizer with GPT models and modern development frameworks. We will cover the underlying technology, walk through a practical implementation blueprint, discuss techniques for scaling and optimization, and examine the ethical considerations such tools raise. It is written for developers and organizations looking to extract insights from news published in many languages.

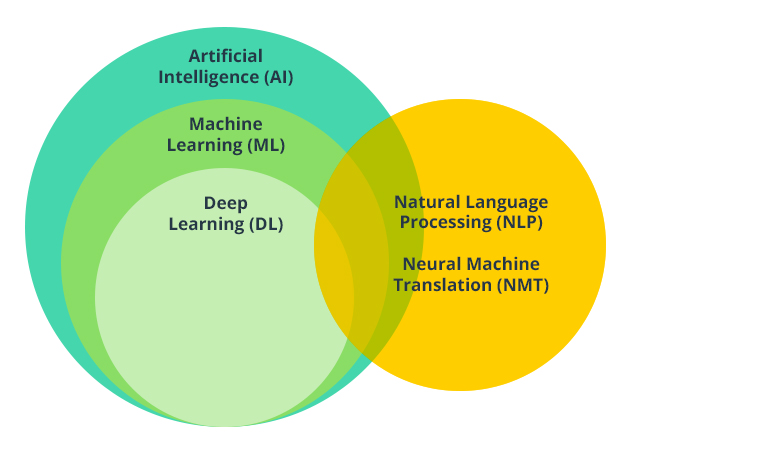

The Core Engine: GPT’s Multilingual Prowess

At the heart of any multilingual news application lies the multilingual capability of Generative Pre-trained Transformer (GPT) models. Understanding how these models handle multiple languages is crucial for building effective and efficient systems. Their proficiency is not an afterthought but a direct outcome of their architecture and training process.

From Monolingual Roots to Cross-Lingual Fluency

The transformer architecture, the foundation of GPT models, excels at identifying complex patterns and relationships in sequential data. When trained on a massive, web-scale corpus, it does not just learn English; it learns patterns from every language present in its training data. Models like GPT-3.5 and GPT-4 are trained on text spanning a diverse mix of languages, from Spanish and French to Japanese and Swahili. This appears to let them develop shared internal representations of concepts, sometimes described as an “interlingua,” that transcend individual languages. As a result, the model treats “cat,” “chat,” and “gato” as referring to the same feline concept, which enables zero-shot cross-lingual tasks such as summarizing a Spanish article directly in English without a separate translation step. The quality and diversity of this multilingual training data largely determine how well the model performs in each language.

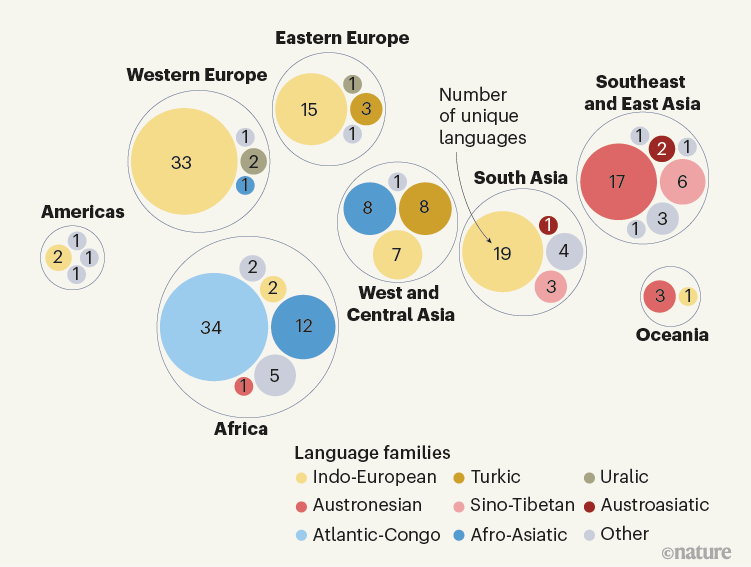

The Role of Tokenization in Multilingual Contexts

A critical, often overlooked aspect is tokenization. Models don’t see words; they see tokens. OpenAI’s `tiktoken` library implements the byte-level BPE encodings used by GPT models, such as `cl100k_base` for GPT-3.5 Turbo and GPT-4, which cover a vast array of languages with a single vocabulary. However, this design has significant implications for performance and cost. Languages written in the Latin script, like English or German, are generally token-efficient. In contrast, languages with other scripts, like Japanese or Thai, often require more tokens to represent the same amount of information. This “token cost” directly affects API fees and how much text fits into the model’s limited context window, a crucial factor when processing lengthy news articles.
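Because byte-level BPE encodings such as `cl100k_base` first convert text to UTF-8 bytes, and every token covers at least one byte, the UTF-8 byte count is an upper bound on the token count. Scripts that need three bytes per character therefore start from a worse baseline. The dependency-free sketch below compares characters with UTF-8 bytes for a few illustrative sample sentences; it is not a token counter. For real counts you would call `tiktoken.get_encoding("cl100k_base").encode(text)` and take the length of the result.

```python
def utf8_profile(text: str) -> dict:
    """Compare character and UTF-8 byte counts for a piece of text.

    In a byte-level BPE tokenizer every token spans at least one byte,
    so `utf8_bytes` is an upper bound on the token count, not an estimate.
    """
    n_chars = len(text)
    n_bytes = len(text.encode("utf-8"))
    return {
        "chars": n_chars,
        "utf8_bytes": n_bytes,
        "bytes_per_char": round(n_bytes / n_chars, 2) if n_chars else 0.0,
    }


# Illustrative samples, all meaning "The cat is sleeping."; not a benchmark.
samples = {
    "English": "The cat is sleeping.",
    "German": "Die Katze schläft.",
    "Japanese": "猫が寝ている。",
}

for language, sentence in samples.items():
    print(language, utf8_profile(sentence))
```

The English sentence uses one byte per character, German slightly more because of the umlaut, and Japanese three bytes per character. BPE merges bring actual token counts well below these byte counts, but the gap between scripts tends to persist.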

Comparing GPT-3.5 vs. GPT-4 for Multilingual Tasks

The choice of model is a key decision. GPT-3.5 is a capable and cost-effective option for many tasks and can reliably summarize and translate news in major languages. GPT-4, however, is stronger on tasks that require nuance, cultural context, and idiomatic understanding. When summarizing a politically charged article from a French newspaper, for instance, GPT-4 is more likely to capture subtle implications and preserve the original tone in its English summary. For high-stakes applications in finance or legal tech, where precision is non-negotiable, GPT-4’s stronger reasoning often justifies its higher cost. Published evaluations, such as the translated-MMLU results in OpenAI’s GPT-4 technical report, support GPT-4’s lead in multilingual understanding, making it the preferred choice for premium applications.
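One way to act on this trade-off is to route each job to a model by policy. The sketch below is hypothetical: the `SummaryJob` fields, the `WELL_SUPPORTED` language set, and the routing rules are illustrative assumptions you would calibrate against your own evaluations. Only the model names are real OpenAI API identifiers.

```python
from dataclasses import dataclass

# Real OpenAI model identifiers; the policy around them is hypothetical.
CHEAP_MODEL = "gpt-3.5-turbo"
PREMIUM_MODEL = "gpt-4"

# Hypothetical set of source languages (ISO 639-1) for which you have
# verified that the cheaper model meets your quality bar.
WELL_SUPPORTED = {"en", "es", "fr", "de", "pt", "it"}


@dataclass
class SummaryJob:
    source_lang: str   # language code of the article, e.g. "fr"
    high_stakes: bool  # e.g. finance or legal use where nuance matters


def choose_model(job: SummaryJob) -> str:
    """Use the premium model only when quality risk justifies its cost."""
    if job.high_stakes:
        return PREMIUM_MODEL
    if job.source_lang.lower() not in WELL_SUPPORTED:
        return PREMIUM_MODEL
    return CHEAP_MODEL


print(choose_model(SummaryJob(source_lang="fr", high_stakes=False)))
```

Keeping the policy in one small function makes it easy to adjust as prices, models, and your own quality measurements change.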

Blueprint for a Multilingual Summarizer

Architecting a robust summarization tool involves more than just a single API call. It requires a structured workflow that handles data ingestion, processing, interaction with the LLM, and presentation. Leveraging modern frameworks can dramatically simplify this process, turning a complex idea into a functional application.

Core Components and Workflow

A typical multilingual news summarizer can be broken down into four key stages:

- Article Fetching: The process begins by acquiring the source article. This is typically done by downloading the page from a given URL with a Python library like `requests` and parsing the HTML with `BeautifulSoup` to extract the main body of text; a crawling framework such as `Scrapy` can handle fetching and parsing at larger scale.

- Text Pre-processing: Raw HTML content is noisy. This stage involves cleaning the extracted text by removing HTML tags, navigation links, advertisements, and other boilerplate content to isolate the core article. This clean text is the input for the model.

- The Summarization Chain: This is the core logic where the LLM is invoked. For articles that exceed the model’s context window (e.g., more than about 4,000 tokens for the original GPT-3.5 Turbo model), a single prompt cannot hold the whole text. This calls for a more sophisticated strategy, usually “chaining” multiple calls to the model.

- Output Generation: The final step involves formatting the results from the LLM—such as a summary in English and another in French—and presenting them to the user in a clean, readable format, often alongside the original source link.
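The fetching and pre-processing stages can be sketched with the standard library alone. This version swaps in `urllib.request` and `html.parser` for `requests` and `BeautifulSoup` so it runs without extra dependencies, and it relies on a deliberately simple heuristic (an assumption, not a general solution): keep the text of `<p>` elements and drop anything inside `script`, `style`, `nav`, `header`, `footer`, `aside`, or `form`. Real news sites vary widely, so a production system would need more robust boilerplate removal.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# Tags whose contents are treated as boilerplate (an assumption; sites vary).
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "form"}


class ArticleTextExtractor(HTMLParser):
    """Collect text from <p> elements, ignoring anything inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0        # > 0 while inside a boilerplate element
        self._in_paragraph = False
        self._current = []          # text fragments of the open <p>
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "p" and self._skip_depth == 0:
            self._in_paragraph = True
            self._current = []

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag == "p" and self._in_paragraph:
            # Collapse runs of whitespace and newlines into single spaces.
            text = " ".join("".join(self._current).split())
            if text:
                self.paragraphs.append(text)
            self._in_paragraph = False

    def handle_data(self, data):
        if self._in_paragraph and self._skip_depth == 0:
            self._current.append(data)


def extract_article_text(html: str) -> str:
    """Return the article's paragraphs separated by blank lines."""
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n\n".join(parser.paragraphs)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it using the charset the server declares."""
    # Some sites reject requests that carry no User-Agent header.
    req = Request(url, headers={"User-Agent": "news-summarizer-sketch/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

Calling `extract_article_text(fetch_html(url))` yields plain paragraphs separated by blank lines, ready for the summarization step.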

Leveraging LangChain for Efficiency and Structure

The LLM tooling ecosystem offers many libraries for streamlining application development, and LangChain is among the most widely used. It is a framework that helps manage interactions with LLMs, chain together multiple calls, and connect them to other data sources. For our summarizer, LangChain is especially useful for handling long articles. Instead of manually splitting the text, making multiple API calls, and then combining the results, we can use a pre-built chain like `MapReduceDocumentsChain`. This chain “maps” a summarization prompt over each chunk of the article and then “reduces” the individual summaries into a final, coherent summary. This map-reduce approach is a common, scalable pattern for summarizing documents longer than a model’s context window.
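The map-reduce pattern itself is short enough to write out directly. The sketch below is a plain-Python illustration, not LangChain’s implementation: `llm` is any hypothetical function that sends a prompt and returns the model’s reply, the prompt wording is an assumption, and chunk size is measured in characters rather than tokens for simplicity. It also omits the recursive “collapse” step that production chains use when the partial summaries themselves exceed the context window.

```python
from typing import Callable, List

# Hypothetical model call: takes a prompt, returns the model's text reply.
LLM = Callable[[str], str]

MAP_PROMPT = "Summarize the following text from a news article in English:\n\n{chunk}"
REDUCE_PROMPT = (
    "Combine these partial summaries of one news article into a single, "
    "coherent English summary:\n\n{summaries}"
)


def split_into_chunks(text: str, max_chars: int = 8000) -> List[str]:
    """Greedily pack paragraphs into chunks of at most `max_chars` characters.

    Characters stand in for tokens; a real system would measure size with the
    model's tokenizer. Paragraphs longer than `max_chars` are hard-split.
    """
    chunks, current = [], ""
    for para in text.split("\n\n"):
        para = para.strip()
        while len(para) > max_chars:  # hard-split an oversized paragraph
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def map_reduce_summarize(text: str, llm: LLM, max_chars: int = 8000) -> str:
    chunks = split_into_chunks(text, max_chars)
    if not chunks:
        return ""
    if len(chunks) == 1:
        return llm(MAP_PROMPT.format(chunk=chunks[0]))  # short article: one call
    # Map: summarize each chunk independently (these calls could run in parallel).
    partials = [llm(MAP_PROMPT.format(chunk=c)) for c in chunks]
    # Reduce: merge the partial summaries into one final summary.
    return llm(REDUCE_PROMPT.format(summaries="\n\n".join(partials)))
```

In classic LangChain releases, `load_summarize_chain(llm, chain_type="map_reduce")` from `langchain.chains.summarize` assembles an equivalent chain; LangChain’s APIs have changed across versions, so check the documentation for the one you use.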

Prompt Engineering for High-Quality Summaries

The quality of your output depends heavily on the quality of your prompt. The prompt is where you guide the model to perform the specific task you need. A well-crafted prompt for a multilingual summarizer should be explicit and structured.

Example Prompt Template:

You are a professional news analyst tasked with providing multilingual briefings. Your summaries must be objective, concise, and based strictly on the information within the provided text.

For the article below:

1. Provide a 3-bullet point summary in English, highlighting the key findings.

2. Provide a single-paragraph comprehensive summary in French.

ARTICLE:

{article_text}

This “role-playing” technique, combined with clear, multi-part instructions, tends to noticeably improve the reliability and formatting of the model’s response.
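With chat-style APIs such as OpenAI’s Chat Completions, the role instruction fits naturally in a `system` message and the task plus article in a `user` message. The sketch below splits the example template that way; the split and the constant names are our own design choice, not a requirement of the API.

```python
from typing import Dict, List

SYSTEM_PROMPT = (
    "You are a professional news analyst tasked with providing multilingual "
    "briefings. Your summaries must be objective, concise, and based strictly "
    "on the information within the provided text."
)

USER_TEMPLATE = (
    "For the article below:\n\n"
    "1. Provide a 3-bullet point summary in English, highlighting the key findings.\n\n"
    "2. Provide a single-paragraph comprehensive summary in French.\n\n"
    "ARTICLE:\n\n{article_text}"
)


def build_messages(article_text: str) -> List[Dict[str, str]]:
    """Build a system + user message list for a chat-style completion API."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_TEMPLATE.format(article_text=article_text)},
    ]
```

With the official `openai` Python package (v1 or later), the messages can be sent with `OpenAI().chat.completions.create(model="gpt-4", messages=build_messages(text))`, and the reply read from `response.choices[0].message.content`. Because `str.format` only parses the template, braces inside the article text are inserted literally.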

Beyond Basic Summarization: Advanced Features and Applications

A basic summarizer is a powerful tool, but its real value is unlocked when scaled and tailored for specific, real-world use cases. This involves addressing technical challenges like latency and throughput, and considering advanced techniques like fine-tuning for domain-specific accuracy.

Handling Large Volumes and Real-Time Feeds

To move from a simple script to a production-grade service, you must plan for scale. Instead of processing articles one by one, issue asynchronous API calls so that multiple requests run in parallel (within the API’s rate limits), which drastically reduces overall processing time. To manage costs and latency, a caching layer (e.g., Redis) is essential: it prevents the system from re-summarizing the same article when it is requested multiple times. For deployment, consider serverless platforms like AWS Lambda or Google Cloud Functions. They scale automatically with demand and are cost-effective because you pay only for the compute time you use, which makes them well suited to applications with variable traffic.
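The concurrency and caching advice can be sketched with `asyncio` from the standard library. In this illustration, `summarize` is a hypothetical coroutine that calls the model (for example through the `openai` package’s `AsyncOpenAI` client), a semaphore caps in-flight requests to help stay under rate limits, and an in-process dictionary keyed by a SHA-256 hash of the article text stands in for Redis.

```python
import asyncio
import hashlib
from typing import Awaitable, Callable, Dict, List

# Hypothetical async model call: article text in, summary out.
AsyncLLM = Callable[[str], Awaitable[str]]


class CachedSummarizer:
    """Summarize articles concurrently (at most `max_concurrency` at a time)
    and reuse results for article text that was already seen.

    The dict stands in for a shared cache such as Redis; it is per-process
    and unbounded. Create instances inside a running event loop (e.g. in the
    coroutine passed to asyncio.run) for compatibility with older Pythons.
    """

    def __init__(self, summarize: AsyncLLM, max_concurrency: int = 5) -> None:
        self._summarize = summarize
        self._semaphore = asyncio.Semaphore(max_concurrency)
        self._cache: Dict[str, "asyncio.Task[str]"] = {}

    @staticmethod
    def _key(article: str) -> str:
        # Identical article text maps to the same cache entry.
        return hashlib.sha256(article.encode("utf-8")).hexdigest()

    async def _limited_call(self, article: str) -> str:
        async with self._semaphore:
            return await self._summarize(article)

    async def summarize(self, article: str) -> str:
        key = self._key(article)
        task = self._cache.get(key)
        if task is None:
            # Cache the task itself so concurrent requests for the same article
            # share one API call. Note: a failed task also stays cached here;
            # production code should evict it.
            task = asyncio.ensure_future(self._limited_call(article))
            self._cache[key] = task
        return await task

    async def summarize_many(self, articles: List[str]) -> List[str]:
        # gather() returns results in the same order as `articles`.
        return await asyncio.gather(*(self.summarize(a) for a in articles))
```

Caching the task rather than only the finished string means two simultaneous requests for the same article share a single API call. Replacing the dictionary with Redis would let the cache survive restarts and be shared across serverless instances, at the cost of serializing results.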

Fine-Tuning for Domain-Specific Summaries

While prompt engineering is powerful, some applications demand specialized knowledge. This is where fine-tuning comes in: you can adapt a base model like GPT-3.5 on a curated dataset to improve its performance on a niche task. For example, a financial-analysis firm could fine-tune a model on thousands of earnings reports paired with their corresponding summaries. The resulting model would learn the specific jargon, key metrics (like P/E ratios or EBITDA), and summary style that financial analysts prefer. Similarly, a legal-tech service could fine-tune a model on case law to produce more accurate legal summaries. The result is a custom model whose behavior is difficult to replicate with prompting alone, giving its owner a competitive advantage.
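For OpenAI’s fine-tuning of chat models such as GPT-3.5 Turbo, training data is a JSONL file in which each line holds a `messages` list of system, user, and assistant turns. The helper below writes (document, summary) pairs in that shape. The function name and the finance-flavored system prompt are hypothetical, and the pairs would come from your own curated dataset.

```python
import json
from typing import Iterable, Tuple

# Hypothetical system prompt for a finance-specific summarizer.
FINANCE_SYSTEM_PROMPT = "You summarize earnings reports for financial analysts."


def to_finetune_jsonl(pairs: Iterable[Tuple[str, str]], path: str,
                      system_prompt: str = FINANCE_SYSTEM_PROMPT) -> int:
    """Write (document, reference_summary) pairs as chat-format JSONL.

    Each line is one training example: a system message, the source document
    as the user turn, and the desired summary as the assistant turn.
    Returns the number of examples written.
    """
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for document, summary in pairs:
            example = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": document},
                    {"role": "assistant", "content": summary},
                ]
            }
            # ensure_ascii=False keeps non-English text readable in the file.
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count
```

With the `openai` package, the file can then be uploaded via `client.files.create(file=open(path, "rb"), purpose="fine-tune")` and a job started with `client.fine_tuning.jobs.create(training_file=uploaded.id, model="gpt-3.5-turbo")`, where `uploaded` is the object returned by the upload. Using the same system prompt at inference time as in training keeps the fine-tuned model’s inputs consistent with what it learned from.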

Real-World Use Cases Across Industries

The applications of this technology are vast and transformative:

- Market Intelligence: A corporation can monitor news about its competitors across the globe, receiving daily briefings in English regardless of the source language.

- Content Creation: Media outlets can quickly repurpose international news for local audiences, generating summaries in multiple languages to increase engagement.

- Healthcare Research: Scientists can keep up with medical research papers published in different languages, which can accelerate innovation.

- Humanitarian Aid: NGOs can monitor reports from crisis zones in local languages to gain a faster and more accurate understanding of the situation on the ground.

Navigating Challenges and Embracing the Future

Building with powerful AI models is not without its challenges. Responsible development requires a keen awareness of potential pitfalls, a commitment to best practices, and a forward-looking perspective on where the technology is heading.

Common Pitfalls and Best Practices

Developers must navigate several key challenges that are central to discussions of AI ethics and safety.

- Hallucinations and Factual Inaccuracy: Models can occasionally invent facts or misinterpret the source text.

Best Practice: Ground the model firmly by including instructions in your prompt like, “Summarize *only* using information from the provided text and do not add any external information.” For critical applications, a human-in-the-loop review process is recommended.

- Bias and Nuance: LLMs can inadvertently perpetuate biases present in their training data or in the source articles, which makes bias and fairness a central concern.

Best Practice: Be aware of this limitation, especially when summarizing sensitive social or political topics. Implement content moderation filters and give users a link to the original source for full context.

- Cost Management: API calls, especially to advanced models like GPT-4, can become expensive at scale.

Best Practice: Use the most cost-effective model that meets your quality bar (e.g., GPT-3.5 Turbo for less critical tasks). Implement strict caching, monitor your token usage in the OpenAI dashboard, and set budget alerts.
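Prompt instructions reduce hallucinations but do not eliminate them, so a cheap automated check can help decide which summaries a human should review. The sketch below is one such heuristic of our own, not a standard tool: it flags any number in the summary that never appears in the source. It catches only numeric errors, and it ignores `.` and `,` when comparing so that “4,5” in a French source matches “4.5” in an English summary, at the cost of also treating “15” and “1.5” as equal.

```python
import re
from typing import List, Set

# Integers and decimals such as 12, 3.5, 1,200 or 4,5 (comma as decimal mark).
NUMBER_RE = re.compile(r"\d+(?:[.,]\d+)*")


def _strip_separators(number: str) -> str:
    # Ignore locale-specific separators when comparing numbers.
    return re.sub(r"[.,]", "", number)


def ungrounded_numbers(summary: str, source: str) -> List[str]:
    """Return numbers that appear in `summary` but nowhere in `source`.

    Any hit is a candidate hallucination to route to human review; an empty
    result does not prove the summary is accurate.
    """
    source_numbers: Set[str] = {_strip_separators(m) for m in NUMBER_RE.findall(source)}
    flagged: List[str] = []
    for match in NUMBER_RE.findall(summary):
        if _strip_separators(match) not in source_numbers and match not in flagged:
            flagged.append(match)
    return flagged


source = "Le chiffre d'affaires a progressé de 4,5 % en 2023, avec 320 employés."
summary = "Revenue grew 4.5% in 2023 and the company hired 350 staff."
print(ungrounded_numbers(summary, source))
```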

The Road Ahead: GPT-5 and Multimodal News

The field is evolving rapidly. Successive model generations such as GPT-5 promise ever more capable and efficient models. The most exciting frontier, however, is multimodality. Vision-capable models already accept images alongside text, pointing to systems that can understand not just text but also images, charts, and even video within a news report. Imagine an application that could “watch” a news broadcast in another language and produce a text summary with key visual snapshots included. Furthermore, the rise of LLM-based agents suggests a future where autonomous agents proactively monitor global news feeds based on user preferences and deliver personalized, multilingual intelligence briefings automatically. This convergence of capabilities could redefine how we consume and interact with global information.

Conclusion

Building a multilingual news summarizer is no longer a futuristic concept but a tangible and immensely valuable application of modern AI. By leveraging the cross-lingual capabilities of GPT models like GPT-3.5 and GPT-4, and employing development frameworks like LangChain, developers can create tools that break down language barriers and deliver actionable insights from global sources. The key to success lies in a thoughtful architecture, meticulous prompt engineering, and a clear-eyed approach to the challenges of cost, accuracy, and ethical implementation. As the underlying technology continues to advance, such applications have considerable potential to foster a more informed, connected, and understanding world. The journey from global headlines to localized insights is now only a few API calls away.