Deconstructing the Brain of AI: A Comprehensive Deep Dive into GPT Architecture and Future Innovations

Introduction: The Engine Behind the Revolution

Large Language Models (LLMs) have reshaped the landscape of artificial intelligence, and the Generative Pre-trained Transformer (GPT) family stands at the forefront of that shift. The magic of tools like ChatGPT lies not only in their training data but in the elegance and scalability of the underlying architecture. Understanding the nuts and bolts of how these models function is no longer just an academic exercise; it is a necessity for developers, businesses, and enthusiasts trying to keep up with the rapid pace of OpenAI releases.

At its core, the GPT architecture represents a shift from rule-based computing to probabilistic generation. It is a system designed to predict the next token in a sequence, yet through massive scaling and architectural refinements it has shown emergent behaviors that resemble reasoning and creativity. From the initial release of GPT-1 to the multimodal capabilities of GPT-4, the evolution of this architecture offers a roadmap for the future of machine learning. This article examines the structural components of GPT and explores how recent architectural advances are driving efficiency, capability, and new applications across industries.

Section 1: The Core Anatomy of a Transformer

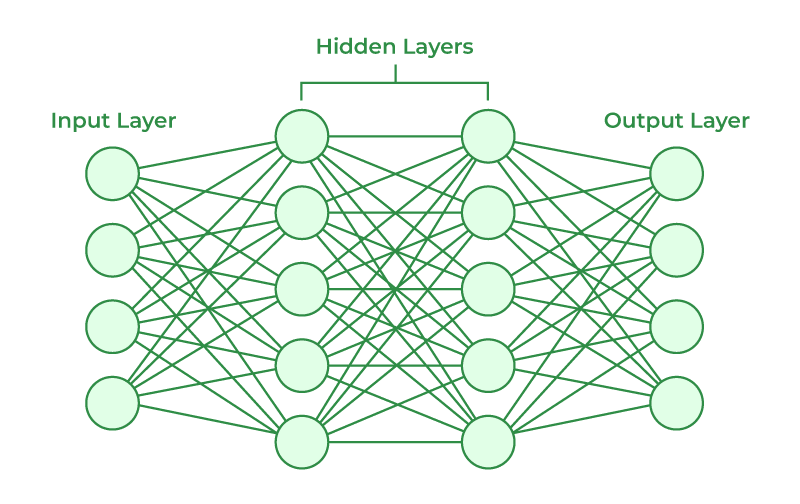

To follow current GPT developments, one must first grasp the foundational elements of a Transformer model. The “T” in GPT stands for Transformer, a neural network architecture introduced by Google researchers in 2017 that relies entirely on attention mechanisms rather than recurrent processing. GPT specifically uses a “decoder-only” variant, in which each token attends only to the tokens before it, making it well suited to generative tasks.

Tokenization and Embeddings: From Text to Math

The journey of a prompt begins with tokenization. A model’s efficiency depends in part on how well its tokenizer breaks down text. A tokenizer converts raw text into tokens, fragments of words that are each mapped to an integer ID the model can process. The tokenizer used by GPT-4 is designed to be more efficient than its predecessors, handling multiple languages and code syntaxes with fewer tokens.
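To make the idea concrete, here is a minimal sketch of splitting text into sub-word pieces and mapping them to IDs. It uses greedy longest-match over a tiny hand-written vocabulary, which is an assumption made for illustration; production GPT tokenizers use byte-pair encoding with a learned vocabulary, and the names `VOCAB` and `tokenize` are hypothetical.

```python
# Toy vocabulary: hypothetical, chosen only for illustration.
VOCAB = {"token": 0, "ization": 1, "izer": 2, " ": 3, "s": 4,
         "un": 5, "believ": 6, "able": 7}


def tokenize(text, vocab=VOCAB):
    """Greedy longest-match segmentation of text into vocabulary IDs."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest candidate piece first, then shrink it.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry covers {text[i]!r}")
    return ids


print(tokenize("tokenization"))   # two pieces: "token" + "ization"
print(tokenize("unbelievable"))   # three pieces: "un" + "believ" + "able"
```

A vocabulary that covers common fragments with single entries yields fewer tokens per sentence, which is the sense in which one tokenizer is "more efficient" than another.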

Once tokenized, these inputs are converted into embeddings, high-dimensional vectors that represent the semantic meaning of each token. However, self-attention on its own is blind to word order: it treats its input as an unordered set. This is where positional encodings come into play. By adding a positional vector to each embedding, the model retains information about the sequence, distinguishing between “The dog bit the man” and “The man bit the dog.”
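The sketch below shows one way the addition can work. It assumes the sinusoidal scheme from the original Transformer paper (GPT-2 style models learn their position vectors instead), an 8-dimensional embedding, and a hypothetical four-word vocabulary with random embedding values.

```python
import math
import random

D_MODEL = 8  # tiny embedding width, for illustration only


def positional_encoding(pos, d_model=D_MODEL):
    """Sinusoidal position vector: sin on even dims, cos on odd dims."""
    vec = []
    for i in range(d_model):
        angle = pos / (10000 ** ((i - i % 2) / d_model))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec


def embed(token_ids, table):
    """Look up each token's vector and add the vector for its position."""
    return [
        [e + p for e, p in zip(table[tok], positional_encoding(pos))]
        for pos, tok in enumerate(token_ids)
    ]


rng = random.Random(0)
# Hypothetical vocabulary: the=0, dog=1, bit=2, man=3.
table = [[rng.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(4)]

dog_bit_man = embed([0, 1, 2, 0, 3], table)  # "the dog bit the man"
man_bit_dog = embed([0, 3, 2, 0, 1], table)  # "the man bit the dog"

# "dog" gets a different input vector at position 1 than at position 4.
print(dog_bit_man[1] != man_bit_dog[4])
```

Both sentences contain the same tokens, but because each token's vector depends on where it sits, the two inputs reach the attention layers as different sequences.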

The Power of Self-Attention

The centerpiece of the GPT architecture remains the multi-head self-attention mechanism. This component allows the model to weigh the importance of different words in a sentence relative to one another. For instance, in the sentence “The animal didn’t cross the street because it was too tired,” the model must understand that “it” refers to the “animal” and not the “street.”

Attention mechanisms calculate a score for every pair of tokens in the context window. Increasing the context window (the amount of text the model can consider at once) is therefore computationally expensive: compute and memory for the score matrix grow quadratically with sequence length. This is why optimizations such as FlashAttention, which computes exact attention without materializing the full score matrix in GPU memory, are critical for enabling the massive context windows seen in newer models.
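A single attention head can be sketched in a few lines. This is a simplified illustration, not a production kernel: it assumes one head, no learned projection matrices, and a causal mask (each position sees only itself and earlier positions, as in decoder-only models). The helper names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q, k, v):
    """Scaled dot-product attention. q, k, v are lists of n vectors."""
    d = len(q[0])
    outputs, weights = [], []
    for i in range(len(q)):
        # Position i scores itself and every earlier position j <= i.
        scores = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        out = [sum(w[j] * v[j][c] for j in range(i + 1))
               for c in range(len(v[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights


def full_score_count(n_tokens):
    """Entries in the unmasked n x n score matrix."""
    return n_tokens * n_tokens


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(x, x, x)
print(weights[2])  # how much the third token attends to tokens 1, 2, 3

# Doubling the context length quadruples the number of pairwise scores.
print(full_score_count(8192) / full_score_count(4096))
```

The last line is the quadratic cost in miniature: every doubling of the context window multiplies the score matrix by four.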

Feed-Forward Networks and Layer Normalization

Following the attention layer, the data passes through a Feed-Forward Network (FFN). If attention is where the model gathers context, the FFN is where it “thinks” about that context. This layer processes each position independently, with the same weights at every position. To keep training stable, Layer Normalization is applied before or after these sub-layers. Residual connections (or skip connections) let gradients flow through the network more easily during backpropagation, mitigating the “vanishing gradient” problem that plagued earlier deep learning architectures.
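The following sketch puts these three pieces together for a single position. It assumes the "pre-LN" ordering (normalize, transform, then add the input back), a GELU activation, and layer normalization without learned scale and shift; the function names and weight shapes are hypothetical.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def gelu(x):
    """tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(
        math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def matvec(w, x):
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def ffn_sublayer(x, w_in, w_out):
    """Pre-LN feed-forward sub-layer with a residual connection."""
    h = [gelu(v) for v in matvec(w_in, layer_norm(x))]  # expand + activate
    y = matvec(w_out, h)                                # project back
    return [xi + yi for xi, yi in zip(x, y)]            # add the input back


x = [1.0, 2.0, 3.0, 4.0]
w_in = [[0.1 * (r + c) for c in range(4)] for r in range(8)]   # 4 -> 8
w_out = [[0.0] * 8 for _ in range(4)]                          # 8 -> 4, zeros
print(ffn_sublayer(x, w_in, w_out))  # zero weights: the input passes through
```

With the output weights set to zero the sub-layer returns its input unchanged, which illustrates why residual connections help: the identity path is always available, so the signal and its gradient are not forced through every transformation.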

Section 2: Evolution, Scaling, and The Mixture of Experts

The transition from GPT-3.5 to the current state of the art involves more than just making the models bigger; it involves making them smarter and more efficient. The architecture has evolved to handle complex reasoning, multimodal inputs, and massive scale.

The Shift to Mixture of Experts (MoE)

One of the most discussed architectural shifts, widely reported for GPT-4 but not officially confirmed and still a matter of speculation for GPT-5, is the adoption of Mixture of Experts (MoE) architectures. In a dense model (like GPT-3), every parameter is used for every token, so compute grows in step with model size. In an MoE architecture, the feed-forward layers are split into several smaller “expert” networks, and a gating mechanism (the router) selects which few experts process each token.

This approach has a dramatic effect on inference. It allows a model to have a massive total parameter count (potentially trillions) while using only a fraction of them (billions) for each token during the inference pass. The result is lower latency and higher throughput than a dense model of the same total size. It essentially decouples total model capacity from per-token compute, allowing for more capable models that are cheaper to run, although every expert must still be held in memory.
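A gating step of this kind can be sketched as follows. The example assumes top-2 routing with softmax renormalization over the selected experts, which is one common design and not a description of any specific OpenAI model; the experts here are hypothetical stand-in functions rather than real networks.

```python
import math


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda e: router_logits[e], reverse=True)[:k]
    m = max(router_logits[e] for e in chosen)
    exps = {e: math.exp(router_logits[e] - m) for e in chosen}
    total = sum(exps.values())
    return {e: exps[e] / total for e in chosen}


def moe_layer(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts; unselected experts never run."""
    gates = top_k_route(router_logits, k)
    out = [0.0] * len(x)
    for e, g in gates.items():
        y = experts[e](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out


def active_fraction(n_experts, k):
    """Share of expert parameters used per token, for equal-sized experts."""
    return k / n_experts


# Eight stand-in experts: expert s simply scales its input by s.
experts = [lambda x, s=s: [s * v for v in x] for s in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.0]

print(top_k_route(logits))                    # experts 1 and 4 are selected
print(moe_layer([1.0, 2.0], experts, logits))
print(active_fraction(8, 2))                  # a quarter of expert weights
```

Only two of the eight experts execute for this token, so per-token compute tracks the active experts rather than the total parameter count.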

Multimodality: Vision and Audio Integration

The architecture is no longer confined to text. The transformer has been adapted to process images and audio as well. By splitting images into patches that are embedded like word tokens, the model can perform self-attention across visual data. This unified architecture allows for seamless transitions between describing an image, writing code based on a diagram, or analyzing audio patterns.
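Splitting an image into patches is simple to illustrate. The sketch below follows the general Vision Transformer recipe of cutting a grid into fixed-size tiles and flattening each one; how OpenAI's models encode images internally has not been published in detail, so treat this as an assumption-laden illustration with a hypothetical `patchify` helper.

```python
def patchify(image, patch):
    """Split an H x W grid (list of rows) into flattened patch x patch tiles."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tile = [image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            patches.append(tile)
    return patches


# A 4 x 4 toy "image" whose pixel values are just their row-major index.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = patchify(image, 2)

print(len(patches))   # number of patch "tokens"
print(patches[0])     # top-left tile, flattened

# Sequence length for a 224 x 224 image cut into 16 x 16 patches.
print((224 // 16) ** 2)
```

Each flattened tile would then be projected to the model's embedding width and given a positional vector, after which it can be attended over like any text token.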

This evolution is critical for real-world applications. In healthcare, for example, multimodal models can analyze X-rays while simultaneously processing patient history text. In education, a model can grade a handwritten math test by “seeing” the image and “reading” the logic simultaneously.

Reinforcement Learning from Human Feedback (RLHF)

While the base architecture predicts the next token, it is the alignment process that makes it a helpful assistant. A central technique is RLHF: a reward model is trained on human preference comparisons and then used to fine-tune the GPT policy. This step is central to safety, bias, and fairness work, making the model more likely to refuse harmful instructions and maintain a helpful tone. Recent developments such as “Constitutional AI” and other automated alignment methods push this further, reducing the reliance on large-scale human labeling.
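The reward-model step is often described with a pairwise preference loss: given two responses to the same prompt, the model is penalized when it scores the human-preferred one lower. The sketch below assumes that commonly cited formulation, loss = -log(sigmoid(r_chosen - r_rejected)); it is not taken from any particular production system, and the function name is hypothetical.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    diff = reward_chosen - reward_rejected
    # log(1 + exp(-diff)) equals -log(sigmoid(diff)); branch for stability.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# The loss is small when the preferred answer already scores higher...
print(preference_loss(2.0, 0.0))
# ...and large when the reward model ranks the pair the wrong way round.
print(preference_loss(0.0, 2.0))
```

Minimizing this loss over many human comparisons pushes the reward model to rank responses the way annotators do; the policy is then tuned to produce responses that score well under it.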

Section 3: Optimization, Efficiency, and Deployment

As the demand for AI grows, the focus has shifted from “can we build it?” to “can we run it efficiently?” This section covers the engineering marvels that allow these massive architectures to function in the real world.

Compression and Quantization

Running a state-of-the-art model requires immense VRAM, which is why compression and quantization dominate the technical discourse. Quantization reduces the precision of the model’s parameters from 16-bit floating-point numbers to 8-bit or even 4-bit integers. Perhaps surprisingly, benchmarks often show that 4-bit quantization causes only a small loss in quality while cutting weight memory to roughly a quarter of the 16-bit footprint.
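The memory arithmetic and the basic mechanism can both be shown briefly. The sketch assumes a hypothetical 7-billion-parameter model and the simplest symmetric "absmax" scheme with one shared scale; practical 4-bit methods use per-group scales and other refinements that are omitted here.

```python
def weight_memory_gb(n_params, bits):
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits / 8 / 1e9


def quantize_absmax(weights, bits=8):
    """Symmetric quantization: floats to signed integers, one shared scale."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8-bit, 7 for 4-bit
    scale = (max(abs(w) for w in weights) or 1.0) / qmax
    q = [round(w / scale) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


# Hypothetical 7B-parameter model at three precisions.
for bits in (16, 8, 4):
    print(bits, "bits:", weight_memory_gb(7e9, bits), "GB")

weights = [0.42, -1.30, 0.07, 0.95]
q, scale = quantize_absmax(weights, bits=8)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error
```

Going from 16 bits to 4 bits per weight divides weight memory by four; the rounding error per weight is bounded by half the scale, which is why coarser grids cost more accuracy.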

Furthermore, distillation uses a massive “teacher” model to train a smaller “student” model. The student learns to mimic the teacher’s outputs, resulting in a compact model that retains much of the larger model’s capability. This is vital for edge deployment, enabling powerful AI to run on local devices such as laptops and potentially smartphones, reducing reliance on the cloud.
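One common way to express "mimic the teacher" is a KL-divergence loss between the teacher's and the student's next-token distributions, softened by a temperature. The sketch below assumes that classic formulation and hypothetical three-token logits; real pipelines typically combine it with the ordinary next-token loss.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 1.0, 0.1]
print(distillation_loss(teacher, [2.0, 1.0, 0.1]))  # identical: zero loss
print(distillation_loss(teacher, [0.1, 1.0, 2.0]))  # mismatched: positive
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative preferences among unlikely tokens instead of only its top choice.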

Hardware and Inference Engines

The architecture is only as good as the hardware it runs on. Specialized chips (TPUs, LPUs, and next-gen GPUs) are being designed specifically for transformer workloads. Concurrently, inference engines like vLLM and TensorRT-LLM optimize how memory for the attention key-value cache is allocated (vLLM’s PagedAttention is the best-known example) to maximize the number of concurrent users a single GPU can handle.
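The core idea behind paged allocation can be illustrated with a toy block allocator: instead of reserving one large contiguous region per request, each sequence is given small fixed-size blocks on demand. This is a loose sketch of the concept only, not vLLM's implementation; the class and method names are hypothetical.

```python
class PagedKVCache:
    """Toy block allocator: each sequence owns a list of fixed-size blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}   # sequence id -> list of physical block ids
        self.lengths = {}        # sequence id -> tokens stored so far

    def append_token(self, seq_id):
        """Reserve room for one more token; take a new block only if needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:  # owned blocks are full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def release(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=16)
for _ in range(17):
    cache.append_token("request-a")   # 17 tokens need two 16-token blocks
cache.append_token("request-b")       # a short request needs only one
print(len(cache.free_blocks))         # one block left for other requests
cache.release("request-a")
print(len(cache.free_blocks))         # request-a's blocks are reusable
```

Because memory is handed out in small blocks as sequences grow, short requests do not strand large reserved regions, which is what lets more requests share one GPU.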

The Rise of Agents and Tool Use

Architecturally, models are being trained to recognize when they need outside help. Agent and plugin systems rely on models fine-tuned to emit special tokens or structured output that the surrounding application interprets as an API call. This transforms GPT from a passive text generator into an active orchestrator. In finance, an agent might query a live stock database before generating advice. In legal tech, it might search a proprietary case law database. This capability bridges the gap between static training data and dynamic real-world information.
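On the application side, the orchestration loop amounts to checking whether the model's output is a tool request and, if so, running it. The sketch below assumes a hypothetical JSON convention (`{"tool": ..., "arguments": ...}`) and a hypothetical `get_stock_price` tool returning a placeholder value; real APIs define their own schemas.

```python
import json


def get_stock_price(symbol):
    """Hypothetical tool; a real one would query a live data source."""
    return {"symbol": symbol, "price": 123.45}  # placeholder, not real data


TOOLS = {"get_stock_price": get_stock_price}


def handle_model_output(text):
    """Run a tool if the output is a JSON tool call; else pass text through."""
    try:
        call = json.loads(text)
    except json.JSONDecodeError:
        return text
    if not isinstance(call, dict):
        return text
    name = call.get("tool")
    if not isinstance(name, str) or name not in TOOLS:
        return text
    result = TOOLS[name](**call.get("arguments", {}))
    return json.dumps(result)


print(handle_model_output("The market closed higher today."))
print(handle_model_output(
    '{"tool": "get_stock_price", "arguments": {"symbol": "ACME"}}'))
```

In a full agent loop the tool result would be appended to the conversation and the model called again, so that its final answer is grounded in the fetched data.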

Section 4: Implications, Challenges, and Best Practices

The rapid advancement of GPT architecture brings both immense opportunities and significant challenges. For organizations looking to build on the GPT ecosystem, understanding the implications is key.

The Buy vs. Build Dilemma

With the rise of open-source models, companies face a choice: use proprietary GPT APIs or host open-source competitors (like Llama or Mistral). Proprietary models generally offer stronger reasoning capabilities and easier deployment, while open-source models offer data privacy and control. OpenAI’s fine-tuning and custom model offerings narrow this gap by allowing enterprises to adapt proprietary models to their private data.

Best Practices for Implementation

- Prompt Engineering vs. Fine-Tuning: Before investing in fine-tuning workflows, maximize performance with prompt engineering and few-shot prompting. Fine-tuning is best reserved for teaching the model a specific style or format, not new knowledge.

- RAG (Retrieval-Augmented Generation): To combat hallucinations, implement RAG. This involves retrieving relevant documents and feeding them into the context window. This is often more effective than fine-tuning for factual accuracy.

- Monitor Costs: Keep an eye on efficiency. Token usage can spike quickly, so use caching strategies for frequent queries.
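The retrieval and caching practices above can be sketched together. This example assumes a hypothetical three-document knowledge base and uses word-overlap cosine similarity as a stand-in for a real embedding model; `functools.lru_cache` plays the role of the query cache.

```python
import math
from collections import Counter
from functools import lru_cache

DOCS = [  # hypothetical knowledge base
    "Refunds are processed within five business days.",
    "Support is available by email on weekdays.",
    "Annual plans include a discount over monthly billing.",
]


def bag_of_words(text):
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


@lru_cache(maxsize=256)   # repeated queries skip the retrieval work
def retrieve(query, k=1):
    q = bag_of_words(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, bag_of_words(d)),
                    reverse=True)
    return tuple(ranked[:k])


def build_prompt(query):
    """Place the retrieved text in the context ahead of the question."""
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How long do refunds take?"))
```

The prompt that reaches the model now carries the relevant source text, so the answer can be grounded in it; the cache ensures that a popular question is only looked up once.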

Ethical and Regulatory Considerations

As the architecture becomes more powerful, regulation becomes more relevant. Governments are scrutinizing privacy practices and the potential for misuse. Developers must prioritize ethics, ensuring that applications built on these architectures have guardrails against bias and toxicity. The “black box” nature of deep neural networks makes explainability a significant hurdle in sectors like healthcare and finance.

Conclusion: The Future of GPT Architecture

The trajectory of GPT points toward a world where the lines between architecture, data, and application blur. We are moving away from monolithic text processors toward dynamic, multimodal agents capable of reasoning, planning, and acting. Innovations from Mixture of Experts to 4-bit quantization are democratizing access to advanced AI, allowing it to run faster, cheaper, and on more devices.

As we look toward GPT-5 and beyond, the focus will likely shift from raw parameter count to data efficiency, reasoning depth, and agentic autonomy. Whether your interest is marketing, gaming, or creative work, the architectural advancements discussed here are the engine driving the transformation. Staying informed about these technical underpinnings is essential for anyone wishing to harness the full potential of the AI revolution.