The Democratization of Vision: How Efficient Multimodal Models Are Redefining the AI Landscape

Introduction: The Shift Toward Efficient Multimodality

The artificial intelligence landscape is currently undergoing a seismic shift, moving away from the “bigger is better” philosophy that dominated the early days of Large Language Models (LLMs). While the industry was previously captivated by the raw power of massive parameter counts, recent developments have pivoted toward efficiency, accessibility, and, most crucially, multimodality. The latest wave of multimodal model releases suggests a future where models not only process text but are designed from the ground up to understand, analyze, and generate insights from visual data quickly and accurately.

For developers and enterprises, this marks a turning point. The dominance of massive, closed-source models is being challenged by agile, open-license architectures that rival the performance of industry standards like GPT-4o Mini. This evolution is not merely incremental; it represents a fundamental change in model architecture, enabling complex reasoning across text and vision without the prohibitive latency or cost of earlier generations. Following this trajectory, it becomes clear that the next frontier is defined by models powerful enough to reason yet small enough to run efficiently, democratizing access to advanced AI capabilities.

Section 1: The Rise of Open, Efficient Multimodal Architectures

The biggest open-source story right now is the emergence of models that combine high-performance reasoning with permissive licensing (such as Apache 2.0). This trend is disrupting an ecosystem previously dominated by proprietary APIs. These new architectures are designed to bridge the gap between cutting-edge vision capabilities and practical, real-world deployment.

Breaking the Proprietary Barrier

Historically, top-tier multimodal capabilities were locked behind paywalls and closed APIs. Competing labs are now releasing models that offer “GPT-4 class” performance in smaller, more manageable packages. These models rely on training techniques such as careful data curation, along with architectural optimizations, to achieve strong benchmark scores with fewer parameters. By releasing these models under open licenses, the AI community is fostering an ecosystem whose direction is no longer dictated solely by a few major tech giants.

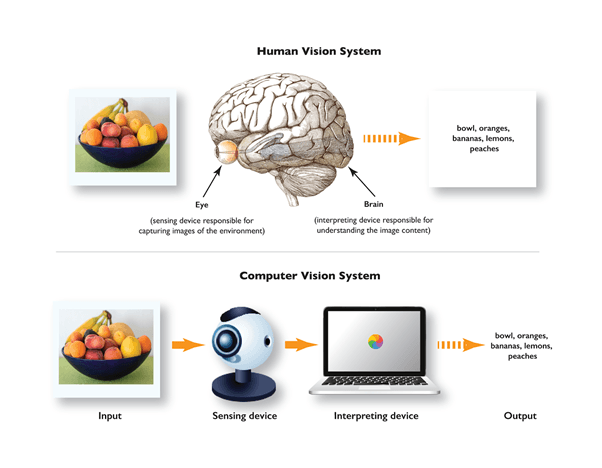

The Convergence of Text and Vision

Multimodality is no longer an add-on; it is a core feature. Recent research suggests that training models on mixed text-and-image datasets simultaneously, rather than stitching together separate vision and language modules, yields better semantic understanding. This allows seamless transitions between analyzing a chart, reading the text within it, and writing code to replicate it. This integration is especially valuable for code-generation models, as developers increasingly rely on AI to interpret UI screenshots and convert them into functional frontend code.

Efficiency vs. Scale

A recurring theme in discussions of scaling is the diminishing returns from simply adding more parameters, and the focus has shifted to efficiency. New models are demonstrating that, with high-quality training data, a model with fewer than 10 billion parameters can outperform older models roughly ten times its size on many benchmarks. Smaller models also mean lower inference latency and higher throughput, which is critical for real-time applications.
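
To make the efficiency argument concrete, the arithmetic below estimates how much memory a model's weights alone occupy at a given precision. It is a rough sketch. It ignores activations, the KV cache, and any separate vision encoder. The 7B and 70B sizes are illustrative and not tied to a specific model.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


# Illustrative sizes only: a ~7B model versus a ~70B model, at 16-bit and 4-bit precision.
for params in (7e9, 70e9):
    for bits in (16, 4):
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

At 16-bit precision a 7B model needs about 14 GB for its weights, versus about 140 GB for a 70B model. That difference decides whether a single consumer GPU is enough.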

Section 2: Technical Deep Dive: Latency, Throughput, and Deployment

To truly understand the impact of these new multimodal models, we must look under the hood. Improvements in latency and throughput are enabling use cases that were previously impractical because large vision models were too slow.

Optimized Inference Engines

The secret sauce behind these high-performing small models often lies in inference optimization. Techniques such as quantization allow these models to run on consumer-grade hardware with minimal loss in accuracy. By reducing the numerical precision of the model’s weights (e.g., from 16-bit to 4-bit), developers can deploy powerful multimodal agents on local machines or edge devices. This connects directly to edge computing, where the goal is to process data locally for speed and privacy.
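
The precision reduction described above can be sketched as a simple symmetric, per-tensor quantizer. It maps floating-point weights to signed 4-bit integers in [-8, 7] and back. This is an illustrative scheme applied to made-up sample weights. Production methods such as GPTQ or AWQ typically use finer-grained (per-group) scales and calibration data to keep accuracy loss small.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization of floats to signed 4-bit integers in [-8, 7]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to 7; guard against an all-zero tensor.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the 4-bit codes."""
    return [v * scale for v in q]


# Made-up sample weights for illustration.
weights = [0.12, -0.53, 0.91, -0.07, 0.33]
q, scale = quantize_int4(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max_abs_error={max_err:.4f}")
```

The rounding error per weight is at most half a quantization step (`scale / 2`). This is why a few outlier weights that inflate the scale hurt accuracy, and why real schemes compute scales over small groups of weights.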

Tokenization and Context Windows

Tokenization is another critical area of innovation. Multimodal models require specialized tokenizers that can efficiently compress visual information into tokens the LLM can process alongside text. The latest models offer extended context windows, allowing them to “see” high-resolution images or multiple documents in a single pass. This capability is essential for detailed document analysis, where the AI must correlate information across different pages of a PDF containing mixed media.
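
Counting visual tokens shows why image resolution eats into the context window. The sketch below assumes a ViT-style encoder that cuts the image into non-overlapping square patches and emits one token per patch. The 14-pixel patch size is an assumption. Many real models also resize images or merge neighboring patches, so actual counts will differ.

```python
import math


def image_token_count(width: int, height: int, patch_size: int = 14) -> int:
    """Tokens from splitting an image into non-overlapping square patches (partial patches count)."""
    return math.ceil(width / patch_size) * math.ceil(height / patch_size)


def fits_in_context(num_images: int, width: int, height: int, text_tokens: int,
                    context_window: int, patch_size: int = 14) -> bool:
    """Check whether the images plus the text prompt fit in the context window."""
    total = num_images * image_token_count(width, height, patch_size) + text_tokens
    return total <= context_window


print(image_token_count(224, 224))                  # low-resolution input
print(image_token_count(896, 896))                  # high-resolution page scan
print(fits_in_context(10, 896, 896, 2_000, 32_768))  # ten pages plus a prompt
```

Under these assumptions one 896×896 page costs 4,096 tokens. Ten such pages plus a 2,000-token prompt overflow a 32,768-token window. This is why token-merging and tiling strategies matter for long documents.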

Distillation and Fine-Tuning

Distillation refers to training smaller “student” models on the outputs of larger “teacher” models. This technique has been instrumental in creating the current generation of compact multimodal powerhouses. Fine-tuning has also become more accessible. Developers can now take a base multimodal model and fine-tune it on specialized datasets, such as medical imaging or legal contracts, creating tools that can outperform generalist models on niche tasks.
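
A common way to train the student is the soft-target loss from the classic knowledge-distillation formulation (Hinton et al.). The method has three steps:

1. Soften both models' logits with a temperature T.
2. Take the KL divergence from the teacher's distribution to the student's.
3. Scale the result by T² to keep gradient magnitudes comparable.

The pure-Python sketch below shows only that term for a single example. In practice it is usually mixed with an ordinary cross-entropy loss on ground-truth labels. The default temperature of 2.0 is an arbitrary choice.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(p_teacher || p_student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


teacher = [1.0, 2.0, 3.0]
print(distillation_loss([1.0, 2.0, 3.0], teacher))  # student matches teacher
print(distillation_loss([3.0, 2.0, 1.0], teacher))  # student disagrees
```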

Hardware Considerations

The relationship between software and hardware is tightening. New inference chips are increasingly being optimized for the transformer architectures these multimodal models use. This synergy helps inference engines deliver real-time responses, a non-negotiable requirement for interactive agents and robotics.

Section 3: Industry Implications and Real-World Applications

The proliferation of efficient, open multimodal models is reshaping many sectors. The ability to process visual and textual data together opens up transformative possibilities across industries.

Healthcare and Medical Imaging

In healthcare, the stakes are high. Efficient multimodal models can be deployed within hospital networks to assist in analyzing X-rays, MRIs, and handwritten patient notes. Cloud-based APIs raise privacy concerns. These open models, by contrast, can be hosted on-premise, which can simplify HIPAA compliance by keeping patient data inside the secure facility while still providing state-of-the-art diagnostic support.

Financial Analysis and Document Processing

Finance is a natural fit for automated document processing. Financial analysts deal with a deluge of PDFs, charts, and spreadsheets. A multimodal model can ingest a screenshot of a complex market trend graph and generate a textual summary or extract the underlying data into CSV format. Provided the extracted values are validated, this capability can substantially reduce manual entry errors and accelerate decision-making.
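
One practical safeguard when extracting chart data is to ask the model for structured output and validate it before writing CSV. The sketch below assumes the model was prompted to return JSON with `columns` and `rows` keys. That schema and the sample figures are hypothetical, not a format any particular model emits by default.

```python
import csv
import io
import json


def chart_json_to_csv(model_output: str) -> str:
    """Convert a model's JSON chart extraction into CSV text, rejecting malformed rows."""
    data = json.loads(model_output)  # raises ValueError (JSONDecodeError) on invalid JSON
    columns = data["columns"]
    rows = data["rows"]
    for i, row in enumerate(rows):
        if len(row) != len(columns):
            raise ValueError(f"row {i} has {len(row)} values, expected {len(columns)}")
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(columns)
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical model output for a quarterly revenue chart.
raw = '{"columns": ["quarter", "revenue_musd"], "rows": [["Q1", 12.5], ["Q2", 14.1]]}'
print(chart_json_to_csv(raw))
```

The shape check catches dropped or merged cells before they reach a spreadsheet. It cannot catch a misread value, so spot-checking against the source chart is still worthwhile.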

Education and Interactive Learning

In education, these models open the door to personalized tutors. Imagine an AI that can “look” at a student’s handwritten math problem, identify exactly where the calculation went wrong, and explain the correction visually and textually. Reduced inference costs make such adaptive learning platforms economically viable.

Marketing and Content Creation

For marketing and content creation, the implications are vast. Marketers can use these tools to analyze competitor ad creatives, automatically generate alt-text, or brainstorm variations of visual campaigns based on historical performance data. AI-assisted creativity is expanding beyond text generation to include visual critique and ideation.

Legal Tech and Compliance

Legal tech benefits from the ability to scan physical documents and contracts. Traditional Optical Character Recognition (OCR) is increasingly being supplemented, and in some workflows replaced, by multimodal LLMs. These models don’t just “read” the text; they also interpret the layout, signatures, and stamps, providing a more holistic understanding of legal evidence.

Section 4: Strategic Recommendations and Future Outlook

While the rise of open multimodal models is exciting, navigating this new landscape requires strategic foresight. Here are key considerations for developers and businesses, weighing the pros and cons.

Choosing the Right Model: Open vs. Closed

The choice between platforms often centers on a trade-off between control and convenience.

- Pros of Open Models: Complete data sovereignty, no per-request API fees, the ability to fine-tune deeply, and freedom from vendor lock-in. This matters for ethics and bias-and-fairness work, since organizations can audit the model’s behavior directly.

- Cons of Open Models: Requires infrastructure management (GPUs) and technical expertise to deploy and optimize.

- Recommendation: For high-volume, privacy-sensitive, or latency-critical applications, adopt efficient open models (such as the latest 3.1 releases). For prototyping or low-volume generalist tasks, proprietary APIs may still be the more convenient option.
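
The “high-volume” criterion can be made concrete with a break-even estimate. A self-hosted deployment has a roughly fixed monthly cost, while API spend grows with token volume. The prices below are hypothetical placeholders. The model also deliberately ignores engineering time, GPU utilization limits, and capacity ceilings.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """API spend scales linearly with token volume."""
    return tokens_per_month / 1e6 * usd_per_million_tokens


def break_even_tokens(self_host_usd_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which API spend equals a fixed self-hosting cost."""
    return self_host_usd_per_month / usd_per_million_tokens * 1e6


# Hypothetical placeholder prices, not quotes from any provider.
SELF_HOST_USD_PER_MONTH = 2_000.0
API_USD_PER_MILLION_TOKENS = 0.50

threshold = break_even_tokens(SELF_HOST_USD_PER_MONTH, API_USD_PER_MILLION_TOKENS)
print(f"Break-even: {threshold / 1e9:.1f}B tokens/month")
for volume in (1e9, 10e9):
    api = monthly_api_cost(volume, API_USD_PER_MILLION_TOKENS)
    cheaper = "API" if api < SELF_HOST_USD_PER_MONTH else "self-hosting"
    print(f"{volume / 1e9:.0f}B tokens: API ${api:,.0f} vs self-host ${SELF_HOST_USD_PER_MONTH:,.0f} -> {cheaper}")
```

With these placeholder numbers the crossover sits at 4 billion tokens per month. Below that volume the API is cheaper on raw compute cost; above it, self-hosting wins.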

The Role of Agents and Automation

Agent development points toward greater autonomy, with multimodal models acting as the “eyes” of software agents. A common pattern is to chain models: a small, fast vision model filters images, and a larger reasoning model acts on the ones that pass. This composite approach balances cost against capability.
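
The cascade pattern can be sketched with plain callables standing in for the two models. Both `cheap_filter` and `expensive_reasoner` are hypothetical placeholders for whatever small vision model and larger reasoning model you deploy. The 0.5 threshold is arbitrary. The point is that the expensive model runs only on inputs the cheap one flags.

```python
from typing import Callable, Dict, List, Tuple


def cascade(
    images: List[Tuple[str, bytes]],
    cheap_filter: Callable[[bytes], float],
    expensive_reasoner: Callable[[bytes], str],
    threshold: float = 0.5,
) -> Dict[str, str]:
    """Score every image with the cheap model; escalate only those at or above threshold."""
    results: Dict[str, str] = {}
    for image_id, data in images:
        if cheap_filter(data) >= threshold:
            results[image_id] = expensive_reasoner(data)
    return results


# Hypothetical stand-ins for real models, for illustration only.
def toy_filter(data: bytes) -> float:
    return 0.9 if b"invoice" in data else 0.1


def toy_reasoner(data: bytes) -> str:
    return f"route to accounts payable ({len(data)} bytes)"


batch = [("img-1", b"invoice scan"), ("img-2", b"cat photo")]
print(cascade(batch, toy_filter, toy_reasoner))
```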

Navigating Regulation and Safety

With great power comes great responsibility. Regulation and safety are becoming increasingly prominent concerns. When deploying open models, developers must implement their own safety guardrails, since there is no API provider’s content filter to rely on. This includes monitoring for hallucinations and ensuring the model does not generate harmful content in response to visual inputs.

Integration and Tooling

Tooling and integrations are evolving rapidly. Frameworks like LangChain and LlamaIndex are adapting to support multimodal inputs natively. Developers should focus on building robust RAG (Retrieval-Augmented Generation) pipelines that can index and retrieve both text and image vectors, creating a truly comprehensive knowledge base.
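
At its core, the retrieval step of a multimodal RAG pipeline is a nearest-neighbor search over a single vector index that holds both text and image entries. The sketch below assumes both modalities were embedded into a shared space (for example with a CLIP-style model). It uses tiny hand-made vectors instead of real embeddings. A real pipeline would use a vector database with approximate search rather than this linear scan.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Entry:
    doc_id: str
    modality: str  # "text" or "image"
    vector: List[float]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: List[float], index: List[Entry], k: int = 2) -> List[Entry]:
    """Rank text and image entries together by cosine similarity to the query."""
    return sorted(index, key=lambda e: cosine(query, e.vector), reverse=True)[:k]


# Toy 3-d vectors standing in for embeddings from a shared text-image model.
index = [
    Entry("q3-report", "text", [0.9, 0.1, 0.0]),
    Entry("revenue-chart", "image", [0.8, 0.3, 0.1]),
    Entry("hr-policy", "text", [0.0, 0.2, 0.9]),
]
for hit in retrieve([1.0, 0.2, 0.0], index):
    print(hit.doc_id, hit.modality)
```

Because text and images share one index, a query about revenue can surface both the written report and the chart. Neither modality needs a separate lookup.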

Conclusion

The direction of travel is clear: the era of the monolithic, text-only model is fading. We are entering a phase of accessible, efficient, multimodal intelligence. The release of high-performance, open-license models that challenge established offerings like GPT-4o Mini is a testament to the vibrancy of the open-source community.

For organizations, the barrier to entry for advanced AI is lower than ever. The tools to build the next generation of intelligent applications are now available, whether for IoT applications that bring vision to smart devices or cross-lingual systems that break down language barriers through visual translation. By tracking benchmark results and embracing the shift toward efficient architectures, developers can leverage these tools to solve complex, real-world problems with unprecedented speed and precision.