The New AI Arms Race: How Big Tech’s Internal Models Are Reshaping the GPT Ecosystem

The generative AI landscape, once defined by clear frontrunners and strategic partnerships, is undergoing a seismic shift. The era of symbiotic alliances, where tech giants provided the computational backbone for pioneering AI labs, is evolving into a complex state of “co-opetition.” Major players who were once primarily enablers are now stepping into the ring as direct competitors, developing their own formidable foundation models. This strategic pivot is not merely a change in corporate relationships; it signals a fundamental maturation of the AI market. This article examines recent OpenAI and GPT developments alongside broader industry trends, analyzing the technical drivers, strategic implications, and future trajectory of a market no longer dominated by a single model or provider. For developers, enterprises, and researchers, understanding this new dynamic is critical to navigating the future of artificial intelligence.

The Shifting Alliances in the Generative AI Ecosystem

The rapid ascent of models like GPT-3.5 and GPT-4 was supercharged by a seemingly perfect partnership model. OpenAI, with its research prowess, joined forces with Microsoft, which provided the immense capital and cloud infrastructure necessary for training and scaling these behemoths. This alliance created a powerful feedback loop: OpenAI gained access to large-scale computational resources on Azure, and Microsoft integrated cutting-edge AI across its product stack, from Bing to Microsoft 365, driving the narrative around ChatGPT and enterprise AI adoption. This model became a template for the industry, accelerating the pace of innovation and solidifying the lead of the key players. Coverage of the GPT ecosystem was dominated by stories of deeper integrations and new platform capabilities built upon this foundation.

The Inevitable Pivot: The Rise of In-House Giants

The very success of this model sowed the seeds for its evolution. As generative AI transitioned from a research curiosity to a mission-critical enterprise technology, the strategic calculus for major tech players began to change. Relying on a single external partner for the most transformative technology of a generation introduces significant long-term risks. Consequently, we are now witnessing a deliberate and well-funded pivot towards developing powerful, in-house foundation models. Microsoft, for example, is reportedly developing MAI-1, a model with roughly 500 billion parameters designed to compete directly with the top-tier offerings from OpenAI, Google, and Anthropic. Recent coverage of GPT competitors is filled with announcements of similar internal projects.

The motivations behind this shift are multifaceted:

- Strategic Independence: Reducing dependency on a third-party model provider mitigates risks related to pricing changes, API access, and shifts in strategic direction.

- Full-Stack Control: Owning the entire stack, from custom silicon to the training data and the final model, allows each layer to be optimized for the others. This vertical integration can substantially improve performance and efficiency.

- Economic Value: By developing their own models, tech giants can capture a larger share of the value chain, moving from being a reseller of AI services to the primary provider.

- Customization and Specialization: In-house models can be tailored for specific use cases, such as internal code generation or integration with proprietary datasets, offering a competitive edge that generic models cannot match.

What This Means for OpenAI and the GPT Model Family

This new competitive pressure is a double-edged sword for pioneers like OpenAI. On one hand, it validates their vision and the market they created. On the other, it forces them to innovate at an even more frantic pace. The response is already visible in the rapid release of models like GPT-4o, which pushes the boundaries of multimodality and real-time interaction. The roadmap for the much-anticipated GPT-5 will likely be heavily influenced by this competitive landscape, with a focus on not just raw intelligence but also on efficiency, safety, and the development of more sophisticated GPT-based agents that can perform complex, multi-step tasks autonomously.

Under the Hood: Comparing Incumbent and Challenger Models

As the field becomes more crowded, differentiation is increasingly found in the technical details of model architecture, training techniques, and deployment efficiency. The conversation is shifting from simply “who has the biggest model” to “who has the most efficient, capable, and adaptable model for a specific task.”

Architectural Innovations and Scaling Laws

The Transformer architecture remains the bedrock of most large language models, but recent architecture work shows significant innovation on top of it. Techniques like Mixture-of-Experts (MoE), famously used in models like Mixtral 8x7B and reportedly in GPT-4, are becoming standard for scaling models efficiently. MoE allows a model to grow its parameter count massively while activating only a fraction of those parameters for any given token, which reduces the compute cost of inference. Ongoing research also explores novel architectures that could potentially supersede the Transformer, focusing on the quadratic cost of self-attention as context length grows. The industry is watching closely to see whether current scaling approaches will continue to yield improvements or whether a new paradigm is needed.
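The sparse-activation idea behind MoE can be illustrated with a small sketch. This is a toy under stated assumptions, not the routing code of any particular model: the experts are plain scaling functions, the gate scores are hard-coded hypothetical values (a real gate computes them from each token), and the names `top_k_route` and `moe_forward` are invented for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and normalize their weights."""
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the chosen experts and blend their outputs by gate weight."""
    routes = top_k_route(gate_scores, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, routes

# Eight toy experts; each one simply scales its input by a different factor.
experts = [lambda x, factor=factor: factor * x for factor in range(1, 9)]
# Hypothetical gate scores; a real gate network derives these from the input.
gate_scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

output, routes = moe_forward(3.0, experts, gate_scores, k=2)
active = [index for index, _ in routes]
print(f"active experts: {active} of {len(experts)}, output: {output:.3f}")
```

Only two of the eight experts run for this input, which is the sense in which total parameter count and per-token compute are decoupled.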

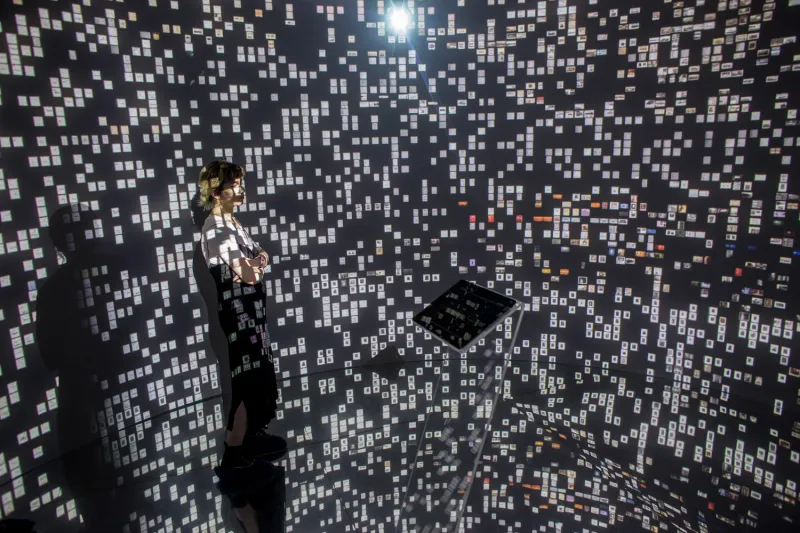

The Multimodal Frontier: Beyond Text

The most significant recent trend is the rapid advancement in multimodality. Models are no longer just text-in, text-out systems. Models like GPT-4o can process and reason about a combination of text, images, and audio in real time. This capability unlocks a wide range of new applications, from live visual assistants for the blind to interactive educational tools. Competitors are racing to match and exceed these capabilities, developing specialized models for high-fidelity voice synthesis or nuanced video understanding. This specialization is a key competitive vector, as a model fine-tuned for a specific modality can often outperform a general-purpose one.

Benchmarks, Inference, and Deployment

With a plethora of models available, objective performance measurement is crucial. Models compete on leaderboards like MMLU (Massive Multitask Language Understanding), HumanEval for coding, and various reasoning benchmarks. However, these metrics don’t tell the whole story. Real-world performance is dictated by inference behavior, particularly latency (how long a single request takes) and throughput (how many requests are served per second). A model that is 5% more accurate on a benchmark is of little use if it’s too slow or expensive for a real-time application.
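To make the latency and throughput distinction concrete, here is a minimal measurement sketch. It assumes a stand-in `fake_model` function that just sleeps briefly; in practice the callable would wrap a real inference request. The helper names `measure` and `summarize` are hypothetical, and the p95 uses the simple nearest-rank definition.

```python
import statistics
import time

def measure(call, prompts):
    """Time each call sequentially; return per-request latencies and requests/second."""
    latencies = []
    started = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - started
    throughput = len(prompts) / elapsed if elapsed > 0 else float("inf")
    return latencies, throughput

def summarize(latencies):
    """Median and nearest-rank 95th percentile of a list of latencies."""
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # integer form of ceil(0.95 * n)
    return {"p50": statistics.median(ordered), "p95": ordered[rank - 1]}

def fake_model(prompt):
    """Stand-in for a real inference call (assumption for this sketch)."""
    time.sleep(0.001)
    return prompt.upper()

latencies, throughput = measure(fake_model, ["hello"] * 20)
stats = summarize(latencies)
print(f"p50={stats['p50'] * 1000:.2f} ms, p95={stats['p95'] * 1000:.2f} ms, "
      f"throughput={throughput:.0f} req/s")
```

Tail latency (p95) is often more informative than the average for interactive applications, since a small share of slow responses dominates the user's perception.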

This is where optimization techniques become critical. Quantization (reducing the numerical precision of model weights), distillation (training a smaller model to mimic a larger one), and other forms of compression are vital for making these massive models deployable. These optimizations are essential for running models in resource-constrained environments such as edge devices, and for improving the cost-effectiveness of large-scale deployments.
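As a rough illustration of quantization, the sketch below applies symmetric 8-bit quantization to a handful of weights using a single scale factor. It is a simplified example with made-up weights; production schemes typically work per channel or per block and are more involved. The function names are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]

# Made-up weights for illustration only.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"int8 codes: {quantized}")
print(f"scale: {scale:.4f}, max reconstruction error: {max_error:.6f}")
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a rounding error bounded by half the scale factor.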

Navigating the New Reality: Impact on Developers, Enterprises, and the Market

The proliferation of powerful foundation models creates both opportunities and challenges for the entire AI ecosystem. The strategic decisions made today will determine the winners and losers in this new, multi-polar AI world.

For Developers: The Proliferation of Choice and Complexity

Developers are no longer limited to a single provider’s API. They can now choose from a menu of models, each with different strengths, weaknesses, and pricing structures. A developer building a customer service chatbot might use a cost-effective model for simple queries but route complex, multi-step issues to a more powerful and expensive model. This requires a more sophisticated approach to development.

Best Practice: Implement an AI abstraction layer. Instead of coding directly against the OpenAI API, build an internal service that can route requests to different models (GPT-4o, Claude 3, Gemini 1.5 Pro, or a future native Azure model) based on criteria like cost, latency, or task complexity. This reduces vendor lock-in and makes it easier to adopt new models as they appear. It also pays to keep track of the tools and integrations that facilitate these multi-model workflows.
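One way such an abstraction layer might look is sketched below. Everything here is an illustrative assumption: the `Backend` and `ModelRouter` classes, the tier names, the prices, and the length-based complexity rule are invented for the example, and the backends are stub functions rather than real provider SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

@dataclass
class Backend:
    name: str
    cost_per_1k_tokens: float        # hypothetical price, not a real quote
    complete: Callable[[str], str]   # would wrap a provider SDK call in practice

class ModelRouter:
    """Routes each request to a backend tier using a simple rule."""

    def __init__(self, complexity_threshold: int = 200):
        self._backends: Dict[str, Backend] = {}
        self._threshold = complexity_threshold

    def register(self, tier: str, backend: Backend) -> None:
        self._backends[tier] = backend

    def pick(self, prompt: str, needs_reasoning: bool = False) -> Backend:
        # Naive heuristic: long prompts or flagged tasks go to the premium tier.
        is_complex = needs_reasoning or len(prompt) > self._threshold
        return self._backends["premium" if is_complex else "economy"]

    def complete(self, prompt: str, needs_reasoning: bool = False) -> Tuple[str, str]:
        backend = self.pick(prompt, needs_reasoning)
        return backend.name, backend.complete(prompt)

# Stub backends; application code never references a provider directly.
router = ModelRouter()
router.register("economy", Backend("small-model", 0.5, lambda p: f"[small] {p}"))
router.register("premium", Backend("large-model", 10.0, lambda p: f"[large] {p}"))

print(router.complete("Where is my order?"))
print(router.complete("Plan a refund across three invoices.", needs_reasoning=True))
```

Because callers depend only on `ModelRouter.complete`, swapping or adding a provider means registering a different `Backend`, with no changes to application code.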

For Enterprises: De-risking and Specialization

For enterprises, the biggest advantage of a competitive market is the ability to de-risk their AI strategy. Over-reliance on a single provider is a significant business risk, and diversifying model usage across providers mitigates it. Furthermore, enterprises can now pursue more specialized solutions. Healthcare, for instance, needs models trained on specific medical data with strong privacy guarantees, a domain where specialized providers or custom-trained models may excel. Similarly, legal tech and finance point towards a future of highly specialized models that understand domain-specific jargon and regulatory constraints. This trend is fueling interest in custom models and fine-tuning as companies seek to build a competitive moat around their proprietary data.

Market Dynamics: Commoditization and the Search for Value

As the capabilities of base foundation models begin to converge, we may see a degree of commoditization. When several models reach comparable accuracy on a given task, the deciding factors shift to cost, speed, and ease of integration. The ultimate value will move up the stack, from the model itself to the applications built on top of it. This is where we are likely to see an explosion of innovation in areas like autonomous agents, which can orchestrate multiple tools and models to solve complex problems. The impact will be felt across sectors, from marketing, with agents planning and executing entire campaigns, to content creation, where AI moves from a writing assistant to a collaborative creative partner.

Charting the Course: Recommendations for a Multi-Model Future

Navigating this complex and rapidly changing landscape requires a proactive and informed approach. Stakeholders across the spectrum must adapt their strategies to thrive.

Practical Recommendations for Stakeholders

- For Businesses: Don’t just chase the “best” model. Define your business problem first, then select the most appropriate tool for the job. Evaluate the Total Cost of Ownership (TCO), which includes not just API calls but also development, integration, and maintenance costs. When choosing a platform, favor those that offer flexibility and a choice of models.

- For the Community: Champion and contribute to the open-source model movement. Open-source models provide a crucial counterbalance to the dominance of large, closed-source systems. They foster transparency, enable academic research, and provide a foundation for smaller companies to build upon, ensuring a more diverse and resilient ecosystem.
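The TCO point in the business recommendation can be made concrete with a small worked calculation. All figures below are hypothetical and chosen only to show how a lower per-token price can still lead to a higher monthly total once development, integration, and maintenance are included; the function `monthly_tco` is an illustrative helper, not an established formula.

```python
def monthly_tco(requests, tokens_per_request, price_per_1k_tokens, fixed_costs):
    """API usage cost plus fixed monthly costs, in the same currency unit."""
    api_cost = requests * tokens_per_request / 1000 * price_per_1k_tokens
    return api_cost + sum(fixed_costs.values())

# Option A: pricier API, but little engineering effort (hypothetical figures).
option_a = monthly_tco(
    requests=200_000, tokens_per_request=1_000, price_per_1k_tokens=0.01,
    fixed_costs={"development": 2_000, "integration": 500, "maintenance": 500},
)
# Option B: cheaper per token, but more custom work to run and maintain.
option_b = monthly_tco(
    requests=200_000, tokens_per_request=1_000, price_per_1k_tokens=0.002,
    fixed_costs={"development": 6_000, "integration": 1_500, "maintenance": 1_500},
)

print(f"Option A: {option_a:,.0f} per month")
print(f"Option B: {option_b:,.0f} per month")
```

With these assumed numbers, the option with the cheaper API ends up costing more overall, which is why the per-call price alone is a poor basis for model selection.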

The Road Ahead: Ethics, Safety, and the Next Frontier

As models become more powerful and autonomous, the importance of safety and ethics grows with them. The discourse around ethics, safety, and bias and fairness is no longer academic; it is a critical engineering discipline. As these systems are integrated into high-stakes domains, robust governance and alignment techniques are paramount. We can expect regulation to become more prominent as governments worldwide grapple with how to manage the societal impact of this technology while fostering innovation. Privacy will also be central, especially as models are trained on ever-larger swaths of public and private data.

Looking forward, the trajectory points towards a world of specialized, interconnected AI agents. The next major breakthrough may not be a single, larger model, but rather a system for effectively orchestrating hundreds of smaller, expert models, creating a form of collective intelligence that can tackle problems far beyond the scope of today’s technology.

Conclusion

The generative AI ecosystem is entering its next chapter. The era of straightforward partnerships is giving way to a more complex and competitive multi-polar world where today’s allies are tomorrow’s rivals. This shift, driven by the strategic imperatives of Big Tech, is fundamentally reshaping the landscape for developers, enterprises, and society at large. The result will be an explosion of choice, accelerating innovation, and a necessary focus on efficiency, specialization, and safety. While this introduces new complexities, it ultimately fosters a more resilient and dynamic market. The key takeaway is clear: the future will not be won by a single model or a single company. It will be defined by those who can master the art of navigating a diverse, multi-model ecosystem, leveraging the best tool for every task in the ongoing quest to unlock the full potential of artificial intelligence.