The New Frontier in AI Ethics: Using GPT Models to Actively Combat Algorithmic Bias

The rapid proliferation of Generative Pre-trained Transformer (GPT) models has been a double-edged sword for digital society. On one hand, these powerful AI systems offer unprecedented capabilities in everything from content creation to complex data analysis. On the other, they carry the inherent risk of amplifying and perpetuating the societal biases embedded in their vast training data. For years, the conversation around GPT ethics has focused on identifying and measuring these biases. However, a significant shift is underway. Recent work on bias and fairness points to a new, proactive approach: using GPT models themselves as tools to uncover, analyze, and actively mitigate bias in critical systems. This evolution moves the field from passive observation to active intervention, with significant implications for sectors such as healthcare, finance, and legal tech. This article explores this emerging frontier, covering the technical strategies, real-world applications, and future trajectory of building fairer AI.

The Dual Nature of Bias in Large Language Models

Using GPT models to fight bias first requires a clear understanding of how bias originates within them. The issue is multifaceted, stemming from the data they learn from, the way they are built, and the methods used to train them. The same models that reflect bias can also help detect it, and this dual capacity is central to the latest developments in AI fairness.

The Roots of Bias: Data, Architecture, and Training

The primary source of bias in models like GPT-3.5 and GPT-4 is the enormous corpus of text they are trained on. This data, scraped from the internet and other sources, mirrors human history, culture, and communication, flaws included. It contains historical inequities, stereotypes, and underrepresentation of marginalized groups. Curating training data at this scale while filtering for toxicity and bias remains a recurring challenge. When a model learns from this data, it doesn’t just learn language; it learns the statistical associations present in the text. For example, if historical text frequently associates certain job titles with a specific gender, the model will learn and replicate that stereotype.
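To make "statistical associations" concrete, here is a minimal sketch that counts how often gendered pronouns co-occur with an occupation in a tiny, invented corpus. A GPT model does not literally count co-occurrences; it absorbs such regularities implicitly during training. Explicit counts like these are simply a transparent proxy for auditing a corpus. The corpus, the pronoun sets, and the `gender_cooccurrence` helper are all hypothetical.

```python
from collections import Counter

# Toy corpus, invented purely for illustration.
CORPUS = [
    "the nurse said she would check the chart",
    "the engineer said he fixed the build",
    "the nurse finished her shift",
    "the engineer and his team shipped the release",
    "the engineer said she reviewed the design",
]

# Hypothetical pronoun sets used as a crude gender signal.
FEMALE = {"she", "her", "hers"}
MALE = {"he", "him", "his"}


def gender_cooccurrence(corpus, occupation):
    """Count gendered pronouns in sentences that mention `occupation`."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        if occupation not in tokens:
            continue
        counts["female"] += sum(t in FEMALE for t in tokens)
        counts["male"] += sum(t in MALE for t in tokens)
    return counts


for job in ("nurse", "engineer"):
    c = gender_cooccurrence(CORPUS, job)
    total = c["female"] + c["male"]
    share = c["female"] / total if total else float("nan")
    print(f"{job}: female share {share:.2f} ({dict(c)})")
```

On this toy corpus every pronoun near "nurse" is female, while "engineer" skews male (one female pronoun out of three). A model trained on text with the same skew would tend to reproduce it.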

Beyond the data, model design can also amplify these issues. The attention mechanisms that make transformers so powerful learn whatever correlations help predict text, and they can latch onto spurious ones, reinforcing biased connections. Furthermore, the pre-training objective, which is to predict the next token, is agnostic to fairness. The model’s goal is statistical accuracy, not social equity, which makes post-hoc alignment and fine-tuning crucial for ethical deployment.
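The standard autoregressive pre-training objective can be written as the negative log-likelihood of each token given the tokens before it, for a training sequence $x_1, \dots, x_T$ and model parameters $\theta$:

```latex
\mathcal{L}_{\mathrm{LM}}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)
```

Nothing in this loss refers to demographic groups or fairness. It is minimized by reproducing whatever regularities the corpus contains, biased ones included.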

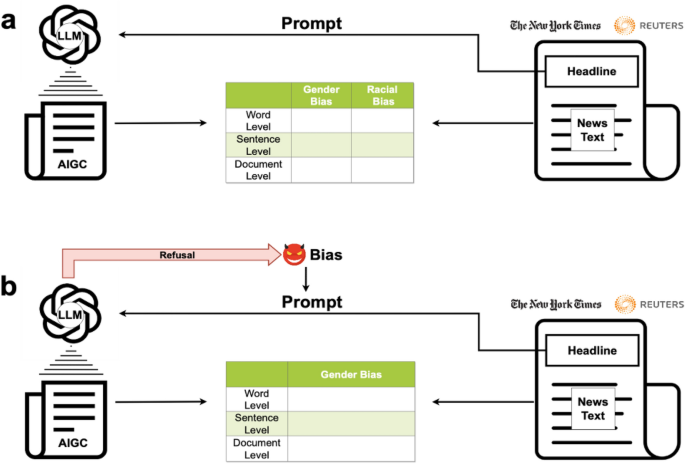

From Perpetuator to Detector: A Paradigm Shift

A notable turn in recent GPT research is the realization that the same pattern-recognition capabilities that absorb bias can be repurposed to detect it. A model trained on a vast swath of human language develops a nuanced grasp of context, sentiment, and subtle linguistic cues, which makes it well suited to identifying patterns that a human auditor might miss. For example, a GPT model can be tasked with scanning thousands of performance reviews for subtle differences in the language used to describe employees from different demographic groups. It might find that feedback for one group consistently focuses on collaboration and personality, while feedback for another focuses on technical skill and achievement, a pattern that can quietly affect career progression. This shift from being part of the problem to being part of the solution is a dominant theme in current GPT trends.
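The performance-review audit described above can be approximated with a simple keyword baseline. This is a hypothetical sketch: the two lexicons, the group labels, and the reviews are invented, and fixed keyword matching stands in for the contextual judgment a GPT model would apply. For each group, it reports what share of theme words are personality-focused rather than achievement-focused.

```python
import re
from collections import defaultdict

# Hypothetical lexicons; a GPT-based audit would classify phrases in context
# instead of matching fixed keywords.
PERSONALITY = {"collaborative", "helpful", "pleasant", "supportive", "friendly"}
ACHIEVEMENT = {"technical", "delivered", "led", "architected", "expert"}


def theme_shares(reviews):
    """Return, per group, the share of theme words that are personality-focused."""
    counts = defaultdict(lambda: {"personality": 0, "achievement": 0})
    for group, text in reviews:
        for word in re.findall(r"[a-z]+", text.lower()):
            if word in PERSONALITY:
                counts[group]["personality"] += 1
            elif word in ACHIEVEMENT:
                counts[group]["achievement"] += 1
    shares = {}
    for group, c in counts.items():
        total = c["personality"] + c["achievement"]
        shares[group] = c["personality"] / total if total else 0.0
    return shares


# Invented example reviews, each tagged with a demographic group label.
REVIEWS = [
    ("group_a", "Very collaborative and helpful, a pleasant teammate."),
    ("group_a", "Supportive of others and friendly in meetings."),
    ("group_b", "Delivered the migration and led the technical design."),
    ("group_b", "Architected the pipeline; a technical expert, also helpful."),
]

print(theme_shares(REVIEWS))
```

Here group_a's feedback is entirely personality-focused, while only 1 of the 7 theme words for group_b is. A real audit would also check whether such a gap is statistically meaningful before drawing conclusions.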

Technical Deep Dive: Strategies for Algorithmic Fairness

Actively combating bias is not a single action but a multi-stage process involving interventions before, during, and after model training. Recent developments in GPT fine-tuning and custom models showcase a growing toolkit of techniques aimed at building fairness directly into the AI lifecycle.

Pre-Processing: Curating Fairer Datasets

The most effective way to reduce bias is to address it at the source: the data. Pre-processing techniques focus on cleaning, balancing, and augmenting datasets before they are used for training or fine-tuning. This can involve:

- Data Augmentation: Using a GPT model to generate counterfactual data points. For instance, if a dataset shows a bias in medical diagnoses, a model could generate synthetic patient records that swap demographic details while keeping clinical symptoms identical, helping to decouple the two in the model’s learning process.

- Re-sampling: Identifying and over-sampling data from underrepresented groups to ensure the model has sufficient examples to learn from, preventing it from treating the majority group as the default.

- Bias Identification: Employing a pre-trained GPT model as a screening tool to scan and flag potentially biased content within a massive, unstructured dataset before it is used for training a new model.
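Counterfactual augmentation and re-sampling can be sketched as follows. As a simplifying assumption, a fixed word-swap table replaces the GPT-generated counterfactuals described above. It is deterministic but crude; for example, it always maps "her" to "his", even where "him" would be correct. The `oversample` helper performs random oversampling with replacement until every group matches the size of the largest one. All names and records are hypothetical.

```python
import random
import re
from collections import Counter

# Hypothetical swap table standing in for a GPT-based counterfactual generator.
# Note: "her" is ambiguous (his/him); a fixed table cannot resolve that.
SWAPS = {"he": "she", "she": "he", "his": "her", "her": "his",
         "male": "female", "female": "male", "man": "woman", "woman": "man"}
PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", flags=re.IGNORECASE)


def counterfactual(text):
    """Swap demographic terms while leaving every other token unchanged."""
    def repl(match):
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return PATTERN.sub(repl, text)


def augment(records):
    """Return the original records plus one counterfactual copy of each."""
    return records + [{**r, "text": counterfactual(r["text"])} for r in records]


def oversample(records, key, seed=0):
    """Randomly duplicate records from smaller groups until all groups match the largest."""
    rng = random.Random(seed)
    by_group = {}
    for r in records:
        by_group.setdefault(r[key], []).append(r)
    target = max(len(rs) for rs in by_group.values())
    balanced = []
    for rs in by_group.values():
        balanced.extend(rs)
        balanced.extend(rng.choices(rs, k=target - len(rs)))
    return balanced


# Invented records: group "B" is underrepresented.
records = [{"group": "A", "text": "He reports chest pain."},
           {"group": "A", "text": "He has a history of hypertension."},
           {"group": "A", "text": "His ECG was normal."},
           {"group": "B", "text": "She reports chest pain."}]
print(counterfactual(records[0]["text"]))
balanced = oversample(records, key="group")
print(Counter(r["group"] for r in balanced))
```

Because the clinical wording is untouched and only the demographic terms change, each augmented pair gives the model direct evidence that the symptoms, not the demographics, carry the signal.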

In-Processing: Fine-Tuning and Architectural Adjustments

During the training phase, several methods can guide the model toward fairer outcomes. Fine-tuning is a powerful technique in which a pre-trained base model is further trained on a smaller, domain-specific, carefully curated dataset. By fine-tuning on a dataset that has been balanced and vetted for fairness, developers can significantly steer the model’s behavior. Reinforcement Learning from Human Feedback (RLHF), a cornerstone of models like ChatGPT, is another critical in-processing technique. The human-feedback component can be explicitly designed to reward fair, unbiased, and equitable responses, effectively teaching the model human values beyond simple predictive accuracy. This is an active topic in OpenAI’s work and in discussions of future models such as GPT-5.
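One way to formalize the RLHF idea above, assuming the widely used KL-regularized formulation, is to maximize a learned reward $r_\phi$ while keeping the policy $\pi_\theta$ close to a reference model $\pi_{\mathrm{ref}}$, with $\beta$ weighting the KL penalty. The fairness term is a hypothetical addition for illustration: $b(x, y)$ is a bias score for response $y$ to prompt $x$ (for instance, produced by an auditing classifier), and $\lambda \ge 0$ sets how strongly it is penalized. Setting $\lambda = 0$ recovers the standard objective.

```latex
\max_{\theta}\; \mathbb{E}_{x \sim \mathcal{D},\, y \sim \pi_\theta(\cdot \mid x)}\big[\, r'(x, y) \,\big]
  - \beta\, \mathbb{E}_{x \sim \mathcal{D}}\Big[ D_{\mathrm{KL}}\big(\pi_\theta(\cdot \mid x) \,\|\, \pi_{\mathrm{ref}}(\cdot \mid x)\big) \Big],
\qquad r'(x, y) = r_\phi(x, y) - \lambda\, b(x, y)
```

In practice, the same effect can also be pursued through the preference data itself, by having annotators rank biased responses lower, so that $r_\phi$ absorbs the fairness signal without an explicit penalty term.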

Post-Processing: Auditing and Correcting Outputs

Once a model is deployed, the work on fairness is not over. Post-processing involves creating a “fairness firewall”: an auditing layer that sits between the model and the end user. This is where GPT APIs become highly relevant. Developers can chain API calls, using one GPT model to generate a response and a second, specialized GPT model to audit that response for bias, toxicity, or harmful stereotypes before it is delivered. For example, a customer service chatbot’s response could be checked by a fairness-auditing model to ensure its tone and language are equitable across all customer demographics. This pattern is increasingly being built into new GPT-based tools and platforms.
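The generate-then-audit chain can be sketched independently of any particular vendor SDK. In this minimal sketch, `generate` and `audit` are hypothetical callables that would each wrap a model API call in production. The retry count and fallback message are illustrative choices, and the toy keyword auditor merely stands in for a second, fairness-auditing model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AuditResult:
    flagged: bool
    reason: str = ""


# Hypothetical signatures: in production, each would wrap a hosted model call.
GenerateFn = Callable[[str], str]
AuditFn = Callable[[str, str], AuditResult]

FALLBACK = "I'm sorry, I can't provide that response. A human agent will follow up."


def fairness_firewall(prompt: str, generate: GenerateFn, audit: AuditFn,
                      max_attempts: int = 2) -> str:
    """Generate a reply, have a second model audit it, and retry or fall back if flagged."""
    for _ in range(max_attempts):
        reply = generate(prompt)
        if not audit(prompt, reply).flagged:
            return reply
    return FALLBACK


def stub_generate(prompt: str) -> str:
    return "Our premium plan suits customers like you."


def stub_audit(prompt: str, reply: str) -> AuditResult:
    # Toy rule standing in for the auditing model.
    banned = ("people like you", "your kind")
    hit = next((b for b in banned if b in reply.lower()), None)
    return AuditResult(flagged=hit is not None, reason=hit or "")


print(fairness_firewall("Which plan should I pick?", stub_generate, stub_audit))
```

Passing the two models in as functions keeps the firewall logic testable with stubs and lets the auditor be replaced or upgraded without touching the generator.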

Case Studies in Action: GPT as a Tool for Equity

The theoretical applications of using AI to fight bias are now being realized in high-stakes, real-world scenarios. From hospital wards to bank offices, GPT-powered systems are being deployed as powerful auditors to promote equity.

Revolutionizing Healthcare: Tackling Bias in Diagnostic Systems

Healthcare is one of the most promising areas. Consider an early warning system designed to predict cardiac arrest in hospital patients. Such systems have often been trained on data drawn predominantly from a single demographic group, making them less accurate for women and ethnic minorities, whose symptoms may present differently. A new generation of projects is exploring GPT-based systems to analyze millions of anonymized electronic health records. The AI can help identify subtle, hidden correlations between demographic data, symptom descriptions, and clinical outcomes that human researchers have struggled to quantify. By surfacing these biases, the model provides the specific evidence needed to retrain and recalibrate the early warning system, making it more accurate and equitable across patient populations. Beyond improving fairness, better accuracy for under-served groups can directly save lives.
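Surfacing the kind of disparity described above often starts with a per-group error metric. For an early warning system, a key one is sensitivity: the share of actual cardiac arrests that triggered an alert. The sketch below computes it per group on invented evaluation records; the data, group labels, and record layout are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """True-positive rate (sensitivity) of an alert model, computed separately per group.

    Each record is (group, actual_event, model_alerted), with booleans for the last two.
    """
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, alerted in records:
        if actual:
            positives[group] += 1
            hits[group] += int(alerted)
    return {g: hits[g] / positives[g] for g in positives}


# Invented evaluation data: the system misses more events in group_b.
RECORDS = (
    [("group_a", True, True)] * 9 + [("group_a", True, False)] * 1
    + [("group_b", True, True)] * 6 + [("group_b", True, False)] * 4
    + [("group_a", False, False)] * 20 + [("group_b", False, True)] * 3
)

rates = sensitivity_by_group(RECORDS)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

Here the system catches 90% of events in group_a but only 60% in group_b. A gap like this is the signal that would prompt retraining or recalibration for the under-served group.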

Promoting Fairness in Finance and Legal Tech

Finance and legal tech are also seeing significant innovation. In finance, GPT models are being used to audit automated loan approval systems. The AI can analyze thousands of approved and rejected applications to determine whether protected characteristics (or proxies for them, such as zip codes) are statistically significant factors in decision-making, helping institutions comply with fair lending laws. In the legal field, GPT-powered tools can analyze legal documents and judicial opinions to detect biased language or identify systemic disparities in sentencing recommendations based on demographic factors. These applications show a move toward using AI for regulatory compliance and social good, a key topic in ongoing discussions of GPT regulation.
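A first-pass loan audit can compare approval rates between two groups. This sketch computes the adverse impact ratio (the lower approval rate divided by the higher one; the "four-fifths rule" from US employment-selection guidelines is sometimes borrowed as a rough screening threshold of 0.8) and a pooled two-proportion z-test. The counts are invented, the function name is hypothetical, and testing proxies such as zip codes would require a fuller analysis, for example a regression with appropriate controls.

```python
import math


def approval_audit(approved_a, total_a, approved_b, total_b):
    """Compare approval rates for two groups.

    Returns the adverse impact ratio (lower rate / higher rate), the z statistic,
    and a two-sided p-value from a pooled two-proportion z-test.
    Assumes both groups are non-empty and at least one approval exists.
    """
    p_a = approved_a / total_a
    p_b = approved_b / total_b
    ratio = min(p_a, p_b) / max(p_a, p_b)
    pooled = (approved_a + approved_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return ratio, z, p_value


# Invented counts: group A approved 480 of 800, group B approved 300 of 700.
ratio, z, p_value = approval_audit(480, 800, 300, 700)
print(f"ratio={ratio:.2f}, z={z:.2f}, p={p_value:.2e}")
```

With these invented counts, the ratio is about 0.71 and the p-value is far below 0.001, so the disparity would warrant closer review rather than serve as proof of discrimination on its own.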

The Road Ahead: Best Practices and Future Directions

While the potential is immense, using AI to correct for bias is not without its challenges. It requires a thoughtful, multi-stakeholder approach that combines technical rigor with ethical oversight.

Navigating the Pitfalls

A primary concern is the “quis custodiet ipsos custodes?” (“who will guard the guardians?”) problem. If we use a biased GPT model to debias another system, we risk simply swapping one set of biases for another, which makes the transparency and explainability of the auditing model paramount. Another risk is “fairness gerrymandering,” in which a system appears fair with respect to each protected attribute considered separately, such as gender or ethnicity, while treating intersectional subgroups, such as women of a particular ethnicity, unfairly. More broadly, optimizing for one narrow fairness metric can cause unforeseen harm elsewhere, which is why fairness calls for a holistic approach rather than a check-the-box exercise.
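Fairness gerrymandering is easy to demonstrate on synthetic data. In this invented example, outcomes are perfectly balanced by gender and by ethnicity taken one at a time, yet two intersectional subgroups always receive the favorable outcome and the other two never do. The helper name, attribute values, and data are hypothetical.

```python
from collections import defaultdict
from itertools import product


def positive_rates(rows, keys):
    """Positive-outcome rate for each combination of the given attribute keys."""
    totals, positives = defaultdict(int), defaultdict(int)
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group] += 1
        positives[group] += row["outcome"]
    return {g: positives[g] / totals[g] for g in totals}


# Invented data: balanced by gender and by ethnicity separately,
# but not for the intersectional subgroups.
ROWS = []
for gender, ethnicity in product(["man", "woman"], ["x", "y"]):
    favored = (gender == "man") == (ethnicity == "x")
    ROWS += [{"gender": gender, "ethnicity": ethnicity, "outcome": int(favored)}] * 50

print(positive_rates(ROWS, ["gender"]))
print(positive_rates(ROWS, ["ethnicity"]))
print(positive_rates(ROWS, ["gender", "ethnicity"]))
```

Both single-attribute checks report 0.5 for every group, while the intersectional check reports 1.0 or 0.0, which is why audits should examine subgroup combinations and not only marginal groups.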

Best Practices for Developers and Organizations

To navigate this complex landscape, organizations deploying GPT models should adopt a set of best practices:

- Build Diverse Teams: The teams creating and auditing these AI systems must be diverse in terms of background, expertise, and lived experience to spot a wider range of potential biases.

- Prioritize Transparency: Be open about the fairness metrics being used, the limitations of the system, and the steps taken to mitigate bias.

- Invest in Red Teaming: Proactively engage teams of experts to “attack” the model and search for fairness and safety vulnerabilities before they can cause real-world harm. This is becoming a standard part of the conversation around GPT safety.

- Embrace Continuous Monitoring: Fairness is not a static, one-time fix. Models must be continuously audited after deployment as data drifts and new biases emerge.
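Continuous monitoring can be as simple as recomputing a group-level metric over a sliding window of recent decisions. This is a minimal sketch under stated assumptions: the metric is the gap in positive-outcome rates between groups, and the window size, alert threshold, and minimum per-group count are illustrative, not recommended values. The `FairnessMonitor` class is hypothetical.

```python
from collections import defaultdict, deque


class FairnessMonitor:
    """Track per-group positive rates over a sliding window and flag large gaps.

    Window size, threshold, and minimum count are illustrative defaults.
    """

    def __init__(self, window=1000, max_gap=0.1, min_count=30):
        self.events = deque(maxlen=window)  # oldest events drop off automatically
        self.max_gap = max_gap
        self.min_count = min_count

    def record(self, group, positive):
        self.events.append((group, bool(positive)))

    def check(self):
        totals, positives = defaultdict(int), defaultdict(int)
        for group, positive in self.events:
            totals[group] += 1
            positives[group] += positive
        # Only compare groups with enough recent events to estimate a rate.
        rates = {g: positives[g] / n for g, n in totals.items() if n >= self.min_count}
        if len(rates) < 2:
            return None
        gap = max(rates.values()) - min(rates.values())
        return {"rates": rates, "gap": gap, "alert": gap > self.max_gap}


monitor = FairnessMonitor(window=200, max_gap=0.1, min_count=20)
for _ in range(50):  # stable period: both groups approved half the time
    for group in ("a", "b"):
        monitor.record(group, True)
        monitor.record(group, False)
before = monitor.check()
for i in range(50):  # drift: group "b" approvals fall to a quarter
    monitor.record("a", True)
    monitor.record("a", False)
    monitor.record("b", i % 2 == 0)
    monitor.record("b", False)
after = monitor.check()
print(before["alert"], after["alert"], round(after["gap"], 2))
```

The first check finds no gap; after the simulated drift fills the window, group "b" has an approval rate of 0.25 against 0.5 for group "a", and the monitor raises an alert. The `min_count` guard avoids alerting on groups with too few recent events to estimate a rate reliably.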

The development of standardized fairness benchmarks for GPT models will also be crucial, allowing more objective comparisons and holding the entire GPT ecosystem to a higher standard.

Conclusion: A New Mandate for AI Development

The narrative around generative AI is undergoing a crucial transformation. We are moving beyond the passive acknowledgment of AI bias and into an era of active, technology-driven intervention. Recent work on bias and fairness shows that models like GPT-4 are not just potential sources of the problem; they are also among our most powerful tools for crafting the solution. By using these models to audit medical algorithms, financial systems, and legal processes, we can begin to untangle and correct deep-seated societal inequities at an unprecedented scale. The path forward requires a vigilant, collaborative effort from researchers, developers, and policymakers. Looking ahead, the ultimate goal must be to build systems where fairness is not an afterthought or a patch, but a foundational principle woven into the very fabric of the AI’s architecture and purpose.