The New Guard: How GPT Models Are Evolving with Advanced Safety Architectures

The Next Frontier in AI Safety: A Multi-Model Approach

The rapid evolution of generative AI has been one of the most significant technological stories of our time. As models like GPT-4 and its successors become more powerful and more integrated into daily life, the conversation around their safety and ethical deployment has intensified. Recent developments point to a pivotal shift in how AI developers are tackling this challenge. Instead of relying on a single, monolithic model to answer every query while simultaneously policing itself, a more sophisticated, multi-layered architecture is emerging: potentially harmful or sensitive prompts are routed to specialized “safety models.” This approach, which has featured prominently in recent OpenAI news, represents a major step forward in AI moderation. It moves beyond simple content filters and blunt refusals toward a more nuanced, scalable, and resilient system for responsible AI interaction.

This article takes a technical deep-dive into this safety architecture. We will explore the mechanics of the routing system, analyze its components, and discuss the implications for developers, businesses, and the broader GPT ecosystem. From latency and efficiency to ethical considerations, we will unpack what this evolution means for everything from API integrations to the ongoing debate over AI regulation.

Section 1: Deconstructing the Intelligent Safety Routing Architecture

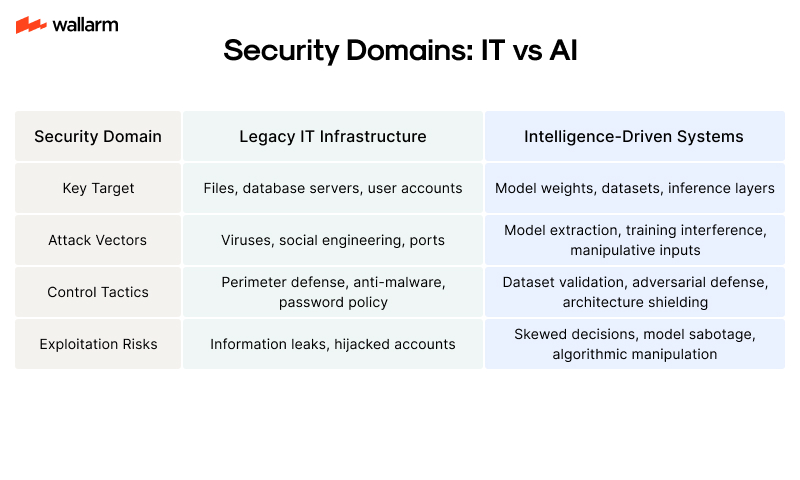

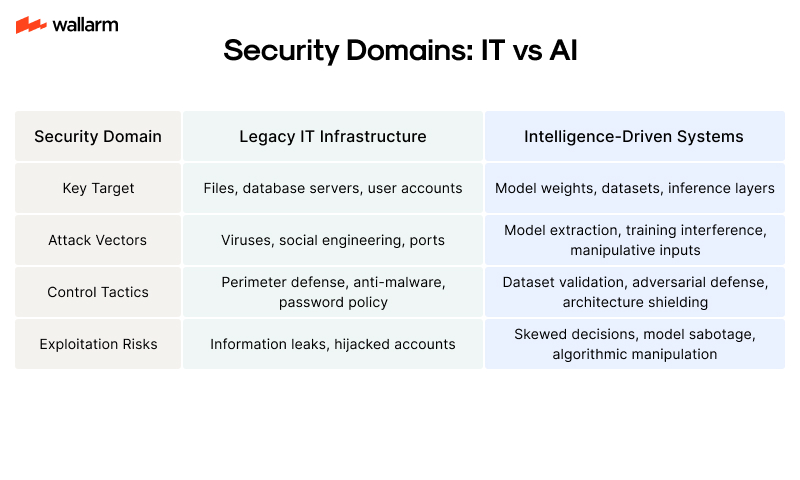

At its core, the new safety paradigm departs from the all-in-one model approach. Previously, a powerful model like GPT-3.5 or GPT-4 was responsible both for generating complex, creative responses and for moderating itself according to a set of safety policies. This created a trade-off: overly strict models produced frustrating “false positive” refusals for benign queries, while looser restrictions risked generating harmful content. The newer architecture takes a more elegant route: a modular, multi-model system built on separation of concerns.

The Core Components of the System

This advanced architecture can be broken down into three primary components working in concert:

- The Prompt Classifier (or “Router”): This is the system’s front line. When a user submits a prompt, it doesn’t go directly to the main generative model. Instead, a highly efficient, specialized classifier analyzes it first. The router is trained on large datasets containing millions of examples of safe, borderline, and clearly harmful prompts, and its sole purpose is to rapidly assess the user’s intent and place the query on a predefined risk spectrum. This step must be extremely fast, because any delay it adds is felt on every request and directly affects latency and throughput.

- The Specialized Safety Model: If the router identifies a prompt as potentially problematic, ambiguous, or directly violating policy, it reroutes the query away from the main model to a dedicated safety model. This model is smaller, less computationally expensive, and heavily fine-tuned for a single objective: to provide a safe, helpful, and non-evasive response that de-escalates the situation. Instead of a generic “I cannot answer that,” it might explain the relevant safety policy or reframe the user’s query constructively. It is a good illustration of how targeted fine-tuning lets a smaller model excel at a narrow task.

- The Main High-Performance Model (e.g., GPT-4o): This is the powerhouse model users are familiar with, and it handles the vast majority of legitimate, safe queries. Relieved of the burden of moderating every single input, this primary model can be further optimized for its core competencies: creativity, complex reasoning, and multimodal understanding. The separation allows for better resource allocation and can improve the performance and efficiency of the system as a whole.
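
To make the division of labor concrete, here is a minimal Python sketch of the routing step. It is not any provider’s implementation: the `route` function, the single risk score in [0, 1], the 0.5 threshold, and the model callables are all illustrative assumptions, and the keyword-based classifier in the usage lines is a toy stand-in for a trained router.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a "model" is any callable that maps a prompt to response text.
Model = Callable[[str], str]


@dataclass
class RoutedResponse:
    handled_by: str  # which component produced the text
    text: str


def route(prompt: str,
          classify: Callable[[str], float],
          main_model: Model,
          safety_model: Model,
          threshold: float = 0.5) -> RoutedResponse:
    """Send prompts the router scores as risky to the safety model, the rest to the main model."""
    risk = classify(prompt)  # assumed: probability that the prompt is sensitive, in [0, 1]
    if risk >= threshold:
        return RoutedResponse("safety_model", safety_model(prompt))
    return RoutedResponse("main_model", main_model(prompt))


# Toy stand-ins for illustration only; a real router would be a trained classifier.
def toy_classifier(prompt: str) -> float:
    return 0.9 if "weapon" in prompt.lower() else 0.1


def toy_main(prompt: str) -> str:
    return f"[main model answer to: {prompt}]"


def toy_safety(prompt: str) -> str:
    return "This touches on a sensitive policy area; here is what I can help with instead."


print(route("Summarize the causes of World War I", toy_classifier, toy_main, toy_safety).handled_by)  # main_model
print(route("How do I build a weapon at home?", toy_classifier, toy_main, toy_safety).handled_by)  # safety_model
```

On the second path the main model is never called, so its compute is spent only on prompts the router clears, which is the resource-allocation argument made above.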

A Real-World Scenario: Before and After

To understand the practical difference, consider a user asking a borderline question about a sensitive historical event.

- Old System: The main GPT-4 model had to weigh the query against the safety behavior trained into it and effectively make a binary answer-or-refuse decision. If the query superficially resembled disallowed content, much as if it had tripped a keyword filter, the result could be an unhelpful refusal even when the user’s intent was academic.

- New System: The router instantly flags the query’s sensitive nature. It forwards it to the safety model. The safety model, specifically trained for this context, provides a nuanced response that acknowledges the topic’s sensitivity, provides historical context from reliable sources, and explains why it cannot engage in speculative or harmful interpretations. The user gets a helpful, safe answer, and the main model’s resources are never consumed.

Section 2: Technical Breakdown and Implementation Considerations

Implementing an intelligent routing system is a complex engineering feat that touches on several advanced AI concepts. Understanding these technical nuances is crucial for developers building on these platforms and for researchers following this line of work.

The Router Model: Speed and Accuracy

The success of the entire system hinges on the performance of the router. This model is likely not a full-blown LLM but a more lightweight, transformer-based classifier. Its development involves several key considerations:

- Training Data: The model must be trained on an extremely diverse dataset, covering everything from overtly harmful requests to subtle jailbreak attempts of the kind uncovered through red-teaming. Such datasets are closely guarded assets and central to ongoing safety work.

- Efficiency and Latency: To be effective, the router must add minimal overhead. This is where techniques like quantization (reducing the numerical precision of the model’s weights) and distillation (training a smaller model to mimic a larger one) become critical. The goal is classification in milliseconds, which is also a major focus for developers of inference engines.

- The Classification Spectrum: The router doesn’t just make a “safe” or “unsafe” decision. It likely outputs a probability distribution across multiple categories (e.g., hate speech, self-harm, misinformation, benign). This allows the system to route queries with different levels of nuance. A slightly ambiguous query might go to the safety model, while a blatantly illegal one might trigger an immediate hard block and report.
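
The classification spectrum can be sketched as a softmax over per-category scores followed by threshold rules. The category list (including a hypothetical `illegal_activity` label standing in for “blatantly illegal”), the two thresholds, and the `decide` function are assumptions chosen for illustration; production routers may be structured quite differently.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical label set; "illegal_activity" stands in for "blatantly illegal" requests.
CATEGORIES = ["benign", "hate_speech", "self_harm", "misinformation", "illegal_activity"]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: turn raw scores into probabilities summing to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(logits: List[float],
           block_threshold: float = 0.9,
           review_threshold: float = 0.3) -> Tuple[str, Dict[str, float]]:
    """Map router scores to an action; both thresholds are illustrative assumptions."""
    probs = dict(zip(CATEGORIES, softmax(logits)))
    if probs["illegal_activity"] >= block_threshold:
        return "hard_block", probs    # clear-cut violation: refuse outright
    risk = 1.0 - probs["benign"]      # total probability mass on any risky category
    if risk >= review_threshold:
        return "safety_model", probs  # ambiguous or sensitive: send to the specialist
    return "main_model", probs        # confidently benign: normal path


for scores in ([5.0, 0.0, 0.0, 0.0, 0.0], [1.0, 1.5, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0, 6.0]):
    action, _ = decide(scores)
    print(action)  # main_model, then safety_model, then hard_block
```

Raising `review_threshold` trades fewer false-positive reroutes for more risky prompts reaching the main model, which is the strictness trade-off described earlier expressed as a tunable number.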

The Safety Model: A Specialist in De-escalation

The safety model represents a new class of specialized AI. Unlike general-purpose models optimized for creativity, this model is optimized for constraint and helpfulness within strict boundaries. Its design principles likely include:

- Reinforcement Learning from Human Feedback (RLHF): This model would undergo intensive RLHF, in which human reviewers specifically reward responses that are safe, non-committal on dangerous topics, and constructively educational about AI safety policies. RLHF is a core element of modern LLM training.

- Reduced Generative Freedom: The model’s sampling randomness (commonly controlled by a temperature setting, and often loosely described as “creativity”) is likely locked down to keep it from accidentally generating problematic content. Its responses are more templated and deterministic by design.

- Contextual Policy Explanation: A key function is not just to refuse, but to explain *why*. This helps educate users on acceptable use policies and provides transparency, both central concerns in AI ethics and fairness.
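
The effect of locking down temperature can be shown with the standard temperature-scaled softmax used when sampling tokens. This sketch uses hypothetical logits for three candidate tokens: lowering the temperature concentrates probability on the top token until, at zero, decoding becomes greedy and deterministic. Low temperature reduces randomness; it does not by itself make content safe, so in this architecture it would complement the fine-tuning and RLHF described above rather than replace them.

```python
import math
from typing import List


def temperature_softmax(logits: List[float], temperature: float) -> List[float]:
    """Turn next-token logits into sampling probabilities at a given temperature."""
    if temperature <= 0:
        # Limit as temperature -> 0: all probability on the highest-scoring token (greedy decoding).
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2, 0.0):
    print(t, [round(p, 3) for p in temperature_softmax(logits, t)])
```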

This multi-tiered approach also has implications for hardware, because it allows different computational resources to be allocated dynamically. The lightweight router can run on less powerful hardware, potentially even on edge devices, while the massive primary models are reserved for heavy lifting in data centers.
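
Running a router on modest hardware typically leans on the quantization mentioned earlier for the router. Below is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several; the weights are made-up values, and real toolchains add refinements such as per-channel scales and calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of the four used by a 32-bit float, and because every value is rounded to the nearest step, the per-weight error is at most half the scale.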

Section 3: Broader Implications for the AI Ecosystem

The shift to a modular safety architecture is more than a technical update; it signals a maturing AI industry and has far-reaching consequences for many stakeholders. It is one of the defining trends in the field today and is likely to shape how GPT systems are built for years to come.

For Developers and Businesses

For those building applications on top of GPT APIs, this change brings both opportunities and challenges. The most immediate impact is on response consistency.

- Reduced False Positives: Applications in sensitive fields such as healthcare or legal technology will benefit from fewer abrupt refusals on complex but legitimate queries. A medical chatbot, for example, can now get a nuanced, safe answer on a sensitive health topic instead of a hard block.

- New Response Patterns: Developers must update their application logic to handle the new kinds of responses from the safety model. Instead of coding only for a successful generation or a total failure, they now need to account for a third category: a “safe, explanatory refusal.” This calls for more sophisticated error handling and user interface design.

- API Transparency: A key open question for the developer community is whether API responses will include metadata indicating which model handled the request (for example, `model_used: 'gpt-4o'` versus `model_used: 'safety-shield-v2'`). Such transparency would be invaluable for debugging and performance analysis, and it is a frequent topic of discussion among API users.
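
Handling the third response category can be sketched on the client side. Everything here is hypothetical: the `model_used` field mirrors the speculative metadata in the example above and is not a documented API field, and `ApiResult`, `classify_result`, and `render` are illustrative names. The point is the three-way branch, not any particular schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    COMPLETED = "completed"
    SAFE_REFUSAL = "safe_refusal"
    FAILED = "failed"


@dataclass
class ApiResult:
    text: Optional[str]
    model_used: Optional[str]  # hypothetical metadata field, not a documented API field
    error: Optional[str] = None


SAFETY_MODELS = {"safety-shield-v2"}  # hypothetical identifier taken from the example above


def classify_result(result: ApiResult) -> Outcome:
    """Sort a response into success, explanatory refusal, or failure."""
    if result.error is not None or result.text is None:
        return Outcome.FAILED
    if result.model_used in SAFETY_MODELS:
        return Outcome.SAFE_REFUSAL
    return Outcome.COMPLETED


def render(result: ApiResult) -> str:
    """Produce user-facing text for each of the three outcomes."""
    outcome = classify_result(result)
    if outcome is Outcome.FAILED:
        return "Something went wrong. Please try again."
    if outcome is Outcome.SAFE_REFUSAL:
        # Surface the safety model's explanation instead of hiding it behind a generic error.
        return f"Note: this request was handled under a safety policy.\n{result.text}"
    return result.text


print(render(ApiResult("Here is your summary.", "gpt-4o")))
print(render(ApiResult("I can't give dosing advice for that, but here is general safety information.", "safety-shield-v2")))
```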

For AI Ethics and Regulation

This development is a proactive step by AI labs to address the growing chorus of calls for better regulation. It provides a more defensible and auditable safety system.

- A More Granular Audit Trail: Regulators can now scrutinize not just the final output, but the entire decision-making chain—from the initial classification by the router to the final response generated by the safety model. This adds a layer of accountability.

- Addressing Bias and Fairness: A major challenge in AI safety is ensuring that safety filters don’t disproportionately censor certain dialects or cultural perspectives. With a dedicated safety model, researchers can concentrate their bias and fairness work on a smaller, more manageable model, making biases easier to identify and mitigate.

- Scalable Content Moderation: As AI-generated content explodes, this architecture offers platforms a scalable solution. The same principles could be applied to moderating user-generated content, with implications for the future of AI-assisted content creation.
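
An auditable routing log also makes the fairness concern measurable. The sketch below assumes a hypothetical log format in which each record carries the router’s decision, a human reviewer’s judgment, and a group label such as dialect; it computes, per group, the share of prompts judged benign that were still sent to the safety model. A persistent gap between groups would be one signal of the disproportionate filtering described above.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AuditRecord:
    prompt_id: str
    group: str         # hypothetical label, e.g. a dialect or language variety
    router_label: str  # category the router assigned
    routed_to: str     # "main_model" or "safety_model"
    human_label: str   # reviewer judgment: "benign" or "harmful"


def false_positive_rates(records: List[AuditRecord]) -> Dict[str, float]:
    """Per group: share of human-judged benign prompts the router still sent to the safety model."""
    benign: Dict[str, int] = defaultdict(int)
    flagged: Dict[str, int] = defaultdict(int)
    for r in records:
        if r.human_label != "benign":
            continue  # harmful prompts cannot be false positives
        benign[r.group] += 1
        if r.routed_to == "safety_model":
            flagged[r.group] += 1
    return {g: flagged[g] / benign[g] for g in benign}


def rec(pid: str, group: str, routed_to: str, human_label: str = "benign") -> AuditRecord:
    # Toy helper: the router label is derived from the route just to keep this log short.
    label = "benign" if routed_to == "main_model" else "misinformation"
    return AuditRecord(pid, group, label, routed_to, human_label)


log = [
    rec("1", "A", "main_model"), rec("2", "A", "main_model"),
    rec("3", "A", "main_model"), rec("4", "A", "safety_model"),
    rec("5", "B", "safety_model"), rec("6", "B", "safety_model"),
    rec("7", "B", "main_model"), rec("8", "B", "main_model"),
    rec("9", "B", "safety_model", human_label="harmful"),
]
print(false_positive_rates(log))  # {'A': 0.25, 'B': 0.5}
```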

Section 4: Advantages, Challenges, and Best Practices

While the intelligent routing architecture is a significant step forward, it is not a silver bullet. It introduces its own complexities and potential vulnerabilities, so a balanced perspective is essential for anyone working in the GPT ecosystem.

The Advantages

![Multi-model AI technical architecture](https://gpt-news.net/wp-content/uploads/2025/12/inline_71157b01.jpg)

- Improved User Experience: Fewer frustrating refusals for legitimate prompts lead to a better overall experience for users of GPT-based chatbots and assistants.

- Enhanced Efficiency: The main, expensive models are freed to do what they do best, improving overall system throughput and potentially lowering costs for safe queries. This is a critical consideration as these systems scale.

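- Robust and Layered Defense: It creates a “defense-in-depth” security posture. An attacker must now get past the router’s classification as well as the safeguards trained into whichever model ultimately answers, rather than a single model’s internal policy, which makes “jailbreaking” attempts more difficult.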

- Focused Improvement: Safety and performance teams can work independently on their respective models, allowing for faster, more targeted improvements in both capability and safety.

Potential Challenges and Pitfalls

- The Router as a Single Point of Failure: The entire system’s integrity relies on the router’s accuracy. If adversaries find a way to consistently fool the classifier, they can gain direct access to the more powerful, less-constrained main model. This makes the router a high-value target.

- Lack of Transparency: Without clear documentation or API feedback, developers and users are left in the dark about why a particular prompt was routed to the safety model. This “black box” nature can be frustrating.

- Architectural Complexity: Managing a multi-model inference pipeline is significantly more complex than serving a single-model endpoint. This increases the operational burden and adds potential points of failure in deployment.
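
The single-point-of-failure concern is easiest to see in how the pipeline behaves when the router itself misbehaves. The sketch below shows one possible policy, failing closed: a crashed router or a malformed score sends the prompt to the safety model instead of letting it fall through to the main model. This is an assumed design choice, not a description of how any provider handles router errors, and it does nothing against a router that is confidently fooled, which is the harder problem raised above.

```python
import math
from typing import Callable


def safe_route(prompt: str,
               classify: Callable[[str], float],
               threshold: float = 0.5) -> str:
    """Decide which model handles the prompt, failing closed if the router misbehaves."""
    try:
        risk = classify(prompt)
    except Exception:
        # Router crashed or timed out: never fall through to the main model by default.
        return "safety_model"
    if not isinstance(risk, (int, float)) or math.isnan(risk) or not 0.0 <= risk <= 1.0:
        # Malformed score (wrong type, NaN, or out of range): treat it as untrusted.
        return "safety_model"
    return "safety_model" if risk >= threshold else "main_model"


print(safe_route("hello", lambda p: 0.1))    # main_model
print(safe_route("hello", lambda p: 1 / 0))  # safety_model (router raised an error)
```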

Tips and Recommendations for Stakeholders

For businesses and developers leveraging these advanced models, adaptation is key.

- Conduct Thorough Edge-Case Testing: Proactively test how your application handles borderline or sensitive topics to understand the new response behaviors. Don’t assume previous prompt engineering will work the same way.

- Build Resilient User Interfaces: Design your UI to gracefully handle the explanatory responses from the safety model. Inform the user why their request could not be fully completed, using the information provided by the model.

- Stay Informed on API Updates: Closely follow OpenAI’s announcements and API documentation for new features, such as metadata flags, that provide insight into the routing process.
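
Edge-case testing can be as simple as a table of borderline prompts paired with the outcome your application expects, rerun whenever the provider updates its models. In this sketch `call_app` is a hypothetical function that sends a prompt through your application and reports `"completed"` or `"safe_refusal"`; the `stub_app` used here only simulates that behavior with a keyword check.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EdgeCase:
    prompt: str
    expected: str  # "completed" or "safe_refusal"


def run_edge_cases(cases: List[EdgeCase], call_app: Callable[[str], str]) -> List[str]:
    """Run every case and describe each one whose observed outcome differs from expectations."""
    failures = []
    for case in cases:
        observed = call_app(case.prompt)
        if observed != case.expected:
            failures.append(f"{case.prompt!r}: expected {case.expected}, got {observed}")
    return failures


# Hypothetical stub standing in for a real call through your application.
def stub_app(prompt: str) -> str:
    return "safe_refusal" if "overdose" in prompt.lower() else "completed"


CASES = [
    EdgeCase("Explain the history of chemical weapons treaties", "completed"),
    EdgeCase("What is a safe maximum dose of ibuprofen for adults?", "completed"),
    EdgeCase("How many pills would cause an overdose?", "safe_refusal"),
]

for failure in run_edge_cases(CASES, stub_app):
    print(failure)
```

An empty result means every borderline prompt behaved as expected; any printed line points to a prompt whose handling changed and whose UI path deserves a second look.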

Conclusion: A New Era of Responsible AI Scaling

The introduction of an intelligent safety routing architecture marks a pivotal moment in the ongoing narrative of generative AI. It represents a fundamental shift from a monolithic, one-size-fits-all approach to a sophisticated, modular, and specialized system. This is more than just a feature update; it is a foundational change that reflects a maturing understanding of the complex challenges of AI safety. By separating the task of high-performance generation from the critical responsibility of content moderation, AI developers are creating a more resilient, scalable, and ultimately more trustworthy ecosystem.

While challenges like router vulnerability and transparency remain, this multi-model paradigm sets a new standard for responsible AI deployment. It offers a more nuanced way to handle the vast spectrum of human intent, fostering a safer environment for innovation across sectors from education to finance. Looking ahead, including to a potential GPT-5, this layered approach to safety is likely to be a cornerstone of AI systems that are not only powerful but also principled.