The Next Frontier: How GPT Multimodal Vision is Revolutionizing Industries Like Healthcare

The Dawn of a New AI Paradigm: Beyond Text to True Understanding

For years, the narrative surrounding artificial intelligence has been dominated by the remarkable linguistic prowess of Large Language Models (LLMs). From GPT-3.5 to the more advanced GPT-4, these models have redefined content creation, customer service, and code generation. However, the latest developments in GPT Multimodal News signal a monumental shift. We are moving beyond models that merely process text to systems that can see, interpret, and reason about the world through multiple data types simultaneously. This evolution from a text-centric to a context-centric AI is not just an incremental update; it’s a paradigm shift unlocking applications previously confined to the realm of science fiction. The integration of vision capabilities, a key topic in recent GPT Vision News, allows these models to analyze images, charts, and real-world scenes with a nuanced understanding that bridges the gap between pixels and purpose. This article explores this transformative technology, using a deep dive into its groundbreaking application in medical imaging to illustrate its profound potential and the technical considerations for its deployment across all sectors.

Section 1: Understanding the Multimodal Leap Forward

The transition to multimodal capabilities represents a fundamental change in AI architecture and functionality. It’s the difference between an AI that can read a book and one that can watch the movie adaptation, read the screenplay, and understand the director’s artistic choices. This leap is creating a wave of innovation covered extensively in GPT Applications News and GPT Trends News.

What is Multimodal AI?

At its core, multimodal AI refers to a system’s ability to process and understand information from multiple modalities—such as text, images, audio, and video—concurrently. Instead of analyzing each data type in isolation, a multimodal GPT model can find relationships, patterns, and context across them. For example, it can look at a photograph of a hospital room (vision), read the accompanying doctor’s notes (text), and correlate specific visual cues with diagnostic terminology. This holistic understanding is what makes the technology so powerful. This area of GPT Research News is one of the most active, focusing on creating unified representations of diverse data types within a single model.

The Architectural Shift: From Text Transformers to Vision-Language Models

The underlying technology enabling this is a significant evolution of the original Transformer architecture. While early GPT models were trained exclusively on vast text corpora, models like GPT-4 incorporate a vision encoder. This component processes an image, breaking it down into a sequence of patches that are then converted into numerical representations (embeddings), similar to how words are tokenized. These image embeddings are then fed into the language model alongside text embeddings. The model learns to map concepts between the visual and textual domains during its extensive training phase. This fusion is a hot topic in GPT Architecture News, with ongoing research into more efficient and powerful ways to combine modalities, a key aspect of GPT Training Techniques News.

Unlocking a New Class of Applications

This architectural innovation unlocks a vast array of new possibilities. In finance, an AI can analyze a stock chart (image) alongside a company’s quarterly earnings report (text) to provide a comprehensive market analysis, a development tracked in GPT in Finance News. In legal tech, it can review photographic evidence in conjunction with case files. For creative professionals, it can generate descriptive text for a complex scene in a photograph, revolutionizing areas covered by GPT in Content Creation News. However, nowhere is the impact more immediate and potentially life-altering than in specialized fields like healthcare, where precision and contextual understanding are paramount.

Section 2: A Case Study in Precision: Multimodal GPTs in Medical Imaging

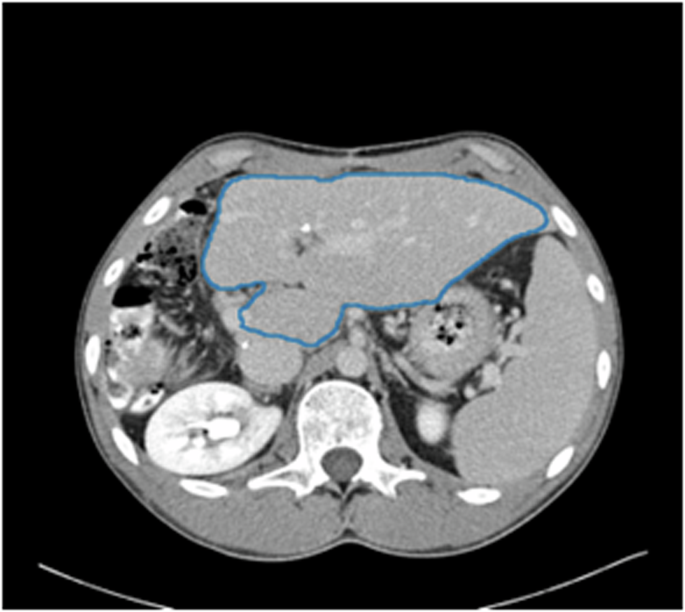

The field of radiology, which relies on the expert interpretation of medical images like CT scans and MRIs, provides a perfect case study for the transformative power of multimodal AI. Recent breakthroughs in this area are a major focus of GPT in Healthcare News, demonstrating a tangible leap beyond traditional computer vision metrics.

The Challenge with Traditional AI in Radiology

For years, AI in radiology has focused on narrow tasks using standard computer vision models. These models might be excellent at one specific thing, like detecting a nodule in a lung CT scan (segmentation) or classifying an image as “abnormal” (classification). However, they lack clinical context. They cannot read the patient’s history, understand the radiologist’s preliminary notes, or generate a nuanced, human-readable report that synthesizes all available information. Furthermore, evaluating these AI-generated findings has relied on pixel-based metrics (like Intersection over Union) or text-based metrics (like BLEU score for report generation), which often fail to capture clinical significance. A model could be 99% accurate at the pixel level but miss the one tiny, clinically crucial detail a human expert would spot.

How GPT-4 Vision Revolutionizes Radiological Evaluation

A multimodal approach, powered by models like GPT-4, changes the game entirely. Imagine a system that is given a series of CT scan images along with the patient’s electronic health record, which includes symptoms, lab results, and previous diagnoses. The model can:

- Visually Identify Anomalies: It analyzes the CT images to identify potential findings, such as lesions, fractures, or inflammation.

- Correlate with Clinical Text: It cross-references these visual findings with the patient’s history. For example, it might note that a newly identified lung nodule is particularly concerning given the patient’s history as a long-term smoker.

- Generate Clinically Aligned Reports: Instead of just outputting coordinates of a finding, it can generate a comprehensive, coherent paragraph for a radiology report, using standard medical terminology and highlighting the most critical findings in order of clinical importance.

This process has led to the development of new evaluation standards. Rather than relying on old metrics, researchers are now using GPT-4 itself to create a new gold standard for evaluation. A new AI-generated finding can be evaluated by a specialized GPT-4 system that has been fine-tuned by expert radiologists to score the finding based on its clinical relevance, accuracy, and clarity of description. This creates a feedback loop and a new type of GPT Benchmark News, where advanced AI is used to validate other AI systems, ensuring they align with expert human judgment.

Data, Performance, and the Future of Diagnostics

Early results from such systems are incredibly promising. In controlled studies, these multimodal evaluation frameworks have shown a much higher correlation with the consensus of human radiologists than traditional automated metrics. This is because the model understands *why* a finding is important, not just *where* it is. This represents a significant development in GPT Fine-Tuning News, as these models are not just pre-trained but are custom-tailored for highly specific, high-stakes domains. The datasets required for this are complex, pairing high-resolution medical images with detailed, anonymized clinical data, a key topic in GPT Datasets News.

Section 3: Broader Implications and Technical Hurdles

While the application in medical imaging is a powerful example, the implications of multimodal GPTs extend across nearly every industry. However, deploying these sophisticated models comes with a unique set of challenges that organizations must navigate carefully, touching on everything from GPT Ethics News to GPT Deployment News.

The Ripple Effect Across Industries

The ability to reason across data types is a universal catalyst for innovation.

- Legal Tech: Multimodal AI can analyze contracts, cross-referencing clauses with visual exhibits or diagrams embedded within the documents, a trend noted in GPT in Legal Tech News.

- Marketing: A model can analyze the visual elements of an ad campaign alongside its text copy and performance metrics (e.g., charts of click-through rates) to provide actionable insights for optimization, a key area of GPT in Marketing News.

- Education: It can create accessible learning materials by generating textual descriptions of complex diagrams or creating interactive tutorials that respond to both visual and text-based inputs from students, a development in GPT in Education News.

This expansion is driving the growth of the entire GPT Ecosystem News, with new GPT Platforms News and GPT Tools News emerging to support multimodal development.

The Technical Frontier: Common Pitfalls and Challenges

Deploying these models is not a simple plug-and-play operation. Key challenges include:

- Data Privacy and Security: In fields like healthcare and finance, the data is extremely sensitive. Ensuring compliance with regulations like HIPAA and GDPR is paramount. This is a critical aspect of GPT Privacy News and GPT Regulation News.

- Computational Cost: Processing images alongside text is significantly more resource-intensive than text alone. This impacts both training and inference costs. Discussions around GPT Hardware News, GPT Inference Engines News, and techniques like GPT Quantization News and GPT Distillation News are crucial for making these models economically viable.

- Bias and Hallucinations: Multimodal models can inherit biases from their training data, potentially misinterpreting images based on demographic or other factors. They can also “hallucinate” or misidentify objects in an image, a critical safety concern in high-stakes applications. Addressing this is a central theme in GPT Bias & Fairness News and GPT Safety News.

- Domain-Specific Validation: A model that works well for general image description may fail spectacularly in a specialized domain like medical imaging. Rigorous validation by domain experts is non-negotiable.

Section 4: The Road Ahead: Best Practices and Future Trends

As we look toward the future, the capabilities of multimodal AI are set to expand even further. The much-anticipated GPT-5 News is filled with speculation about even deeper integration of modalities, potentially including real-time audio and video processing. For organizations looking to harness this power, a strategic approach is essential.

Best Practices for Multimodal AI Integration

To succeed with multimodal GPTs, developers and businesses should adhere to several best practices:

- Curate High-Quality, Paired Datasets: The model’s performance is directly tied to the quality of its training data. Invest in creating clean, well-labeled datasets that accurately pair visual and textual information.

- Implement Human-in-the-Loop (HITL) Systems: Especially in critical applications, AI should augment, not replace, human experts. Design workflows where the AI provides a first-pass analysis or a second opinion, with a human expert making the final decision. This is a core principle for responsible GPT Deployment News.

- Prioritize Explainability and Interpretability: Strive to understand why the model makes a particular decision. Techniques that highlight which part of an image led to a specific textual conclusion are vital for building trust and for debugging.

- Start with Focused Pilot Projects: Begin with a well-defined, high-impact use case rather than attempting a broad, enterprise-wide implementation. This allows for iterative learning and demonstrates value quickly.

The Future is Integrated: GPT-5 and Beyond

The trajectory is clear. Future models will likely handle even more modalities seamlessly. We can anticipate AI assistants that can participate in a video conference, understanding spoken words, on-screen presentations, and the non-verbal cues of participants. In the world of IoT, this means AI could process data from a network of sensors—temperature, motion, and video feeds—to provide a holistic understanding of a physical environment, a key topic for GPT Applications in IoT News. This ongoing research, covered in GPT Future News, points to a future of truly ambient, context-aware computing powered by ever-more-capable GPT Agents News.

Conclusion: A New Era of Contextual Intelligence

The emergence of multimodal GPTs marks a pivotal moment in the evolution of artificial intelligence. By breaking free from the confines of text, these models are developing a more holistic and contextually aware understanding of the world. The application in medical imaging is a powerful harbinger of what’s to come, showcasing the ability to enhance human expertise, improve accuracy, and create new standards for quality and evaluation in a high-stakes field. While significant technical, ethical, and financial challenges remain, the path forward is illuminated by immense potential. For developers, businesses, and researchers, the key takeaway is clear: the future of AI is not just about processing information; it’s about understanding context. The organizations that embrace this multimodal paradigm today will be the ones that lead the innovations of tomorrow.